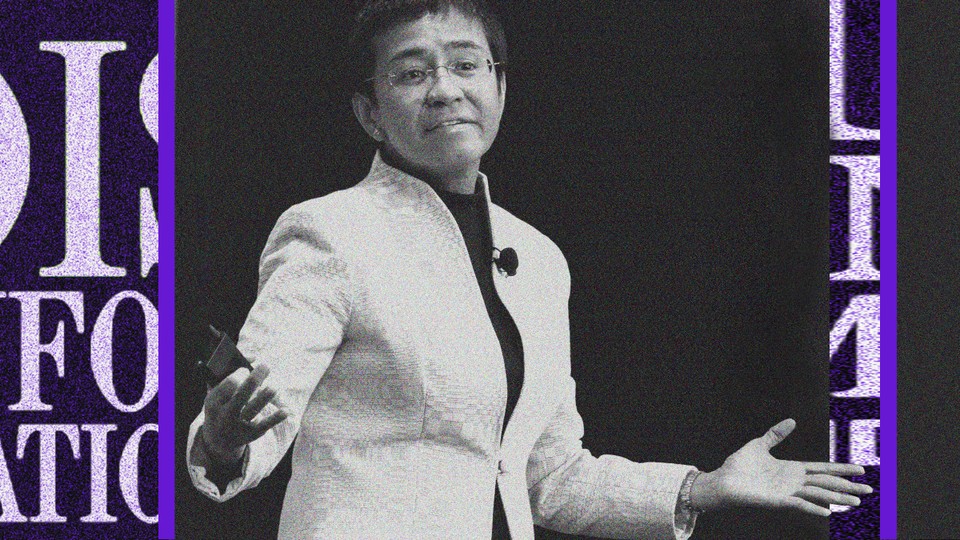

Editor’s Note: This piece was adapted from Ressa’s remarks at Disinformation and the Erosion of Democracy, a conference hosted by The Atlantic and the University of Chicago, on April 6, 2022.

In the Philippines, we’re 33 days away from our presidential elections. Filipinos are going to the polls, and we are voting to fill 18,000 posts, including president and vice president. And how do you have integrity of elections if you don’t have integrity of facts? That’s the reality we’re living with.

I put all of this together in a book, which is part of the reason you’ll hear these ideas from me over and over. And the question I really want to ask you is the one we had to confront: What are you willing to sacrifice for the truth? Because these are the times we live in. The prologue for the book, which I turned in at the end of last year, started with Crimea and its annexation in 2014, because that’s when you began to see the splintering of reality have geopolitical impact. And of course, I had to revise it once Russia invaded Ukraine. The question that goes through my head is this: If we had done something about it in 2014, eight years ago, would we be where we are today? No, we wouldn’t be.

So here’s what I’m going to talk about today, really just three things: What happened? How did it change us? And what can we do about it? These are critical for me. It’s existential. I call it an “Avengers assemble” moment in the Philippines. And guess what? Your elections are coming up too. Ain’t so far away for you here.

I’ve said this over and over: This is like when 140,000 people died instantly in Hiroshima and Nagasaki. The same thing has happened in our information ecosystem, but silently and insidiously. This is what I said in my Nobel lecture: An atom bomb has exploded in our information ecosystem. And here’s the reason why. I peg it to when journalists lost our gatekeeping powers. I wish we still had them, but we don’t.

So what happened? Content creation was separated from distribution, and distribution came with completely new rules that no one knew about. We experienced it in motion. By 2018, MIT researchers had published a paper showing that lies laced with anger and hate spread faster and further than facts. This is my 36th year as a journalist. I’ve spent that entire time learning how to tell stories that will make you care. But when we’re up against lies, we just can’t win, because facts are really boring. They can’t capture your amygdala the way lies do.

Think about it like this. All of our debate starts with content moderation. That’s downstream. Move further upstream to algorithmic amplification. That’s the operating system; that’s where the micro-targeting is. What is an algorithm? Opinion in code. That’s where one editor’s decision is multiplied millions and millions of times. And that’s not even where the problem is. Go further upstream to where our personal data has been pulled together by machine learning to make a model of you that knows you better than you know yourself, and then all of that is pulled together by artificial intelligence.

And that’s the mother lode. Surveillance capitalism, which Shoshana Zuboff has described in great detail, is what powers this entire thing. Yet we debate only content moderation. That’s like having a polluted river and examining a single test tube of water instead of looking for where the pollutant is coming from. I call it a virus of lies.

Today we live in a behavior-modification system. The tech platforms that now distribute the news are actually biased against facts, and they’re biased against journalists. E. O. Wilson, who passed away in December, studied emergent behavior in ants. So think about emergent behavior in humans. He said the greatest crisis we face is that we have Paleolithic emotions, medieval institutions, and godlike technology. What travels faster and further? Hate. Anger. Conspiracy theories. Do you wonder why we have no shared space? I say this over and over. Without facts, you can’t have truth. Without truth, you can’t have trust. Without all three, we have no shared space, and democracy is a dream.

Surveillance capitalism pulls together four things. We look at them as separate problems, but they’re really one. The first is antitrust: There are three antitrust bills pending in the U.S. Senate. Second is data privacy: Who owns your data? Do you? Or does the company that collates it and creates a model of you? Third is user safety: Do we have a Better Business Bureau for our brains? And the fourth is content moderation. As long as you’re thinking about content, you’re not looking at everything else.

This is how we discovered what social media was doing to democracy in the Philippines. In September 2016, a bomb exploded in Davao City, the home city of President [Rodrigo] Duterte. A six-month-old article, “Man with high quality of bomb nabbed at Davao checkpoint,” went viral. This is disinformation: You take a truth and twist it. The story was seeded on fake websites (one of them is a Chinese website today) and on Duterte and [Bongbong] Marcos Facebook pages.

Rappler ran a story on the disinformation called “Propaganda War: Weaponizing the Internet.” It was part of a three-part series. The second part was “Fake Accounts, Manufactured Reality on Social Media.” One account we investigated was called Luvimin Cancio. We found it strange that she didn’t have many friends but was a member of many, many, many groups. Then we took her profile photo and ran a reverse image search. The photo was of someone else.

Then we looked at whom she was following and who her friends were. This was 2016, so you could still get that data. We checked everything about them and tried to verify it: where they went to school, where they lived. Every single thing was a lie. This was a sock-puppet network.

I wrote the last part of the trilogy, “How Facebook Algorithms Impact Democracy,” in 2016. It didn’t get better. It got worse.

So what did we do? We realized it was systematic: taking a lie and pounding it over and over, using free speech to stifle free speech, because when you are pounded into silence, you kind of shut up, unless you are foolish like me. We took all the data and gave it to our social-media team. Rappler is one of two Filipino fact-checking partners of Facebook. I’d say we’re frenemies. But I think tech is part of the solution.

After we published that “weaponization of the internet” series in 2016, we watched social media to see what would happen. Take the Facebook page of Sally Matay as an example. It has since been taken down, but it was a cut-and-paste account, and we were still able to track its growth. The data is pretty incredible. Everything I tell you is data-driven. We kept track of how many times these accounts posted on which Facebook pages. This was the first time we saw the Marcos and Duterte disinformation networks working together.

I’m going to bring it back to you, because the methodology is the same. The Election Integrity Partnership mapped the spread of “Stop the Steal.” This is the same methodology that was used to attack me. In “Stop the Steal,” you can see that the narrative of election fraud was seeded in August 2019 on RT, then picked up by Steve Bannon on YouTube. Then Tucker Carlson picked it up, QAnon dropped it on October 7, and then President Trump came in top-down.

What happened to me in 2016? A lie told a million times became a fact. My meta-narrative? Journalist equals criminal. A year later, President Duterte came top-down with the same meta-narrative, except he did it in his State of the Nation Address. A week later, I got my first subpoena. That year, we faced 14 investigations. In less than two years, by 2019, I had 10 arrest warrants issued against me and had posted bail 10 times. It’s the same methodology. Watch how it is happening to you.

In 2020, my former colleague and I were convicted in the first cyber-libel case, over a story that was published eight years earlier. The prescription period for libel is only one year; lo and behold, it became 12 years. I’m appealing the conviction at the Court of Appeals. The New York Times ran a story called “Conviction in the Philippines Reveals Facebook’s Harms.” These are real-world harms.

This is happening to women. Women journalists. Mark Zuckerberg quotes Louis D. Brandeis all the time, saying that the remedy for bad speech is more speech. Brandeis wrote that in 1927, in the old days. In the age of social media, the age of abundance, we have to look back at Brandeis’s words. In that same opinion, he also wrote, “Men feared witches and burnt women.” Those words reminded me how gendered disinformation hits women journalists and politicians.

Social media brings out the worst in us. If you’re my age, you remember the old cartoons where your conscience is a devil on one shoulder and an angel on the other, each trying to tell you what to do. What social media has done, what American tech has done (well, you’ve got to include TikTok now), is kick off the angel, give the devil a megaphone, and inject it directly into your brain.

In a UNESCO report called “The Chilling: Global Trends in Online Violence Against Women Journalists,” I was the example for the global South. Carole Cadwalladr, the British journalist who broke the Cambridge Analytica story, was the example for the global North. ICFJ, the International Center for Journalists, found that 73 percent of the women journalists it surveyed had experienced online abuse. Twenty-five percent had received threats of physical violence, including death threats. I get a lot of those. Twenty percent had been attacked or abused offline in connection with the online abuse.

What happens in the virtual world happens in the real world. If you think the two are different, disabuse yourself of that. There is only one world, because we live in both.

The UNESCO report looked at almost half a million social-media attacks against me. There was a point in time when I was getting 90 hate messages per hour. Sixty percent were meant to tear down my credibility. There’s a reason why you don’t believe news organizations anymore; that is an information operation. And then the other 40 percent were meant to tear down my spirit. It didn’t work, but it’s really painful.

After the Nobel, I came back home and realized, Oh my gosh, I’m getting new attacks again. So I went through them to find out where they were coming from. We looked at the creation dates of the Twitter accounts, and they had all been created around the time of the elections. Did I mention it’s 33 days before elections in the Philippines?

It’s like 1986 all over again: Ferdinand Marcos Jr. is running against another widow, Leni Robredo, who is our vice president. Account creation spiked among the pro-Marcos accounts, but not among the pro-Leni accounts. So you can tell what looks organic from what looks artificial. We did a story and gave the information to Twitter, and Twitter suspended more than 300 accounts in the Marcos network. There will be more, hopefully. If you are the gatekeeper, you cannot abdicate responsibility, because you subject all of us to the harms.

Here’s the solution: tech, journalism, community. Those have been the three pillars of Rappler from the beginning. Part of the reason I’m here is that I testified before the Senate subcommittee on East Asian affairs and asked for legislation. That’s not where I started in 2016, because back then I thought tech platforms were like journalists and could regulate themselves. They’re not. Technology needs guardrails.

Journalism needs to survive. We built Rappler’s platform. I spent a lot of money on it, and it took a long time because so much of our money was going to legal fees. But it’s rolling out in time for our elections. We’ve got to help independent media survive. That’s part of the reason that, before the Nobel, I agreed to co-chair the International Fund for Public Interest Media, which is asking democratic governments to put up money to help journalists survive.

Now I want to show you how these all come together, because we’re in the last 33 days before elections. I call it the #FactsFirst page. It’s a tech platform that runs through the four layers of this pyramid. We started building this community in January; this is kind of what I used the Nobel for. News organizations rarely share with each other, and in the Philippines they never really have. So what we did is this: 16 news organizations committed to fact-check the lies. We asked our communities, when you see a lie, send it to us on a tip line. And then we said, “All right, we’re going to meta-tag everything. It’s going to have the data, and then we’re going to be able to course it through the four layers of this pyramid.”

What are the four layers? In the first, the news organizations link to each other, which is good for search, and they also share each other’s work. Use your power. Beyond that, it moves to the second layer. I call it “the mesh,” like in that movie Don’t Look Up, where the Planetary Defense system came together like a mesh. That’s kind of the way we have to live right now on social media, because meaning has been commoditized and each of us has to take our area of influence and clean it up. Then we connect to each other, like a mesh. So all our human-rights organizations, business groups, the church, environmental groups, civil-society groups, and NGOs take what the news organizations are doing and share it with emotion.

After the mesh, you go up to the third layer: research. This was actually inspired by your Election Integrity Partnership in the U.S. Every week, a research group will come out and tell Filipinos how we are being manipulated, who is gaining, and who is behind the networks of disinformation.

The last layer is the most important one: legal groups, which have been quiet for too long. If you don’t have facts, you cannot have rule of law. These legal groups are now working hand in hand with the pyramid. They get the data pipeline, and then they file both strategic and tactical litigation. I’ll tell you how it goes in 33 days, but it’s already working. On April Fools’ Day, and it wasn’t an April Fools’ joke, seven new legal complaints against me were thrown out. That’s good. But there are 60 more new ones, so this isn’t ending. It’s still a whack-a-mole game, but we will win it. The one I think is important is from the solicitor general, who really started the weaponization of the law: He called fact-checking prior restraint. We’re going to win this.

What this world shows us is that we have a lot more in common than we have differences, believe it or not. As a journalist, I grew up looking at each country and every culture differently. But the silver lining is what the tech platforms actually showed us: We’re all being manipulated the same way. We have a lot more in common. Even identity politics works this way: Look at what happened in the U.S. in 2016, when both sides of Black Lives Matter were pounded open. Be aware. Think slow, not fast.