Here’s a timely antidote to the current A.I.-will-kill-us-all hysteria that yesterday got boosted by a bunch of tech titans and other prominent concerned citizens: a standing order issued by one Judge Brantley Starr of the district court for the Northern District of Texas, who doesn’t want unchecked generative-A.I. emissions on his turf.

After all, why worry about Terminator-esque long-term scenarios, when you can instead worry about the immediate risk of the U.S. justice system becoming polluted with A.I.’s biases and “hallucinations”?

Let’s first rewind a few days. At the end of last week, lawyer Steven A. Schwartz had to apologize to a New York district court after submitting bogus case law that was thrown up by ChatGPT; he claimed he didn’t know that such systems deliver complete nonsense at times, hence the six fictitious cases he referenced in his “research.”

“Your affiant greatly regrets having utilized generative artificial intelligence to supplement the legal research performed herein and will never do so in the future without absolute verification of its authenticity,” Schwartz groveled in an affidavit last week.

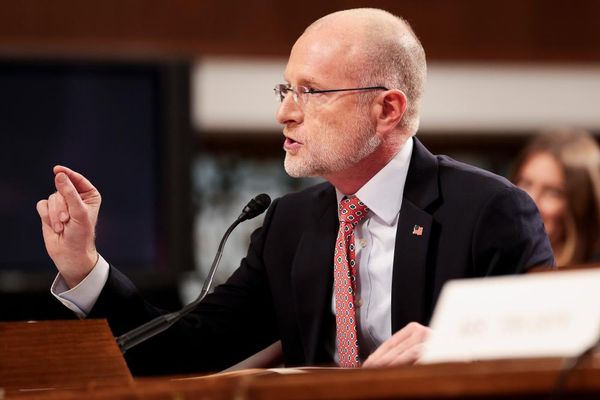

Now over to Dallas, where, per a Eugene Volokh blog post, Judge Starr yesterday barred all attorneys appearing before his court from submitting filings containing anything drafted by generative A.I., unless it has been checked for accuracy “using print reporters or traditional legal databases, by a human being.”

“These platforms are incredibly powerful and have many uses in the law: form divorces, discovery requests, suggested errors in documents, anticipated questions at oral argument. But legal briefing is not one of them,” Starr’s order read. “Here’s why. These platforms in their current states are prone to hallucinations and bias.”

The hallucination part was ably demonstrated by Schwartz this month. As for bias, Starr explained:

“While attorneys swear an oath to set aside their personal prejudices, biases, and beliefs to faithfully uphold the law and represent their clients, generative artificial intelligence is the product of programming devised by humans who did not have to swear such an oath. As such, these systems hold no allegiance to any client, the rule of law, or the laws and Constitution of the United States (or, as addressed above, the truth). Unbound by any sense of duty, honor, or justice, such programs act according to computer code rather than conviction, based on programming rather than principle. Any party believing a platform has the requisite accuracy and reliability for legal briefing may move for leave and explain why.”

Very well said, Judge Starr, and thanks for the segue to a particularly grim pitch that landed in my inbox overnight, bearing the subject line: “If you ever sue the government, their new A.I. lawyer is ready to crush you.”

“You,” it turns out in the ensuing Logikcull press release, refers to people who claim to have been victims of racism-fueled police brutality. These unfortunate souls will apparently find themselves going up against Logikcull’s A.I. legal assistant, which “can go through five years’ worth of body-cam footage, transcribe every word spoken, isolate only the words spoken by that officer, and use GPT-4 technology to do an analysis to determine if this officer was indeed racist—or not—and to find a smoking gun that proves the officer’s innocence.” (Chef’s kiss for that whiplash-inducing choice of metaphor.)

I asked the company if it was really proposing to deploy A.I. to “crush” people when they try to assert their civil rights. “Logikcull is designed to help lawyers perform legal discovery exponentially [and] more efficiently. Seventy percent of their customers are companies, nonprofits, and law firms; 30% are government agencies,” a representative replied. “They’re not designed to ‘crush’ anyone. Just to do efficient and accurate legal discovery.”

The crushing language, he added, was deployed “for the sake of starting a conversation.”

It’s certainly true that the increasingly buzzy A.I.-paralegal scene shows real promise for making the wheels of justice turn more efficiently—and as Starr noted, discovery is one of the better use cases. But let’s not forget that today’s large language models divine statistical probability rather than actual intent, and also that they have a well-documented problem with ingrained racial bias.

While a police department with an institutional racism problem would have to be either brave or stupid to submit years’ worth of body-cam footage to an A.I. for semantic analysis—especially when the data could be requisitioned by the other side—it’s not impossible that we could see a case in the near future featuring a defense along the lines of “GPT-4 says the officer couldn’t have shot that guy with racist intent.”

I only hope that the judge hearing the case is as savvy and skeptical about this technology as Starr appears to be. If not, well, these are the A.I. dangers worth worrying about right now.



David Meyer

Data Sheet’s daily news section was written and curated by Andrea Guzman.