It's always reassuring when your darkest suspicions about tech billionaires are confirmed. A couple of months back, The Atlantic dropped a bombshell that would have been shocking if we weren't all so jaded.

Meta had trained its AI on stolen books. Thousands of them, scanned from LibGen, a massive piracy network of stolen publications. All because Mark Zuckerberg, whose net worth could fund the British Library until the heat death of the universe, had decided that paying authors was a bit steep.

The writing community went ballistic, and rightly so. The Society of Authors released a furious statement. Class action lawsuits sprouted like mushrooms after rain. Authors everywhere took to their keyboards, not to create but to check if they'd been robbed.

Which is exactly what I did.

As I typed my name into The Atlantic's handy search tool, my heart was racing. The result? There it was: my book, Great TED Talks: Creativity: Words of Wisdom from 100 TED Speakers (HarperCollins, 2021), now unceremoniously fed into Meta's digital maw. I should have been livid, incandescent, ready to join the torch-bearing mob outside Zuckerberg's compound.

Instead, I felt...elation. Relief. The joy of validation.

I'll have to admit, this was a bit of a shock to me. Apparently, according to my lizard brain, there's one thing worse than having your book stolen. It's not having your book stolen.

On further reflection, though, I don't think this is all ego. Before you revoke my author card and banish me from literary festivals for life, hear me out.

To put things into context, my books aren't tender coming-of-age novels or lyrical poetry collections. They're reference books: fat, fact-filled tomes designed to inform rather than move the soul.

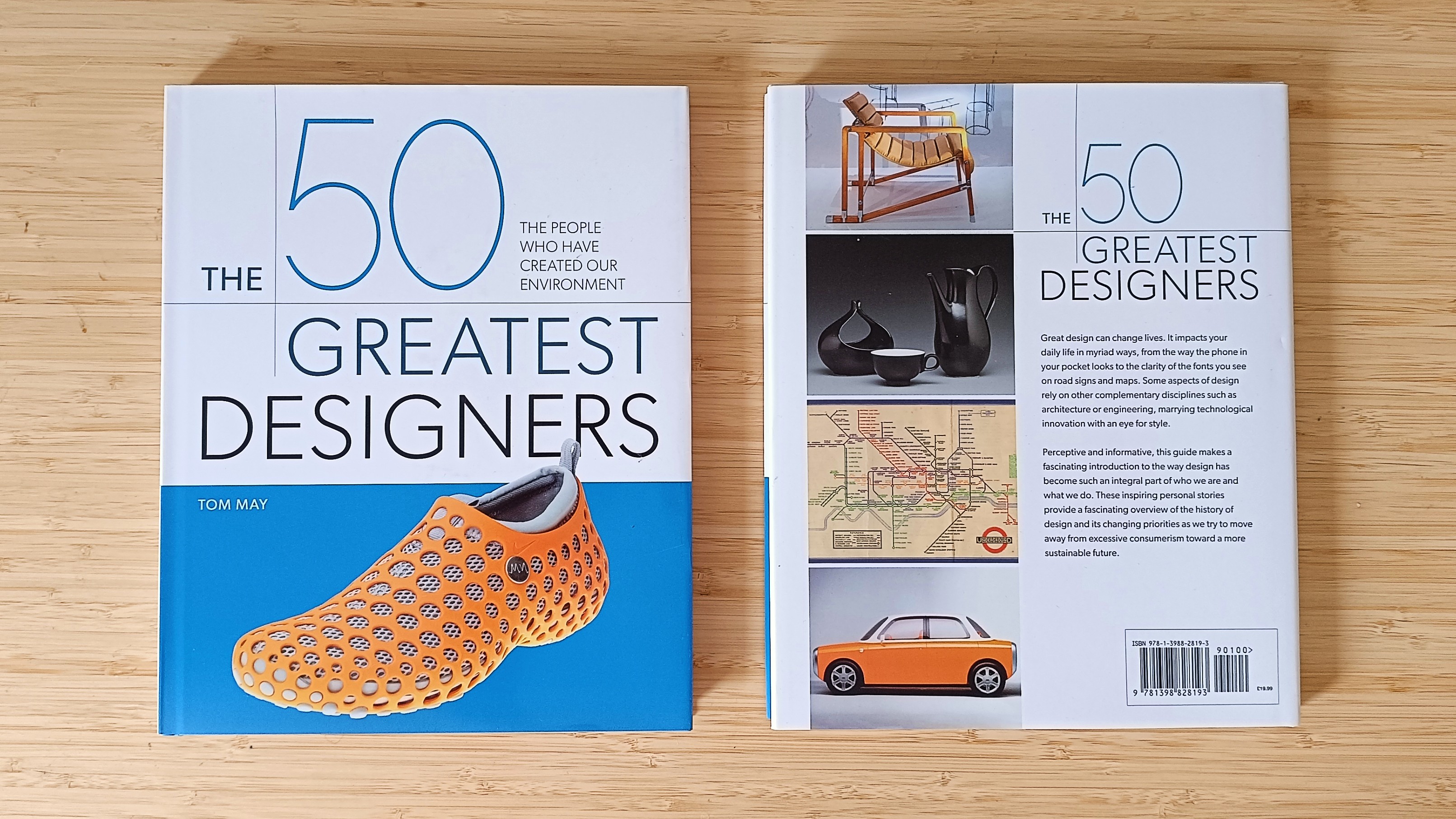

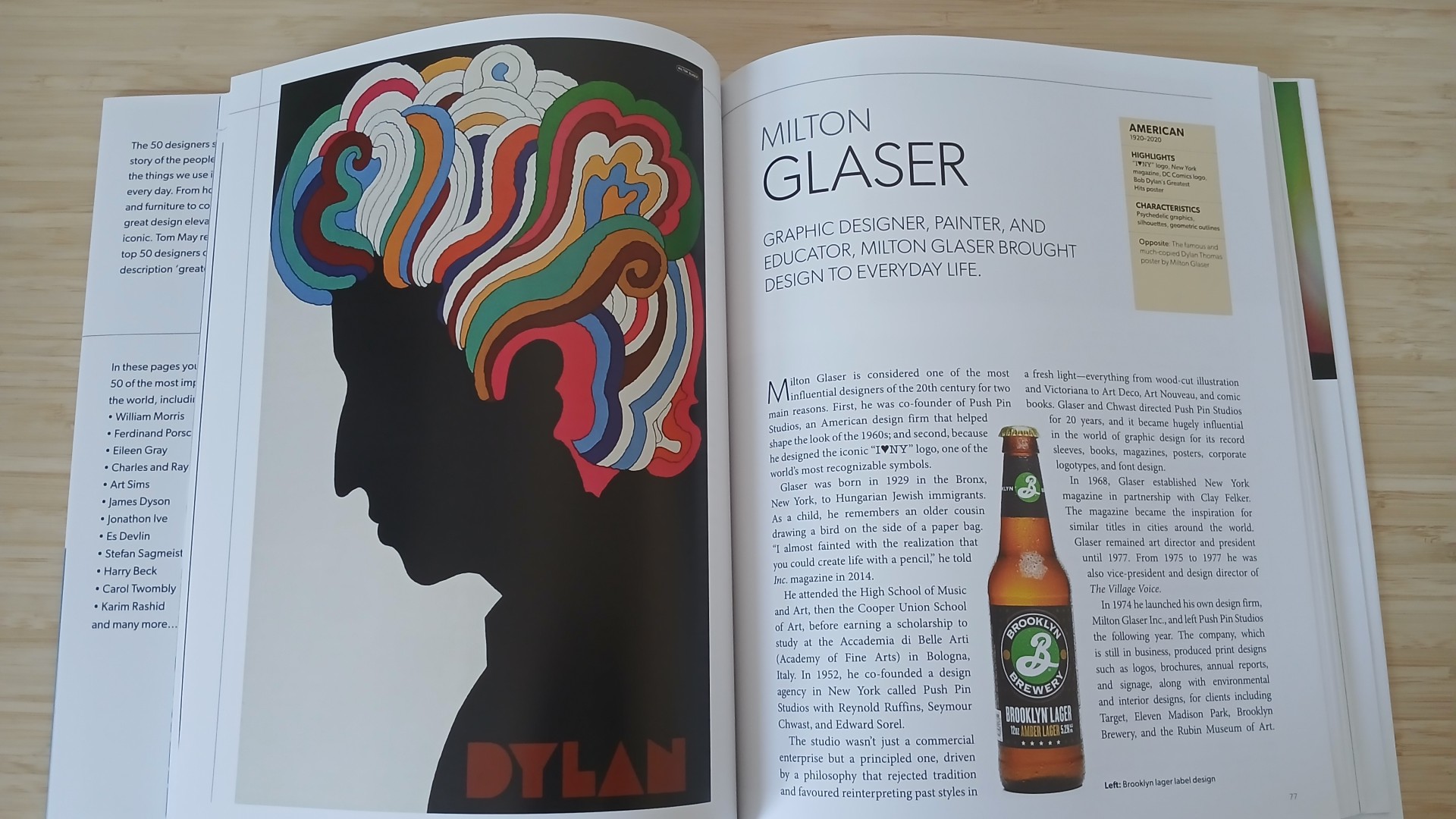

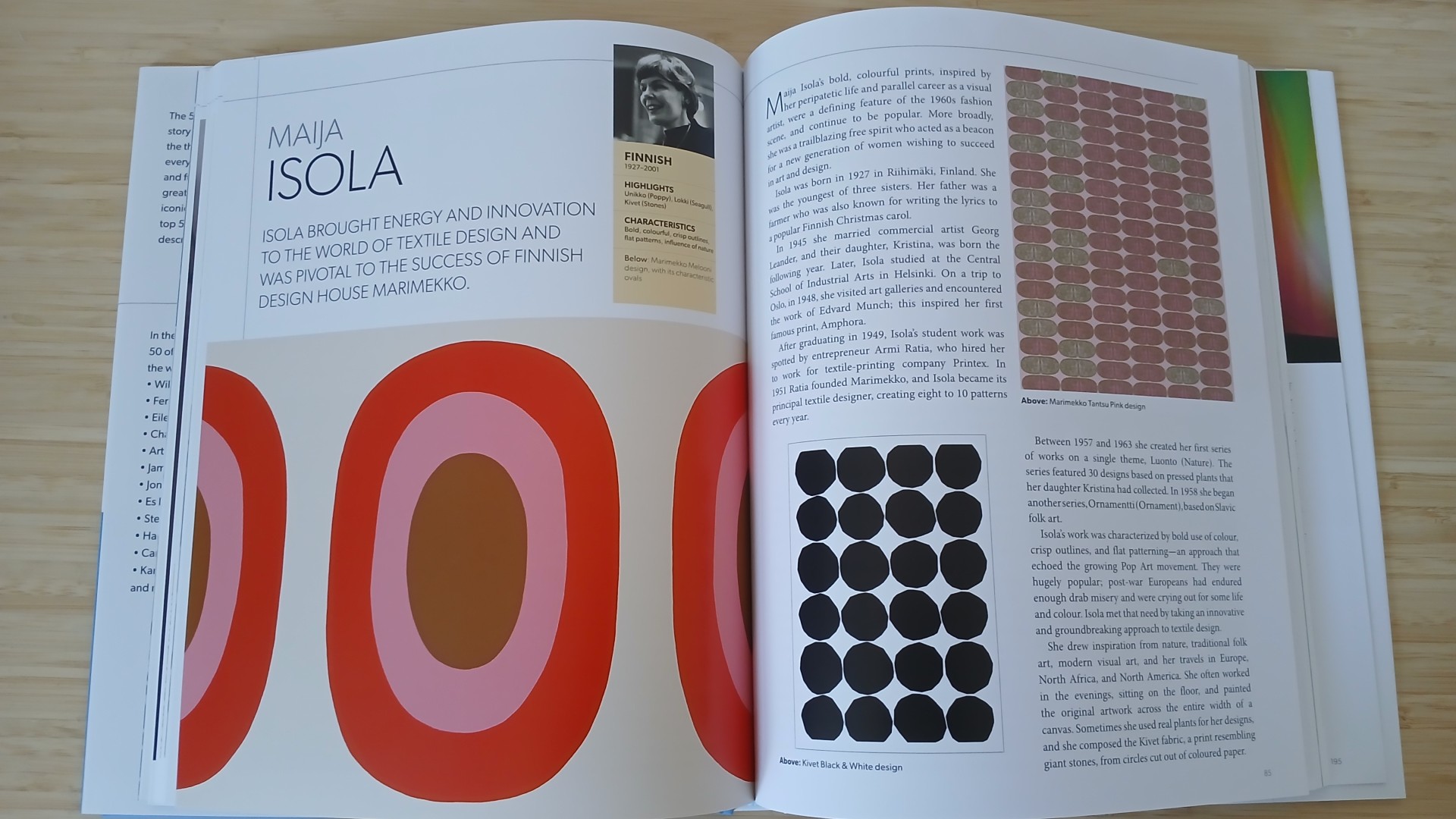

For instance, my forthcoming release, The 50 Greatest Designers (Arcturus, out 30 May), is a coffee table book that introduces you to design history's biggest names, from William Morris and Marcel Breuer through to Es Devlin and Pum Lefebure. I'll be honest: a couple of them even I hadn't heard of when I embarked on my research, despite their having made a huge impact on designs that are all around us today.

Consequently, it's become my passion to share their stories with the world, and although my publishers probably don't want me to say this, I'd rather that happened through AI than not at all.

More broadly, if AI is going to exist (which it clearly is, regardless of my feelings on the matter), I'd rather it regurgitated my carefully researched facts than hallucinate absolute bollocks.

Because that's what these systems do when they don't have proper training data: they make stuff up with the confidence of a bloke down the pub who's read half an article or watched some dodgy clickbait on YouTube.

In practice, that means invented citations, fictional studies and general nonsense, all delivered while sounding eerily authoritative. Which is, I'd argue, exactly how a lot of politicians get elected.

Where I draw the line

So I hope you'll agree there's a twisted logic to my traitorous position. Basically, if someone's going to ask an AI about my subject matter, I'd rather it pull from my work than conjure information from the digital ether.

But here's where I draw a thick, uncrossable line: truly creative works. Literature, paintings, songs, films. That kind of AI "training" feels fundamentally different and far more invasive.

Reference books are, by design, collections of information presented in a digestible format. The value is in the curation and accuracy, not predominantly in the unique expression. But a novel? A painting? A song? The expression is the entire point.

If AI can write a song that sounds like it was penned by Sleater-Kinney, or churn out a passable new Hunger Games novel, that's not "learning", it's stealing. It's copying the essence of an artist's work without the inconvenience of attribution or payment. It's the creative equivalent of identity theft.

But just watch. Over the next few years, well-paid tech bros will bang on about "fair use" and "transformative works" while their algorithmic minions hoover up everything creative minds have spent centuries producing. They'll claim the AI is "inspired by" rather than "copying" artistic styles, which is precisely what art students say when they're caught tracing.

The irony, of course, is that if all content becomes AI-generated based on existing human work, the machine will eventually start eating its own tail. What happens when there's nothing new to train on except other AI-generated content? It's the cultural equivalent of inbreeding, with each generation a bit more warped than the last.

But hey, perhaps that's the future we deserve. An endless stream of content that's not quite right, like a cover version of a cover version played by someone who's forgotten their glasses. As long as the share price stays up, eh?
