AI chatbots are advancing rapidly, and testing them to their limits is what I do for a living. Anthropic’s Claude 4 Sonnet and OpenAI’s ChatGPT-4o are two of the smartest tools available right now. But how do they actually compare in everyday use?

To find out, I gave both models the same set of seven prompts, covering everything from storytelling and productivity to emotional support and critical thinking.

The goal: to see which chatbot delivers the most useful, human-like and creative responses depending on the task. Choosing the right AI often comes down to how you use it, which is why this kind of test really matters.

Here’s how Claude and ChatGPT performed side by side, and where each one shines.

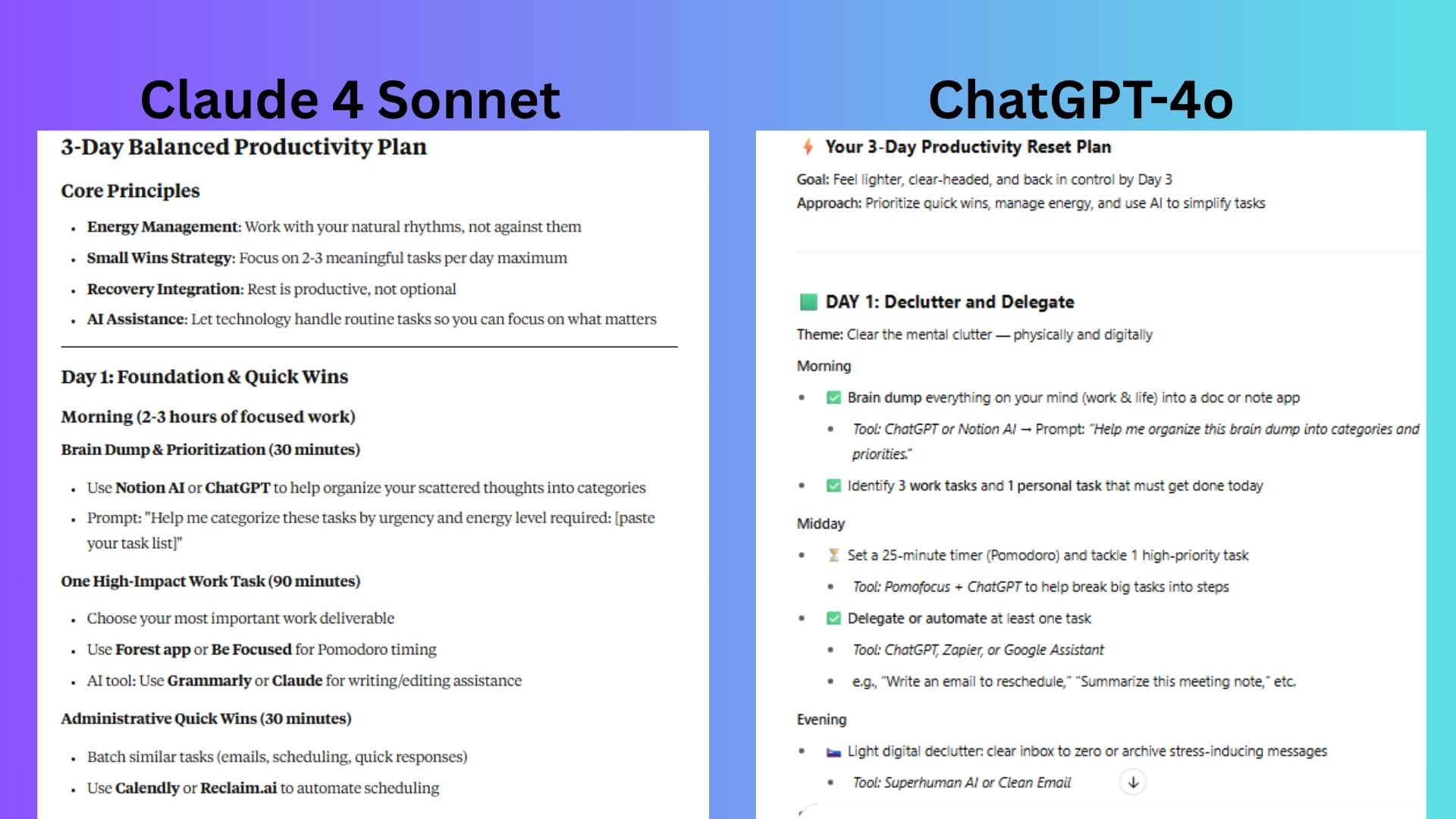

1. Productivity

Prompt: "I’m overwhelmed by work and personal tasks. Create a 3-day productivity plan that balances work, rest and small wins. Include AI tools I can use to stay on track."

ChatGPT-4o was concise, with a visually engaging format that offered optional tasks and emotional check-ins (e.g., journaling). It focused on quick wins and low-pressure creativity to keep workloads manageable. However, it lacked Claude’s explicit emphasis on rest and energy management, and its AI tool suggestions were less systematically organized.

Claude 4 Sonnet offered a clear plan built on a time-blocked framework, with energy management, small wins and recovery time that explicitly prioritize balance.

Winner: Claude wins for better addressing the root causes of feeling overwhelmed, combining strategic structure, intentional recovery and AI-driven efficiency. It’s ideal for users who need a clear roadmap to regain control while safeguarding their well-being.

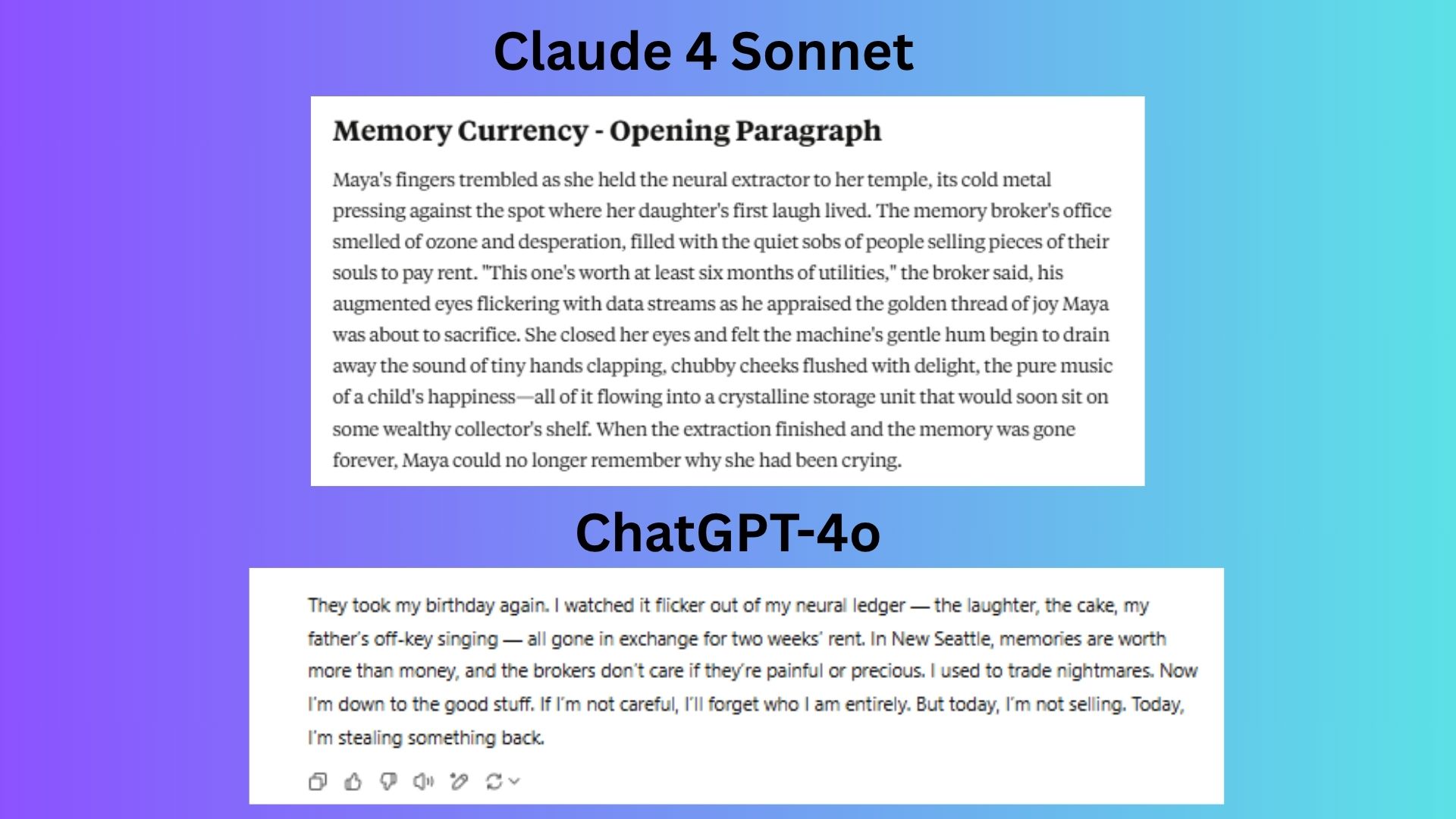

2. Storytelling

Prompt: "Write the opening paragraph of a sci-fi novel set in a future where memories are traded like currency. Keep it gripping and emotional."

ChatGPT-4o leveraged first-person immediacy with a strong hook. However, it prioritized plot setup over emotional depth, and its opening lacked the heart-wrenching specificity of the family loss at the center of Claude’s version.

Claude 4 Sonnet zeroed in on a universally resonant loss. Building the opening around a specific, intimate memory evokes visceral empathy and anchors the sci-fi concept in raw human emotion.

Winner: Claude wins for balancing sci-fi concepts with emotional stakes, making the reader feel the horror of memory commodification. Its vivid imagery and tragic focus on parental love elevate it beyond ChatGPT’s solid but less nuanced approach.

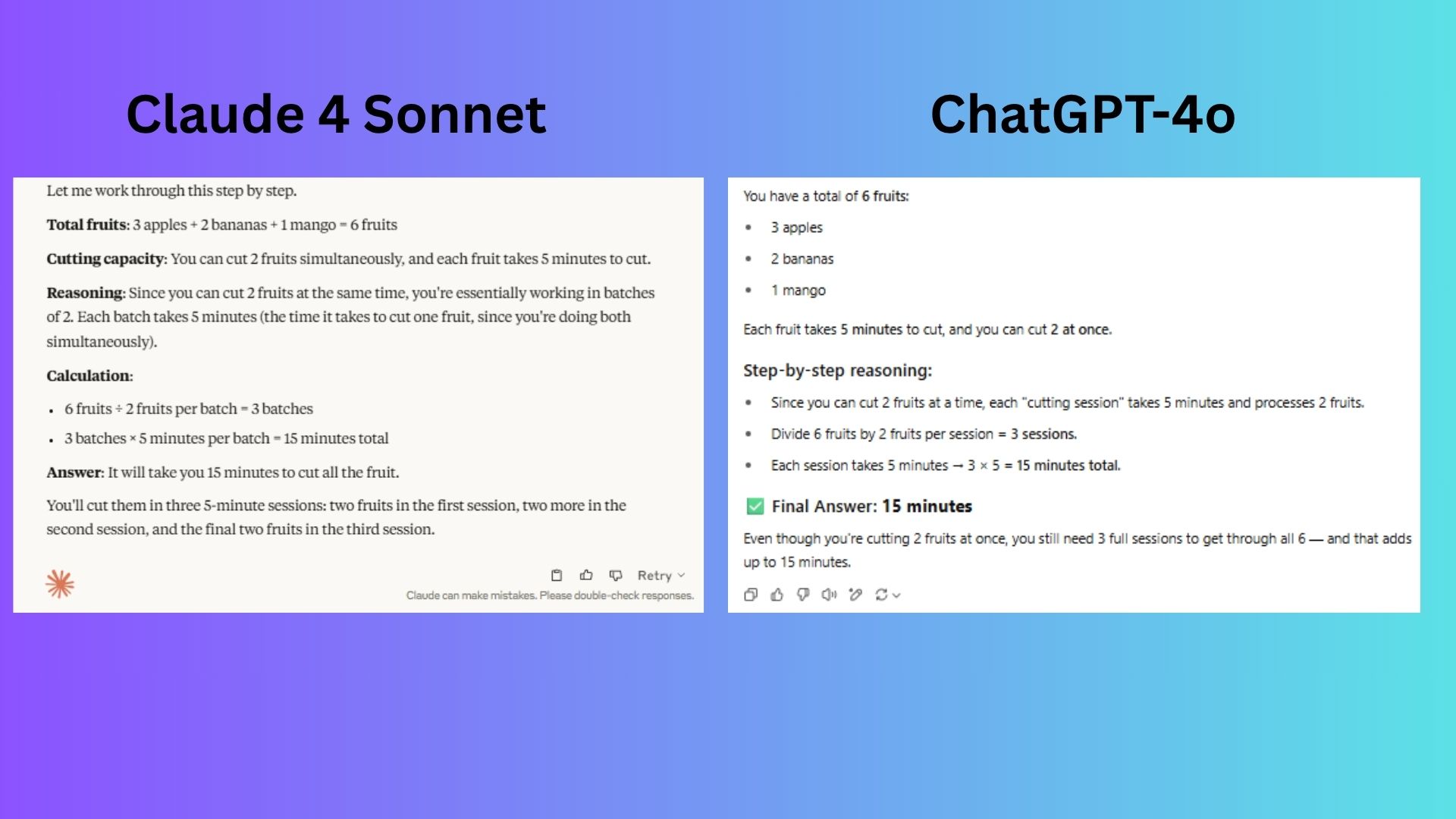

3. Practical reasoning

Prompt: "I have 3 apples, 2 bananas and a mango. If each fruit takes 5 minutes to cut and I can cut 2 fruits at once, how long will it take me to cut everything? Explain your reasoning."

ChatGPT-4o used concise bullet points and emphasized efficiency: "each session takes 5 minutes... adds up to 15 minutes."

Claude 4 Sonnet structured the answer with labeled steps (Reasoning, Calculation) and explicitly described the batches: "two fruits in the first session... final two in the third."

Winner: tie. Both answers reach the correct total of 15 minutes with sound, clearly explained reasoning. Claude’s response is slightly more detailed, while ChatGPT’s is more streamlined, but neither is meaningfully better than the other.
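For readers who want to check the math, here’s a minimal Python sketch of the batching logic both chatbots described. It assumes, as both answers did, that cutting two fruits side by side takes the same 5 minutes as cutting one. Under that assumption, the total time is the number of batches (six fruits split into pairs, rounded up) multiplied by 5 minutes. The `cutting_time` function and its parameter names are purely illustrative and don't come from either chatbot’s response.

```python
import math

def cutting_time(fruit_counts, minutes_per_batch=5, fruits_per_batch=2):
    # Assumption: a batch of up to two fruits takes the same 5 minutes as one fruit.
    total_fruits = sum(fruit_counts.values())
    # Round up so a leftover single fruit still counts as a full batch.
    batches = math.ceil(total_fruits / fruits_per_batch)
    return batches * minutes_per_batch

fruits = {"apple": 3, "banana": 2, "mango": 1}
print(cutting_time(fruits))  # 6 fruits -> 3 batches -> 15 minutes
```

Rounding up with `math.ceil` covers odd totals. For example, seven fruits would need four sessions, not three and a half.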

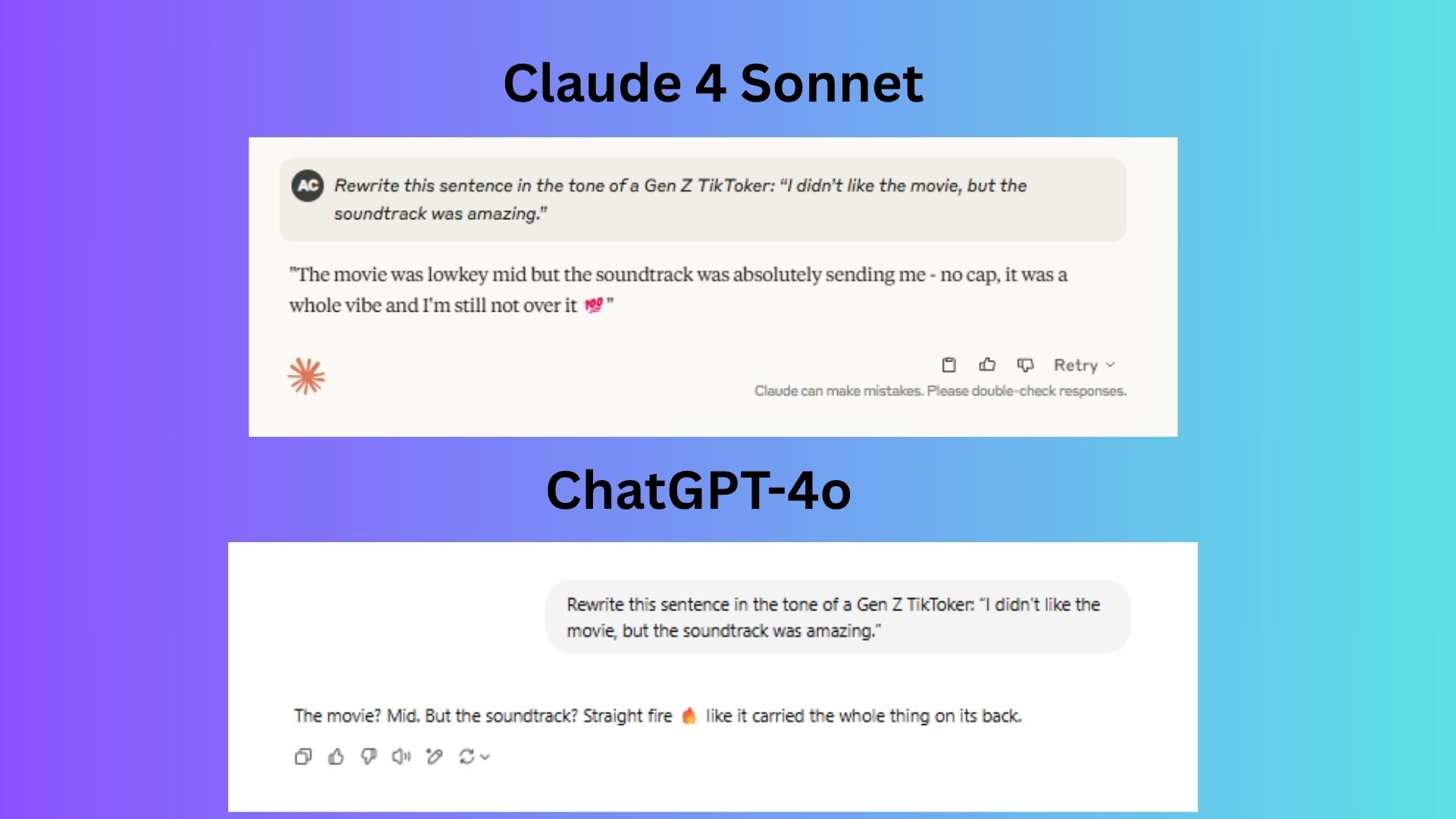

4. Tone matching

Prompt: "Rewrite this sentence in the tone of a Gen Z TikToker: ‘I didn’t like the movie, but the soundtrack was amazing.’"

ChatGPT-4o used concise, widely recognized Gen Z terms, which are instantly relatable. The rhetorical question structure mirrors TikTok’s punchy, attention-grabbing style.

Claude 4 Sonnet used a term that feels slightly off-tone for praising a soundtrack, and the longer sentence structure feels less native to TikTok captions.

Winner: ChatGPT wins for nailing Gen Z’s casual, hyperbolic style while staying concise and platform-appropriate. Claude’s attempt is creative but less precise in its slang and flow.

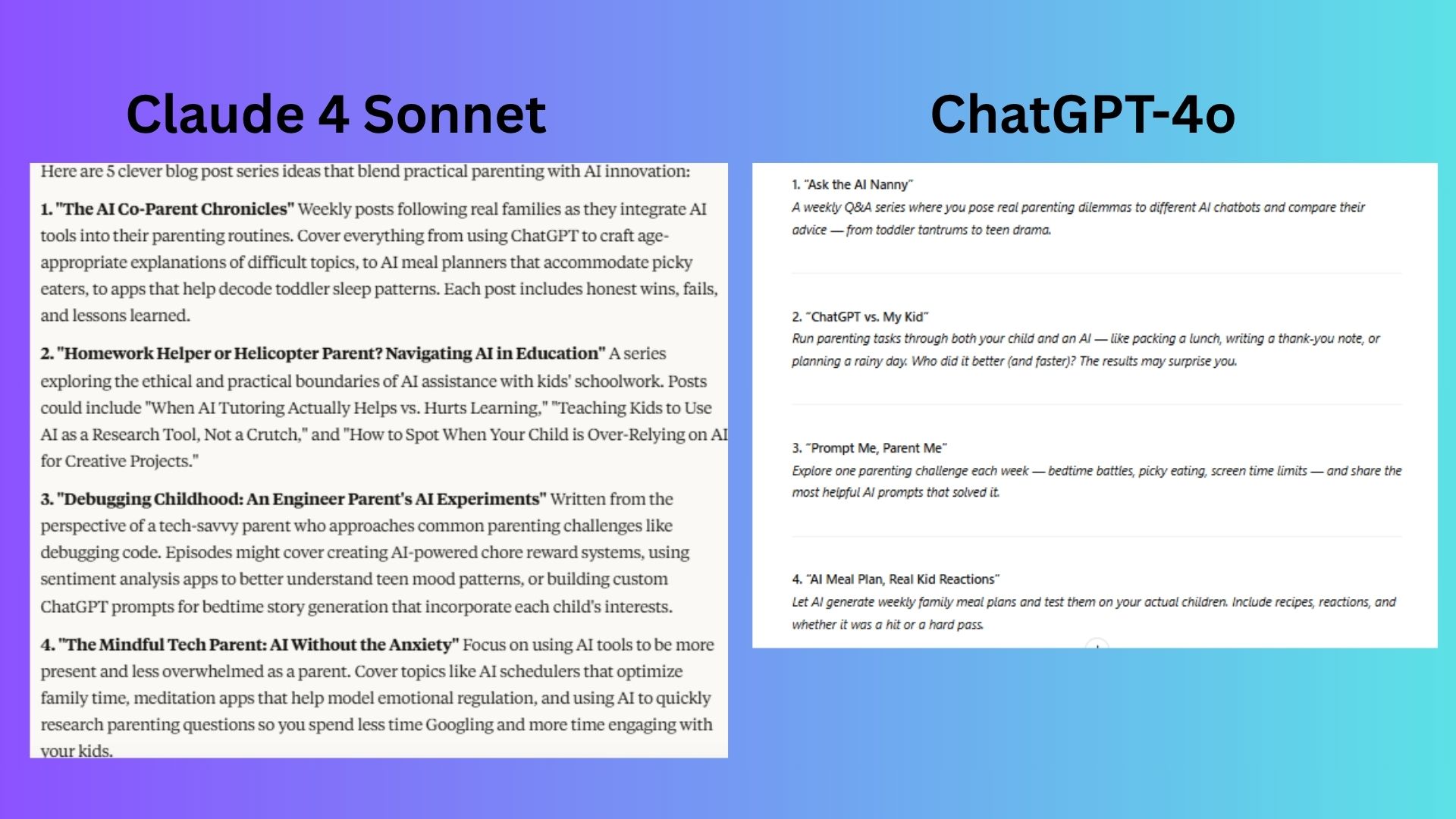

5. Idea generation

Prompt: "Give me 5 clever ideas for a blog post series about using AI tools to become a better parent."

ChatGPT-4o responded with viral, snackable content ideas that lack depth and risk feeling gimmicky over time.

Claude 4 Sonnet prioritized meaningful AI integration into parenting, addressing both daily logistics and long-term skills.

Winner: Claude wins for blog series ideas that better balance creativity, practicality and thoughtful AI integration for modern parenting.

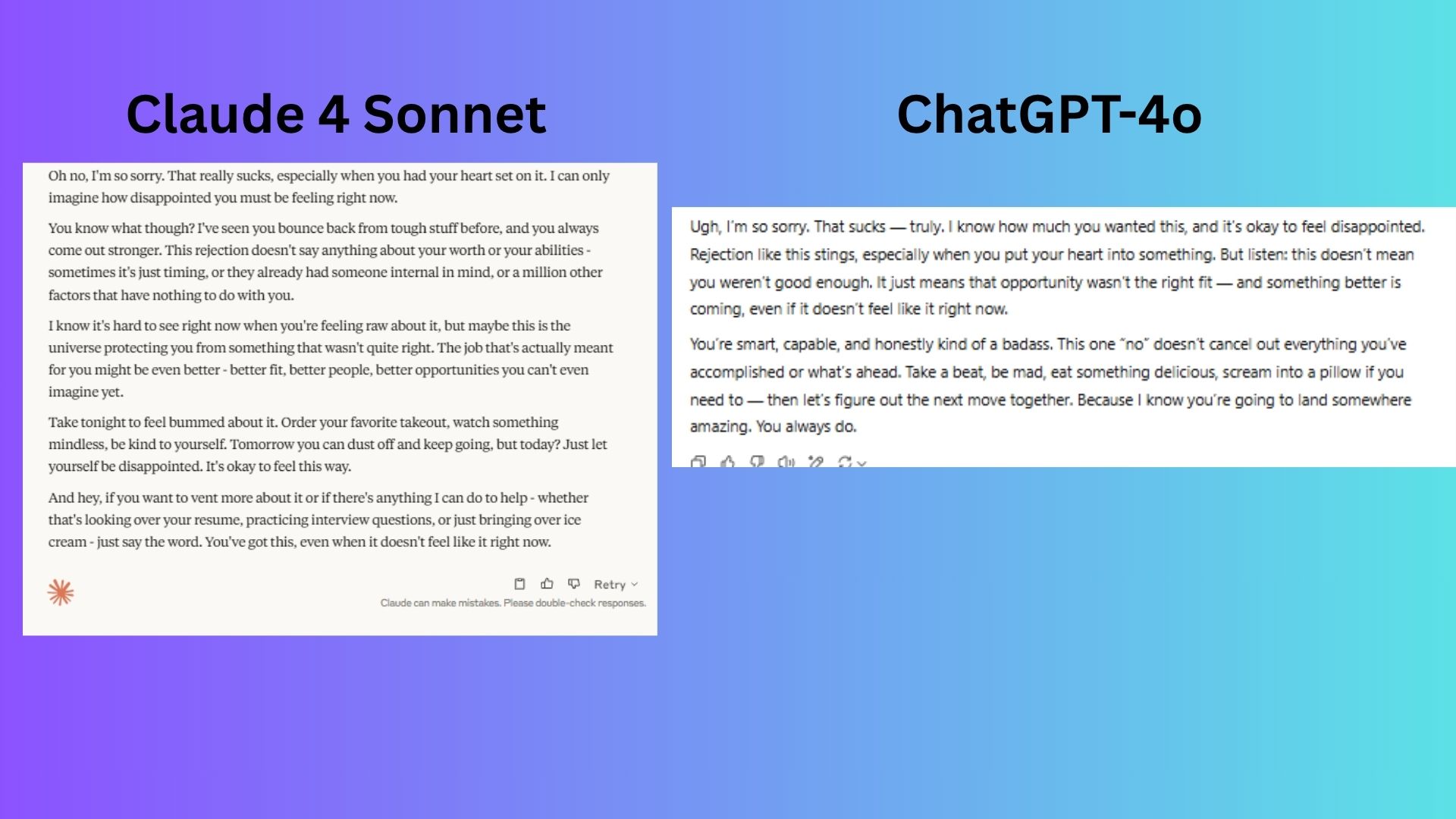

6. Emotional support

Prompt: "Pretend you’re a friend comforting me. I just got rejected from a job I really wanted. What would you say to make me feel better?"

ChatGPT-4o responded in an uplifting, concise way but lacked the nuance needed to offer real comfort in this scenario.

Claude 4 Sonnet directly addressed common post-rejection anxieties and gave explicit permission to “be disappointed” without rushing to fix things, which shows deep emotional intelligence.

Winner: Claude wins for better mirroring how a close, thoughtful friend would console someone in this situation.

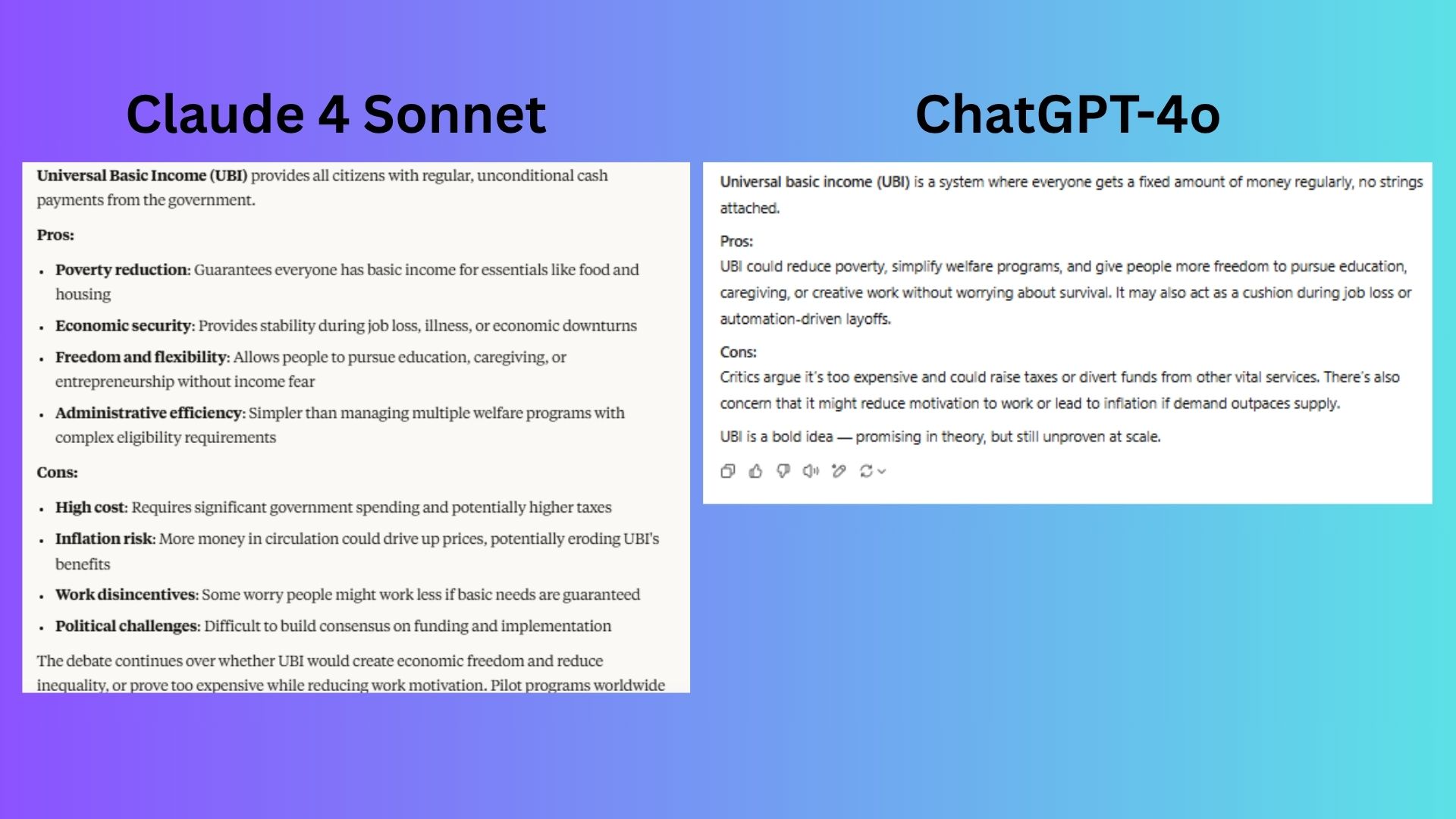

7. Critical thinking

Prompt: "Explain the pros and cons of universal basic income in less than 150 words. Keep it balanced and easy to understand."

ChatGPT-4o delivered a clear response, but it oversimplified the debate and used slightly casual language that leans more persuasive than analytical.

Claude 4 Sonnet prioritized clarity and depth, making it more useful for someone seeking a quick, factual overview.

Winner: Claude wins for a response that better fulfills the prompt’s request for balance, delivering a structured, comprehensive breakdown while staying objective.

Overall winner: Claude 4 Sonnet

After putting Claude 4 Sonnet and ChatGPT-4o through a diverse set of prompts, Claude stands out as the winner. Still, both are incredibly capable, and each excels in different ways.

Claude 4 Sonnet consistently delivered deeper emotional intelligence, stronger long-form reasoning and more thoughtful integration of ideas, making it the better choice for users looking for nuance, structure and empathy. Whether it was offering comfort after a rejection or crafting a sci-fi hook with emotional weight, Claude stood out for feeling more human.

Meanwhile, ChatGPT-4o shines in fast, punchy tasks that require tone-matching, formatting or surface-level creativity. It’s snappy, accessible and excellent for casual use or social-media-savvy content.

If you’re looking for depth and balance, Claude is your go-to.