Why do people cheat? An intriguing study by two Israeli researchers in 2016 put forward a possible reason that has since become well established in the scientific literature and popular media.

The researchers reported a series of experiments apparently showing that people told they have won a skill-based competition, such as a visual task, subsequently cheat more than others in games of chance, such as dice games. The proposed explanation was that winners experienced a sense of entitlement that induced them to cheat.

The paper has been highly cited by other researchers. One scientific comment paper even pointed out its significance in the light of tax evasion costing governments US$3.1 trillion (£2.6 trillion) annually.

But does the finding hold up to scientific scrutiny? We decided to replicate the study and investigate more closely the reasons why people do or don’t cheat.

Our new study, published in Royal Society Open Science, failed twice to replicate the original finding. We found that the original experiments were “statistically underpowered”, meaning they used far too few experimental participants (43 in their main experiment) to sustain the conclusions that were drawn.

There were also problems of experimental design and methodology, notably a failure to randomly decide which participants were winners, losers, or part of a control group who were not told how they had done in the skill-based competition.

We began by replicating the original research as closely as possible, but in a large-scale experiment (252 participants) to achieve adequate statistical power. We also assigned participants randomly to conditions.

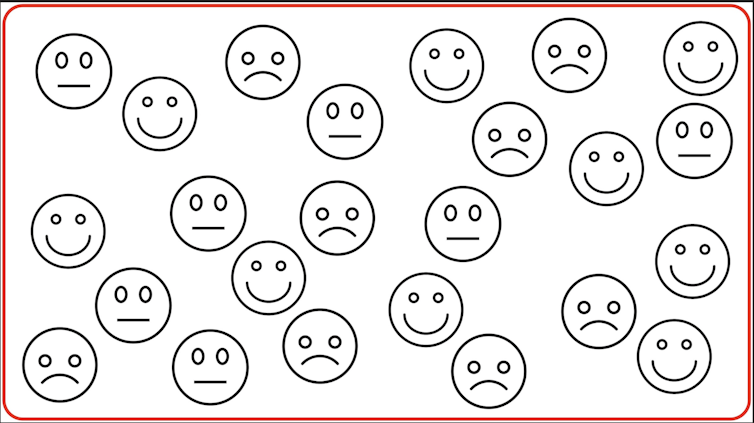

To assign winners and losers, we used the same perceptual judgement test as the original experiment. The test involves the difficult task of estimating which of several different symbols is the most numerous in briefly displayed slides similar to the one shown below.

We put the participants in pairs and told them whether they had scored better or worse than their partner in the skill task. They were then put in new pairs and played a game of chance, also identical to the game in the original research. This involved rolling two dice under an inverted cup and then peeking through a spyhole in its base to see the result.

The players were told to help themselves to money from an envelope provided, depending on what numbers the dice showed: 25 pence for each spot on the dice. While it was impossible to tell who in particular had cheated, participants collecting significantly more on average than chance would predict was evidence of cheating.
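Because every spot is worth 25 pence, the amount an honest player should take on average can be worked out exactly. The sketch below enumerates the 36 equally likely outcomes of two fair dice; the function names and the choice to count money in whole pence are illustrative assumptions, not details from the study.

```python
from fractions import Fraction
from itertools import product

PENCE_PER_SPOT = 25  # reward per dice spot, as described in the article


def honest_payout_distribution():
    """Return {payout_in_pence: probability} for two fair dice reported honestly."""
    dist = {}
    for a, b in product(range(1, 7), repeat=2):  # all 36 equally likely rolls
        payout = (a + b) * PENCE_PER_SPOT
        dist[payout] = dist.get(payout, Fraction(0)) + Fraction(1, 36)
    return dist


def expected_payout():
    """Expected honest payout in pence (exact, as a Fraction)."""
    return sum(payout * prob for payout, prob in honest_payout_distribution().items())


if __name__ == "__main__":
    print(expected_payout())  # 175 pence, i.e. £1.75
```

An honest player should therefore take £1.75 on average (anywhere from 50p to £3.00 on a single roll), so a group whose average haul sits reliably above £1.75 is reporting more spots than fair dice produce.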

We also assigned one-third of the participants to a control group. They were not told whether or not they had beaten their partner in the visual task before playing the dice game.
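Random assignment to equal-sized groups is simple to do correctly. The following is a minimal sketch, assuming participants are identified by arbitrary IDs and that the three conditions should be as equal in size as possible; it is a hypothetical illustration, not the authors' allocation procedure, and it ignores how participants were then paired.

```python
import random

CONDITIONS = ("winner", "loser", "control")


def assign_conditions(participant_ids, seed=None):
    """Randomly allocate participants to three (near-)equal-sized conditions.

    Shuffles a copy of the IDs and deals them out round-robin, so group sizes
    differ by at most one when the total is not divisible by three.
    """
    rng = random.Random(seed)  # seeded generator makes the allocation reproducible
    ids = list(participant_ids)
    rng.shuffle(ids)
    return {pid: CONDITIONS[i % len(CONDITIONS)] for i, pid in enumerate(ids)}


if __name__ == "__main__":
    allocation = assign_conditions(range(252), seed=1)
    counts = {c: sum(1 for v in allocation.values() if v == c) for c in CONDITIONS}
    print(counts)  # 84 participants in each condition
```

Shuffling first and then dealing out in turn guarantees balanced group sizes while still leaving each individual's condition to chance, which is what protects the comparison from self-selection or performance-based sorting.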

Comparing the results to what we’d expect to happen by chance, a small but statistically significant amount of cheating seemed to have occurred, as in the original Israeli experiment. But our results showed no evidence that winning (or losing) had any statistically significant effect whatsoever on cheating, as can be seen in the graph below, where the dotted line shows the value expected by chance, without cheating.
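One way to compare reported rolls with chance is a one-sided z-test. Honestly reported totals of two fair dice have a mean of 7 and a variance of 35/6, so the average of many honest reports should sit close to 7. The sketch below assumes a list of hypothetical reported totals and relies on the normal approximation; the article does not say which test the authors used, so treat this as an illustration of the idea rather than their analysis.

```python
import math
from statistics import NormalDist, fmean

HONEST_MEAN = 7.0         # expected total of two fair dice
HONEST_VAR = 35.0 / 6.0   # variance of the total of two fair dice


def excess_over_chance(reported_sums):
    """One-sided z-test: are reported dice totals higher than honest rolling predicts?

    Returns (z, p_value). Uses the normal approximation to the sampling
    distribution of the mean, which is only reasonable for fairly large samples.
    """
    n = len(reported_sums)
    se = math.sqrt(HONEST_VAR / n)          # standard error of the mean under honesty
    z = (fmean(reported_sums) - HONEST_MEAN) / se
    p = 1.0 - NormalDist().cdf(z)           # chance of a mean this high if nobody cheated
    return z, p


if __name__ == "__main__":
    hypothetical_reports = [8] * 50 + [7] * 50  # made-up data, average 7.5
    print(excess_over_chance(hypothetical_reports))
```

With these made-up reports, an average only half a spot above 7 across 100 people already gives p below 0.05, which is why a small but statistically significant amount of cheating can be detected even though no individual can be identified.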

We also ran an even larger online experiment (275 participants) in which we assigned participants randomly to be winners, losers or control participants using the same perceptual test as before.

In this experiment, each participant tossed a coin ten times and claimed rewards (Amazon gift vouchers) depending on how many heads they tossed. The results were almost identical to our first experiment: we found a similar level of cheating and no evidence of any effect of winning or losing on subsequent cheating.
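For the coin-tossing version, the chance benchmark is a binomial distribution: with fair coins and honest reporting, the total number of heads across all tosses follows Binomial(10 × participants, 0.5). The sketch below computes an exact one-sided p-value for a claimed total; pooling all tosses this way, and the function name, are assumptions made for illustration, not a description of the authors' analysis.

```python
from math import comb

TOSSES_PER_PERSON = 10  # each participant tossed a coin ten times


def pooled_heads_p_value(total_heads_claimed, n_participants):
    """Exact one-sided p-value: P(at least this many heads) if everyone reported honestly.

    Assumes fair coins and independent tosses, so honest total heads follow
    Binomial(10 * n_participants, 0.5). Exact integer arithmetic avoids the
    floating-point underflow that 0.5 ** n would cause for thousands of tosses.
    """
    n_tosses = TOSSES_PER_PERSON * n_participants
    if not 0 <= total_heads_claimed <= n_tosses:
        raise ValueError("claimed heads must be between 0 and the number of tosses")
    tail = sum(comb(n_tosses, i) for i in range(total_heads_claimed, n_tosses + 1))
    return tail / 2 ** n_tosses


if __name__ == "__main__":
    # A single participant claiming ten heads out of ten: about a 1-in-1,000 event.
    print(pooled_heads_p_value(10, 1))
```

A single person claiming ten heads proves little, since it happens by chance about once in 1,024 attempts; what signals cheating is a whole group's total drifting reliably above half the tosses.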

We also used standardised psychometric tests designed to measure differences between people that might influence cheating, including a sense of entitlement, self-confidence, belief in personal luck, and a few other factors. But only one turned out to be statistically significant in all treatment conditions.

Participants who disliked inequality cheated less than others. This is presumably because they had a stronger sense of fairness and considered cheating unfair. A sense of entitlement, on the other hand, was not significantly associated with cheating in any condition.

Ultimately, what makes some people cheat more than others is not fully understood. But our research suggests that people's feelings about inequality are one part of the explanation. There are also momentary circumstantial factors that encourage some people, but not others, to cheat.

Psychology in crisis

The original Israeli finding does not replicate, and this should be viewed in the context of what's known as the replication or reproducibility crisis in psychology. This refers to the fact that many published scientific findings cannot be reproduced when the experiments are repeated.

One of the principal drivers of the crisis is inadequate statistical power, meaning the use of sample sizes that are too small to yield trustworthy results. Our two experiments had extremely high (95%) statistical power, as required by the publisher of our registered report.
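Statistical power can be estimated before an experiment is run. The sketch below uses the standard normal approximation for comparing two groups; the standardised effect size `d` (Cohen's d) is an assumption that must be chosen in advance, and the value 0.5 used here is purely illustrative, not the effect size in either study's power analysis.

```python
import math
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard normal distribution (mean 0, sd 1)


def power_two_groups(n_per_group, d, alpha=0.05):
    """Approximate power of a two-sided, two-group comparison for standardised effect d.

    Normal approximation; ignores the negligible chance of a significant
    result in the wrong direction.
    """
    z_crit = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    return _STD_NORMAL.cdf(d * math.sqrt(n_per_group / 2) - z_crit)


def n_per_group_for_power(d, power=0.95, alpha=0.05):
    """Smallest per-group sample size giving at least the requested power."""
    z_crit = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    z_power = _STD_NORMAL.inv_cdf(power)
    return math.ceil(2 * ((z_crit + z_power) / d) ** 2)


if __name__ == "__main__":
    print(n_per_group_for_power(0.5))           # 104 people per group
    print(round(power_two_groups(20, 0.5), 2))  # roughly one chance in three
```

Under these assumptions, detecting a medium-sized effect with 95% power needs about 104 people per group (an exact t-test calculation gives a slightly larger figure), whereas with 20 per group the same real effect would be missed about two times in three.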

Another driver of the crisis is "publication bias", which is when articles with a positive result are more likely to be published than those with a negative one. Practices such as "p-hacking" (performing multiple different statistical tests on data until one of them turns out to be significant) and "HARKing" (hypothesising after the results are known) are also to blame.
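Why p-hacking misleads can be shown with a short simulation. If k independent tests are run on data where no real effect exists, the chance that at least one comes out "significant" at the 5% level is 1 − 0.95^k. The sketch below checks that formula against simulated pure-noise data; the sample size, number of trials and the simple z-test used are arbitrary illustrative choices.

```python
import random
from statistics import NormalDist, fmean


def familywise_error(k, alpha=0.05):
    """Chance of at least one false positive among k independent tests of true nulls."""
    return 1 - (1 - alpha) ** k


def simulate_p_hacking(k, n=30, alpha=0.05, trials=2000, seed=0):
    """Estimate how often at least one of k tests on pure noise comes out 'significant'.

    Each test is a two-sided one-sample z-test (known sd = 1) of whether the mean
    of n standard-normal draws differs from zero; the null is true by construction.
    """
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    hits = 0
    for _ in range(trials):
        for _ in range(k):
            sample_mean = fmean(rng.gauss(0, 1) for _ in range(n))
            if abs(sample_mean) * n ** 0.5 > z_crit:
                hits += 1
                break  # stop at the first "significant" result, as a p-hacker would
    return hits / trials


if __name__ == "__main__":
    print(familywise_error(5))    # about 0.23
    print(simulate_p_hacking(5))  # close to the value above
```

Trying five unrelated outcomes raises the false-positive rate from 5% to about 23%. When the extra tests reuse the same data they are correlated and the inflation is smaller, but it does not disappear.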

Registered reports, in which investigators submit research proposals, including hypotheses and planned statistical tests before the research is undertaken, can ultimately help eliminate most of the drivers of the replication crisis. Such an approach will no doubt one day help us uncover other reasons why people cheat.

The authors do not work for, consult, own shares in or receive funding from any company or organisation that would benefit from this article, and have disclosed no relevant affiliations beyond their academic appointment.

This article was originally published on The Conversation.