MANILA, Philippines – A day after Vice publicized it online, the DeepNude application for Windows and Linux, which uses machine learning algorithms to digitally undress photos of clothed women, was taken offline.

The developer took the app down after weighing the harm it could cause.

DeepNude's developer, an anonymous programmer who goes by the alias "Alberto," said he originally built the app "for fun." Its artificial intelligence, he said, was based on Pix2Pix, an algorithm developed at the University of California, Berkeley. Pix2Pix is an image-to-image translation technique that can, for example, turn black-and-white images into color ones.

The media attention, he said, drew so many visitors eager to try the app that it crashed.

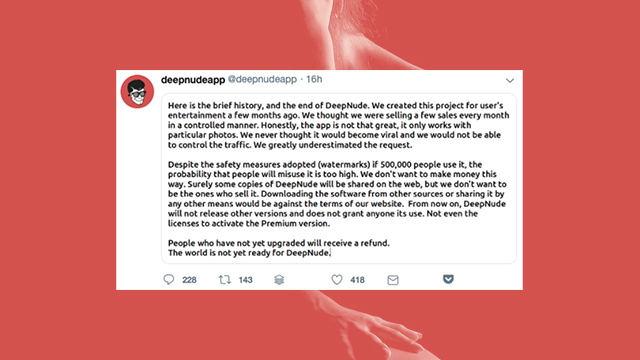

He later chose to end the development of the app because the world was "not yet ready for DeepNude."

He explained that despite the safeguards he had put in place, "the probability that people will misuse it is too high. We don't want to make money this way."

DeepNude, for the most part, is dead, save for pirated copies that may eventually surface.

According to the developer: "Surely some copies of DeepNude will be shared on the web, but we don't want to be the ones who sell it.... From now on, DeepNude will not release other versions and does not grant anyone its use. Not even the licenses to activate the Premium version."

The developer added that those who had bought the Premium version will receive a refund.

News of the app follows the rise of "deepfakes," in which algorithms create lifelike imitations of people saying or doing things they never actually said or did. Such forgeries can be used for propaganda, fake news, or pornography. – Rappler.com