Social media companies have done well out of the United States congressional hearings on the January 6 insurrection. They profited from livestreamed video as rioters stormed the Capitol Building. They profited from the incendiary brew of misinformation that incited thousands to travel to Washington D.C. for the “Save America” rally. They continue to profit from its aftermath. Clickbait extremism has been good for business.

Video footage shot by the rioters themselves has also been a major source of evidence for police and prosecutors. On the day of the Capitol Building attack, content moderators at mainstream social media platforms were overwhelmed with posts that violated their policies against incitement to or glorification of violence. Sites more sympathetic to the extreme right, such as Parler, were awash with such content.

In testifying to the congressional hearings, a former Twitter employee spoke of begging the company to take stronger action. In despair, the night before the attack, she messaged fellow employees:

“When people are shooting each other tomorrow, I will try to rest in the knowledge that we tried.”

Alluding to tweets by former President Trump, the Proud Boys, and other extremist groups, she spoke of realising that “we were at the whim of a violent crowd that was locked and loaded”.

The need for change

In the weeks after the 2019 Christchurch massacre, there were hopeful signs that nations – individually and collectively – were prepared to better regulate the internet.

Social media companies had fought hard against accepting responsibility for their content, citing arguments that reflected the libertarian philosophies of internet pioneers. In the name of freedom, they argued, long-established rules and behavioural norms should be set aside. Their success in influencing lawmakers has enabled companies to avoid legal penalty, even when their platforms are used to motivate, plan, execute and livestream violent attacks.

After Christchurch, mounting public outrage forced the mainstream companies into action. They acknowledged their platforms had played a role in violent attacks, adopted more stringent policies around acceptable content, hired more content moderators, and expanded their ability to intercept extreme content before it was published.

It seemed unthinkable back in 2019 that real action would not be taken to regulate and moderate social media platforms to prevent the propagation of violent online extremism in all its forms. The livestream was a core element of the Christchurch attack, carefully framed to resemble a video game and intended to inspire future attacks.

Nearly two years later, multiple social media platforms were central to the incitement and organising of the violent attack on the US Capitol that caused multiple deaths and injuries, and led many to fear a civil war was about to erupt.

Indeed, social media was implicated in every aspect of the Capitol Building attack, just as it had been in the Christchurch massacre. Both were fomented by wild and unfounded conspiracy theories that circulated freely across social media platforms. Both were undertaken by people who felt strongly connected to an online community of true believers.

The process of radicalisation

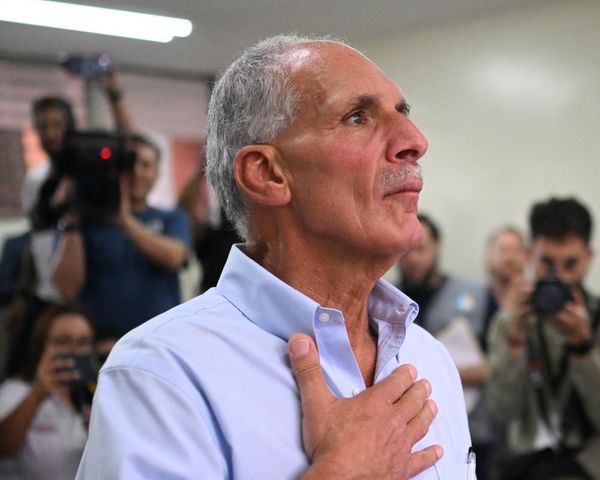

The testimony of Stephen Ayres to the January 6 congressional hearings provides a window into the process of radicalisation.

Describing himself as an “ordinary family man” who was “hard core into social media”, Ayres pleaded guilty to a charge of disorderly conduct for his role in the Capitol invasion. He referenced his accounts on Twitter, Facebook and Instagram as the source of his belief that the 2020 US presidential election had been stolen. His primary sources were posts made by the former president himself.

Ayres testified that a tweet by President Trump had led him to attend the “Save America” rally. He exemplified the thousands of Americans who were not members of any extremist group, but had been motivated through mainstream social media to travel to Washington D.C.

The role of former US President Trump in the rise of right-wing extremism, in the US and beyond, is a recurring theme in Rethinking Social Media and Extremism, which I co-edited with Paul Pickering. At the time of the Christchurch massacre, there was ample evidence that US-based internet companies were providing global platforms for extremist causes.

Yet whenever their content moderation extended to the voices of the far right, these companies faced censure from conservatives, including from the Trump White House. The message was clear: allowing unfettered free speech for the so-called “alt-right” was the price social media companies would have to pay for their oligopoly. Though the growing danger of domestic terrorism was apparent, the threat of antitrust suits was a powerful disincentive for corporate action against right-wing extremists.

Social media companies have faced significant pressure from nations outside the US. For example, within months of the Christchurch attack, world leaders came together in Paris to sign the Christchurch Call to combat violent extremism online. The document was moderate in tone, but the US refused to sign. Instead, the White House doubled down in alleging that the major threat lay in the suppression of conservative voices.

In 2021, the Biden administration belatedly signed up to the Christchurch Call, but it has not succeeded in advancing any measures domestically. Despite some tough talk during the election campaign, President Biden has been unable to pass legislation that would better regulate technology companies.

With the midterm elections looming – elections which often go against the party of the president – there is little reason for optimism. The decisions of US lawmakers will continue to reverberate globally while ownership of Western social media remains firmly centred in the US.

The failure of self-regulation

The spirit of libertarianism lives on within companies that exploded from home-grown start-ups to trillion-dollar corporations within a decade. Their commitment to self-regulation suited legislators, who struggled to understand this new and constantly shape-shifting technology. The demonstrable failure of self-regulation has proven lethal for the targets of terrorism and now presents a danger to democracy itself.

In her chapter in Rethinking Social Media and Extremism, Sally Wheeler asks us to reconsider the basis of the social licence social media companies have to operate within democracies. She argues that, rather than asking whether their activities are legal, we might ask what reforms are needed to ensure social media does not cause serious harm to people or societies.

Now central to the provision of many public services, social media platforms might be deemed public utilities and, for this reason alone, be subject to different and higher standards and expectations. This point was amply if unintentionally demonstrated by Facebook itself when it blocked many sites – including emergency services – during a disagreement with the Australian Government in 2021. In the process, Facebook shone a spotlight on the nation’s growing reliance on a poorly regulated, privately owned platform.

Amid the national outcry following the Christchurch massacre, the Australian government hastily introduced legislation intended to increase the responsibilities of internet companies. Reportedly drafted in just 48 hours before being rushed through both houses of parliament, the bill was always going to be flawed.

Effective reform demands that we first recognise the internet as a space in which actions carry real-world consequences. The most visible victims are those directly targeted by threats of extreme violence – mainly women, immigrants and minorities. Even when the threats are not enacted, people are intimidated into silence, even self-harm.

More insidious, but perhaps just as harmful in the long term, is the overall decline in civility that drives public discourse towards extreme positions. On social media, what is known as the Overton Window of mainstream political debate has not so much been pushed out as kicked in.

There is broad agreement that existing legal and regulatory frameworks are simply inadequate for the digital age. Yet even as the global pandemic has accelerated our reliance on all things digital, there is less agreement about the nature of the problem, much less about the remedies required. While action is clearly needed, there is always the danger of overreach.

The functioning of democratic society depends as much on our ability to debate ideas and express dissent as it does on the prevention of violent extremism. Our challenge is to balance free speech against other competing rights on the internet, just as we do elsewhere. The current approach of simply ratcheting up the penalties faced by social media companies is more likely to tip the balance against free speech. In a communication landscape that is increasingly concentrated in the hands of just a few major corporations, we are in need of more voices and more diversity, not less.

Shirley Leitch is co-editor of the book Rethinking Social Media and Extremism, and has contributed two chapters to the book. She receives no royalties from the book, which is available for free download from ANU Press. During the last federal election, she became a volunteer for the Independent MP for Goldstein, Zoe Daniel. Shirley does not work for, consult, own shares in or receive funding from any company or organisation that would benefit from this article, and has disclosed no relevant affiliations beyond her academic appointment.

This article was originally published on The Conversation.