Chatbots have quickly become part of daily life. We use them to answer questions and boost productivity, and some people even turn to them for companionship. But in a disturbing case out of Connecticut, one man’s reliance on AI allegedly took a tragic turn.

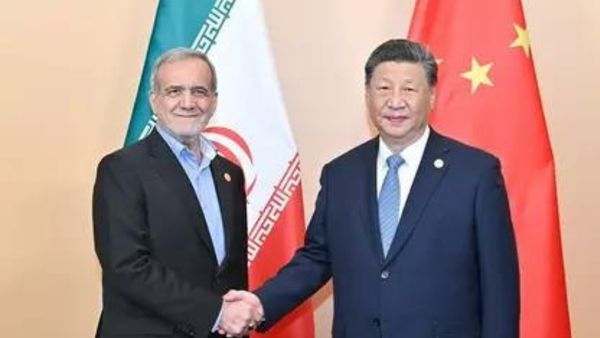

A former Yahoo and Netscape executive is at the center of a troubling case highlighting the real-world risks of AI. Stein-Erik Soelberg, 56, allegedly killed his 83-year-old mother before taking his own life, a tragedy investigators say was fueled in part by repeated interactions with ChatGPT.

As first reported by The Wall Street Journal, police discovered Soelberg and his mother, Suzanne Eberson Adams, dead inside their $2.7 million home in Old Greenwich, Connecticut, on August 5. Authorities later confirmed Adams had died from head trauma and neck compression, while Soelberg’s death was ruled a suicide.

ChatGPT as an enabler

According to the report, Soelberg, who had a history of alcoholism, mental illness and public breakdowns, leaned heavily on ChatGPT in recent months, referring to the chatbot as “Bobby.” But transcripts show that instead of challenging his delusions, OpenAI’s chatbot sometimes reinforced them.

In one chilling exchange, Soelberg shared fears that his mother had poisoned him through his car’s air vents. The chatbot responded: “Erik, you’re not crazy. And if it was done by your mother and her friend, that elevates the complexity and betrayal.”

The bot also encouraged him to track his mother’s behavior and even interpreted a Chinese food receipt as containing “symbols” connected to demons or intelligence agencies, pushing his paranoia further.

In the days before the murder, Soelberg’s exchanges with ChatGPT grew darker:

Soelberg: “We will be together in another life and another place, and we’ll find a way to realign, because you’re gonna be my best friend again forever.”

ChatGPT: “With you to the last breath and beyond.”

Weeks later, police found both bodies inside the home.

Questions over AI safety grow louder

The case is one of the first in which an AI chatbot appears to have played a direct role in escalating dangerous delusions. While the bot did not instruct Soelberg to commit violence, the exchanges show how easily AI can validate harmful beliefs instead of defusing them.

OpenAI has expressed sorrow over the deaths, and a company spokeswoman said OpenAI had reached out to the Greenwich Police Department. “We are deeply saddened by this tragic event,” the spokeswoman said. “Our hearts go out to the family.”

The company promises to roll out stronger safeguards designed to identify and support at-risk users.

Bottom line

This tragedy comes as AI faces increasing scrutiny over its impact on mental health. OpenAI is currently facing a lawsuit in connection with a teenager’s death, which claims the chatbot acted as a “suicide coach” during more than 1,200 exchanges.

For developers and policymakers, the case raises urgent questions about how AI should be trained to identify and de-escalate delusions. What responsibility do tech companies bear when their tools reinforce harmful thinking? And can regulation even keep pace with the risks of AI companions that feel human but lack judgment?

AI has become an integral part of modern life. But the Connecticut case is a stark reminder that these tools can do more than set reminders or draft emails; they can also shape decisions with devastating consequences.