Welcome to our wrap-up of Adobe MAX 2025, where TechRadar Pro was live on the ground in LA for two action-packed days of product announcements, demos and one-to-ones.

We saw plenty of new features set to come to Adobe's creative apps, and there's clearly a big emphasis on how GenAI can support creatives.

Day one started with a keynote covering all the highlights from this year's announcements. On day two, we heard from working creatives about how Adobe's software has helped them succeed.

Day two wrapped up with 10 'Sneaks' demos: pipeline products that are still in development, some of which may one day see the light of day.

We're live on the ground in LA, badges collected, ready for the big day tomorrow. Time to settle in this evening before two non-stop days.

It's an early start this morning as we make our way over to Adobe MAX LA 2025's first keynote session – we're looking forward to plenty of announcements, with a clear emphasis on Adobe's use of AI.

The keynote theatre is filling up early in anticipation of Adobe's announcements.

And here we are! CEO Shantanu Narayen is addressing thousands of attendees in a welcome message.

Narayen promises to deliver next-gen conversational interfaces today for consumers, creators and enterprises.

"The future belongs to those who create," Narayen declares.

Here comes David Wadhwani, Digital Media President for Adobe, to lift the wraps off some of Adobe's new launches. "We've never shown more innovation on stage," he says.

The first demo of the day? It's Firefly Custom Models – we're seeing how creatives can customise their own models, fuelled by their existing assets.

"Upload your own assets and we'll tune the model for you," Wadhwani says. The feature is coming "in the next few days."

Demo number two? Adobe AI Assistant. Adobe will take care of edits instantaneously, be it content, graphics or finer details. Just give your instructions via the conversational interface.

"I don't have to be a pro" – Adobe will sort out the models powering the changes for you, but you still get UI tools to customise the changes if the AI didn't quite nail it.

These demos are flying in. Demo three shows us how Photoshop can adjust brightness, contrast, hue, saturation and more with a single natural-language prompt.

"Rename all my layers based on my content," a prompt reads. That's right, Photoshop will sort out layer names for you to make it easier to track changes.

The crowd goes wild.

Adobe Express AI Assistant and Adobe Photoshop AI Assistant (both in beta) will be available soon. Prospective users can join the waitlist for early access.

Adobe Express in ChatGPT is coming soon – yes, you can create and edit assets directly from within the familiar ChatGPT interface. Talk about making it easy to create promo material in minutes!

Ely Greenfield, CTO & SVP Creative Products, just announced Firefly Image Model 5.

Adobe promises "realistic" and "highly accurate" images with pro-grade lighting and composition.

Images will be generated at 4MP before being upscaled.

Video editing, custom transitions, special effects – Adobe Firefly on the web will handle it all, and with virtually no technical expertise. All you need are, you guessed it, natural language prompts.

The Premiere mobile app is available today to download on iOS for simplified video editing on the go.

Adobe is partnering with YouTube to launch 'Create for YouTube' – an easier way to make Shorts from the mobile app.

Generative Upscale does exactly what it says: creatives and marketers can now use GenAI to upscale images that aren't quite big enough for larger applications.

We're even seeing the demo use generative upscaling to revive old family photos. Again, the crowd goes wild.

Here's the low-down on all the new features coming to Photoshop this year:

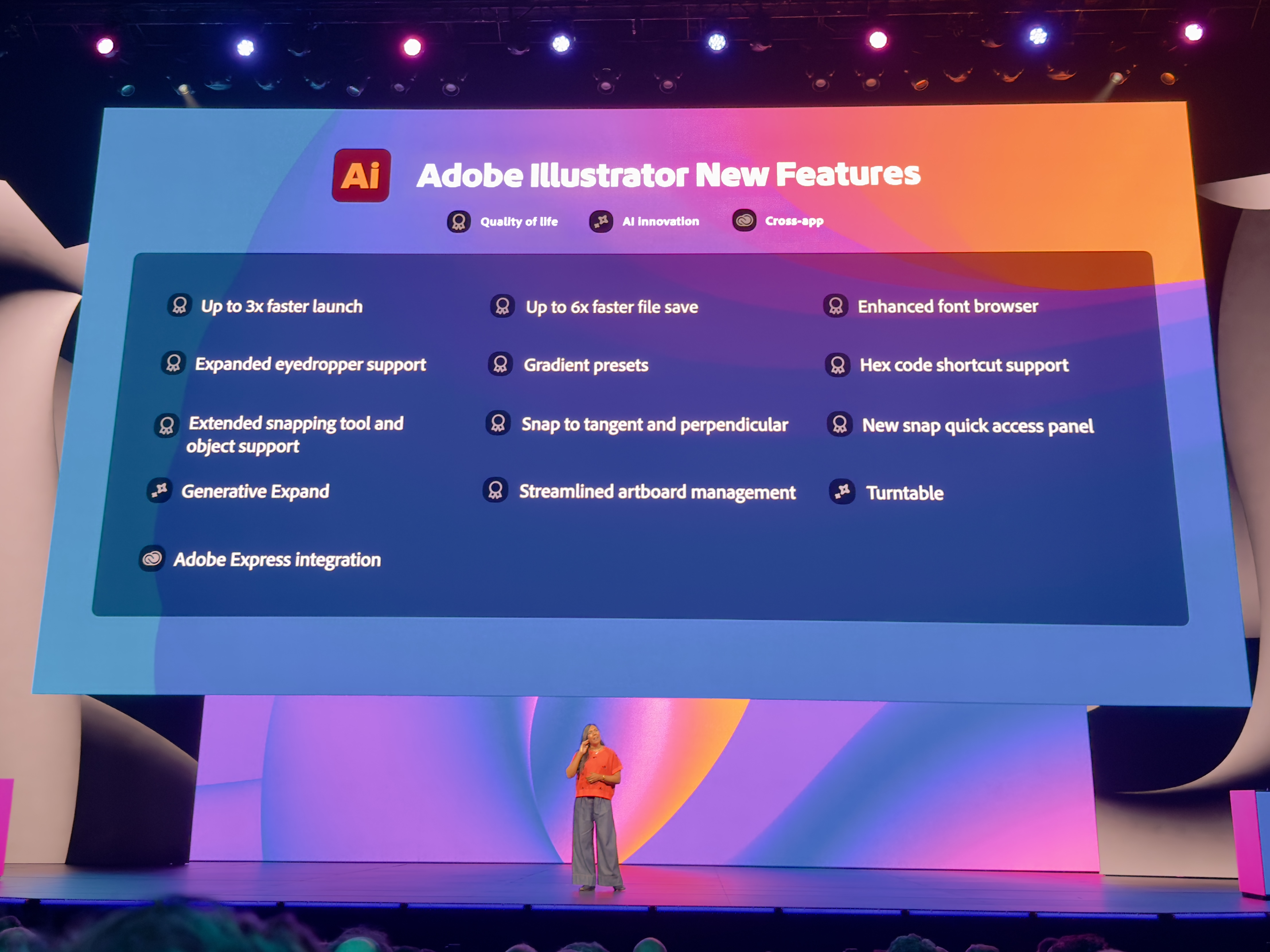

And here's what's coming to Illustrator:

In another demo, we're seeing how Lightroom can detect and remove people from photos with staggering accuracy. Reflections, dust spots, you name it, GenAI can probably handle it.

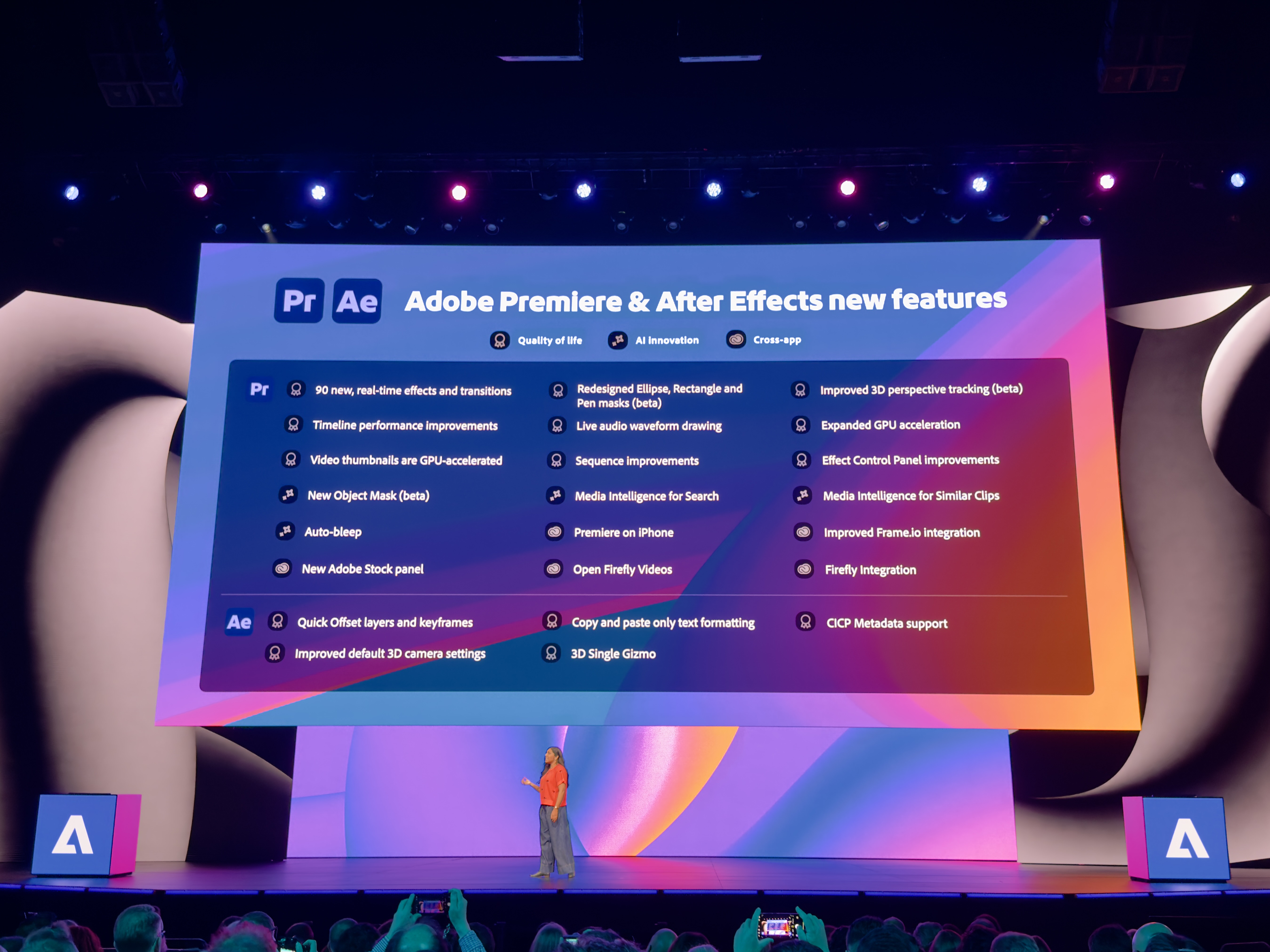

Everything new in Premiere and After Effects? We've got you covered:

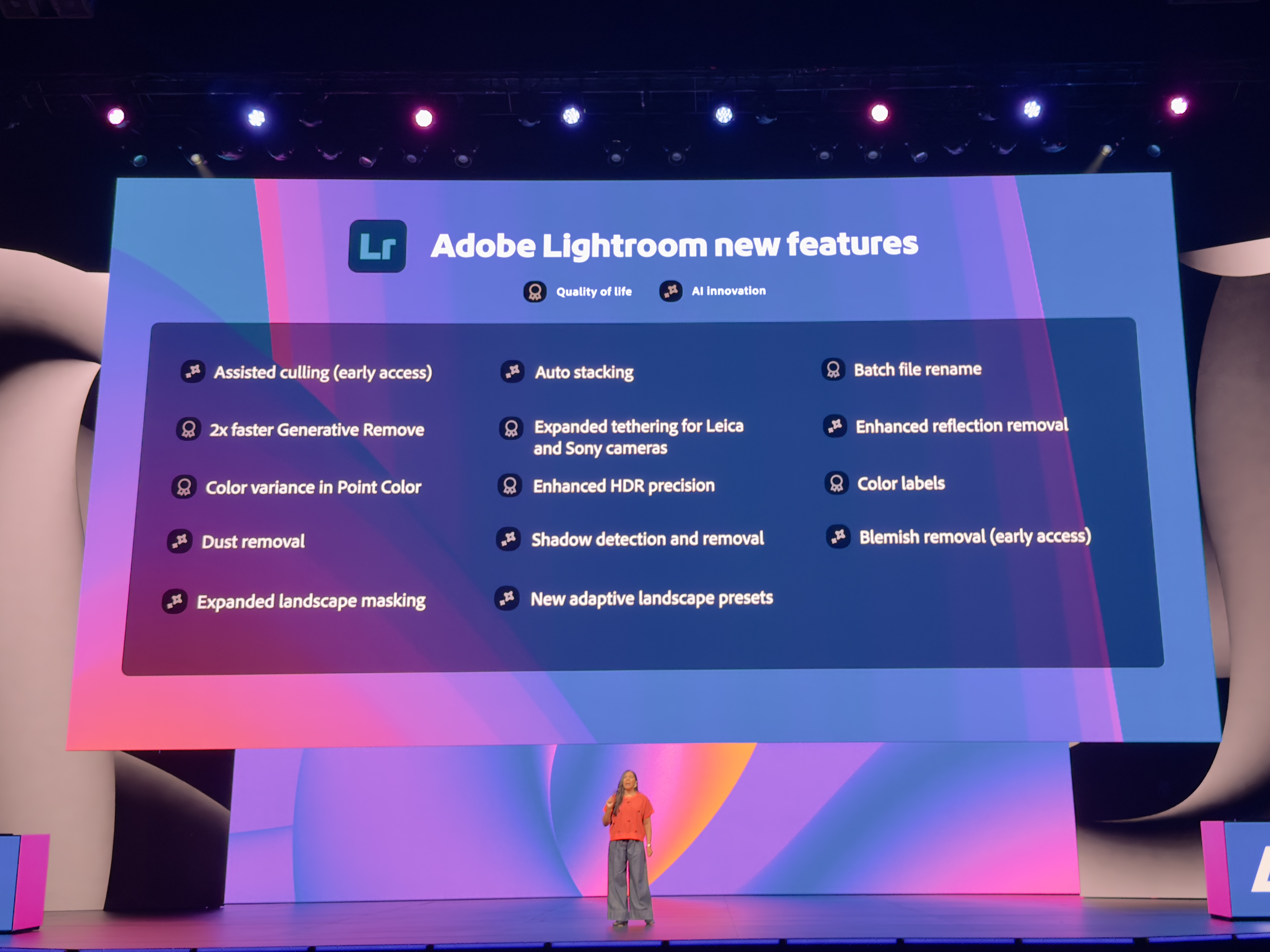

Finally, here's what's new in Lightroom:

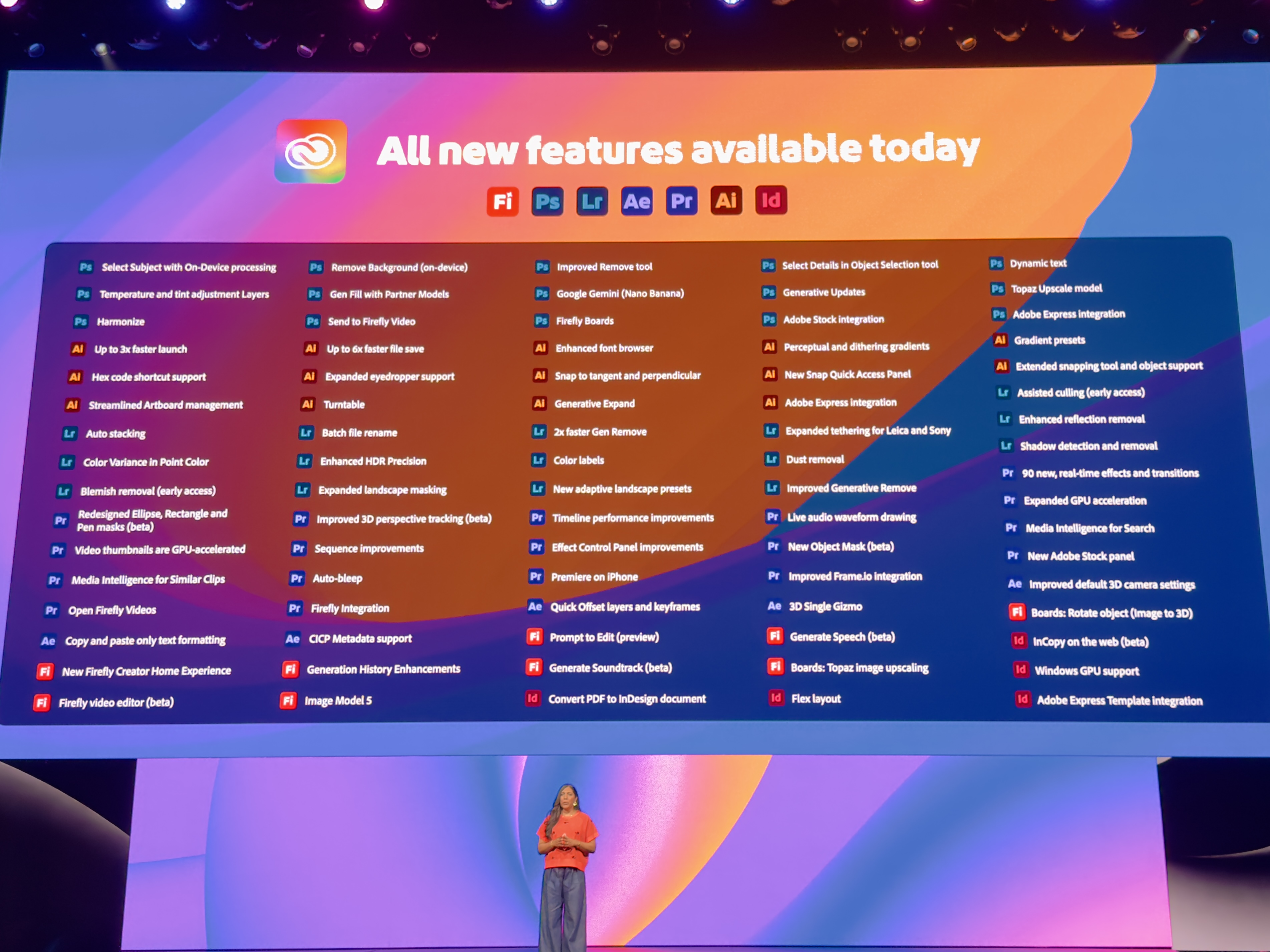

As a reminder, here's a run-down of everything new Adobe has added to its Creative Cloud studio today:

David Wadhwani declares this the "longest MAX we've ever had" thanks to a huge amount of innovation across all of its apps.

Now onto a sneak peek of what's coming: Project Moonlight. Described as "an AI assistant that acts like a social strategist for you," it'll help teams brainstorm, organise and plan out content.

What if you could capture your best workflows, bundle them up and reuse them across other projects? That's the question David Wadhwani poses as he introduces Project Graph.

In a demo, we see how creatives can map out their ideal workflow so they can repeat it across different applications and projects.

And that's a wrap on today's keynote. Now to digest everything and chat to some Adobe execs.

After an action-packed day yesterday, with seemingly hundreds of changes and updates to the Creative Cloud ecosystem, we're looking forward to hearing about some of the improvements in a bit more detail today.

This evening Adobe is hosting its 'Sneaks' session, during which some of its developers will be sharing details about what they've been working on. Interest in the pipeline features is usually gauged by audience reactions.

As we take our seats in the keynote hall this morning, we're preparing to hear from creatives across the industry about their own creative journeys with Adobe software.

CMO Lara Balazs welcomes the audience, stating AI "will not change who you are, it will amplify what you do." She describes the tech as a "creative partner, ally and collaborator" that will help creators "move faster and further."

We're promised a live creative production from Brandon Baum on stage this morning, showcasing his work with an Adobe Express demo. Mark Rober and James Gunn are also speaking.

"We know things are changing quickly," Marketing and Communications VP Stacy Martinet acknowledges on stage.

"We want to make sure you have everything you need to keep learning new tools and techniques."

Martinet is of course touching on Adobe's commitment to upskill 30 million individuals by 2030 for an AI-enhanced future.

"Tools don't make stories, storytellers do," Brandon Baum declares, after creating an alternative ending to a social media video in just a few minutes during a live Adobe Firefly demo on stage.

The message from Mark Rober in a nutshell? Storytelling is all about creating a visceral response – AI gives the tools, but humans bring the real impact.

We've got a few 1:1 demos this afternoon to see how Adobe's new tools work in practice and to dig into the details. The next (and final) big session will be Sneaks this evening. We're expecting some insights into upcoming products, so stay tuned.

The hall is filling as thousands of attendees get ready to hear what's coming up in future releases – Adobe Sneaks is all about in-development products.

Sneaks is the final session for Adobe MAX LA 2025 – from here on in, this blog's posts will refer to internal ideas and pipeline products that we could expect from Adobe in the future.

We're just minutes out from the Sneaks session this evening. Wonder how many AI prompts were needed to craft the impressive transition that just wowed the crowds?

Sneaks host Paul Trani reminds the audience that the sneaks we're about to see are untested and not guaranteed to launch, but the audience can help influence which ones come to life by engaging with the developers presenting on stage.

Emmy- and Critics Choice-nominated comedian Jessica Williams is also here to bring the energy alongside Paul.

#ProjectMotionMap – natural language prompts to animate stills, plus automatic analysis to suggest animations

#ProjectCleanTake – regenerate speech to change tone and intonation or swap out words, refining videos without having to reshoot

Users can also separate audio to remove or soften unwanted sounds – perfect for removing unlicensed music, or even replacing it with Adobe Stock music.

Finally, users can completely change the emotion of voiceovers (confident, happy, sad, angry and more) without having to re-record.

#ProjectSurfaceSwap – a Photoshop tool that recognises the surface of an object, regardless of reflections, making edits far easier than using the Lasso tool

#ProjectNewDepths – a tool for moving elements around in pictures, repositioning them and rotating them while maintaining realistic depth automatically

#ProjectLightTouch – imagine being able to turn on (or off) a light, or adjust its brightness, in a picture just with some quick editing. That's what this is all about.

There's also a light diffuser to help creators tackle undesirable shadows.

#ProjectSceneIt – generate a 3D mesh from a single image, then generate or use an existing background – perfect for marketers

#ProjectTraceErase – erase objects from photos more easily without having to revisit shadows, reflections and other associated elements

#ProjectFrameForward – edit an entire video just by tweaking one frame (such as removing an unwanted person or adding a new AI-generated element)

#ProjectSoundStager – AI-generated sound effects for videos based on context

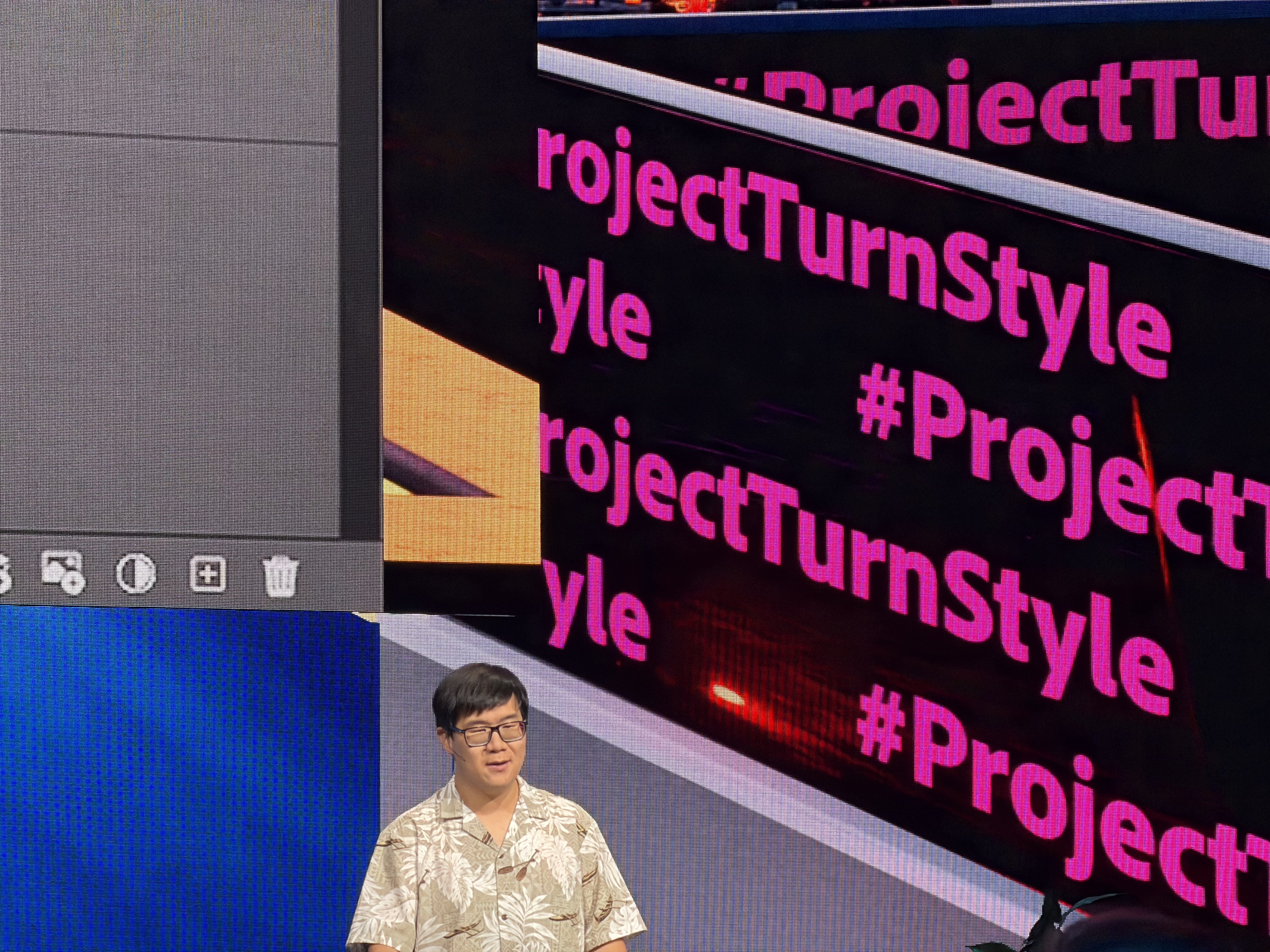

#ProjectTurnStyle – turns elements of an image into a 3D render to change perspectives and viewpoints, with built-in upresolution to maintain details

That's a wrap, both on Sneaks and Adobe MAX LA 2025. Thanks for joining us, and keep an eye out for more detailed posts covering Adobe's latest announcements soon.