By midday, Jessica has dealt with five calls from highly distressed young women in their 20s, all close to tears or crying at the start of the conversations. She absorbs their alarm calmly, prompting them with questions, making sympathetic noises into her headset as she digests the situation. “Are these images sexual in nature?” she asks the last woman she speaks to before lunch. “Do you want to tell me a bit about what happened?” She begins compiling a tidy set of bullet points in ballpoint pen. “It’s all right. Take your time.”

Jessica is sitting at a bank of desks with three other women, responding to calls to the Revenge Porn Helpline. For the past decade, the helpline has been offering advice to callers whose partners or exes have uploaded nude images or footage of them without their consent. “It’s a shocking time for you. I’m so sorry to hear what happened,” she says, as the caller explains that, just a few hours earlier, an ex-partner messaged her to tell her he had decided to post videos of them having sex on the OnlyFans website.

“Have you called the police? And they haven’t got back to you?” She listens, looking out of the window towards a line of trees gently swaying at the edge of the car park. “That is unfortunate. We will do absolutely all we can to get that content removed for you.”

The evolving work of the Revenge Porn Helpline acts as a useful mirror to the rapidly developing ways in which people use the internet to inflict pain and misery, and to make money. The helpline was launched by a small safer-technology charity in 2015 (it is now government-funded), at a time when the simultaneous growth in smartphone ownership and the soaring use of social media gave rise to the unexpected problem of nude images being shared without consent. In its first year, staff handled 521 cases; last year, 22,264 people got in touch, a more than fortyfold increase since the service opened. Reports rose by 20.9% in 2024. Originally, most callers were female, but by 2023 the balance had shifted, and more men got in touch than women. At the current rate of increasing demand, staff expect to be responding to double that caseload by 2028.

The variety of material they encounter is changing fast. A few callers are concerned that AI has been used to insert their faces into pornography performed by other people. In the helpline’s early years, this happened very occasionally, and was easy to detect. “The technology is so much better now that it can be very difficult to determine if footage is synthetic or genuine,” says Sophie Mortimer, the helpline manager. “People tell us: ‘That’s me, but I don’t remember it being filmed.’ It is very disconcerting to see that content and not know whether it’s real or not.”

Although the service remains known as a helpline for the victims of “revenge pornography”, staff deal with a wide range of issues, and are quietly apologetic about the service’s name – since the term suggests the victim has done something to provoke what has happened. In reality, revenge is not the only motivation for this behaviour, and sometimes material is posted by strangers. Many calls come from people who are being blackmailed by criminals who have entrapped them online.

Opposite Jessica, another operator, Alice (both women have asked that their full names not be printed), is advising a man in his 30s who was encouraged into sharing an image of his genitals with a man he had met the night before on a dating platform. Instantly, the man switched from flirtation to extortion, threatening to share the image with a list of friends and relatives, contacts gathered from his victim’s social media accounts. “Have you blocked them?” she asks. “Yes, it is distressing. Are you feeling OK?”

Despite the bleak content under discussion, a day in the helpline’s offices in a business park on the outskirts of Exeter ends up being unexpectedly positive. The staff, who are paid employees rather than volunteers, offer clear, practical advice and explain the constructive routes that can help people extract themselves from a range of desperate situations. Most callers are hugely reassured by the time they hang up.

Jessica explains to her caller that the OnlyFans website is usually very responsive to requests for content to be taken down (the helpline has a 90.9% success rate in getting nonconsensual intimate material removed). She offers to help check whether the material has been posted elsewhere. “You’ll need to email in a photo and we can do a facial-recognition search through public websites.”

After the call, Jessica tells me: “You can hear the relief in their voices when we tell them they’re not to blame. Often, there’s a sense of shame, a feeling that they shouldn’t have shared the images.” The reaction callers receive from the police is often quite different. “Unfortunately, the police will sometimes still say: ‘Why did you take those pictures in the first place?’ That self-blame gets reinforced. We explain that they are the victim of a crime and they can report it.”

The helpline department is separated from the administrative side of the office by screens, with a sign by the entrance warning: “Caution. Content being reviewed”. On a pinboard, a graph shows the dramatic rise in demand for the helpline’s services. Employees have decorated the board with bawdy fridge magnets, keyrings and bottle openers shaped as breasts and penises, brought back from their holidays. A flesh-coloured, torso-shaped teacup with large breasts sits on the windowsill.

“It’s a very close team, very supportive of each other and what we’re trying to achieve. You develop a certain dark humour,” Mortimer says in explanation. The work is stressful, and employees have regular counselling sessions, but there is also a need to destigmatise the content under discussion, she adds. She told a parliamentary select committee earlier this year that during her nine years with the helpline, “the rules of romantic engagement as we knew them have changed”.

“Alongside the increasing popularity of sexting, people have simply become much more comfortable exchanging nude images of themselves, regardless of age or gender,” she said. “And as long as you’re an adult, it’s consensual and it’s legal, then there is absolutely nothing wrong with that.”

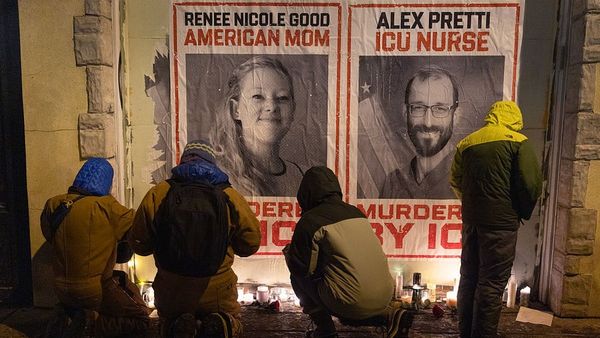

But for those who find that sexual images have been shared online without their consent, the impact can be life-shattering. One woman told the same committee that her full name had been published alongside the nonconsensual photographs, and this had made her life so complicated she had felt obliged to change her name by deed poll. At times, she wished she had been subjected to a physical sexual assault rather than an online one, so that “at least the replaying of the abuse would be within the privacy of their own head, rather than online for anyone to see”, the committee report noted.

Another woman described the impact of this material remaining online as “exhausting”. “I am terrified of applying for jobs for fear the prospective employer will Google my name and see. I am terrified when meeting new people that they will Google my name and see,” she told MPs on the Women and Equalities Committee for their report into nonconsensual intimate image abuse, published earlier this year.

Reality TV star Georgia Harrison told the parliamentary inquiry about the impact of her ex-partner’s decision to share film of them having sex, which he had taken without her consent. “I always compare it with grief: you have to actually grieve a former version of yourself; you feel like you lose your dignity and a lot of pride, there is so much shame involved in it.”

Staff at the helpline are under pressure to remove content quickly, before it is reshared. “It is really like a house fire: the quicker you can put it out, the quicker you can stop it,” Harrison explained to MPs, adding that she had not been alerted to the content in time. “Unfortunately, in four to six days, your house has burned down and it is just too late, everyone knows about this video: your family, your workplace, your peers. However, if you can get through to someone in the first 24 hours, you then have time to stop this going any further and potentially not ruining your life.”

Once material is shared on smaller sites, or sites based outside the UK, it becomes harder to get it removed. Some perpetrators are prolific; staff have reported for removal more than 200,000 images of more than 150 women shared by one individual, but are struggling to get another 8,000 pictures posted by the same man taken down from noncompliant sites. While US legislation has made it easier to oblige companies to comply with take-down requests, sites based in Russia, South America and parts of Asia remain “pretty safe places if you want to set up a site trading in this sort of content”, Mortimer says.

The longer Mortimer has spent with the charity, the more convinced she has become of the strong correlation between men who share nonconsensual images and coercive and controlling behaviour. “Sharing of these images doesn’t happen in isolation. The cases are often complex … often you find there was already abuse in the relationship. We do a lot of signposting to domestic abuse charities.”

Recent changes to legislation in the Online Safety Act should make it easier to get nonconsensual intimate content removed. The government has made the creation of nonconsensual synthetic content (deepfakes) an offence in principle, in the Data (Use and Access) Act, which received royal assent in June but is only partially in force as yet.

In between calls, Jessica is responding to a stream of emails that have come in overnight, most of them about sextortion; staff have noticed these messages often come through in the early hours of the morning, when people have spent hours on their phones, chatting and sharing content. “You can sense the desperation in the messages. They’re saying: please help me urgently. They’ve been told: if you don’t send the money by 7am, we will release the images. We’re often the first people they get in touch with,” she says.

Meanwhile, Alice commends her caller for quickly blocking the blackmailer, and explains that the organised criminal groups behind most sextortion attempts usually lose interest once it becomes clear no money is going to be transferred. It is rare for sextortion images to have already been uploaded. “The bargaining chip is lost as soon as they are shared,” she says. She tells him to go to the stopncii.org website and use the image he shared to generate a unique code, known as a hash. This digital fingerprint of the photo is added to a bank of nonconsensual images, and most reputable partner sites will then block the image if anyone attempts to upload it.

Not all mainstream internet companies are compliant with the hashing regime; Google has yet to sign up, although helpline staff are hopeful it will change its policy. (Google said in a statement that it is working with StopNCII to find solutions to help address this type of harmful content, and wanted to “make sure that interventions on our platform will work, and won’t lead to negative outcomes”.)

“We hear from some people who have already sent thousands of pounds, but we explain that no money is ever enough once the criminals realise you are able and willing to pay,” Alice says. While sharing of nonconsensual intimate images is overwhelmingly (97%) an offence committed against women, 93% of the sextortion cases involve male victims. Staff cannot explain why this is, but say they have observed that women tend to be slower than men to engage in online sexual activity with strangers. Many of the perpetrators turn out to belong to criminal gangs based abroad. Students and younger victims are typically asked for smaller sums of money; some people are asked to send an online gift card, because it can be harder to trace.

Staff are aware of a surge in pornographic deepfakes, artificially generated pornography using a real person’s face, mostly of women. They cite research by the campaign group My Image My Choice, which found more than 275,000 deepfake videos on the most popular sites in 2023, with more than 4 billion views, and more videos uploaded to these sites that year than in all previous years combined. Sometimes images are taken from people’s holiday pictures, such as bikini shots they have posted on Instagram, and turned into porn using easily downloadable apps. Use of nudification apps, which rely on AI to remove someone’s clothes in an image or video clip, is soaring; in March, the team reported 29 different undressing apps to Apple for blocking. The apps were removed, but staff are puzzled about why they were initially approved as products suitable for download.

Despite the global proliferation of this phenomenon, it is relatively rare for women to contact the helpline with this issue. Kate Worthington, the senior helpline practitioner, says a lot of the synthetic content is created for private sexual gratification rather than for shaming or hurting people. “The numbers are increasing but, with synthetic material, there isn’t always an intention of the victim knowing that the content has been generated. There is a collector culture side of things, where online spaces are used to sell, swap or trade intimate content, so that’s where they have been circulating. Women wouldn’t necessarily know their images are on these sites.”

Before the end of her shift, Jessica takes a call from a woman who has been quoted £3,500 from a private company to remove nude images shared by an ex-partner that are now appearing on a variety of adult websites. “We would really recommend not doing that,” she advises. “You shouldn’t have to pay for the content to be removed. We’re a government-funded charity – that’s what we can do for you.” The caller is in tears by the end of the conversation, but these seem to be mainly tears of relief. “We can do regular searches to make sure the content has not been reuploaded. We’re here to support you for as long as you need.”

• The Revenge Porn Helpline can be called on 0345 6000 459. If you are aged under 18, call Childline free on 0800 1111. In the UK, the national domestic abuse helpline is 0808 2000 247, or visit the Men’s Advice Line or Women’s Aid. In the US, the domestic violence hotline is 1-800-799-SAFE (7233). In Australia, the national family violence counselling service is on 1800 737 732. Other international helplines may be found via www.befrienders.org.