Elon Musk’s social media platform X is taking a new approach to fighting misinformation: it’s giving artificial intelligence the power to write Community Notes, the fact-checking blurbs that add context to viral posts.

And while humans still get the final say, this shift could change how truth is policed online.

Here’s what’s happening, and why it matters to anyone who scrolls X (formerly Twitter).

What’s actually changing?

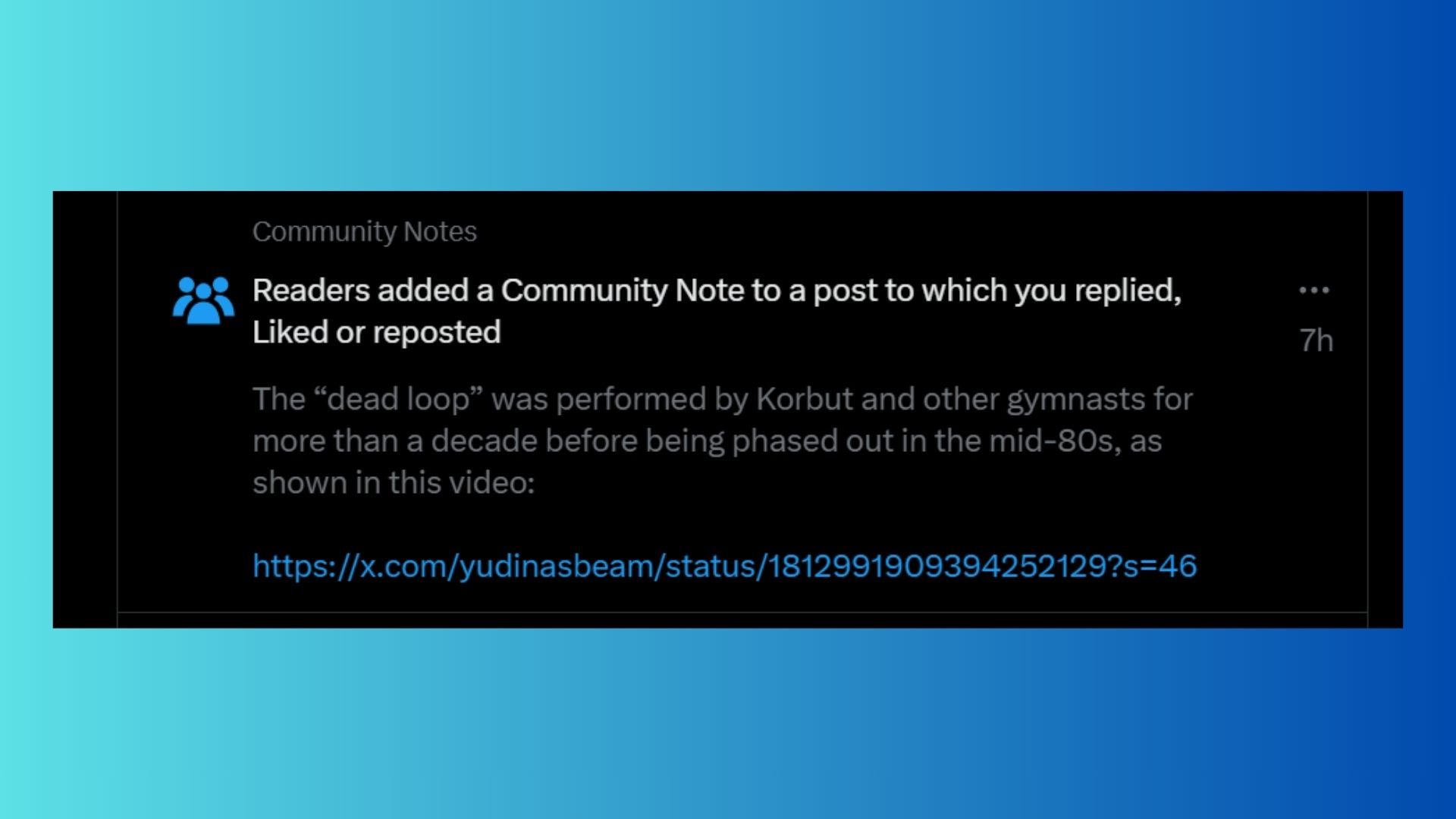

X is currently piloting a program that lets AI bots draft Community Notes. Third-party developers can apply to build these bots, and if the AI passes a series of “practice note” tests, it may be allowed to submit real-time fact-checking content to public posts.

Human review isn’t going away. Before a note appears on a post, it still needs to be rated “helpful” by a diverse group of real users. That’s how X’s Community Notes system has worked from the start, and it remains in place even with bots in the mix (for now).
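
For readers who think in code, here is a toy sketch of that "diverse group" idea. It is not X's actual scoring method, which is more involved. The group labels, the thresholds, and the names `Rating` and `should_publish` are all assumptions made up for illustration. The point it shows is simply that a note needs support from more than one camp of raters, whether a human or a bot wrote it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rating:
    rater_group: str  # hypothetical viewpoint label, e.g. "A" or "B"
    helpful: bool     # True if this rater marked the note "helpful"


def should_publish(ratings, min_groups=2, min_helpful_share=0.6):
    """Toy rule (an assumption, not X's algorithm): show a note only if
    at least `min_groups` distinct rater groups each lean 'helpful'."""
    votes_by_group = {}
    for rating in ratings:
        votes_by_group.setdefault(rating.rater_group, []).append(rating.helpful)

    # Count the groups in which the share of "helpful" votes meets the threshold.
    supportive_groups = [
        group
        for group, votes in votes_by_group.items()
        if sum(votes) / len(votes) >= min_helpful_share
    ]
    return len(supportive_groups) >= min_groups
```

Under this toy rule, a note that only one group likes stays hidden no matter how many votes it collects, which is why a flood of one-sided approval would not be enough on its own.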

The goal is speed and scale. Right now, hundreds of human-written notes are published daily.

But AI could push that number much higher, especially during major news events when misleading posts spread faster than humans can keep up.

Why this move matters

Can we trust AI to handle accuracy? Yes, bots can flag misinformation fast, but generative AI is far from perfect. Language models can hallucinate, misinterpret tone, or misquote sources. That’s why the human voting layer is so important. Still, if the volume of AI-drafted notes overwhelms reviewers, bad information could slip through.

X isn’t the only platform using community-based fact-checking. Reddit, Facebook and TikTok have also explored similar systems.

But automating the writing of those notes is a first, and it raises a bigger question: are we ready to place our trust in bots?

Musk has publicly criticized the system when it clashes with his views. Letting AI into the process raises the stakes: it could supercharge the fight against misinformation, or become a new vector for bias and error.

When does this go live and will it actually work?

The AI Notes feature is still in testing mode, but X says it could roll out later this month.

For this to work, transparency is key, as is a hybrid approach in which humans and bots work together. One of the strengths of Community Notes is that they don’t feel condescending or corporate. AI could change that.

Studies show that Community Notes reduce the spread of misinformation by as much as 60%. But speed has always been a challenge. This hybrid approach (AI for scale, humans for oversight) could strike a new balance.

Bottom line

X is trying something no other major platform has attempted: scaling context with AI, without (fully) removing the human element.

If it succeeds, it could become a new model for how truth is maintained online. If it fails, it could flood the platform with confusing or biased notes.

Either way, this is a glimpse of what information in your feed could look like in the future, and it raises the question of how much you can trust AI.