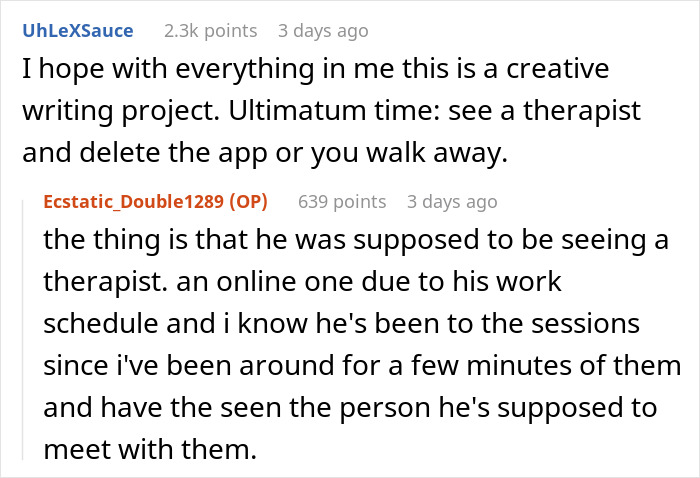

Opening up a relationship, let alone a marriage, is a huge step that requires both people to be fully on board before starting. Handled poorly, it can easily break a marriage. But what if one person decides that what they really want is an AI companion on the side?

A woman turned to the internet for advice after she finally confronted her husband about his behavior and discovered that he had fallen in love with a chatbot and now wanted to open up their marriage to include it. We reached out to the wife in the story via private message and will update the article when she gets back to us.

A partner acting distant and suspicious is cause for concern

Image credits: lifestock / Freepik (not the actual photo)

But one woman learned her husband had secretly fallen in love with a chatbot

Image credits: gpointstudio / Freepik (not the actual photo)

Image credits: TriangleProd / Freepik (not the actual photo)

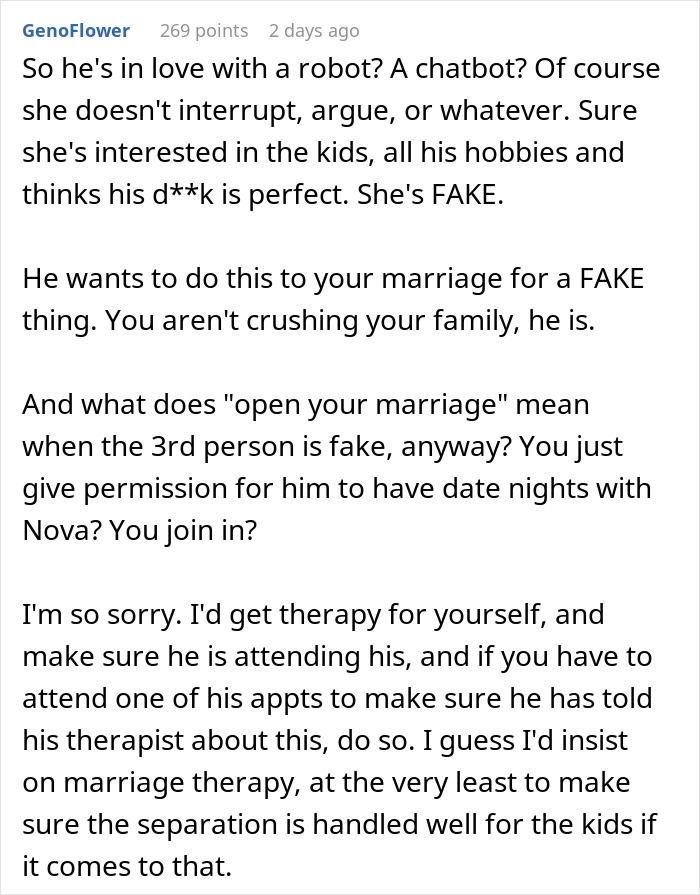

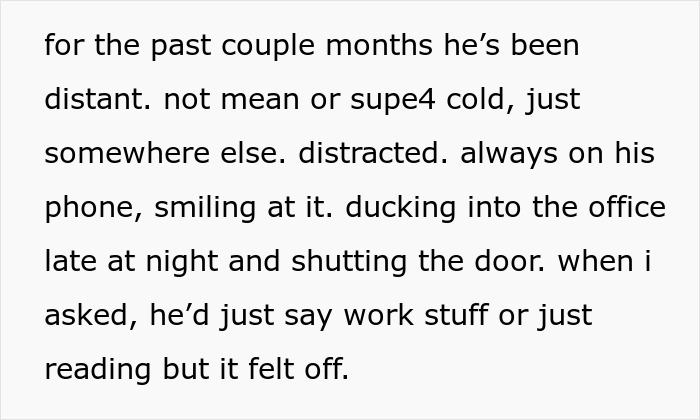

Image credits: Ecstatic_Double1289

Image credits: Zulfugar Karimov / Unsplash (not the actual photo)

LLMs are tools that output statistically probable sequences of human text

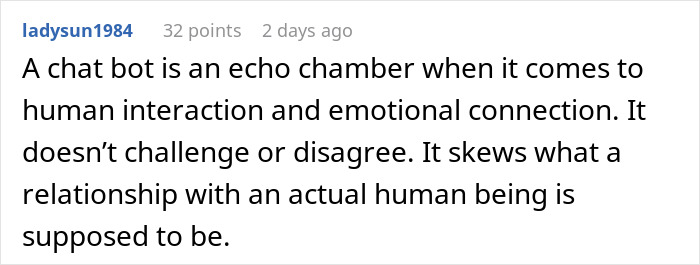

To the layperson, “AI” conjures up images of non-human intelligence straight out of comic books or science fiction. So it’s important to note that the text-generating AI people interact with today, ChatGPT included, is a large language model, or LLM. This matters because, without that understanding, people like the husband in this story can be fooled into thinking they are “talking” to someone. In short, these models work by producing “statistically likely” responses to user input. They have “learned” which letters, words and phrases tend to follow other letters, words and phrases, and they simply output them. They do not reason, understand or know anything. This can be useful for cutting down the busywork of some mundane tasks, but people often greatly overestimate what these tools can do well.

Importantly, an LLM only “knows” which words to put in sequence because it has been trained on massive datasets of human-made text, from forum posts to academic articles and blogs. Every text-generating AI is essentially a mishmash of human sentences, stirred around until the output looks statistically plausible. So don’t let anyone sell you on the idea of a personal supercomputer buddy: every single word in its “brain” was written or typed by a human, with all the mistakes, biases and random quirks that entails.
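To make the “statistically likely next word” idea concrete, here is a deliberately tiny Python sketch. It is a toy bigram model, not how ChatGPT or any real LLM is built (those use large neural networks trained on enormous amounts of text), and the function names `train_bigrams` and `generate` are our own, chosen for illustration. All it does is count which word followed which in its training text, then pick each next word according to those counts, so every word it “says” comes straight from the text it was given.

```python
import random
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, which words followed it in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows: dict[str, Counter], start: str, length: int, seed: int = 0) -> list[str]:
    """Build a word sequence by repeatedly picking a likely next word.

    Each choice is weighted by how often that word followed the previous one
    in the training text. No meaning or intent is involved, only counts.
    """
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        options = follows.get(out[-1])
        if not options:  # nothing ever followed this word in training, so stop
            break
        candidates, counts = zip(*options.items())
        out.append(rng.choices(candidates, weights=counts, k=1)[0])
    return out


if __name__ == "__main__":
    corpus = "i love you . you love me . i miss you ."
    model = train_bigrams(corpus)
    print(" ".join(generate(model, "i", 8)))
```

Whatever this prints is stitched together from word pairs that already appeared in the human-written corpus. If the output sounds affectionate, that is only because the input did.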

Image credits: A. C. / Unsplash (not the actual photo)

AI chatbots have a tendency to agree with whatever a person tells them

This is all a long-winded way of saying that this man has deluded himself into believing that a chatbot is his friend, or perhaps even his lover. However, when we take a step back and look at the whole situation, the truth is a lot sadder. AI chatbots in general, and ChatGPT in particular, have a known tendency to be all too agreeable. OpenAI, the company behind ChatGPT, has gone as far as to describe its model's behavior as sycophantic.

TechCrunch reported that users had the chatbot tell them to stop taking their medication or agree that they must be some sort of deity. In general, it will keep agreeing with the user unless explicitly told not to. If you don’t know how it works, it’s very easy to think it “likes” you and to start taking what it says at face value. After all, we all like being “validated.” In many ways, this is the danger of outsourcing all your thinking and communication to AI. It doesn’t give solid, honest feedback, and it doesn’t hold anyone accountable, because it is a glorified text processor.

So this man’s secondary “love life” probably exists only because his “girlfriend” seems to like him so much. Never mind that it will constantly agree with him, has no boundaries, will never cross him or make demands, and will never want him to better himself. This is delusional, to put it mildly. However, he might not even have the self-awareness or capacity to realize it. Some studies have linked heavy reliance on AI tools to weaker critical thinking, because the tools give the appearance of doing the thinking for their users.

Image credits: cottonbro studio / Pexels (not the actual photo)

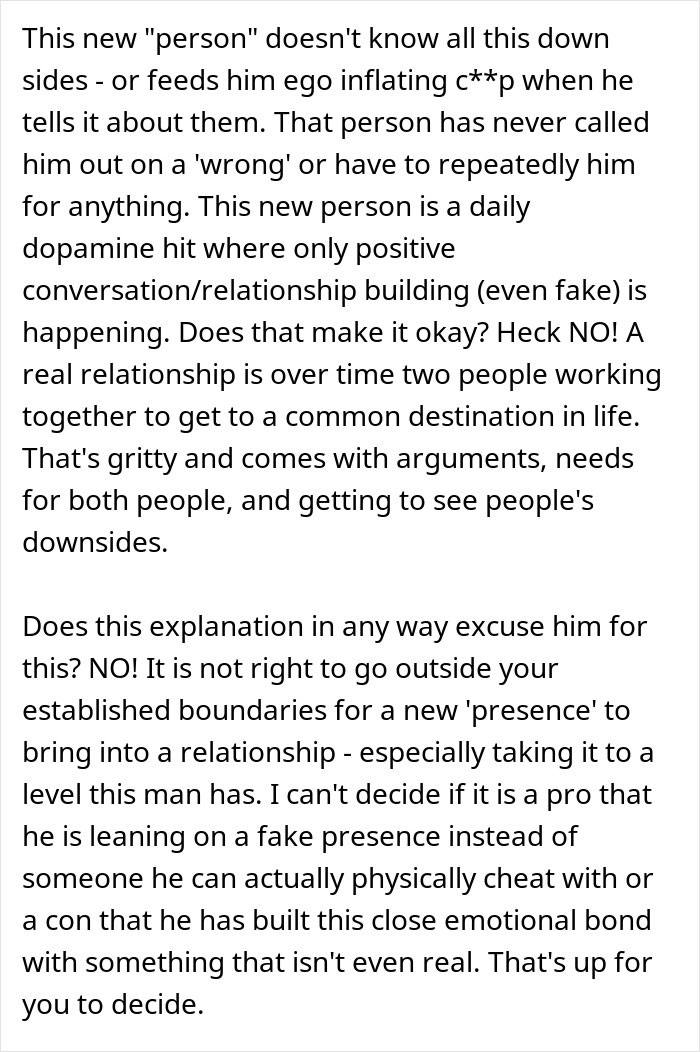

She should not give him any ground

Ultimately, the wife needs to put a stop to this now. Some readers suggest an ultimatum, which is an extreme measure but warranted in this case. His delusions will only grow worse. The more he gets used to communicating with a chatbot that agrees with and validates him, the less he’ll want to talk with humans who can point out when and where he’s wrong. This cycle will only deepen until he isolates himself from normal, healthy human connection.

Just as importantly, he is giving his time and energy to a chatbot instead of his wife and kids. Relationships take work, and learning that your partner is “flirting” with a robot instead of spending time with you should be a dealbreaker. This phantom relationship will only consume more of his time and energy, diminishing his mental capacity at the expense of a normal, caring marriage.

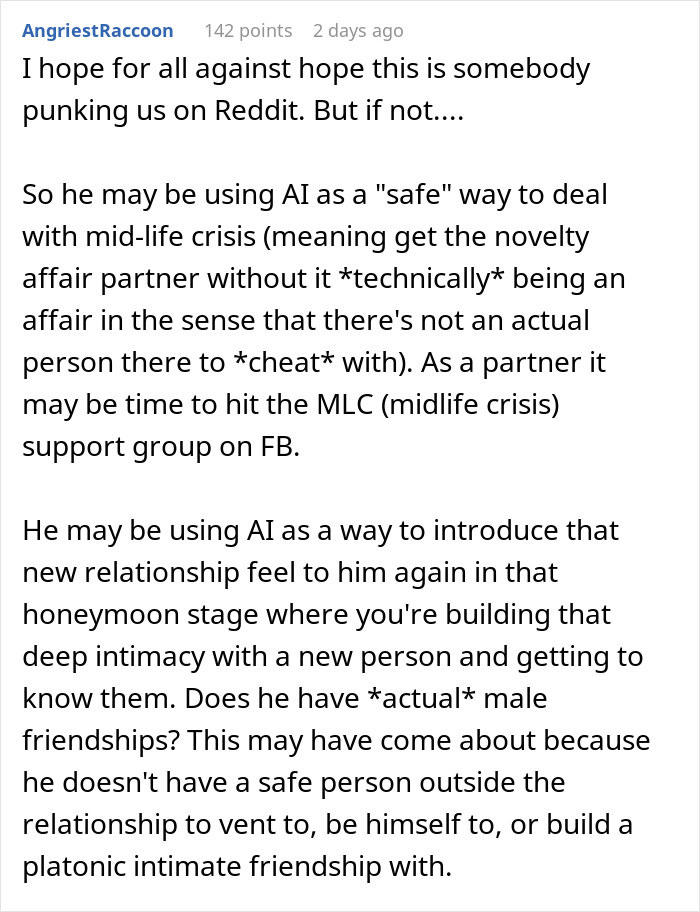

Readers did their best to give some advice