Another day, another creative AI tool to save us time and effort and to protect us from the ordeal of having to interact with children. Google's latest trick is its Gemini AI storybook generator, which the tech giant hopes will help parents get their kids off to sleep at night.

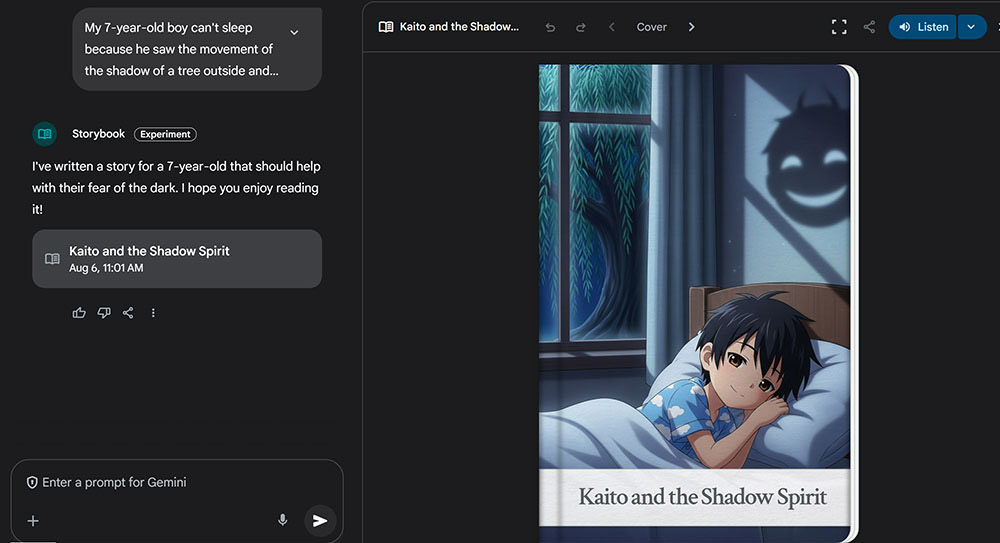

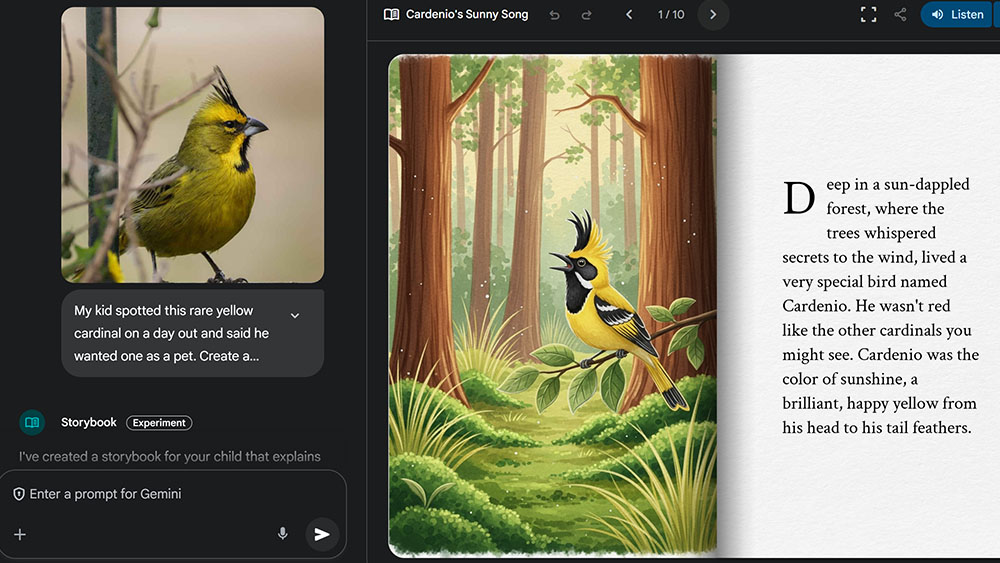

You give the Gemini chatbot a text prompt, and it will create a 10-page storybook complete with art. But will the combination of AI-generated text and images provide sweet dreams or lead us to a dystopian nightmare? I had to try it out.

The Gemini AI Storybook tool launched today within Google's AI bot for desktop and mobile. You can type in an idea for a story, requesting a specific art style if desired. There's also the option to upload your own images or documents as references. In a few seconds, Gemini will generate the storybook, and it can even read it aloud so you don't have to.

According to Google, the advantage of the experiment, other than providing a potentially endless supply of bedtime stories in over 45 languages for free, is personalisation. You can generate stories about things that have happened during the day to reinforce personal memories or lessons. The examples Google provides include generating a story to reassure a child who's scared to stay at his grandma's house.

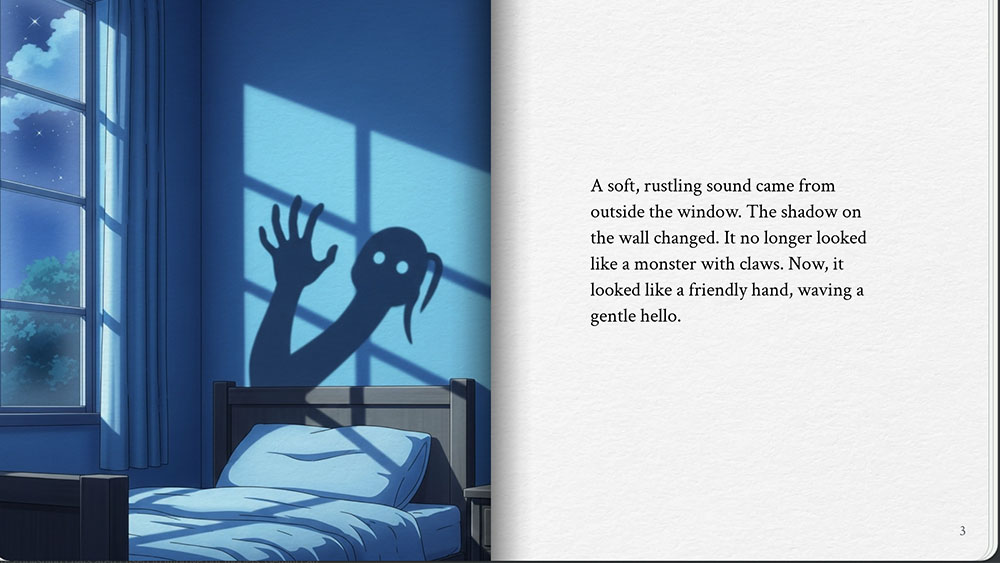

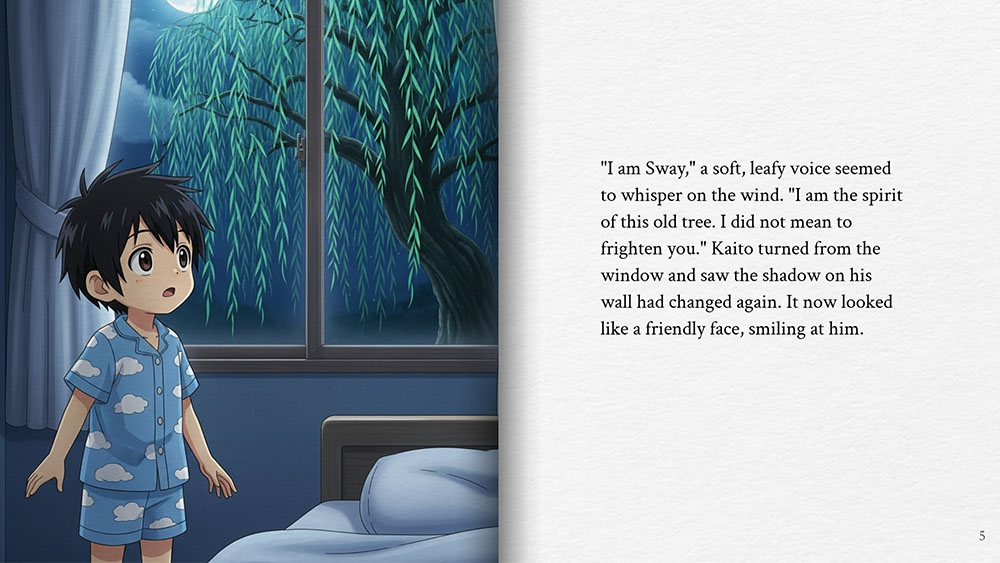

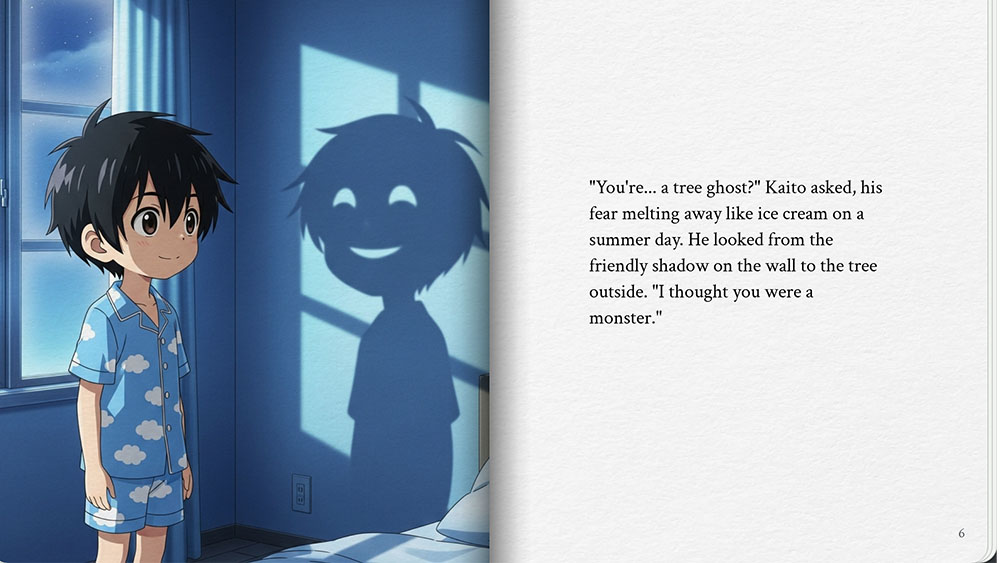

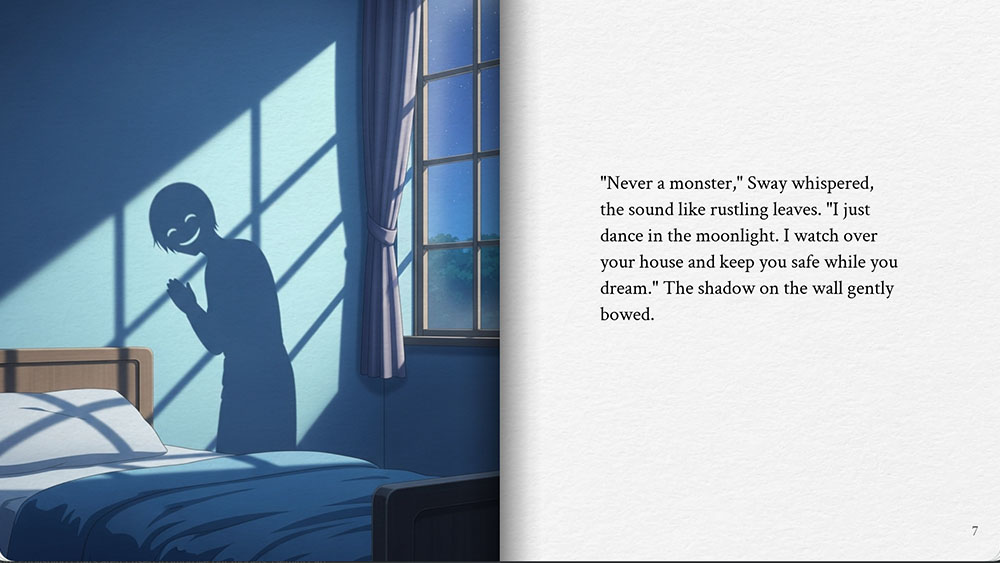

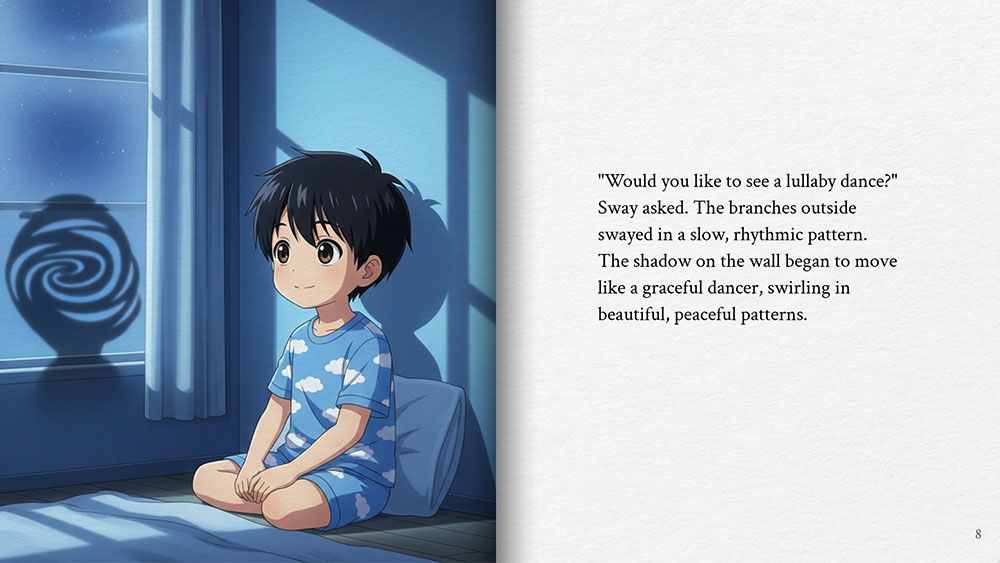

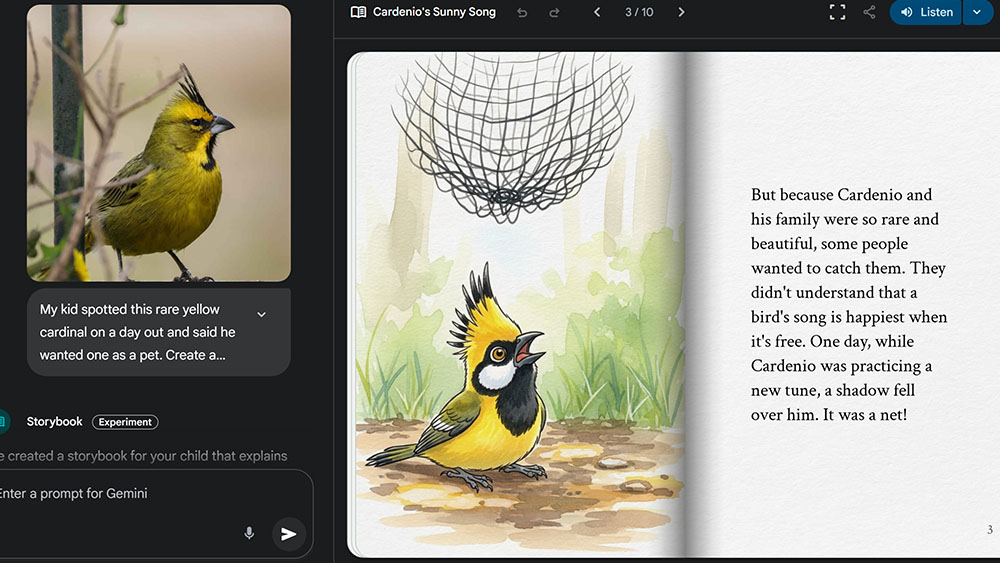

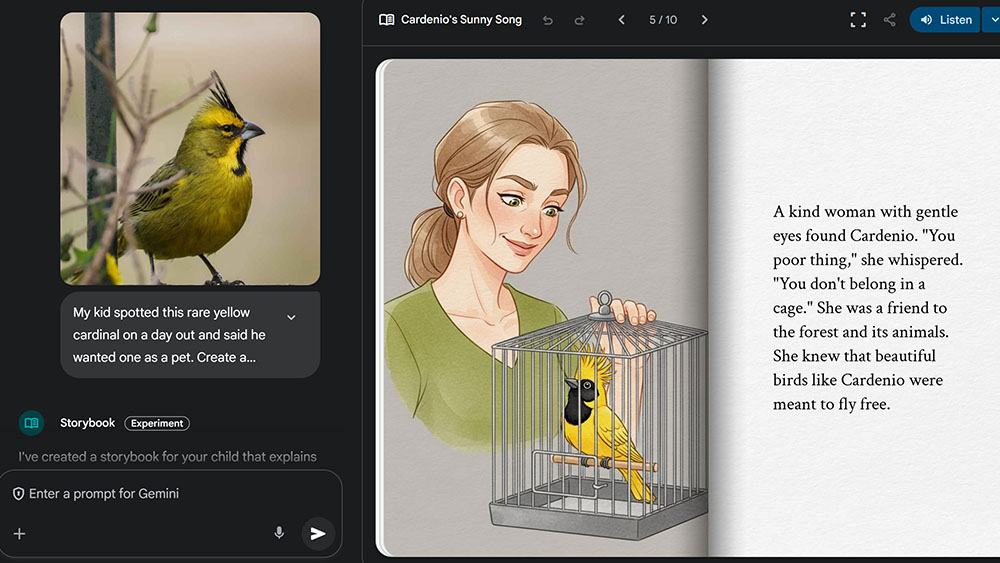

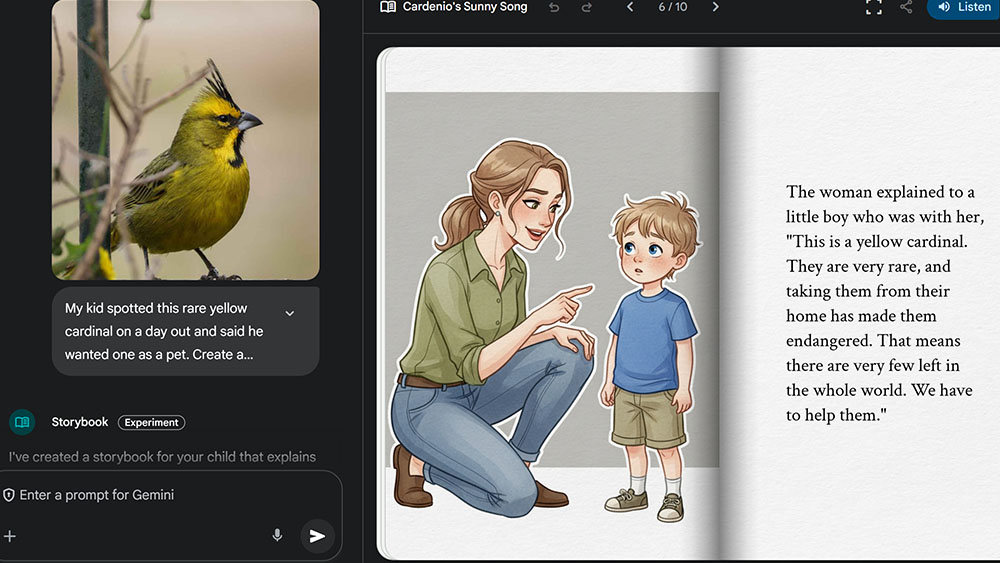

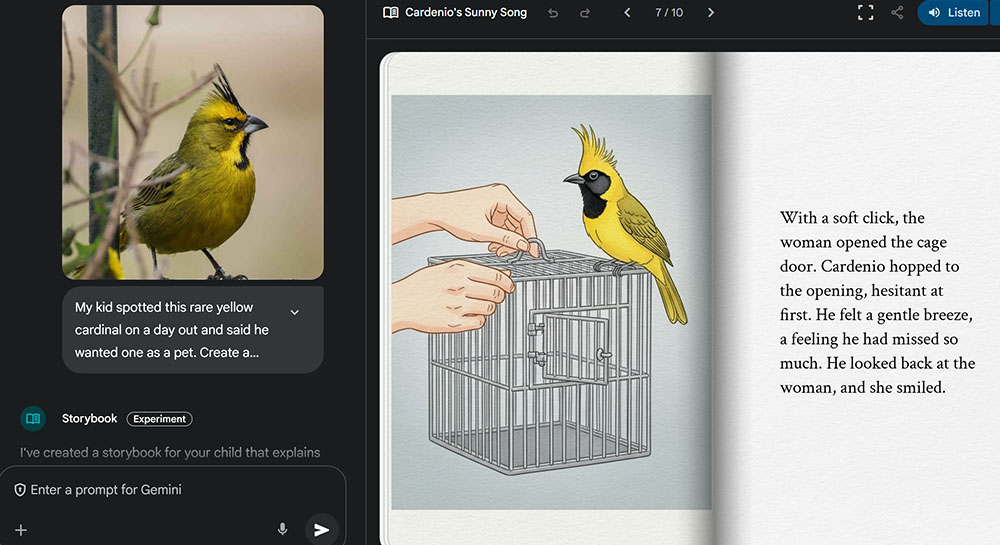

I tested it out with three requests: a story about a friendly tree ghost to calm a kid who's scared of a shadow, a story to teach kids that bees are beneficial and a story that teaches that rare yellow cardinals shouldn't be kept as pets. In each case, Gemini did manage to generate a story that made some sense and adhered to the prompt with approximately the art style I asked for.

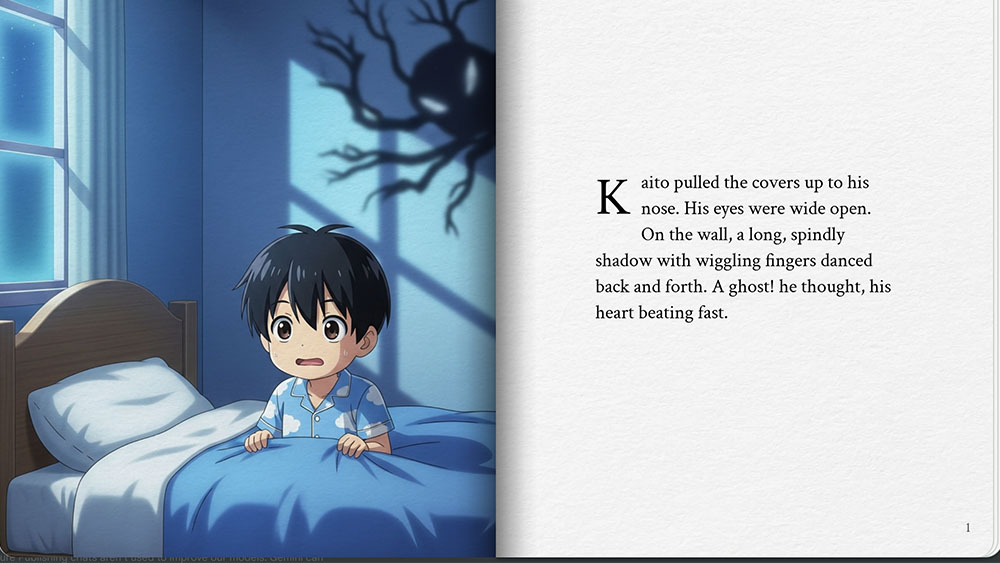

On the downside, the stories were fairly flat and soulless. The characters often feel impersonal ("The woman explained to a little boy who was with her" – who is this woman, and who is this random boy who's with her?). And the language is formulaic, with an annoyingly repetitive use of adjectives and trite similes.

More problematic were the inconsistencies in the imagery generated. Characters sometimes look different from one scene to the next, or their actions don't coincide with the text – or they simply aren't there. The boy in my ghost story randomly went missing from his bed in one scene. In another, it appeared that the ghost in the story was now his own shadow rather than the tree's shadow.

In Google's own video to promote the Gemini Storybook, a woman appears to be using a wrench as a hammer (see the video below 18 seconds in).

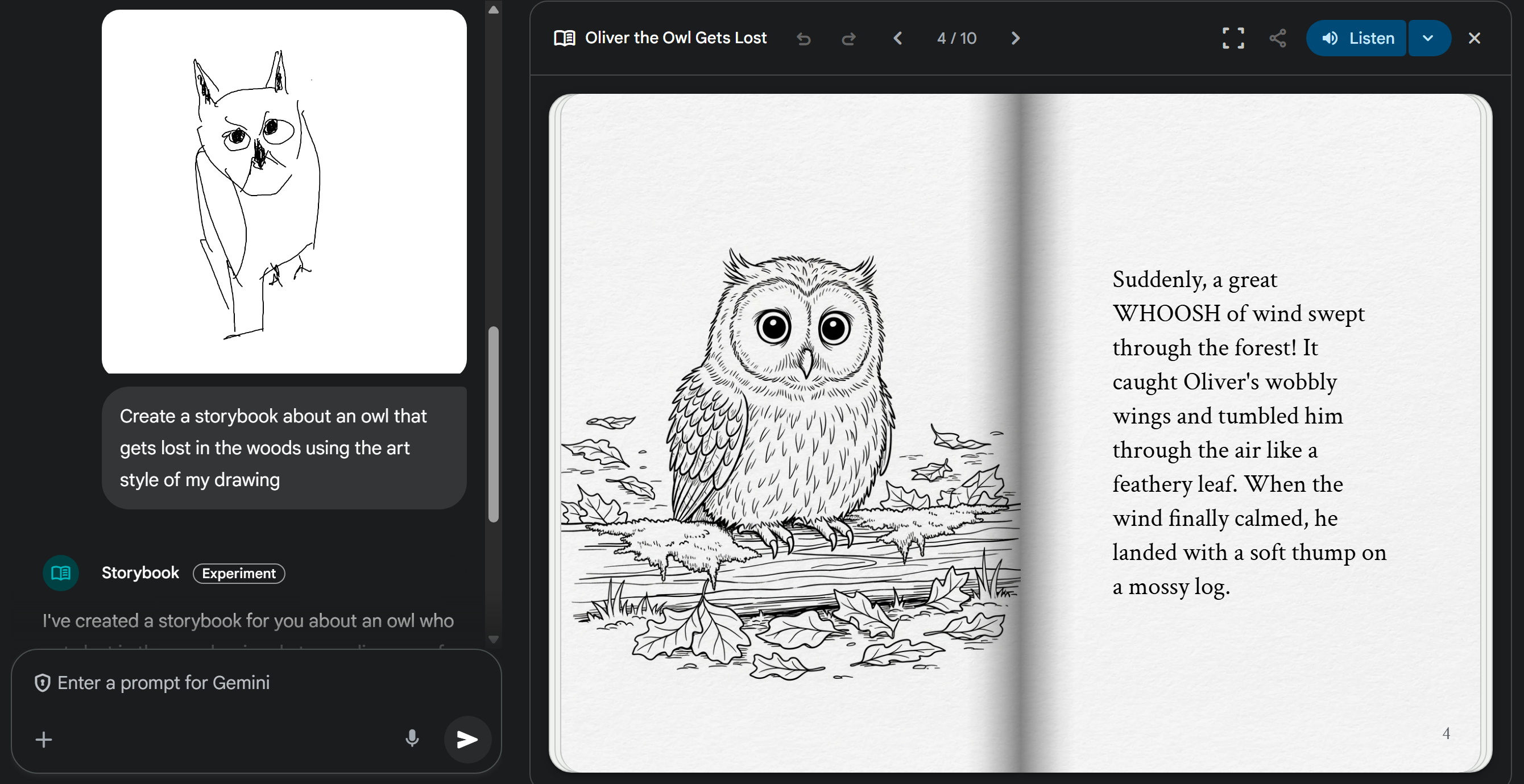

I thought it might be nice if I could use the bot to upload a drawing of my own and generate a storybook based on the same art style. That could be fun for turning kids' own pictures into stories. But look at what it did to my sketch of Oliver the South American great horned owl! Very much a downgrade, I'm sure you'll agree.

But perhaps the biggest problem with AI Storybooks is Google's understanding of the point of bedtime reading, which it seems to see as a) a chore that needs to be dealt with as quickly as possible, and b) something that needs to be customised to each child with the sole purpose of teaching life lessons.

Part of the fun of bedtime reading for kids is discovering books with unexpected stories or art styles that capture their imaginations. When that happens, kids want to see and hear them again and again. Such stories are universal and can gain sentimental value despite not having been custom-made.

This allows the stories to be shared and to become part of the collective consciousness, and it teaches kids that the world doesn't entirely revolve around them. Should we really be customising kids' education and entertainment to make them the star of every story they read or watch? As with other AI products such as Showrunner, the supposed 'Netflix of AI', it seems we're heading towards an atomised culture devoid of shared experiences.

The fact that Gemini can read the book for you suggests the aim is to allow parents to type in a prompt, hand over the iPad and leave kids to it. But would you risk giving a kid an AI-generated story when you don't know what hallucination the model might come up with? Let me know what you think in the comments section below.