There used to be a time when newspaper journalists had to scroll through countless microfiche reels and dig through clip files to find old articles for background on their stories.

Electronic archives, along with the internet, have made our lives easier and restructured the way we disseminate the news and how audiences consume it.

That’s how it should work. Technology should help media workers do their jobs, not impersonate them or actually carry out the tasks of a real human hired to dig for facts.

This brings us to artificial intelligence, or AI, which has crept into some newsrooms. Most media companies have issued strict guidelines prohibiting the tool from being used for publishable content and images. The few that have — unfortunately, in our view — gone a step further by using AI to pen copy at least clearly mark those write-ups as having been produced by a non-human.

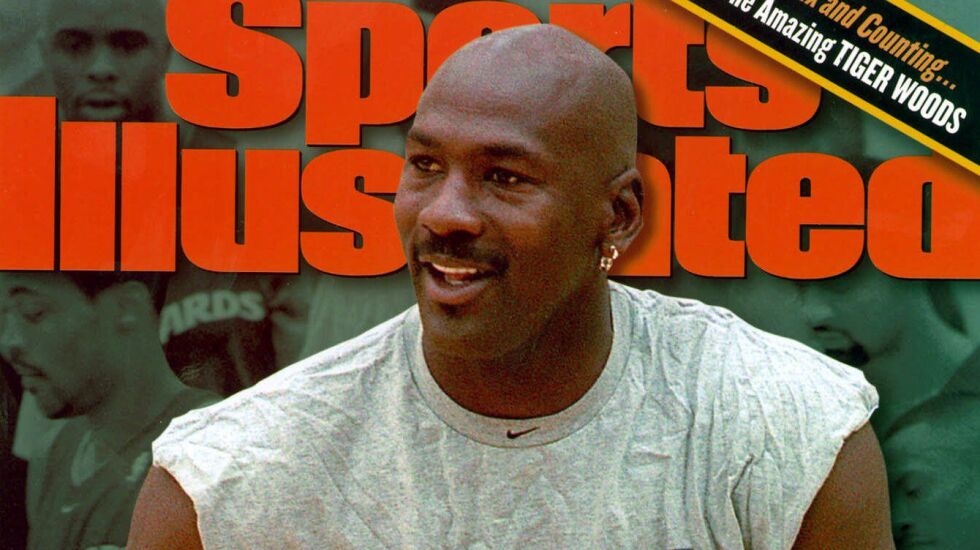

No such disclaimers were in sight when Sports Illustrated fumbled the ball with AI. Once considered a media behemoth in the athletic world, SI recently featured product reviews on its website — with the bylines of reporters who don’t exist.

Talk about foul play, for readers and for journalism as a profession.

The accompanying articles were also “AI-generated,” a staffer told Futurism, a news website dedicated to science and technology.

Sports Illustrated, which is mostly online and churns out a print magazine once a month, maintained the features were written by living, breathing mortals.

AdVon Commerce, the third-party company hired to supply the licensed content, had its employees “use a pen or pseudo name” to protect their privacy, a spokesperson for the magazine’s publisher, The Arena Group, explained in a statement. If true, that would be especially galling, given that journalists put their real names and bylines out there for public consumption every day. It’s part of the job.

Missing from the statement was any explanation for the use of AI-generated headshots, advertised online as a “neutral white young-adult male” and “joyful Asian young-adult female.”

Even if the stories themselves contained no false information, no matter who or what wrote them, there is no question that Drew Ortiz, the “neutral white guy,” and Sora Tanaka, the “joyful Asian,” aren’t real people. Their attached “biographies” also reeked of hardcore phoniness.

When you “create somebody from the ether,” you don’t have to pay them, as the Sun-Times’ Rick Telander pointed out last week in his column about the controversy.

Using names like Ortiz (even though it was assigned to a Caucasian bot) and Tanaka also creates an illusion of diversity, leading readers to believe Sports Illustrated is serious about hiring people of color.

The smiling faces of these fictional scribes have since been scrubbed from SI’s website, and The Arena Group has severed ties with AdVon and parted ways with two of its senior executives.

No ceding ground to AI

Newsrooms will always have to adapt to new trends or risk extinction. The essence of what a journalist does, however, will never change. That torch can never be handed over to AI. Whether it’s asking tough questions or answering the basic 5Ws (who, what, where, when and why), no technology will be as adept or qualified at the job as a newswoman or newsman.

AI knows it, too. “AI should not be used in journalism,” opined an experimental St. Louis Post-Dispatch editorial created by Microsoft’s Bing Chat AI program over the fall.

“... it can undermine the credibility and trustworthiness of news. AI can generate fake news, manipulate facts, and spread misinformation.”

That’s the truth.

Over the summer, Gannett “paused” its use of LedeAI for some of its high school sports coverage because of “errors, unwanted repetition and/or awkward phrasing,” problems that LedeAI CEO Jay Allred acknowledged.

We at the Sun-Times Editorial Board concede reporters aren’t perfect. They can make mistakes, too, and when those happen, corrections are issued immediately in print and online.

Bad apples also exist. Fox Sports reporter Charissa Thompson copped to fabricating quotes in her NFL sideline reports, tarnishing the reputation of countless hardworking sports journalists who would never do so. Such behavior is especially egregious at a time when most journalists champion ethics and accuracy, yet are constantly accused of spreading misinformation by politicians and some members of the public.

Throwing AI into the mix will only embolden those who want legitimate news outlets to crumble, and a weakened press erodes an essential pillar of democracy.

When AI spits out inaccuracies, it’s referred to as a “hallucination.”

Most reporters aren’t seeing things when they scribble down notes or type on their laptops.

Newsroom leaders dabbling with AI shouldn’t lose sight of its limitations. It’s delusional to think tech can ever replace real journalists.

The Sun-Times welcomes letters to the editor and op-eds.