If news about Photoshop tends to pique your interest, you may have seen mention in the past of Adobe Max. This is a slightly odd 'creative conference' in which the software giant invites people to spend around £100 to hear talks on the latest Creative Cloud features from Adobe's experts, as well as a few non-Adobe speakers.

The company will typically time the global release of new software features to coincide with the event, and that's exactly what's just happened this morning.

I'm at Adobe Max London at Evolution in Battersea, along with 1,500 other people, and the company has just announced a slew of features for Photoshop. These are all contained within the new version of its generative AI tool, Firefly – which it's catchily dubbed Firefly Image 3 Model.

So why have AI features become the main obsession for Adobe, what can they offer photographers, and what are the newest additions to this toolkit?

What's Firefly doing in Photoshop?

First, a little context for the uninitiated. Standard generative AI tools such as Midjourney and DALL-E 2 are basically about generating an image from scratch using a text prompt: something like "cat dressed as a fireman holding a hose and spraying water onto a burning house".

The result will generally look like a bad cartoon unless you add a term like 'photorealistic', in which case it'll look a bit more like a photo. But while the quality of outputs is improving all the time, most of them still look pretty bad. So while generative AI can be useful for things like storyboarding and ideation, in my opinion, it's not going to replace photographers any time soon.

What's different about Firefly in Photoshop, though, is that it's not about replacing photographers, but helping photographers improve their photos, without a lot of time-consuming effort. So for example, you could select an area of an image and use the Generative Fill tool to add in a tree.

It usually does a pretty good job. And it even does this ethically, compared to other tools, because the AI is trained only on Adobe's own stock images, where the creator has agreed for their work to be scraped. For more details on the practicalities, see How to make Firefly work for you.

But are these AI tools any good? Well, it seems Photoshop users think they are. As Adobe's Deepa Subramaniam revealed to me last night, the two features most often used in Photoshop are AI tools: Generative Fill and the Contextual Bar, which is a taskbar that anticipates the next most useful action in your workflow. Yes, you read that right. They are now more popular than the Crop tool, which has been relegated to third on the list.

In other words, you may like, loathe or feel ambivalent about the use of generative AI tools in Photoshop. But in reality, millions of people are using them daily. So it's at least worth knowing what they are.

New for 2024: the Reference Image tool

So what's new for Firefly in Photoshop? Well, the headline new feature is Generative Fill with Reference Image. As the name suggests, this allows you to give Firefly a steer on how it generates AI images for you, by uploading a reference image.

Last night Terry White, principal worldwide design and photography evangelist for Adobe, demonstrated an example of how this might work for you in practice. He uploaded a photo of himself on stage wearing a jacket. He didn't like how the jacket looked, so he wanted the AI to replace it with another one.

As a reference image, he uploaded a stock image of an entirely different jacket. And then, without any clicking or text prompting required, Firefly replaced the jacket in the first photo, in an entirely convincing way. Yes, you had to wait a couple of seconds for it to do so, but it was definitely worth the wait.

Here's another example that Adobe just shared in a press release.

Anyone who's spent ages writing a long, complicated prompt and still not getting the right results – when you know exactly what you want the image to look like – will appreciate this addition to the generative capabilities of Photoshop. In this case, a picture really is worth a thousand words.

Because Adobe is taking an 'ethical' stance to image generation, it makes it clear in its terms and conditions that you need to have permission to use the reference image in question. Whether or not people actually stick to this, of course, is another matter.

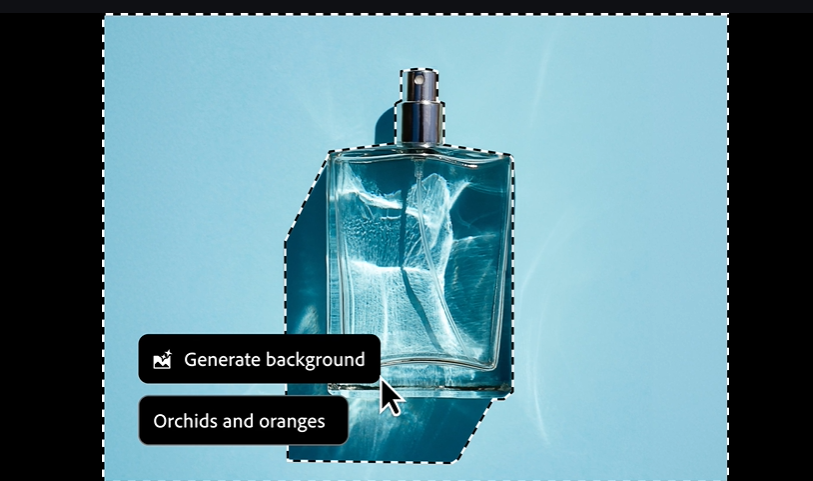

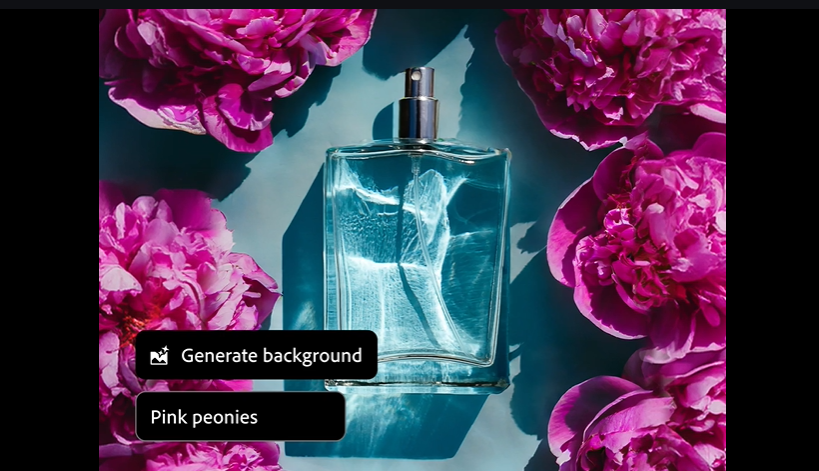

New for 2024: Generate Background

Another headline feature is Generate Background, and it does exactly what it says on the label. Last night, Terry showed me a photograph of his dog being cute in his backyard, and then used the new feature to effortlessly switch between backgrounds. It really did all happen in a single click, and although I didn't examine the images with a magnifying glass, they all looked pretty convincing to me: I certainly wouldn't have been able to tell which was the original and which was the edited version at first glance.

"You wouldn't believe how many people come to Photoshop every day to change a background," says Terry. "These new tools make it so much easier." Here are a few more examples shared by Adobe today.

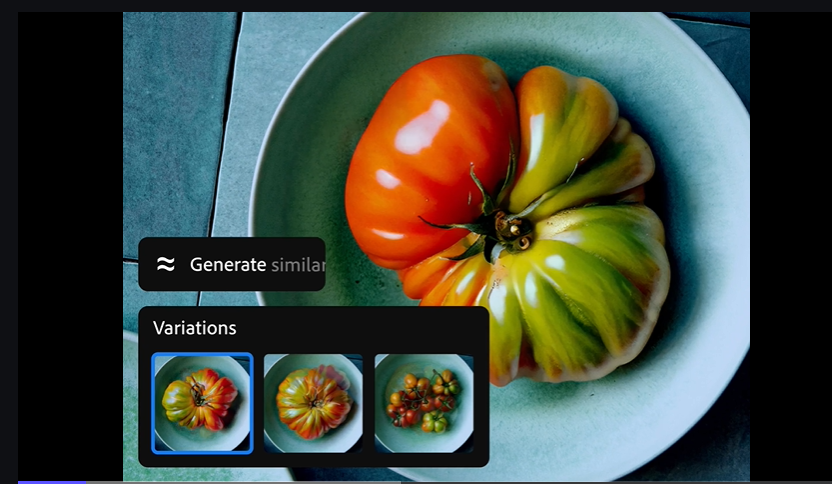

New for 2024: Generate Similar

Generate Similar is another variation on this theme. This time you get to upload an image (which may be your own, a stock image or another AI image) and ask Photoshop to generate similar images. Again, it's pretty quick and straightforward to use, and it's quite fun to play around with, even if it's just to give you fresh ideas.

Other new additions to Photoshop include Adjustment Brush, which enables you to easily apply non-destructive adjustments to specific portions of images, pretty much like you can already do in Lightroom and Adobe Camera Raw.

Meanwhile, the Enhance Detail feature is designed to fine-tune images, enhancing their sharpness and clarity. An improved Font Browser makes it easier to get real-time access to the more than 25,000 fonts in Adobe's cloud library, without leaving Photoshop. And more Adjustment Presets have been added to Photoshop. (In case you didn't know, they enable you to easily change the appearance of images with filters that apply effects in a single click. You can create and save customized presets as well.)

All these new features are available in beta form in Photoshop today, globally. You can also access the new Firefly model via the Firefly web application at firefly.adobe.com. Adobe offers both free and paid plans that include an allocation of Generative Credits for the use of generative AI features powered by Firefly in Adobe applications.