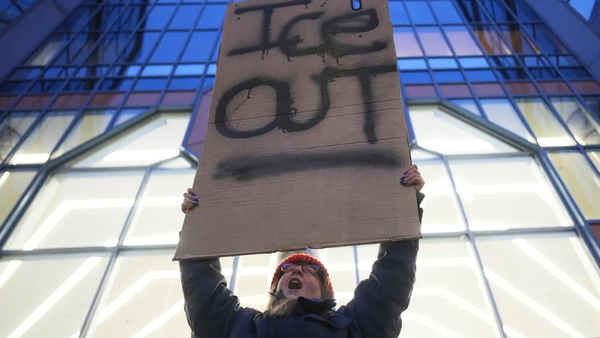

The line to enter the 985-seat basement auditorium at University College London where OpenAI cofounder and CEO Sam Altman is about to speak stretches out the door, snakes up several flights of stairs, carries on into the street, and then meanders most of the way down a city block. It inches forward, past a half-dozen young men holding signs calling for OpenAI to abandon efforts to develop artificial general intelligence—or A.I. systems that are as capable as humans at most cognitive tasks. One protester, speaking into a megaphone, accuses Altman of having a Messiah complex and risking the destruction of humanity for the sake of his ego.

Messiah might be taking it a bit far. But inside the hall, Altman receives a rock star reception. After his talk, he is mobbed by admirers asking him to pose for selfies and soliciting advice on the best way for a startup to build a “moat.” “Is this normal?” one incredulous reporter asks an OpenAI press handler as we stand in the tight scrum around Altman. “It’s been like this pretty much everywhere we’ve been on this trip,” the spokesperson says.

Altman is currently on an OpenAI “world tour,” visiting cities from Rio and Lagos to Berlin and Tokyo to talk to entrepreneurs, developers, and students about OpenAI’s technology and the potential impact of A.I. more broadly. Altman has done this kind of world trip before. But this year, after the viral popularity of the A.I.-powered chatbot ChatGPT, which has become the fastest-growing consumer software product in history, the tour has the feeling of a victory lap. Altman is also meeting with key government leaders. Following his UCL appearance, he was off to meet U.K. Prime Minister Rishi Sunak for dinner, and he will be meeting with European Union officials in Brussels.

What did we learn from Altman’s talk? Among other things, that he credits Elon Musk with convincing him of the importance of deep tech investing, that he thinks advanced A.I. will reduce global inequality, that he equates educators’ fears of OpenAI’s ChatGPT with earlier generations’ hand-wringing over the calculator, and that he has no interest in living on Mars.

Altman, who has called on government to regulate A.I. in testimony before the U.S. Senate and recently coauthored a blog post calling for the creation of an organization like the International Atomic Energy Agency to police the development of advanced A.I. systems globally, said that regulators should strike a balance between America’s traditional laissez-faire approach to regulating new technologies and Europe’s more proactive stance. He said that he wants to see the open source development of A.I. thrive. “There’s this call to stop the open source movement that I think would be a real shame,” he said. But he warned that “if someone does crack the code and builds a superintelligence, however you want to define that, probably some global rules on that are appropriate.”

“We should treat this at least as seriously as we treat nuclear material, for the biggest scale systems that could give birth to superintelligence,” Altman said.

The OpenAI CEO also warned about the ease of churning out massive amounts of misinformation thanks to technology like his own company’s ChatGPT bot and DALL-E text-to-image tool. More worrisome to Altman than generative A.I. being used to scale up existing disinformation campaigns is the tech’s potential to create individually tailored and targeted disinformation. OpenAI and others developing proprietary A.I. models could build better guardrails against such activity, he noted, but he said the effort could be undermined by open source development, which allows users to modify software and remove guardrails. And while regulation “could help some,” Altman said that people will need to become much more critical consumers of information, comparing the current moment to the period when Adobe Photoshop was first released and people were concerned about digitally edited photographs. “The same thing will happen with these new technologies,” he said. “But the sooner we can educate people about it, because the emotional resonance is going to be so much higher, I think the better.”

Altman posited a more optimistic vision of A.I. than he has sometimes suggested in the past. While some have postulated that generative A.I. systems will make global inequality worse by depressing wages for average workers or causing mass unemployment, Altman said he thought the opposite would be true. He noted that enhancing economic growth and productivity globally ought to lift people out of poverty and create new opportunities. “I’m excited that this technology can, like, bring the missing productivity gains of the last few decades back, and more than catch up,” he said. He restated his basic thesis: that the two “limiting reagents” of the world are the cost of intelligence and the cost of energy. If those two become dramatically less expensive, he said, it ought to help poorer people more than rich people. “This technology will lift all of the world up,” he said.

He also said he thought there were versions of A.I. superintelligence, a future technology that some, including Altman in the past, have said could pose severe dangers to all of humanity, that can be controlled. “The way I used to think about heading towards superintelligence is that we were going to build this one, extremely capable system,” he said, noting that such a system would be inherently very dangerous. “I think we now see a path where we very much build these tools that get more and more powerful, and there are billions of copies, trillions of copies being used in the world, helping individual people be way more effective, capable of doing way more; the amount of output that one person can have can dramatically increase. And where the superintelligence emerges is not just the capability of our biggest single neural network but all of the new science we are discovering, all of the new things we’re creating.”

In response to a question about what he learned from various mentors, Altman cited Elon Musk. “Certainly learning from Elon about what is just, like, possible to do and that you don’t need to accept that, like, hard R&D and hard technology is not something you ignore, that’s been super valuable,” he said.

He also fielded a question about whether he thought A.I. could help human settlement of Mars. “Look, I have no desire to go live on Mars, it sounds horrible,” he said. “But I’m happy other people do.” He said robots should be sent to Mars first to help terraform the planet to make it more hospitable for human habitation.

Outside the auditorium, the protesters kept up their chants against the OpenAI CEO. But they also paused to chat thoughtfully with curious attendees who stopped by to ask them about their protest.

“What we’re trying to do is raise awareness that A.I. does pose these threats and risks to humanity right now in terms of jobs and the economy, bias, misinformation, societal polarization, and ossification, but also slightly longer term, but not really long term, more existential threats,” said Alistair Stewart, a 27-year-old graduate student in political science and ethics at UCL who helped organize the protests.

Stewart cited a recent survey of A.I. experts that found 48% of them thought there was a 10% or greater chance of human extinction or other grave threats from advanced A.I. systems. He said that he and others protesting Altman’s appearance were calling for a pause in the development of A.I. systems more powerful than OpenAI’s GPT-4 large language model until researchers had “solved alignment”—a phrase that basically means figuring out a way to prevent a future superintelligent A.I. system from taking actions that would cause harm to human civilization.

That call for a pause echoes the one made by thousands of signatories of an open letter, including Musk and a number of well-known A.I. researchers and entrepreneurs, that was published by the Future of Life Institute in late March.

Stewart said his group wanted to raise public awareness of the threat posed by A.I. so that the public could pressure politicians to take action and regulate the technology. Earlier this week, protesters from a group calling itself Pause AI also began picketing the London offices of Google DeepMind, another advanced A.I. research lab. Stewart said his group was not affiliated with Pause AI, although the two groups shared many of the same goals.