I've been tinkering with Ollama in recent months, as it's probably one of the best ways for normal folks like me to run LLMs on our local machines.

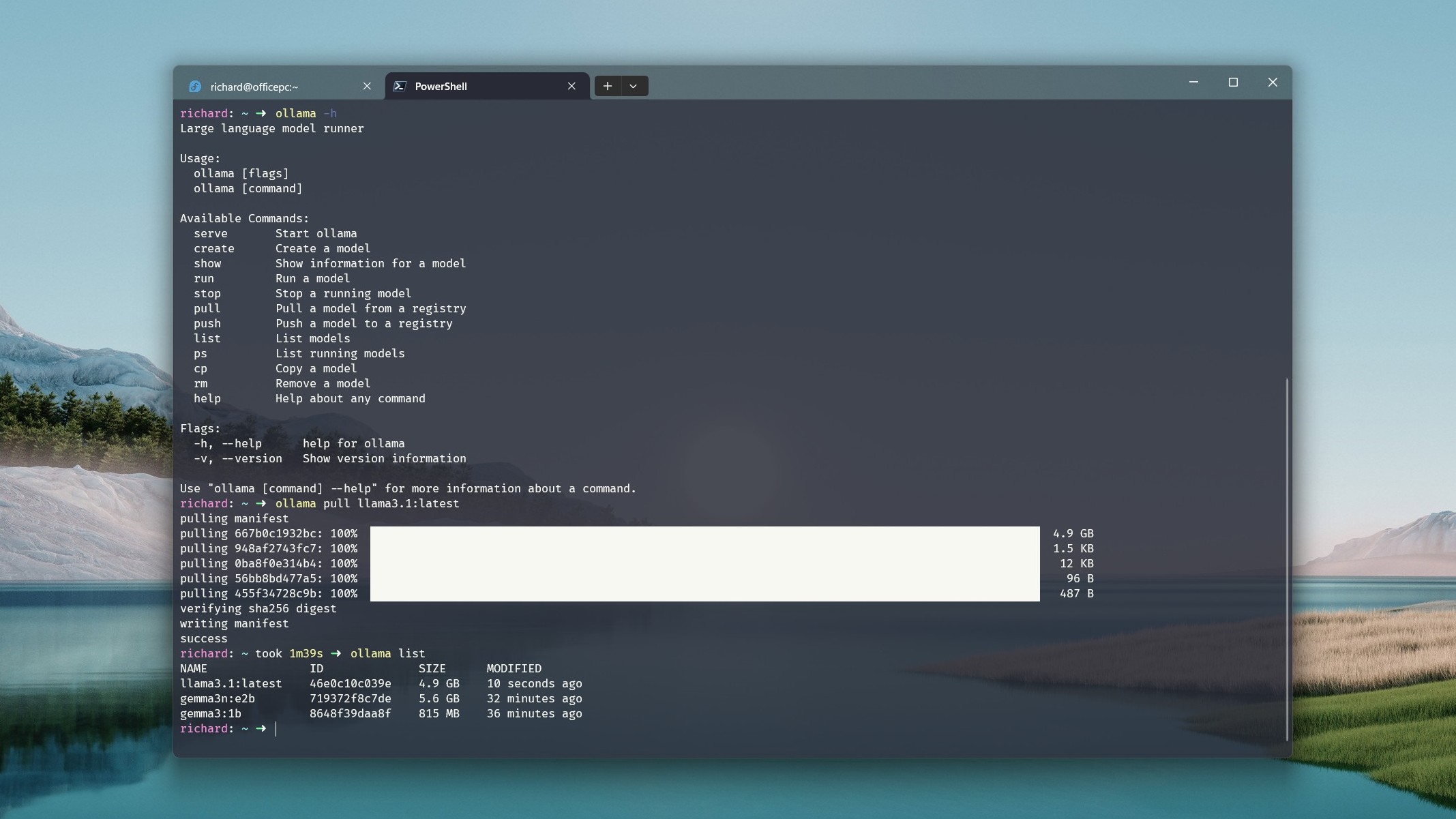

Installing and using Ollama has never been particularly difficult, but it has required living inside a terminal window. Until now.

Ollama now has a shiny GUI for Windows in the form of an official app. It's installed alongside the CLI components when you set it up, and it removes much of the need to go into a terminal to get your LLM on.

If the terminal is more your thing, you can still download a CLI-only version of Ollama from its GitHub repository.

Installing both portions is as simple as downloading Ollama from the website and running the installer. By default, it'll run in the background, accessible via the system tray or through the terminal. You can also launch the app from the system tray or through the Start Menu.

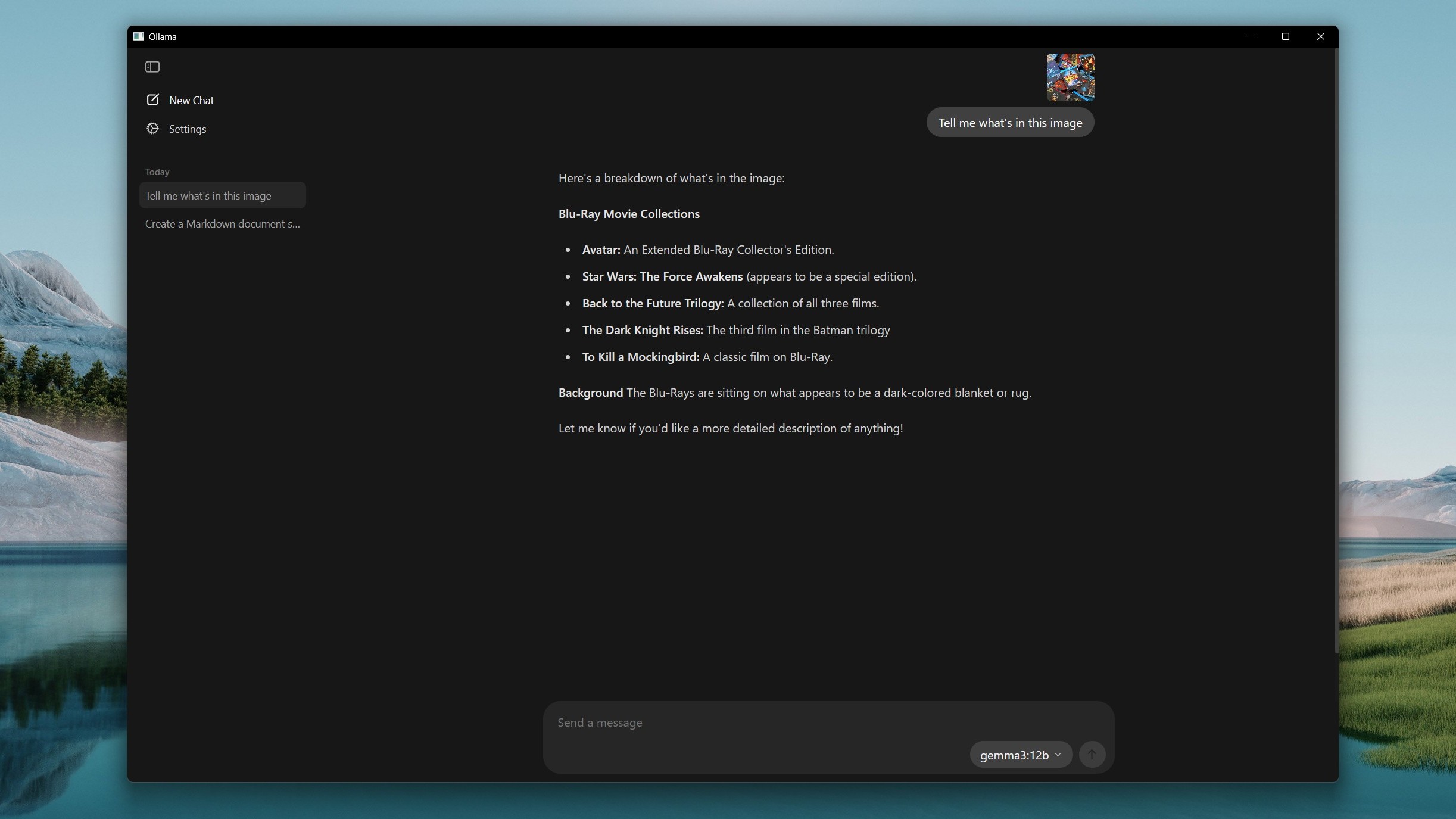

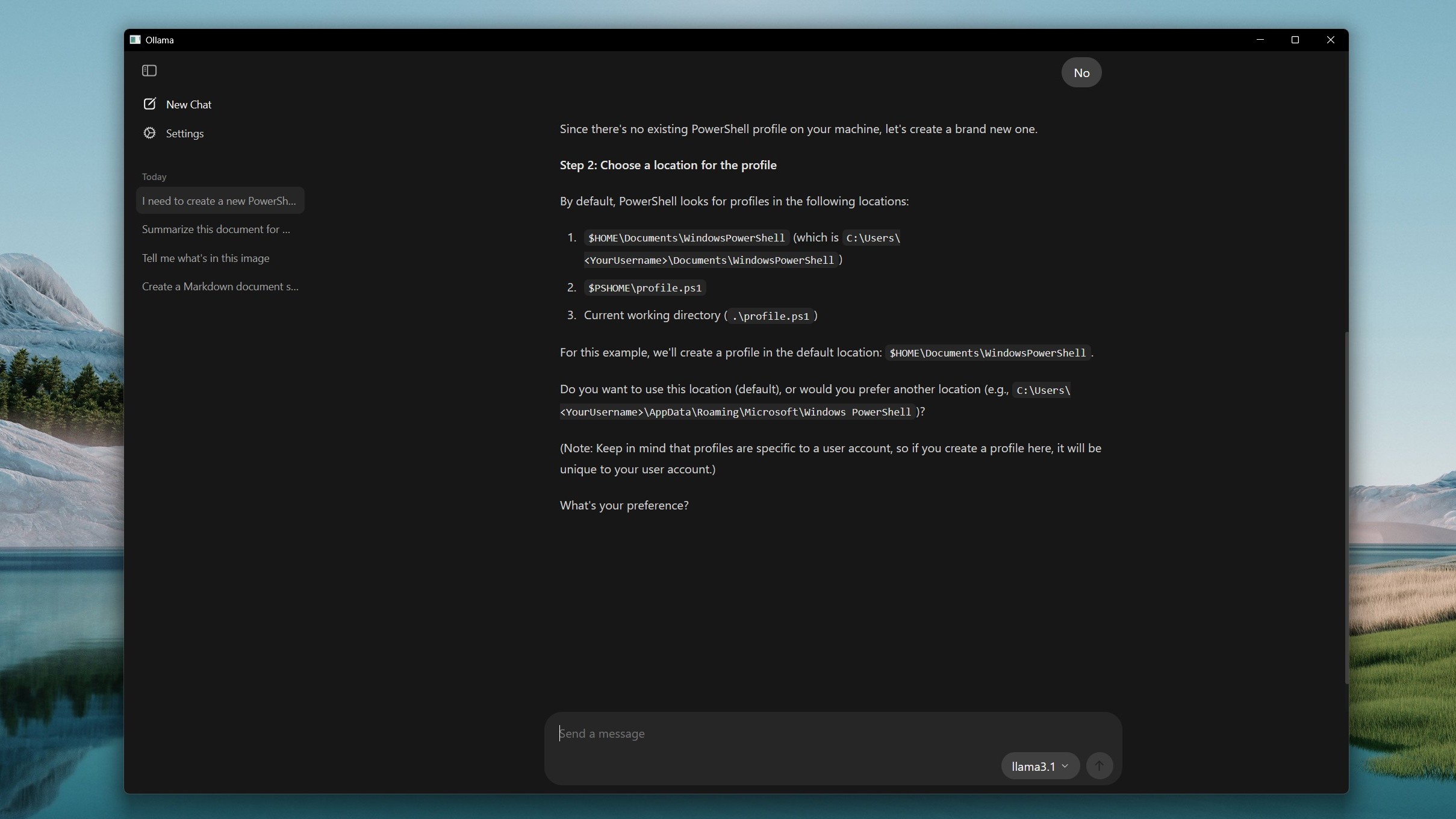

It's a fairly simple experience, and a familiar one if you've ever used basically any AI chatbot. Type in the box, and Ollama will respond using whichever model you've selected.

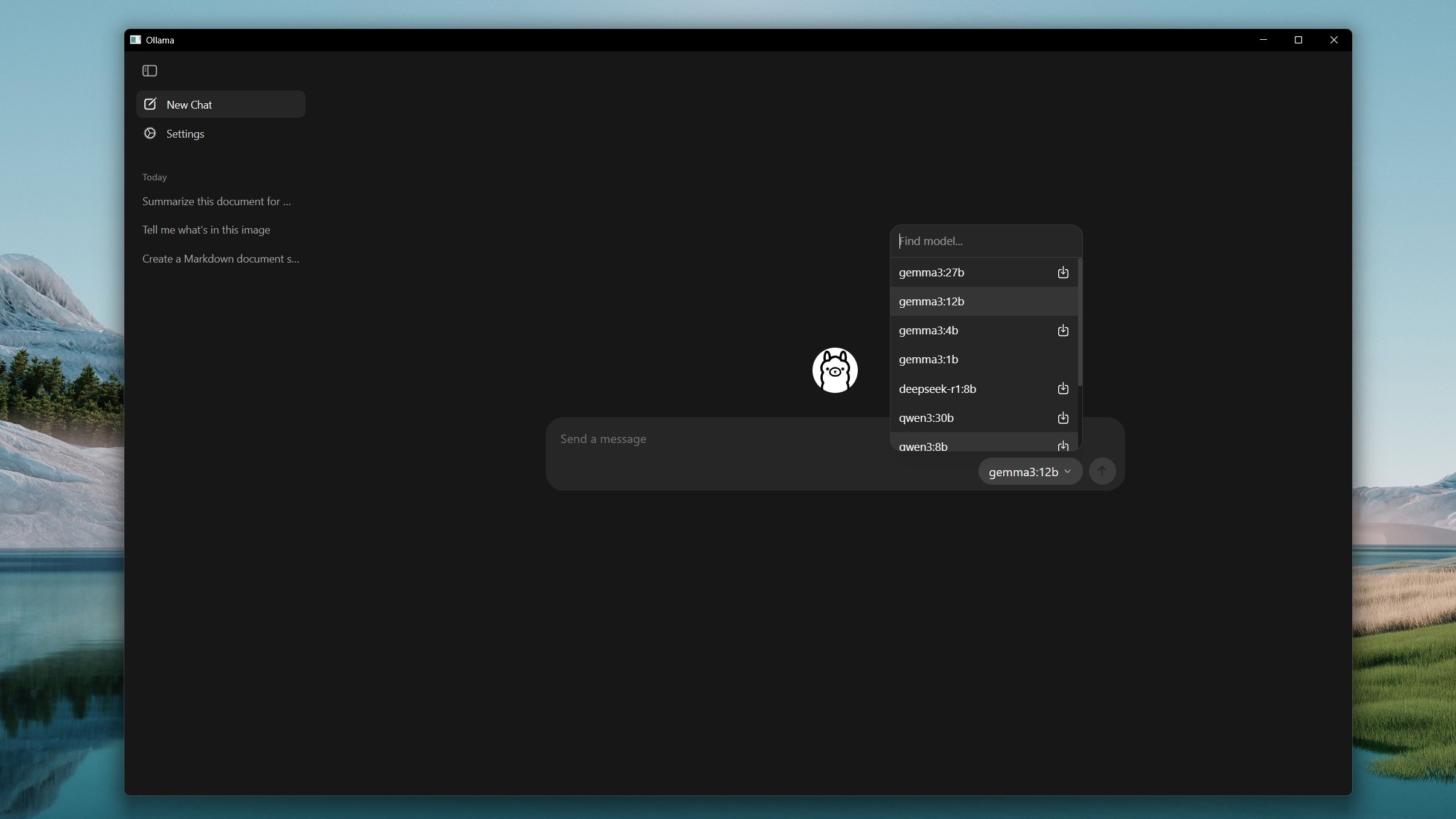

I'm assuming you're supposed to be able to click the icon next to a model you don't have installed to pull it to your machine, but it doesn't seem to do anything right now. You can still search for models from the dropdown, though.

The workaround I found is to select the model, then try to send a message to it, which prompts the Ollama app to pull it first. You can, of course, still go into the terminal and pull models that way, and they'll appear in the app.
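If you'd rather script the pull than click around, here's a minimal sketch that talks to Ollama's local HTTP API from Python instead. It's not how the app does it, as far as I know. I'm assuming the server is on its default address of `http://localhost:11434`, that the `/api/pull` and `/api/tags` endpoints behave as described in Ollama's API docs, and that `gemma3` is a valid model tag.

```python
import json
import urllib.request

# Assumption: Ollama's background server is listening on its default address.
OLLAMA_URL = "http://localhost:11434"


def build_pull_request(model: str) -> urllib.request.Request:
    """Build (but do not send) a request asking the local server to pull a model."""
    # "stream": False asks for one final JSON reply instead of progress updates.
    body = json.dumps({"model": model, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/pull",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def pull_model(model: str) -> dict:
    """Send the pull request and return the server's final JSON status."""
    with urllib.request.urlopen(build_pull_request(model)) as response:
        return json.loads(response.read())


def installed_models() -> list[str]:
    """Return the names of the models already downloaded to this machine."""
    with urllib.request.urlopen(f"{OLLAMA_URL}/api/tags") as response:
        return [entry["name"] for entry in json.loads(response.read())["models"]]
```

With Ollama running, calling `pull_model("gemma3")` and then `installed_models()` should show the new model, and it ought to turn up in the app's dropdown too.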

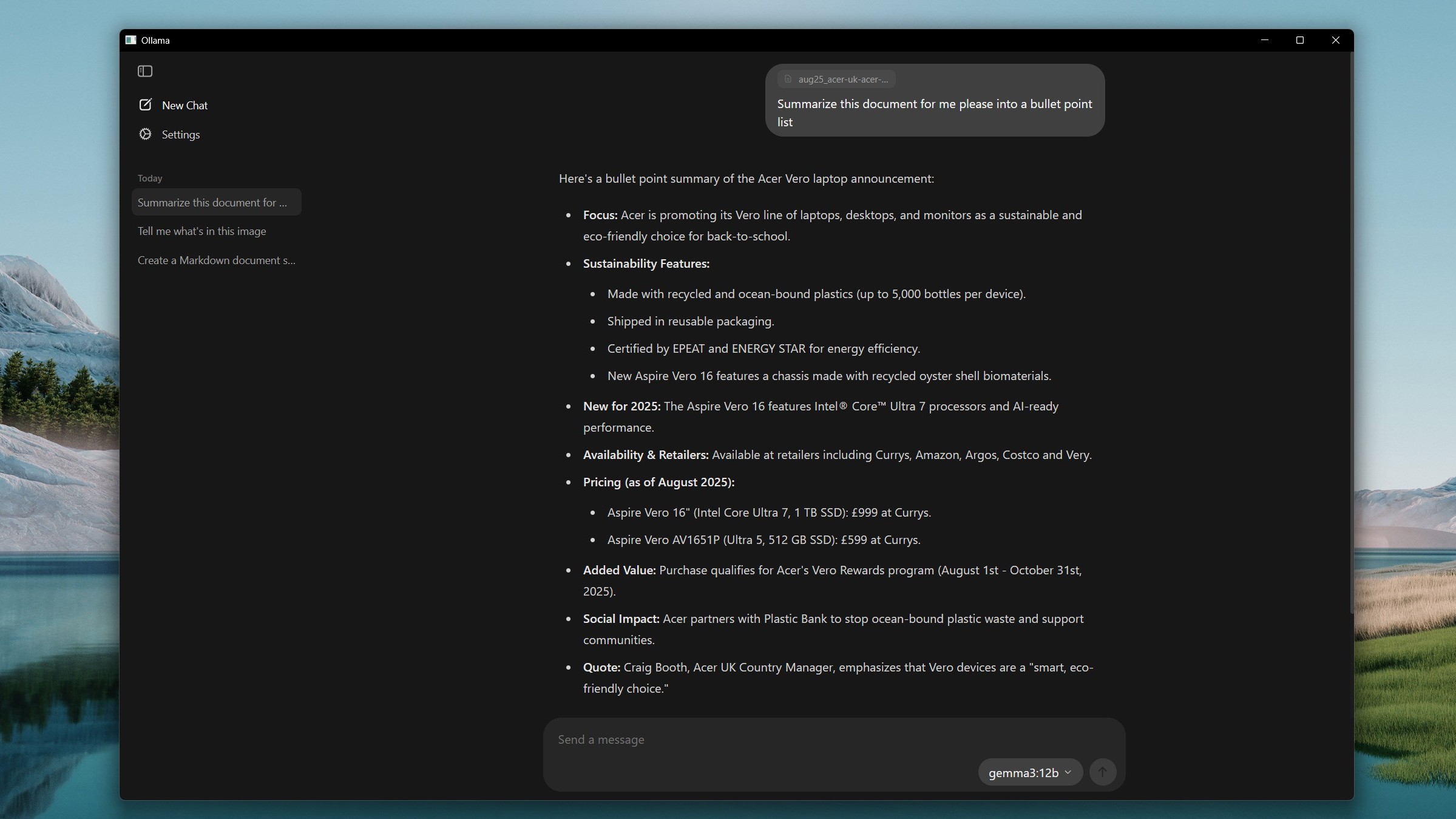

Settings are fairly basic, but one of them allows you to change the size of the context window. If you're dealing with large documents, for example, you can increase the context length with the slider. The cost is that you'll need more system memory, which is understandable.
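The same knob exists outside the app. Ollama's HTTP API accepts a `num_ctx` option per request, and my assumption is that this is roughly what the slider adjusts behind the scenes. A small sketch of building such a request body in Python follows; the helper name and the example values are mine, not anything from Ollama.

```python
import json


def build_chat_payload(model: str, prompt: str, context_length: int) -> dict:
    """Build a JSON body for Ollama's /api/chat with a custom context length."""
    if context_length <= 0:
        raise ValueError("context_length must be a positive number of tokens")
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        # num_ctx is the context window size in tokens; larger values use more memory.
        "options": {"num_ctx": context_length},
        "stream": False,
    }


if __name__ == "__main__":
    # Hypothetical example: ask for a bigger window before pasting in a long document.
    payload = build_chat_payload("gemma3", "Summarize this report.", 16384)
    print(json.dumps(payload, indent=2))
```

You'd POST that body to `/api/chat` on the local server. Just as with the slider, a bigger number means more memory used.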

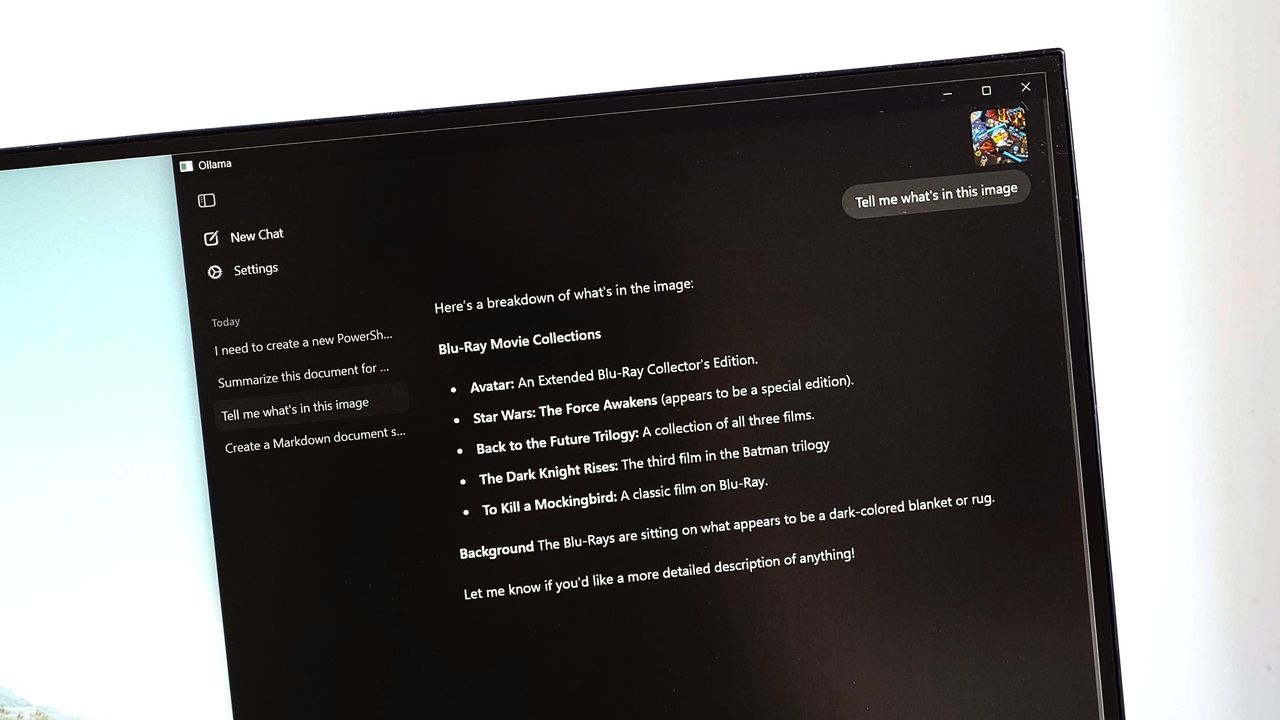

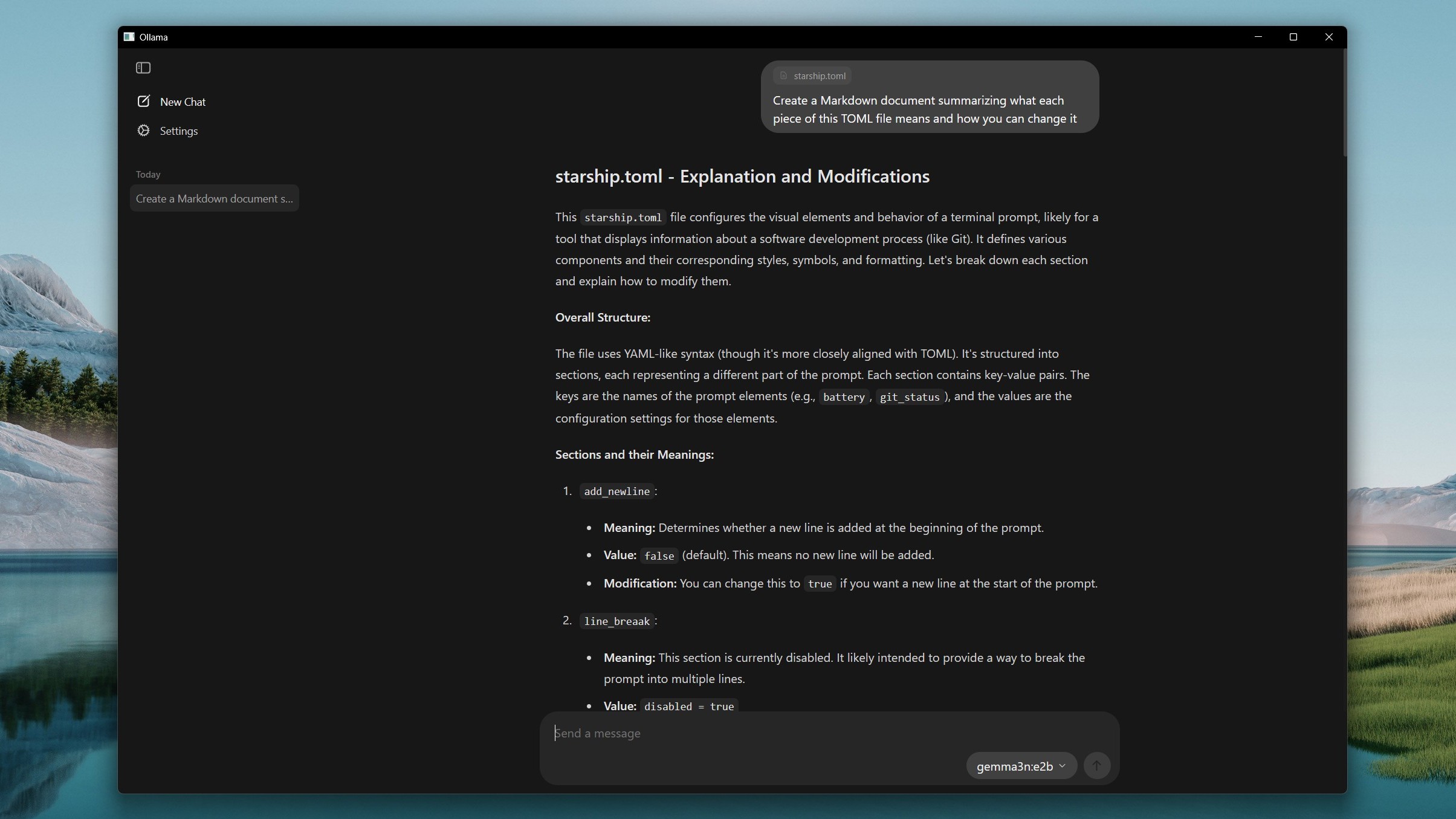

The new Ollama app has some nifty features, though, and the blog post has a couple of videos showing them in action. With multimodal support you can drop images into the app and send them to models that support interpreting them, such as Gemma 3.
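For comparison, here's roughly what sending an image looks like without the drag and drop. This is a sketch under my own assumptions: that you're using Ollama's `/api/generate` endpoint, which takes images as base64 strings in an `images` list, and that the model you name can actually interpret images. The helper name is hypothetical.

```python
import base64


def build_image_prompt(model: str, prompt: str, image_bytes: bytes) -> dict:
    """Build a JSON body for /api/generate that attaches one image to a prompt."""
    # The API expects raw image data encoded as a base64 string, not a file path.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "prompt": prompt,
        "images": [encoded],
        "stream": False,
    }
```

In practice you'd read the bytes with something like `open("photo.png", "rb").read()`, where `photo.png` is a placeholder, and POST the result to the local server. Dropping the file onto the app is obviously less work.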

Ollama can also interpret code files you drop in, with an example provided where the app is asked to produce a Markdown document explaining the code and how to use it.

Being able to drag and drop files into Ollama is definitely more convenient than trying to interact with them using the CLI. You don't need to know file locations, for one.

For now at least, you'll still potentially want to use the CLI for some of the other features available in Ollama, though. For example, you can't push a model using the app, only the CLI. The same goes for creating models, listing running models, and copying or removing them.
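Those CLI-only jobs map onto the `ollama push`, `ollama create`, `ollama ps`, `ollama cp` and `ollama rm` subcommands. If you want to drive them from a script, a thin Python wrapper is enough. This is only a sketch: the helper names are mine, and it assumes `ollama` is on your PATH.

```python
import subprocess

# The subcommands for the tasks the app doesn't cover yet.
CLI_ONLY_ACTIONS = {"push", "create", "ps", "cp", "rm"}


def ollama_command(action: str, *args: str) -> list[str]:
    """Return the argument list for an `ollama` subcommand without running it."""
    if action not in CLI_ONLY_ACTIONS:
        raise ValueError(f"unsupported action: {action}")
    return ["ollama", action, *args]


def run_ollama(action: str, *args: str) -> str:
    """Run the subcommand and return whatever it printed to standard output."""
    result = subprocess.run(
        ollama_command(action, *args),
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError if ollama exits with an error
    )
    return result.stdout
```

For example, `run_ollama("ps")` lists running models, while `run_ollama("cp", "gemma3", "my-gemma3")` followed by `run_ollama("rm", "my-gemma3")` copies a model and removes the copy again. The name `my-gemma3` is just a placeholder.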

For general use, though, I think this is a great step forward. Granted, a lot of the folks who have been using local LLMs up to this point are probably comfortable inside a terminal window. And being comfortable in the terminal is certainly a skill I'd always recommend.

But having a user-friendly GUI makes it more accessible to everyone. Third-party tools such as Open WebUI have done this before, but having it built into the package just makes it easier.

What remains, though, is that you'll still need a PC with enough horsepower to use these models. Ollama doesn't yet support NPUs, so you're reliant on the rest of your system.

It's recommended to have at least an 11th Gen Intel CPU or a Zen 4-based AMD CPU with support for AVX-512, and ideally 16GB of RAM. 8GB is the bare minimum Ollama recommends for the 7B models, and there are smaller models available that will use even fewer resources.
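To get a feel for why RAM matters so much, here's a back-of-the-envelope calculation rather than anything official from Ollama. It multiplies the parameter count by the storage size of each weight, and it deliberately ignores the context window and runtime overhead, which add more on top. The 4-bit and 16-bit figures are my assumptions about common precisions, not numbers from Ollama's recommendations.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Rough memory for model weights alone, in GB (1 GB = 10**9 bytes)."""
    # billions of parameters * bits each / 8 bits per byte = billions of bytes
    return params_billions * bits_per_weight / 8


if __name__ == "__main__":
    print(weight_memory_gb(7, 4))   # 3.5  (7B model at an assumed 4 bits per weight)
    print(weight_memory_gb(7, 16))  # 14.0 (same model at 16 bits per weight)
```

By that rough measure, a 7B model squeezed down to 4 bits per weight fits within 8GB with some room left for everything else, while the same model at 16 bits wouldn't.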

You don't need a GPU, but if you have one, you'll naturally get better performance. If you want to use some of the largest models, it's definitely something you'll want. And the more VRAM, the better.

Running a local LLM, though, is something worth trying at least once if your PC can handle it. You're not reliant on a web connection, so you can use it offline, and you're not feeding the great AI cloud computer in the sky, either.

You don't get web search out of the box. There are ways and means if you absolutely need it, but you'll need something other than the Ollama app to do that. Still, download it, give it a try, and you might find your new favorite AI chatbot.