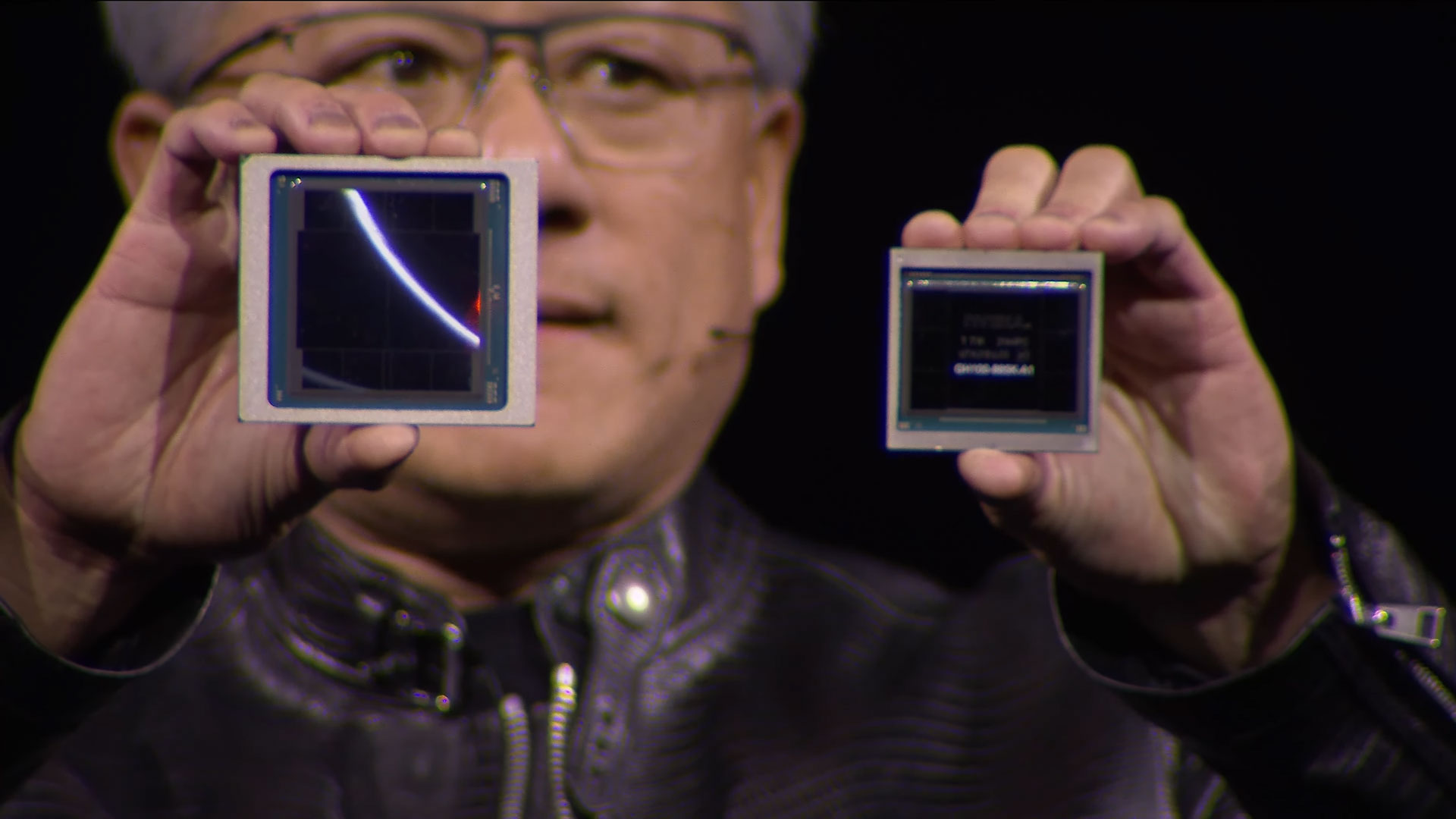

Nvidia's Blackwell GPUs for AI applications will be more expensive than the company's Hopper-based processors, according to analysts from HSBC cited by @firstadopter, a senior writer at Barron's. The analysts claim that one GB200 Superchip (one CPU plus two GPUs) could cost up to $70,000. However, Nvidia may be more inclined to sell servers based on the Blackwell GPUs rather than selling chips separately, especially given that the GB200 NVL72 servers are projected to cost up to $3 million apiece.

HSBC estimates that Nvidia's 'entry' B100 GPU will have an average selling price (ASP) between $30,000 and $35,000, which is at least in the same range as the price of Nvidia's H100. The more powerful GB200, which combines a single Grace CPU with two B200 GPUs, will reportedly cost between $60,000 and $70,000. And let's be real: It might actually end up costing quite a bit more than that, as these are merely analyst estimates.

"HSBC estimates pricing for NVIDIA GB200 NVL36 server rack system is $1.8 million and NVL72 is $3 million. Also estimates GB200 ASP is $60,000 to $70,000 and B100 ASP is $30,000 to $35,000." (May 13, 2024)

Servers based on Nvidia's designs are going to be much more expensive. The Nvidia GB200 NVL36 with 18 GB200 Superchips (18 Grace CPUs and 36 enhanced B200 GPUs) may be sold for $1.8 million on average, whereas the Nvidia GB200 NVL72 with 36 GB200 Superchips (36 CPUs and 72 GPUs) could have a price of around $3 million, according to the alleged HSBC numbers.
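As a rough back-of-the-envelope check on those figures, the rack prices can be divided by the number of Superchips in each rack and compared with the $60,000 to $70,000 Superchip ASP. The sketch below assumes each GB200 Superchip pairs one Grace CPU with two B200 GPUs, as described above; the variable and function names are illustrative, and every number is an HSBC estimate rather than a confirmed price.

```python
# All dollar figures are the HSBC estimates quoted in the article.
GPUS_PER_SUPERCHIP = 2                  # one Grace CPU plus two B200 GPUs
SUPERCHIP_ASP_RANGE = (60_000, 70_000)  # estimated GB200 Superchip ASP

# Rack name -> (estimated rack price in USD, number of B200 GPUs)
RACK_ESTIMATES = {
    "GB200 NVL36": (1_800_000, 36),
    "GB200 NVL72": (3_000_000, 72),
}


def rack_breakdown(rack_price, gpu_count):
    """Split an estimated rack price into per-Superchip terms."""
    superchips = gpu_count // GPUS_PER_SUPERCHIP
    # Cost of the Superchips alone at the low and high ends of the ASP range.
    low, high = (superchips * asp for asp in SUPERCHIP_ASP_RANGE)
    return {
        "superchips": superchips,
        "price_per_superchip": rack_price / superchips,
        # What is left of the rack price after subtracting the Superchips.
        "remainder_range": (rack_price - high, rack_price - low),
    }


for name, (price, gpus) in RACK_ESTIMATES.items():
    info = rack_breakdown(price, gpus)
    print(
        f"{name}: {info['superchips']} Superchips, "
        f"${info['price_per_superchip']:,.0f} of rack price per Superchip, "
        f"${info['remainder_range'][0]:,} to ${info['remainder_range'][1]:,} "
        f"beyond the Superchips themselves"
    )
```

Under these assumptions, the rack estimates work out to about $100,000 per Superchip for the NVL36 and about $83,000 for the NVL72, which leaves roughly $0.5 million to $0.8 million of each rack's estimated price for the rest of the system.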

When Nvidia CEO Jensen Huang revealed the Blackwell data center chips at GTC 2024, it was quite obvious that the intent is to move whole racks of servers. Huang repeatedly stated that when he thinks of a GPU, he's now picturing the NVL72 rack. The whole setup integrates via high-bandwidth connections to function as a massive GPU, providing 13,824 GB of total VRAM, a critical factor in training ever-larger LLMs.
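For a sense of scale, the quoted memory total can be broken down per GPU. This is only a quick sketch using the article's own figures (13,824 GB across the 72 GPUs of an NVL72); the NVL36 line is an extrapolation that assumes the same per-GPU capacity, and the names are illustrative.

```python
TOTAL_MEMORY_GB = 13_824  # total VRAM quoted for the NVL72 rack
GPUS_IN_NVL72 = 72
GPUS_IN_NVL36 = 36

# Memory per B200 GPU implied by the rack total.
memory_per_gpu_gb = TOTAL_MEMORY_GB / GPUS_IN_NVL72

# Assumption: an NVL36 rack uses GPUs with the same memory capacity.
nvl36_memory_gb = memory_per_gpu_gb * GPUS_IN_NVL36

print(f"Implied memory per GPU: {memory_per_gpu_gb:.0f} GB")
print(f"Extrapolated NVL36 total: {nvl36_memory_gb:,.0f} GB")
```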

Selling whole systems instead of standalone GPUs/Superchips enables Nvidia to absorb some of the premium earned by system integrators, which will increase its revenues and profitability. Considering that Nvidia's rivals AMD and Intel are gaining traction very slowly with their AI processors (e.g., Instinct MI300-series, Gaudi 3), Nvidia can certainly sell its AI processors at a huge premium. As such, the prices allegedly estimated by HSBC are not particularly surprising.

It's also important to highlight the differences between H200 and GB200. H200 already commands pricing of up to $40,000 for individual GPUs. GB200 effectively quadruples the number of GPU dies (four silicon dies, two per B200) and adds the Grace CPU and a large PCB for the so-called Superchip. Raw compute for a single GB200 Superchip is 5 petaflops of dense FP16 (10 petaflops with sparsity), compared to 1 petaflop dense (2 petaflops with sparsity) on H200. That's roughly five times the compute, not even factoring in other architectural upgrades.
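To put the comparison in price-per-performance terms, here is a minimal sketch that uses only the figures quoted above: up to $40,000 and 1 petaflop of dense FP16 for H200 (2 petaflops with sparsity), versus an estimated $60,000 to $70,000 and 5 petaflops of dense FP16 for a GB200 Superchip. The names are illustrative, and the prices are estimates, so treat the output as a rough guide only.

```python
# Dense FP16 throughput in petaflops, as quoted in the article.
H200_DENSE_PFLOPS = 1.0
GB200_DENSE_PFLOPS = 5.0

# Prices in USD: the H200 figure is the quoted upper bound,
# the GB200 range is the HSBC ASP estimate.
H200_PRICE = 40_000
GB200_PRICE_RANGE = (60_000, 70_000)

compute_ratio = GB200_DENSE_PFLOPS / H200_DENSE_PFLOPS

h200_usd_per_pflop = H200_PRICE / H200_DENSE_PFLOPS
gb200_usd_per_pflop = tuple(
    price / GB200_DENSE_PFLOPS for price in GB200_PRICE_RANGE
)

print(f"GB200 vs. H200 dense FP16 compute: {compute_ratio:.0f}x")
print(f"H200: up to ${h200_usd_per_pflop:,.0f} per dense petaflop")
print(
    f"GB200: ${gb200_usd_per_pflop[0]:,.0f} to "
    f"${gb200_usd_per_pflop[1]:,.0f} per dense petaflop"
)
```

On these numbers, a GB200 Superchip would come in at roughly $12,000 to $14,000 per dense petaflop, against up to $40,000 for H200, which helps explain why the estimated Blackwell prices look reasonable despite the higher sticker price.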

Keep in mind that the actual prices of data center-grade hardware always depend on the individual contracts, based on the volume of hardware ordered and other negotiations. As such, take these estimated numbers with a helping of salt. Large buyers like Amazon and Microsoft will likely get huge discounts, while smaller clients may have to pay an even higher price than what HSBC reports.