Nvidia CEO Jensen Huang will take to the stage today, March 21, at 8am PT to deliver his GTC keynote. We're expecting to hear a lot more about AI and deep learning technologies, as tools like ChatGPT are driving the adoption of increasingly large numbers of Nvidia GPUs.

GTC tends to focus on the data center aspects of GPUs, rather than gaming and GeForce cards, so it's unlikely Nvidia will have much to say about the rumored RTX 4070 and RTX 4060 — though we could get a surprise. You can watch the full keynote on YouTube (we'll embed below once the live blogging is over).

Jensen kicks things off with a discussion of the growing demands of the modern digital world, with growth that at times outpaces the rate of Moore's Law. We'll be hearing from several leaders in the AI industry, robotics, autonomous vehicles, manufacturing, science, and more.

"The purpose of GTC is to inspire the world on the art-of-the-possible of accelerated computing, and to celebrate the achievements of the scientists that use it."

And now we have the latest "I Am AI" introductory video, a staple of the GTC for the past several years. It gets updated each time with new segments, though the underlying musical composition doesn't seem to have changed at all. ChatGPT did make an appearance, "helping to write this script."

No surprise, Jensen is starting the detailed coverage with ChatGPT and OpenAI. One of the most famous deep learning revolutions was with AlexNet back in 2012, an image recognition algorithm that need 262 petaflops worth of computations. Now, one of those researchers is at OpenAI, and GPT-3 required 323 zettaflops worth of computations for training. That's over one million times the amount of number crunching just a decade later. That's what was required as the foundation of ChatGPT.

Along with the computations required for training models used in deep learning, Nvidia has hundreds of libraries to help various industries and models. Jensen is running through a bunch of the biggest libraries and companies using them.

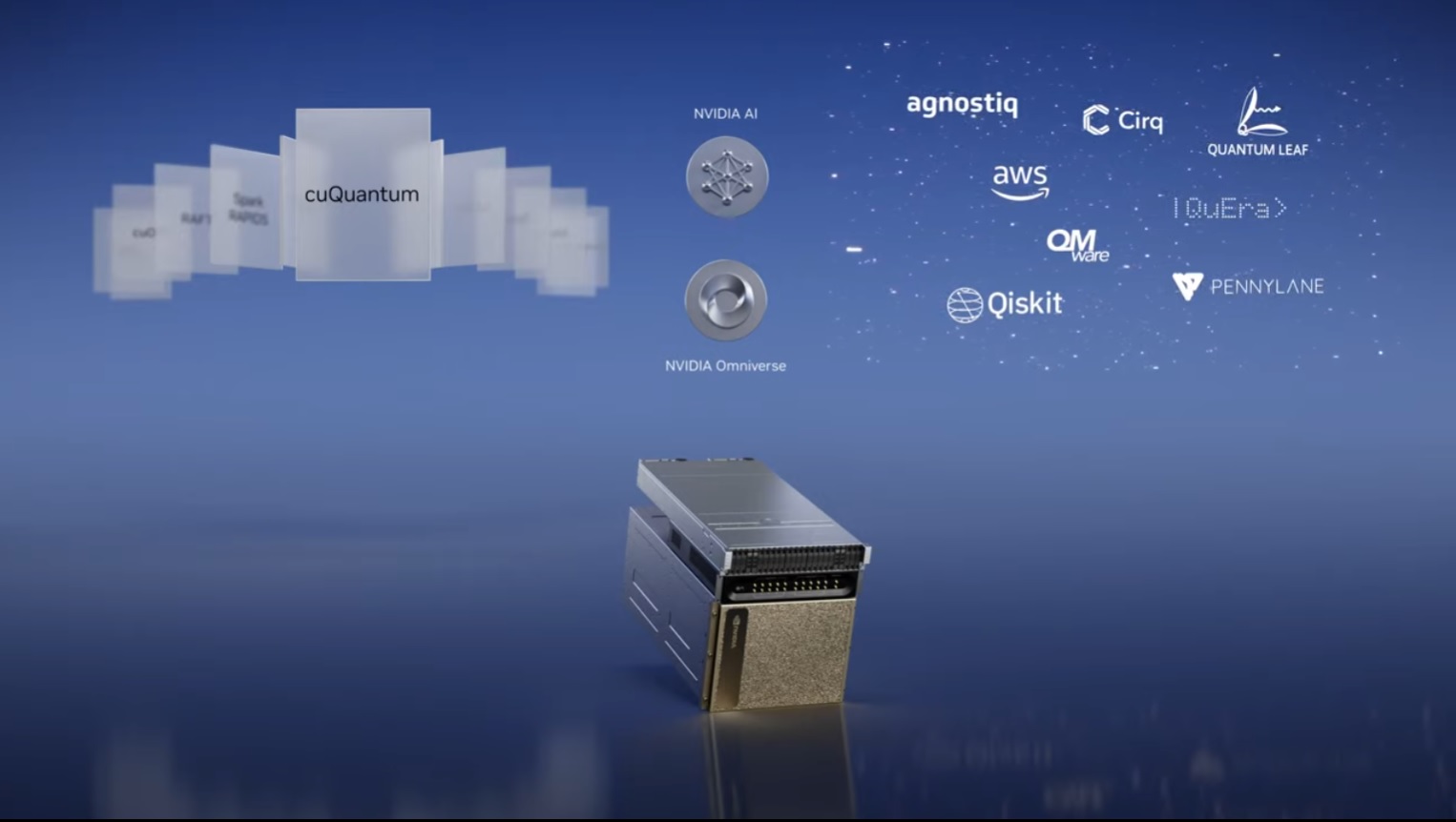

Nvidia's quantum platform, including cuQuantum, for example is used to help researchers in the field of quantum computing. People are predicting some time in the next decade or two (or three?), we'll see quantum computing go from theoretical to practical.

Jensen is now talking about the traveling salesperson problem, and NP-Hard algorithm where there's not efficient solution. It's something that a lot of real-world companies have to deal with, the pick up and delivery problem. Nvidia's hardware and libraries have helped to set a new record in calculating optimal routes for the task, and AT&T is using the technology in its company.

Lots more discussion of other libraries, including cuOpt, Triton, CV-CUDA (for computer vision), VPF (python video encoding and decoding), medical fields, and more. The cost of genome sequencing has now dropped to $100, apparently, thanks to Nvidia's technology. (Don't worry, someone will likely still charge you many times more than that should you need your DNA sequenced.)

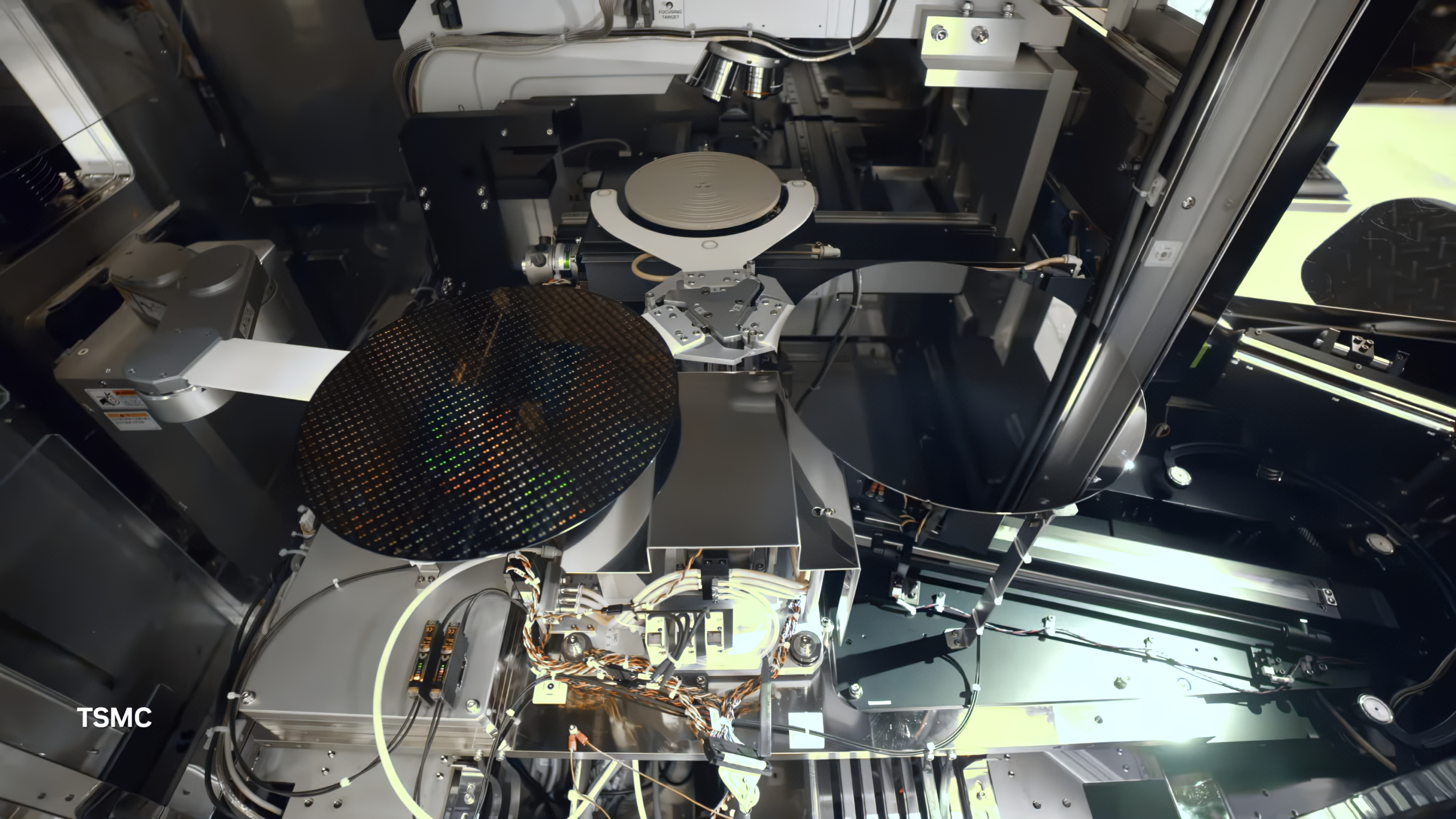

This is a big one, cuLitho, a new tool to help optimize one of the major steps in the design of modern processors. We have a separate deeper dive on Nvidia Computational Lithography, but basically the creation of the patterns and masks used for the latest lithography processes are extremely complex and Nvidia says they can take weeks for the calculations behind just one mask. cuLitho, a library for computational lithography, provides up to 40X the performance of currently used tools.

With cuLitho, a single reticle that used to take two weeks can now be processed in eight hours. It also runs on 500 DGX systems compared to 40,000 CPU servers, cutting power costs by 9X.

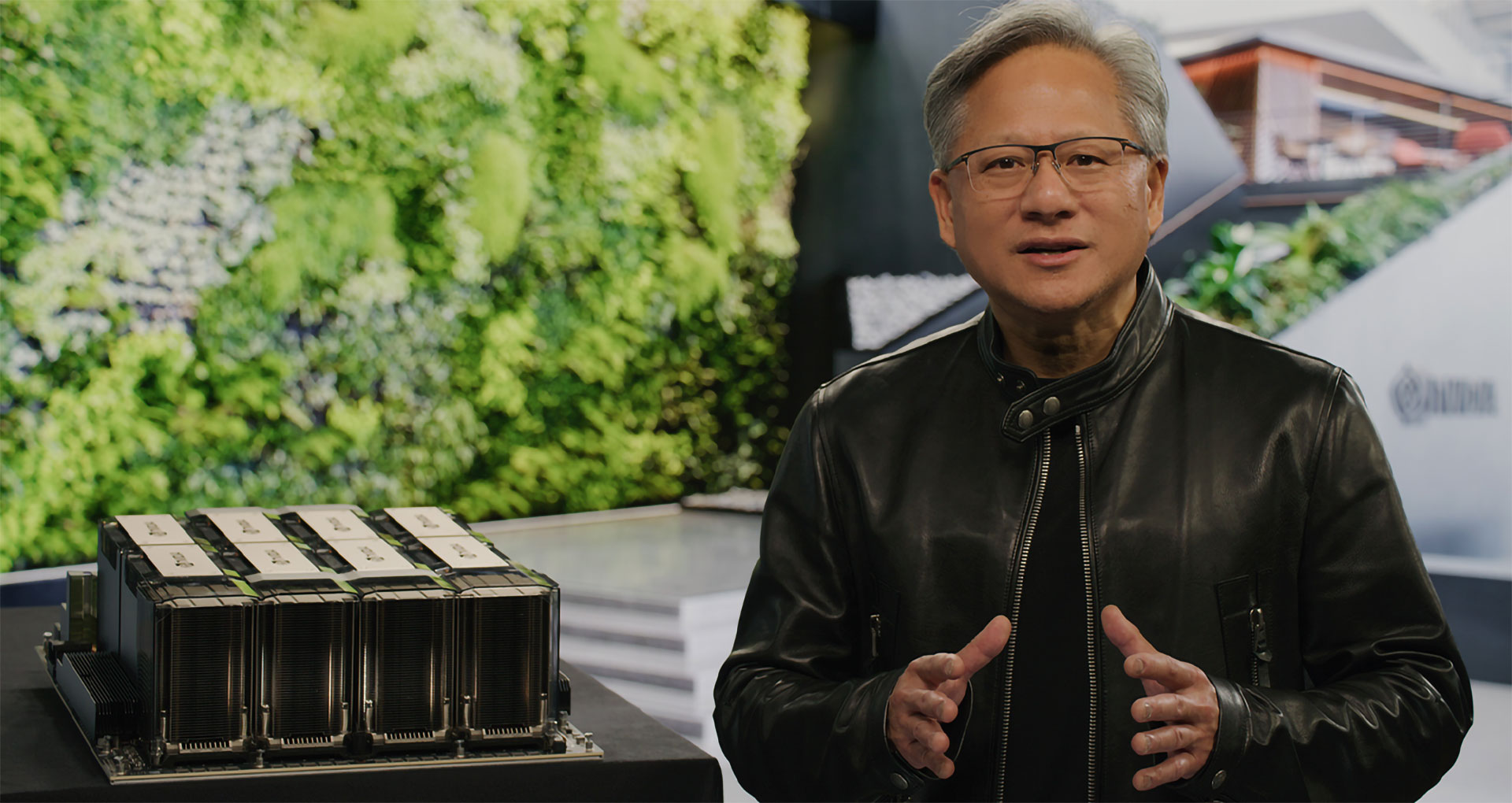

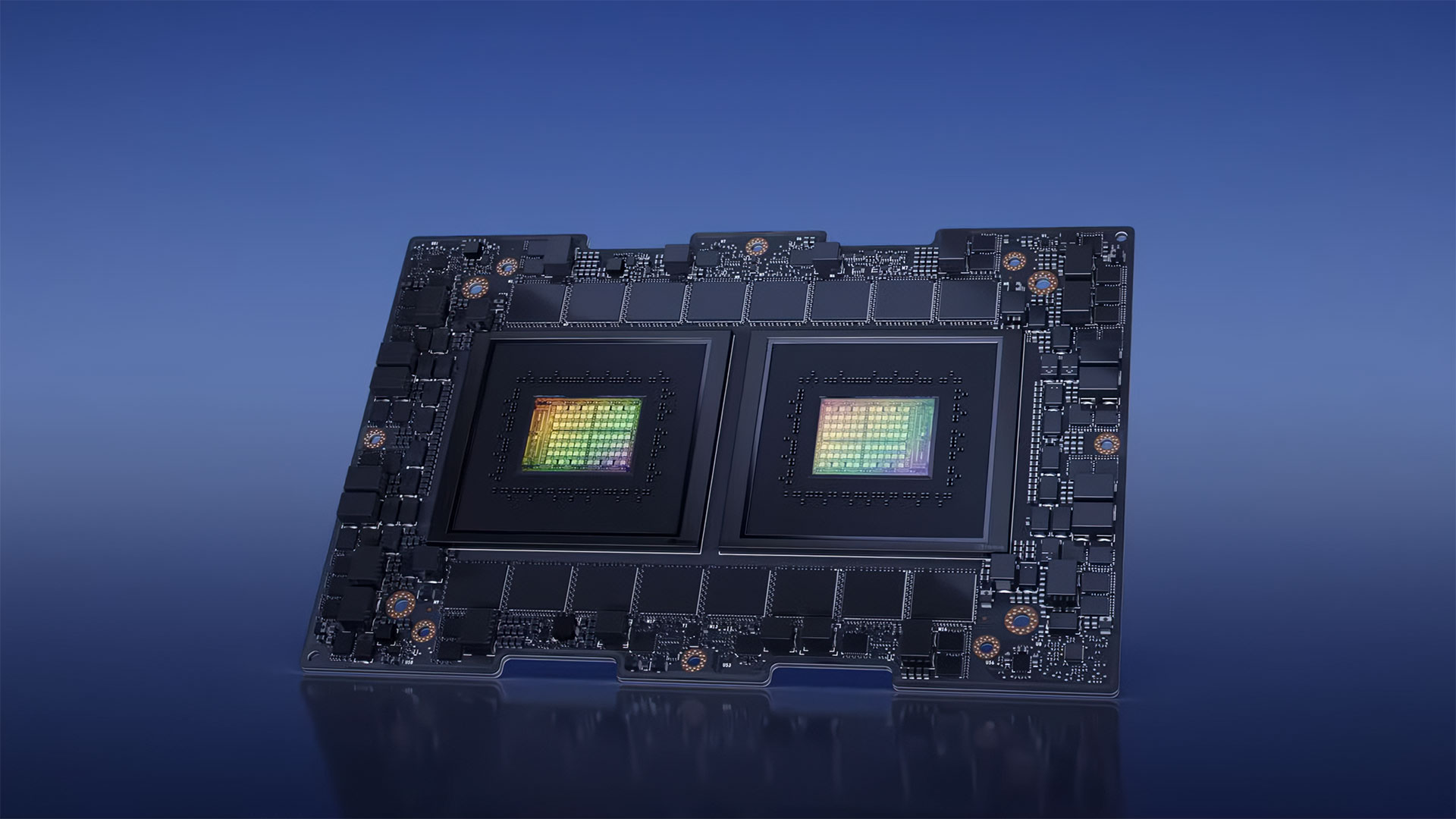

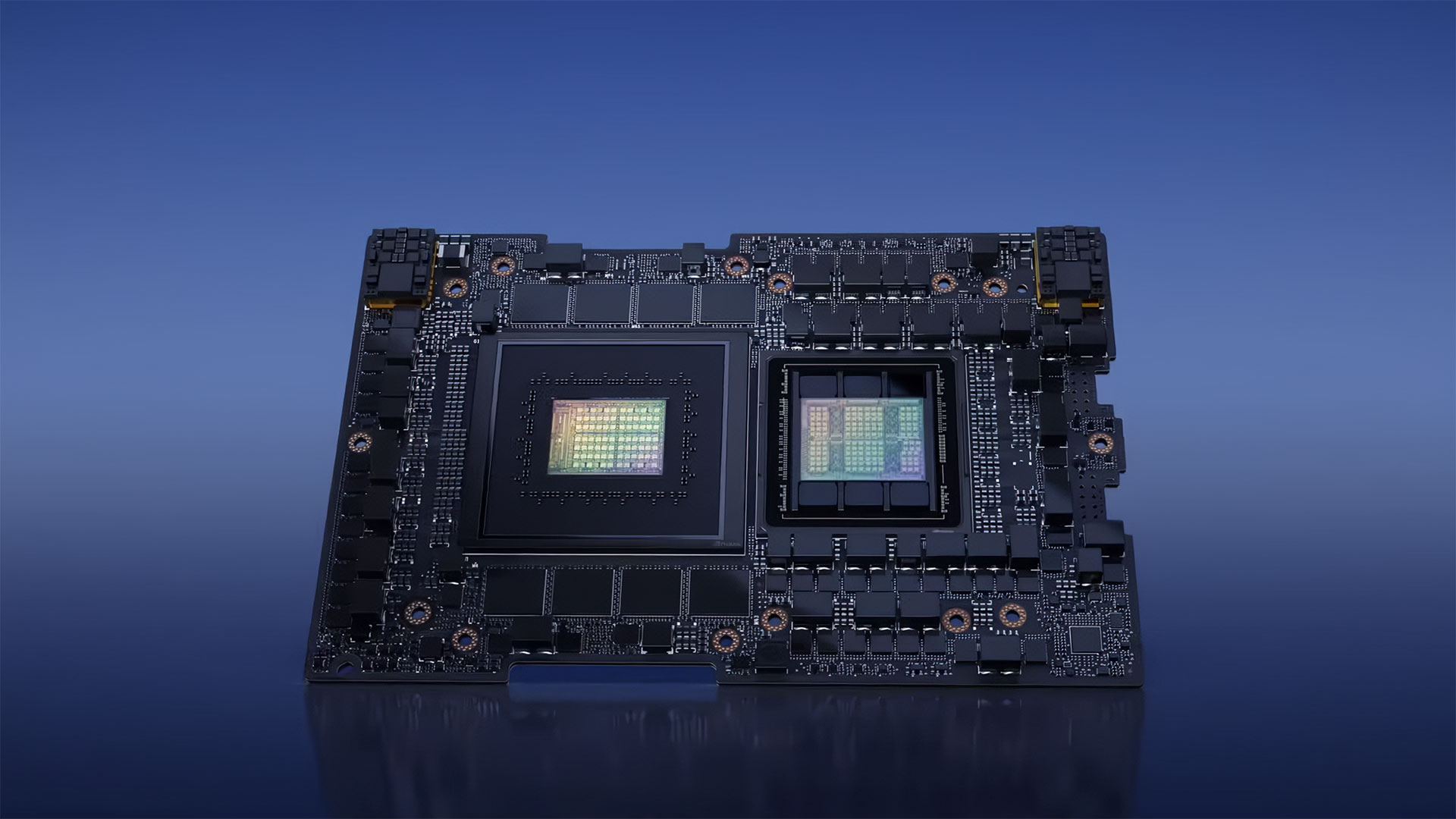

Nvidia has been talking about its Hopper H100 for over a year, but now it's finally in full production and is being deployed at many data centers, including those from Microsoft Azure, Google, Oracle, and more. The heart of the latest DGX supercomputers, pictured above, consists of eight H100 GPUs with massive heatsinks packed onto a single system.

Naturally, getting your own DGX H100 setup would be very costly, and DGX Cloud solutions will be offering them as on demand services. Services like ChatGPT, Stable Diffusion, Dall-E, and other have leveraged cloud solutions for some of their training, and DGX Cloud aims to open that up to even more people.

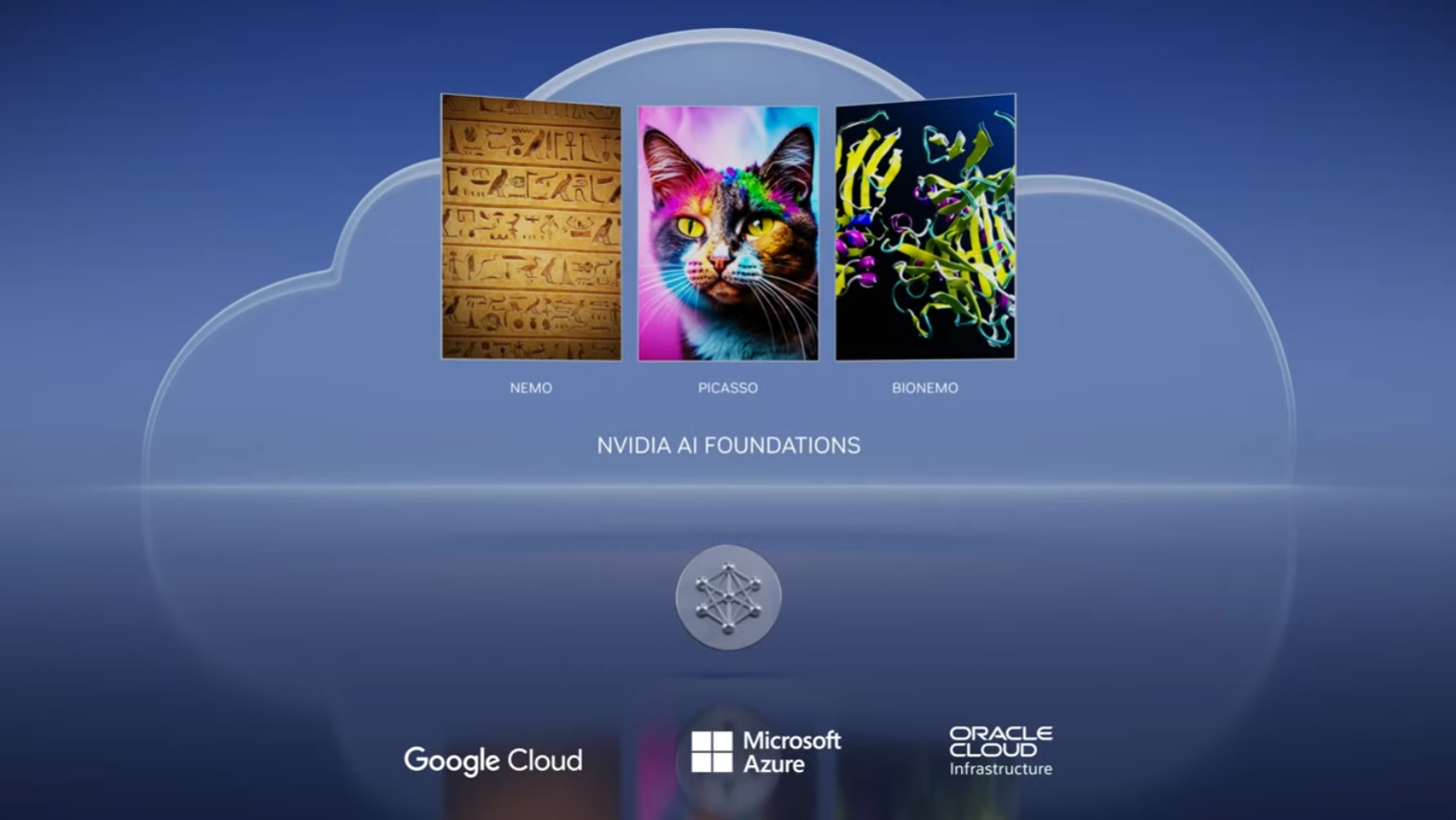

Jensen is now talking about how the GPT industry (Generative Pre-trained Transformer) needs a "foundation" equivalent for these models — a software and deep learning take on what TSMC does for the chip manufacturing industry. To that end, it's announcing Nvidia AI Foundations.

Customers can work with Nvidia experts to train and create models, which can continue to update based on user interactions. These can be fully custom models, including things like image generation based on libraries of images that are already owned. Think of it as Stable Diffusion, but built specifically for a company like Adobe, Getty Images, or Shutterstock.

And we didn't just drop some names there. Nvidia announced that all three of those companies are working with Nvidia's Picasso tool, using "responsibly licensed professional images."

Nvidia AI Foundations also has BioNemo, a tool to help with drug research and the prescription industry.

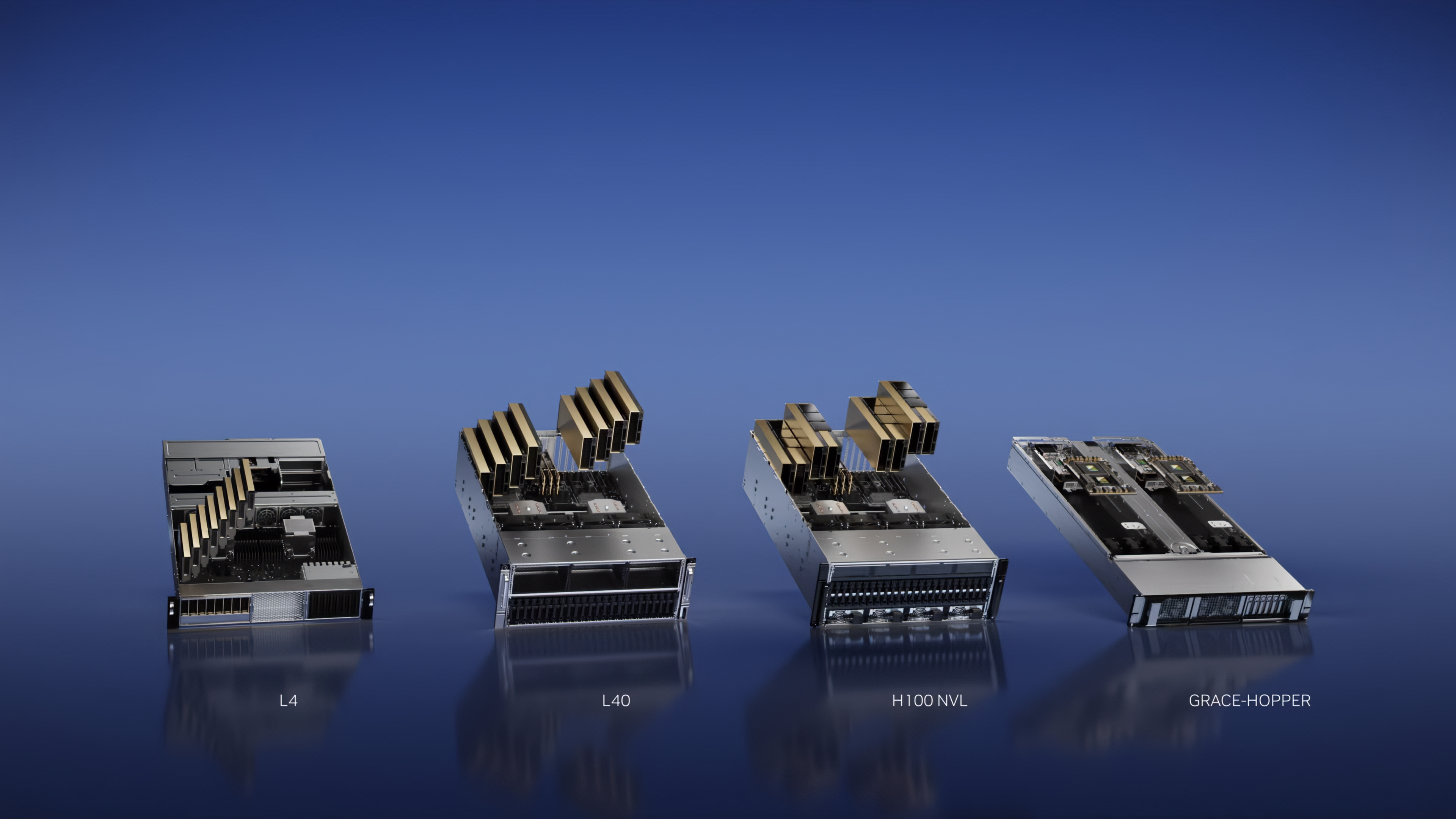

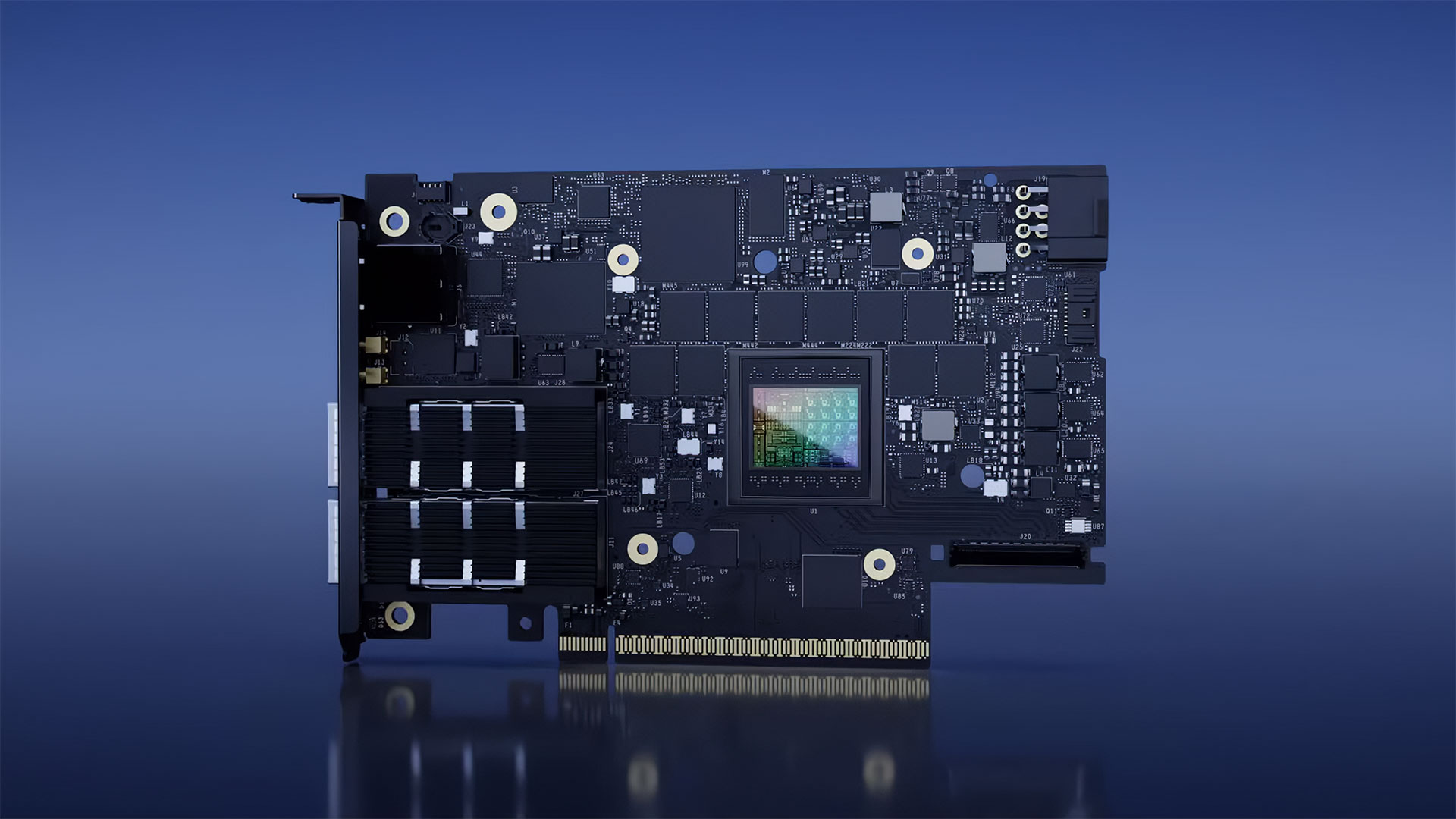

One of the advantages Nvidia offers companies is the idea of having a single platform that can scale up to the largest installations. This is the first we've heard during this keynote where Nvidia specifically mentions its Grace-Hopper solution, which we've heard about in the past. Nvidia also announced it's new L4, L40, and H100 NVL.

That last is pretty cool, as it's a PCI Express solution that uses two Hopper H100 GPUs linked together by NVLink. On its own, thanks to the 188GB of total HBM3 memory, it can handle the 165 billion parameter GPT-3 model. (In our testing of a locally running ChatGPT alternative posted earlier this week, as an example, even a 24GB RTX 4090 could only handle up to the 30 billion parameter model.)

We're now on to robotics, with Amazon talking about its Proteus robots that were trained in Nvidia Isaac Sim and are now being deployed in warehouses. This is all thanks to Nvidia Omniverse and its related technologies like Replicator, Digital Twins, and more. Lots of other name drops going on, for those that like to keep track of such things. Seriously, the number of different names Nvidia has for various tools, libraries, etc. is mind boggling. Isaac Gym is also a thing now, someone deserves credit for that one!

Anyway, Omniverse covers a huge range of possibilities. It covers design and engineering, sensor models, systems manufacturers, content creation and rendering, robotics, synthetic data and 3D assets, systems integrators, service providers, and digital twins. (Yes, I just copied that all from a slide.) It's big, and it's doing a lot of useful stuff.

Hey, look, there's Racer RTX again! I guess that missed the release date that was supposed to be last November or something. Then again, we got the incredibly demanding Portal RTX instead, so maybe it's for the best if Racer RTX gets a bit more time for optimizations and whatever else before it can become a fully playable game / demo / whatever.

More Omniverse (take another shot!), this time with the OVX servers that will be coming from a variety of vendors. These include Nvidia L40 Ada RTX GPUs, plus Nvidia's BlueField-3 processors for connectivity.

There will also be new workstations, powered by Ada RTX GPUs, in both desktop and laptop configurations, "starting in March." Which means now, apparently. Gratuitous photos of dual-CPU Grace, Grace-Hopper, and BlueField-3.

And that's a wrap for this GTC 2023 keynote. Jensen is going over the various announcements, but there will certainly be more to say in the coming days as presentations and further details are revealed. Thanks for joining us.