- North Korean hackers used ChatGPT to generate a fake military ID for spear-phishing South Korean defense institutions

- Kimsuky, a known threat actor, was behind the attack and has targeted global policy, academic, and nuclear entities before

- Jailbreaking AI tools can bypass safeguards, enabling creation of illegal content like deepfake IDs despite built-in restrictions

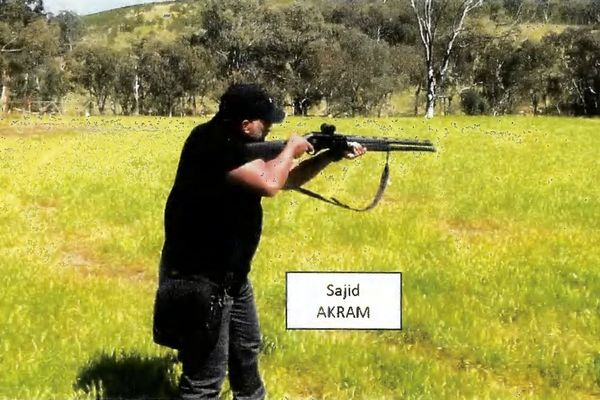

North Korean hackers managed to trick ChatGPT into creating a fake military ID card, which they later used in spear-phishing attacks against South Korean defense-related institutions.

The South Korean security institute Genians Security Center (GSC) reported the news after obtaining a copy of the ID and analyzing its origin.

According to Genians, the group behind the fake ID card is Kimsuky, an infamous state-sponsored threat actor responsible for high-profile attacks such as those on Korea Hydro & Nuclear Power Co, the UN, and various think tanks, policy institutes, and academic institutions across South Korea, Japan, the United States, and other countries.

Tricking GPT with a "mock-up" request

Generally, OpenAI and other companies building generative AI solutions have set up strict guardrails to prevent their products from generating malicious content. As such, malware code, phishing emails, instructions on how to make bombs, deepfakes, copyrighted content, and, obviously, identity documents are off limits.

However, there are ways to trick the tools into returning such content, a practice generally known as “jailbreaking” large language models. In this case, Genians says the headshot was publicly available, and the criminals likely requested a “sample design” or a “mock-up” to get ChatGPT to return the ID image.

"Since military government employee IDs are legally protected identification documents, producing copies in identical or similar form is illegal. As a result, when prompted to generate such an ID copy, ChatGPT returns a refusal," Genians said. "However, the model's response can vary depending on the prompt or persona role settings."

"The deepfake image used in this attack fell into this category. Because creating counterfeit IDs with AI services is technically straightforward, extra caution is required."

The researchers added that the victim was a “South Korean defense-related institution” but declined to name it.

Via The Register
