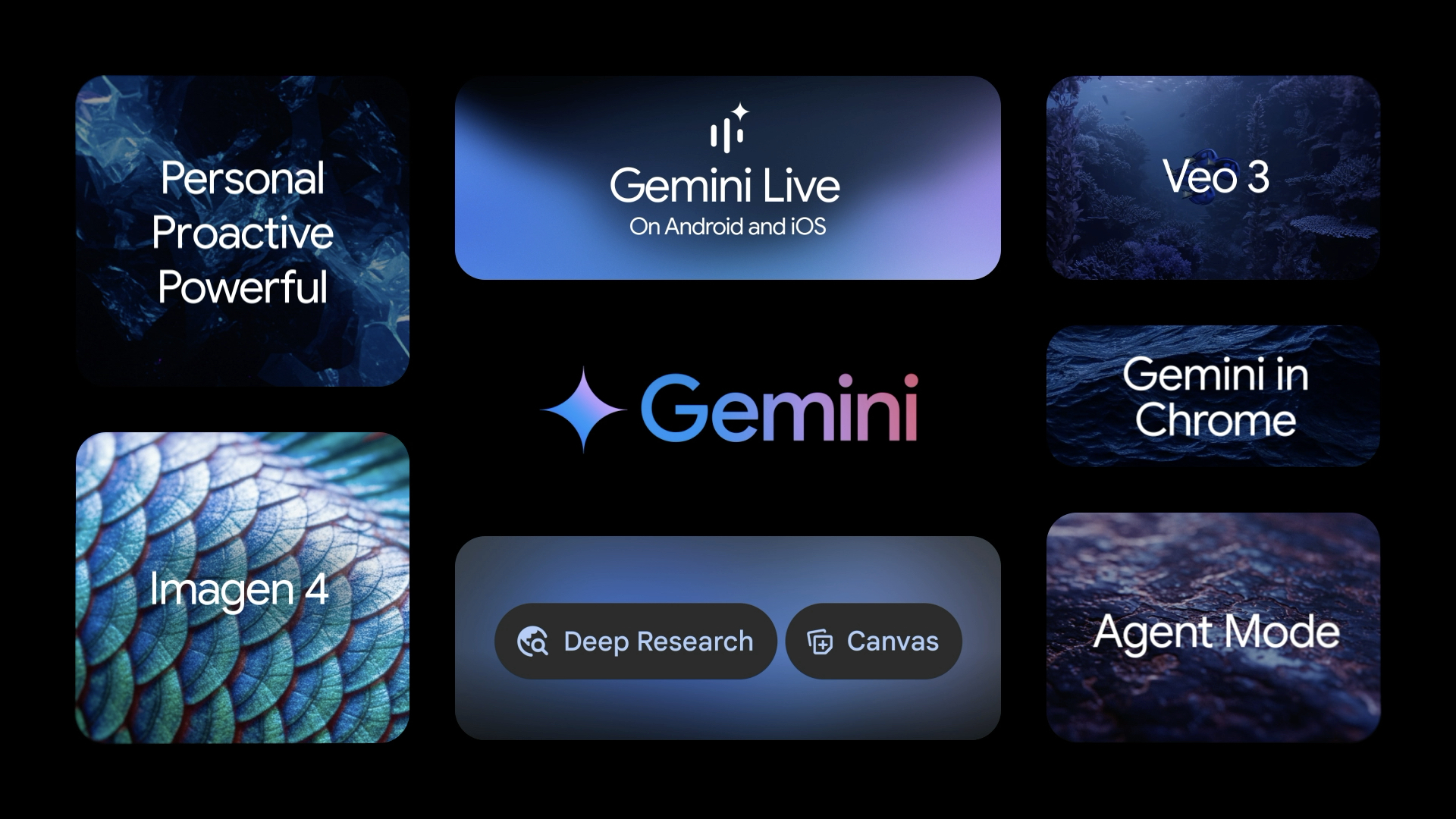

The buzz about Google’s Nano Banana, officially Gemini 2.5 Flash Image, hasn’t stopped since its release. The Nano Banana trend may be cooling off as Sora 2 grabs attention, but users keep finding new reasons to get excited about how Nano Banana lets them edit reality.

Now, Google is bringing its viral Nano Banana image editing tool to AI Mode and Google Lens, so users can generate and edit images directly within their search and camera workflows.

The update is currently rolling out in English for users in the U.S. and India, with broader language support planned. It adds a “Create” tab with a banana-emoji shutter that lets you snap or import an image and then transform it with an AI prompt. It’s yet another step toward bridging generative AI with everyday visual tools.

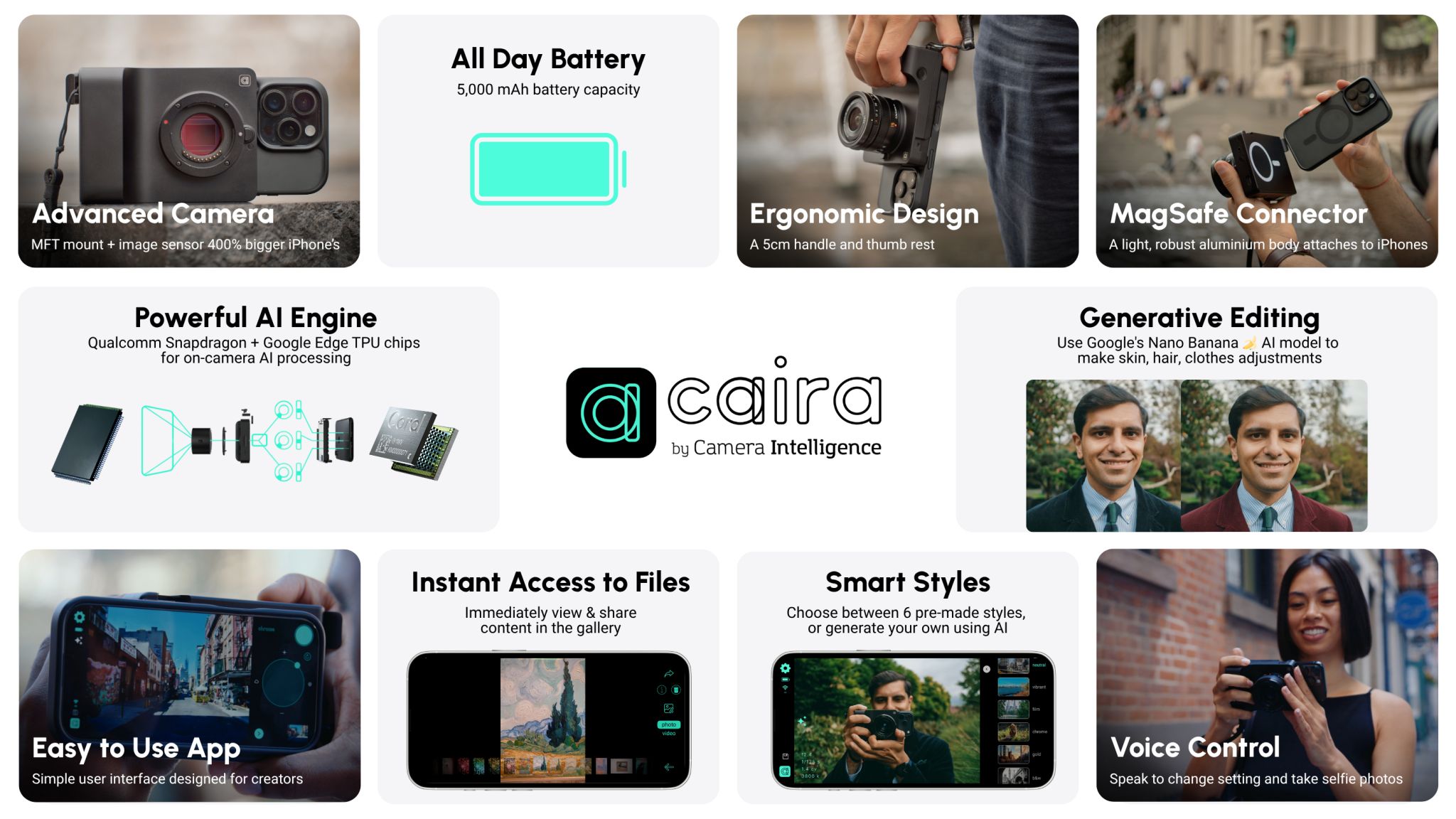

Still not enough? Google’s Nano Banana AI is also coming to the new Caira camera. This physical camera, built by a startup called Camera Intelligence, uses Google’s Nano Banana image model, which is part of the Gemini ecosystem. Camera Intelligence bills itself as "building the camera of the future" by offering what it calls "the world's first LLM-driven camera for content creation."

With Caira, users can edit reality as they shoot, skipping the step of uploading an image to Gemini and requesting edits afterward.

What is the Caira camera?

Caira is a compact, AI-native camera that mounts directly to the back of your iPhone via MagSafe. It doesn’t have a built-in screen; instead, your iPhone acts as the viewfinder and controller.

Caira is expected to launch on Kickstarter on October 30, so the hardware isn’t available yet. Online reports suggest that no one outside the company has tested a production unit.

What’s generating the buzz online is Caira’s ability to make real-time generative edits at the moment of capture. With Google’s Nano Banana model built in, you can prompt the camera to:

- Change lighting or mood (“make it golden hour”)

- Swap backgrounds or objects (“replace the soda can with a water bottle”)

- Apply stylistic filters (“add soft haze and cinematic tones”)

And all of that happens before you press the shutter.

How to get the Caira experience with Google Lens

While we wait for Caira to ship, you can get a similar effect with Nano Banana on your phone, no attachment required. Nano Banana is free to use, and Google offers 100 image generations, so you should have plenty of opportunities to let your creativity run wild. Here's how to use Nano Banana in Google Lens.

- Open Lens. Launch Google Lens from the Google app (iOS or Android).

- Tap the new “Create” tab. In Lens, you’ll find a Create tab (marked with a banana emoji) alongside the other modes.

- Use the front camera or switch. By default, Create opens with the front-facing camera for selfies, but you can switch to the rear camera.

- Capture an image. Tap the banana-emoji shutter button to take a photo, or import an existing one. The image is then passed to AI Mode’s prompt box.

- Enter or refine a prompt. Once the image is captured, Lens directs you to the AI Mode prompt box, where you can describe how you want the image modified or what style you want to apply.

- Let the AI generate or edit. Nano Banana (Gemini 2.5 Flash Image) will process the prompt and perform image edits or generate new visuals based on your input.

- Download or share. After the generation/editing is complete, you can download the resulting image or share it. The images generally include a small Gemini sparkle watermark.

Caira vs. Gemini: What’s the real difference?

The biggest difference is that Nano Banana (Gemini 2.5 Flash Image) is software you access through apps like Gemini and Google Lens, while Caira is dedicated hardware.

Caira is built for real-time editing at the moment of capture, allowing users to change lighting, swap objects or apply stylistic effects before taking the shot. Gemini applies edits after a photo has been captured.

Caira’s convenience requires new hardware: a dedicated camera with a Micro Four Thirds sensor that mounts to your iPhone via MagSafe. Gemini works with the iPhone’s native camera and doesn’t require anything extra.

Caira isn’t available yet, while Gemini is already live and accessible to anyone. In short, Caira is a hardware bet on the future of AI-native photography, while Gemini lets you simulate much of that experience using the phone you already have.

Bottom line

Google didn’t build a camera, but it did build the technology that might reshape how we shoot photos.

The Caira camera is still on the horizon, but its core feature, AI-powered image editing, is already available in Gemini right now, albeit after capture rather than in real time. When the camera becomes available, I'll be curious to test it for latency, battery life, guardrails and creative control.

Until then, Nano Banana in Gemini and Google Lens is the best way to preview what AI-native photography might feel like.

Follow Tom's Guide on Google News and add us as a preferred source to get our up-to-date news, analysis, and reviews in your feeds.