Mira Murati was named interim CEO of OpenAI after cofounder Sam Altman was removed following “a deliberative review process by the board,” according to a blog post on the company’s site. This story was originally published on Fortune.com on Oct. 5, 2023. It was updated on Nov. 17, 2023, to reflect this news.

*

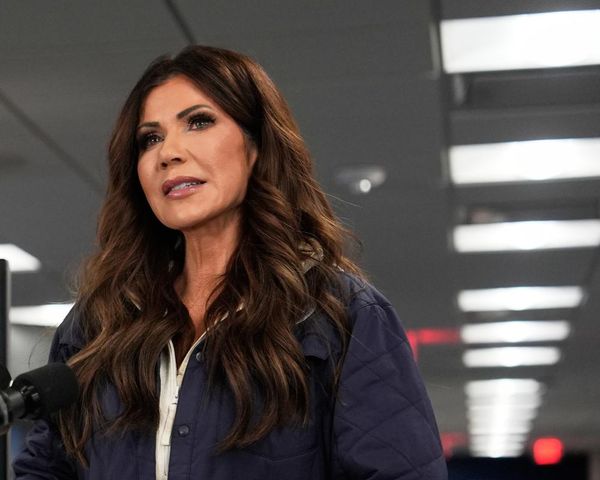

Mere moments before Mira Murati, the chief technology officer of OpenAI, meets us at a conference room in the company’s San Francisco headquarters, another executive exits the space: cofounder and CEO Sam Altman. The nerdy frontman of the AI revolution darts in from another room, gathers his belongings—which, underwhelmingly, consist of just a laptop—and shuffles away quietly, clearing the path for Murati to take center stage.

It’s Altman who’s typically the public face of the best-known company in AI; in fact, he’d recently flown back from Washington, D.C., from talks with Congress about regulation. Less well known, but just as crucial to OpenAI’s soaring ascent, is Murati, who often tinkers just outside of the spotlight. Murati, 34, is the executive who manages the popular chatbot ChatGPT as well as DALL-E, an AI system that creates art from text—the products that have propelled OpenAI, which started eight years ago as a nonprofit research lab, to unforeseen heights.

After ChatGPT launched in November 2022, it amassed more than 100 million monthly active users in just two months, making it the fastest-growing consumer application in history. And as these products continue to evolve, sometimes in response to embarrassing or even disturbing glitches, it’s Murati who’s increasingly responsible for explaining the latest iterations to a public that can seem hyper-attuned to every breakthrough and misstep.

Indeed, OpenAI has been credited with single-handedly ushering in the latest evolution of technology—so-called generative AI. All that attention is translating to real dollars, too. While it’s estimated that ChatGPT’s growth with consumers has slowed in recent months, usage among corporate customers is booming. This year, OpenAI is reportedly on track to rake in more than $1 billion in revenue. (That would be quite a leap: Documents that emerged as part of an investment round early this year suggested that OpenAI brought in just under $30 million in revenue in 2022.) And the company’s deal with software giant Microsoft, which Murati helped oversee, has brought in all sorts of new possibilities for distribution, not to mention a $10 billion investment.

Just as the plain-looking exterior of OpenAI’s office belies the highly stylized decor inside, Murati presents a casual appearance in jeans and a T-shirt, but an inner intensity is quickly apparent as you talk with her. She answers questions slowly and thoughtfully, with a tone that’s both relaxed and ardent. After all, Murati says she spends her time thinking not just about the latest ChatGPT features but also about whether AI will bring about the end of humanity.

Murati joined OpenAI in 2018, following a stint at Tesla. But she didn’t follow an obvious path to Silicon Valley’s most high-profile companies. She spent her formative years in her native Albania, growing up during the Balkan country’s shift from a totalitarian communist system to a more democratic government. The transition was sudden and somewhat chaotic, but she has said she credits the old regime with one thing: When everything else was equal, there was an intense competition for knowledge. And while the internet in Albania was quite slow back then, she was already seeking answers to her many questions (including how the human brain works), and looking for ways to apply technology to life’s biggest problems. At age 16, Murati left her home country after she was awarded a scholarship to attend an international school in Vancouver, Canada; she later got an engineering degree at Dartmouth, and eventually made her way to San Francisco for a senior product manager role on Tesla’s Model X.

Murati has set her sights on cultivating a higher intellect. One of the things that first drew her to OpenAI was the company’s belief that it can achieve artificial general intelligence—the sci-fi-like concept of a computer that can understand, learn, and think about things just like a human can. She’s an AGI believer, too, and an optimist when it comes to AI more broadly (no big surprise). But that doesn’t mean she’s not clear-eyed about some of the very real risks that AI poses, today and in the future.

We sat down with Murati (once Altman cleared the room) to learn more about her path to OpenAI, the challenges the company must grapple with as its products become ubiquitous, plus the latest versions of DALL-E and new features within ChatGPT, which will include voice commands for the first time—a move that could make the next iteration of generative AI even more user-friendly, and bring it (and its makers) one step closer to omnipresence.

This interview has been edited for brevity and clarity.

What are the best use cases you’re seeing with DALL-E and especially this new version [DALL-E 3, which integrates with ChatGPT to let users create prompts]?

We just put it in research preview, so we’ve yet to see how people will use it more broadly. And initially, we were quite focused on what are all the things that can go wrong.

But in terms of [good] use cases, I think there are creative uses. You can make something really customized—like if your kid is really into frogs and you want to make a story about it, in real time, and then they can contribute to the story. So there’s a lot of creativity and co-creation. And then I think in the workplace, prototyping ideas can be so much easier. It makes the iterative early cycle of building anything so much faster.

You have some additional product news. Can you walk us through the biggest highlights here?

So the overall picture, and this is what we’ve been talking about for two years, is that our goal is to get to AGI, and we want to get there in a way that makes sure that AGI goes well for humanity, and that we are building something that’s ultimately beneficial. To do that, you need these models to have a robust concept of the world, in text and images and voice and different modalities.

So what we’re doing is we’re bringing all of these modalities together, in ChatGPT. We’re bringing DALL-E 3 to ChatGPT, and I predict it will be a huge deal and just a wonderful thing to interact with. And [another] modality is voice. So you’ll now have the ability to just talk with ChatGPT in real time. I’m not going to do a demo. But you can just talk to it and it’s …

[Murati grabs her phone and opens ChatGPT, then proceeds to prompt the app to tell her “something interesting” via a voice command. The chatbot responds: “Did you know that honey never spoils?”]

So you are doing a demo.

It’s much easier to show than to describe. Basically where this is all going is, it’s giving people the ability to interact with the technology in a way that’s very natural.

Let’s take a step back: There’s so much interest not just in the product but the people making this all happen. What do you think are the most formative experiences you’ve had that have shaped you and who you are today?

Certainly growing up in Albania. But also, I started in aerospace, and my time at Tesla was certainly a very formative moment—going through the whole experience of design and deployment of a whole vehicle. And definitely coming to OpenAI. Going from just 40 or 50 of us when I joined and we were essentially a research lab, and now we’re a full-blown product company with millions of users and a ton of technologists. [OpenAI now has about 500 employees.]

How did you meet Sam, and how did you first come to OpenAI?

I’d already been working in AI applications at Tesla. But I was more interested in general intelligence. I wasn’t sure it was going to happen at that point, but I knew that even if we just got very close, the things we would build along the way would be incredible, and I thought it would definitely be the most important set of technologies that humanity has ever built. So I wanted to be a part of that. OpenAI was kind of a no-brainer, because at the time it was just OpenAI and [Google-owned] DeepMind working on this. And OpenAI’s mission really resonated with me, to build a technology that benefits people. I joined when it was a nonprofit, and then obviously since then we had to evolve—these supercomputers are expensive. [In 2019, OpenAI transitioned to a for-profit company, though it is still governed by a nonprofit board.]

At the time, I remember meeting Greg [Brockman] and Ilya [Sutskever] and Wojciech [Zaremba] and then Sam. And it just became very clear to me that this was the group of people I wanted to work with.

Take us back to when you first released ChatGPT into the world. Did you have any sense that it would be this big, that it would bring so much attention to the team?

No. We thought it would be significant, and we prepared for it. And then of course, all of the preparations became completely irrelevant, like, a few hours later. We had to really adapt and change the way that we operate. But I think that’s actually the key to everything that we’re doing because the pace of advancement in technology is just incredibly rapid.

We had to do that with ChatGPT. You know, we had been using it internally. And we were not so impressed with it ourselves, because we had already been using GPT-4. And so we didn’t think that there would be such a huge freak-out out there. But that was not the case.

One of the other things that happened as ChatGPT got released is this arms race. There’s Google, obviously, but also a bunch of other competitors. What are the downsides to this?

I think the downside is a race to the bottom on safety. That is the downside, for sure.

That’s a big downside.

Yes. Each of us has to commit not to do that and resist the pressures. I think OpenAI has the right incentives not to do that. But I also think competition is good because it can push advancement and progress. You can make it more likely that people can get products that they love. I don’t think competition is bad, per se. It’s more that if everyone is mainly motivated by just that, and losing sight of the risks and what’s at stake, that would be a huge problem.

Do you feel confident that as this competition keeps accelerating—and your investors expect a return—that those incentives can endure all of these pressures?

I feel confident in the structure of our incentives and the design that we have there. With our partners, too, the way we’ve structured things, we’re very aligned. Now, I think the part that is harder to say with confidence is being able to predict the emerging capabilities and to get ahead of some of the deployment risks. Because at the end of the day, you need to institutionalize and operationalize these things, and they can’t just be policies and ideas.

How do you make sure that you’re thinking through this with each new iteration? What are those conversations like as you go through worst-case scenarios?

It starts with the internal team, right? Before we even have a prototype, we’re just thinking hard about the data that we’re using and making sure we feel good about different aspects of it. It’s a very iterative cycle, and we learn things along the way, and we sort of iterate on that.

Once we have a prototype, the first thing we do is test it internally and put red-teamers [experts whose job it is to find vulnerabilities] in specific areas that we’re worried about. Like, if we see that the model is just incredibly good at making photorealistic faces, let’s say, then we will red-team that and see, how bad are the bad things that can be done with this model? What does misuse look like and harmful bias? And then based on those findings, we will go back and implement mitigations. But every time there is an intervention, we audit what the output looks like, because you want to make sure there’s some sort of balance—that you didn’t make the product completely useless or really frustrating to work with. And that’s a difficult balance, making it useful and wonderful and safe.

And so right now with DALL-E 3, if there is something in the prompt about making faces that we define as [having] sensitive characteristics, then the model will just reject the request. But you also don’t want to reject requests where the user actually wants the model to do something that’s not bad. And so this is the type of nuance that we need to deal with, but you need to start somewhere, and right now we are more conservative and less permissive because the technology is new. And then as we understand more of what the model is doing and get more input from red-teamers, we can use that data to make our policies or use cases more nuanced and be more permissive with what the technology does. That’s been the trend.

I know there are several copyright challenges at the moment. [In recent months, several writers and other creators have sued OpenAI, saying the company trains its models using their writing without consent or compensation.] Do you feel like these pose a longer-term threat to having the right training models that are extensive enough?

Right now we’re working a lot with publishers and content makers. I think at the end of the day, people want this technology to advance, to be useful and to enhance our lives. And we’re trying to understand what could work; we’re kind of at the forefront. We have to make some very difficult decisions, and we have to just work with people to understand what this could look like: what things like revenue share, the economics of it, look like. This is a different technology, so why are we using the same policies or mentalities that we were using before? And so we are working on this, but we don’t have a solution. It’s likely to be complex, but we are working with publishers and content makers to understand what to do here. But this is definitely complex, and this is going to evolve a lot. What we’re doing today is probably just at the nascency of figuring out the economics of data sharing and data attribution. When people produce and bring a lot of value to the emergent behavior of a model, how can you even measure that?

On the regulatory side, you’ve said you’re pro-regulation, but what would you say are the one or two most important pieces of AI regulation that you think should happen quickly? And what most concerns you about AI being unleashed into the world?

I think the most important thing that people can do right now is actually understand it. But looking ahead, we want these technologies to be integrated, like, deeply embedded in our infrastructure. And if these technologies are going to be in our electrical infrastructure, you need not only the technical aspects of it, but also the regulatory framework. We need to start somewhere in order for that to become a reality eventually.

Another area which is very important is just thinking about the models that will have what we call dangerous capabilities. There are two types of misuse: There is “normal” misuse, and there is a treacherous turn of these AI systems. And for the case where there is this treacherous turn, how should we think about it? Because this is not just about day-to-day use. It’s technology that will probably affect international politics more than anything that we’ve ever built. The closest case is that of nuclear weapons, of course. So it’s about how we can build regulation that will mitigate that.

We’ve seen two camps emerge on the future of AI—one being very utopian, and one that sees this as an existential threat. What’s your hope for what that future looks like?

I think they’re both possibilities. I’m very optimistic that we can make sure that it goes well. But the technology itself inherently carries both possibilities, and it’s not unlike other tools that we’ve built in the way that there is misuse and there is a possibility for really horrible things, even catastrophic events. And then what’s unique about this is the fact that there is also the existential threat that, you know, it’s basically the end of civilization. I think there is [only] a small chance of that happening, but there is some small chance, and so it is very much worth thinking about.

We have a whole team dedicated to this, and a ton of compute power. We call this project Superalignment, and it’s all about how we align these systems and make sure they’re always acting in accordance with our values. And that’s difficult.

This article appears in the October/November 2023 issue of Fortune with the headline “Mira Murati is shaping AI’s future—and maybe yours.”