As data center processors run hotter, companies are inventing increasingly creative ways to cool them. While Nvidia and its partners are reportedly experimenting with new cold plates and immersion cooling for next-generation AI GPUs, Microsoft proposes etching microfluidic channels into the back of the silicon die itself. The company says this can reduce peak chip temperature by up to 65% and remove heat up to three times better than cold plates.

Microsoft says it has developed a cooling system that routes coolant directly into microchannels etched into the back of the silicon die, guiding it to the hottest regions of the chip. Bringing liquid inside the chip greatly improves the efficiency of the whole setup, because the coolant can almost 'touch' the hot spots. With traditional liquid cooling or even immersion cooling, by contrast, heat has to pass through several layers of material before it reaches the coolant.
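A rough way to see why proximity matters is a one-dimensional, steady-state model of thermal resistances in series. In this model, a hot spot's temperature rise above the coolant equals the heat flow times the sum of the resistances along its path. The sketch below is a simplified illustration, not Microsoft's model. The function name, layer names, power and resistance values are all hypothetical placeholders chosen only to show the trend.

```python
# Simplified 1D steady-state model: heat flows through thermal resistances in
# series, so the hot-spot temperature rise above the coolant is P * sum(R_i).
# All names and numbers below are hypothetical, for illustration only.


def hotspot_temperature(power_w: float, coolant_c: float,
                        resistances_k_per_w: dict[str, float]) -> float:
    """Return the steady-state hot-spot temperature in degrees C."""
    total_r = sum(resistances_k_per_w.values())  # series resistances add up
    return coolant_c + power_w * total_r


# Hypothetical cold-plate path: heat crosses the die, interface materials,
# a heat spreader and the cold plate before reaching the liquid.
cold_plate_path = {
    "silicon_die": 0.010,
    "tim_1": 0.020,
    "heat_spreader": 0.010,
    "tim_2": 0.020,
    "cold_plate": 0.030,
}

# Hypothetical microfluidic path: coolant flows in channels etched into the
# die, so only a thin slice of silicon and channel-wall convection remain.
microfluidic_path = {
    "silicon_to_channel": 0.005,
    "channel_convection": 0.035,
}

POWER_W = 700.0    # hypothetical chip power
COOLANT_C = 30.0   # hypothetical coolant temperature

t_cold = hotspot_temperature(POWER_W, COOLANT_C, cold_plate_path)
t_micro = hotspot_temperature(POWER_W, COOLANT_C, microfluidic_path)
print(f"cold plate:   {t_cold:.1f} C")
print(f"microfluidic: {t_micro:.1f} C")
```

The specific temperatures depend entirely on the made-up values. The point is only the trend: removing layers from the heat path lowers the temperature rise for the same coolant temperature. Equivalently, it allows warmer coolant for the same hot-spot temperature.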

To optimize thermal routing, Microsoft worked with Swiss startup Corintis, which used artificial intelligence to refine the channel geometry. Rather than simple straight lines, the final layout mimics patterns found in nature, such as leaf veins or butterfly wings, to guide fluid more efficiently. The microchannels must also be sized carefully: large enough to carry coolant effectively, but not so deep that they weaken the silicon and risk mechanical failure.

However, etching microfluidic channels requires a separate process sequence during chip manufacturing, which adds steps and cost. Alternatively, according to a patent, Microsoft proposes producing a microfluidic heat-transfer structure separately and using it to cool one or two chips. Such integration requires new packaging methods to prevent coolant leaks and maintain durability.

Microsoft says it has now identified a suitable coolant, developed precise etching techniques, and integrated them into chip production. As a result, the company considers the technology ready for full-scale production within its own chip development pipeline. Third parties may license the technology from Microsoft Technology Licensing, LLC.

"Microfluidics would allow for more power-dense designs that will enable more features that customers care about and give better performance in a smaller amount of space," said Judy Priest, corporate vice president and chief technical officer of Cloud Operations and Innovation at Microsoft. "But we needed to prove the technology and the design worked, and then the very next thing I wanted to do was test reliability."

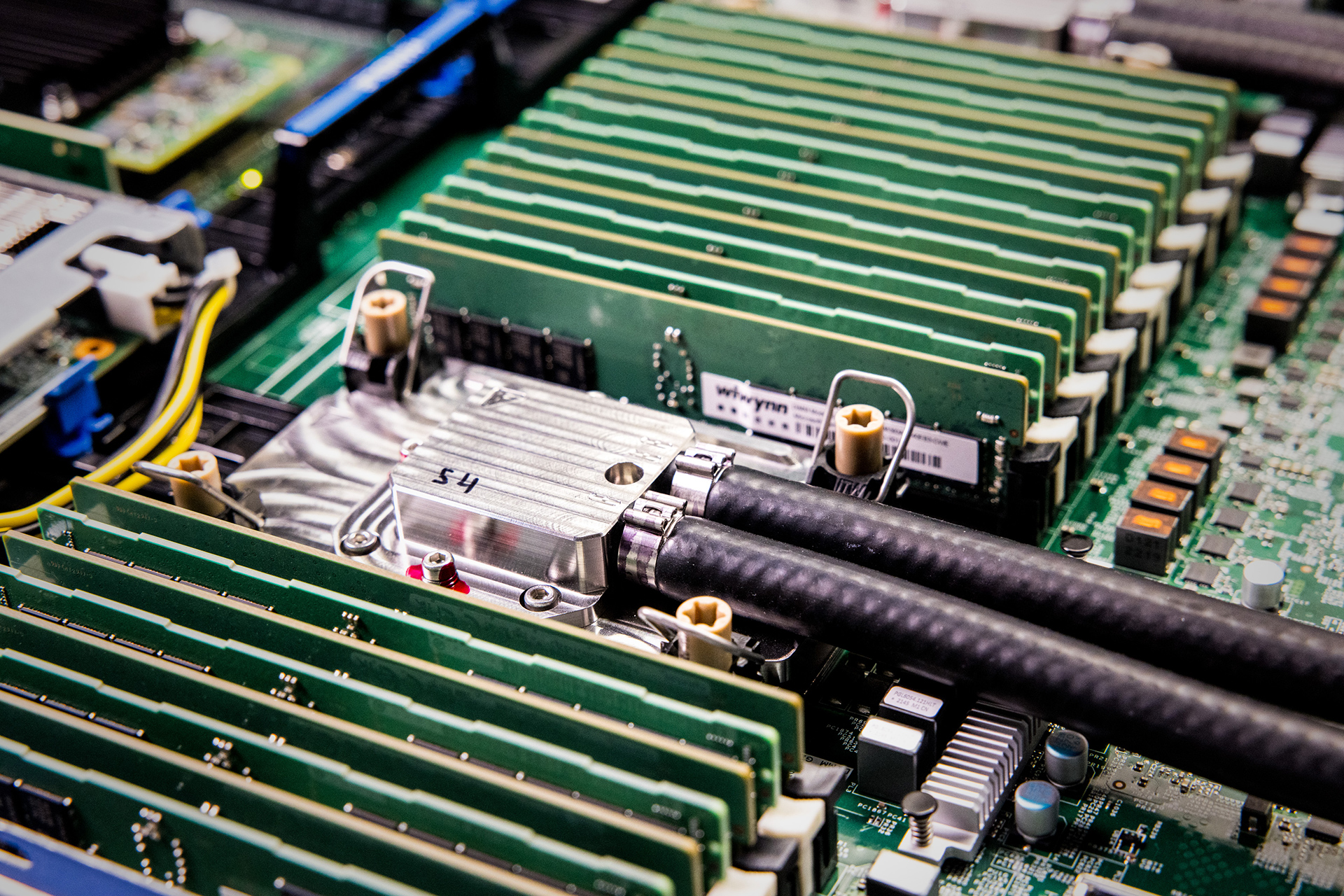

Microsoft is testing the cooling solution on servers running simulated Teams workloads. Teams spreads uneven heat loads across many services, with demand peaks that tend to occur on the hour or on the half-hour. Better cooling lets these servers run at higher burst performance during peaks. That, in turn, reduces the number of servers that would otherwise be provisioned for those peaks and sit idle the rest of the time.

Lab tests showed that the technique can reduce peak silicon temperatures by up to 65%, depending on the specific chip and workload. Compared to cold plates, it removed heat up to three times better, again depending on workload and setup. Microsoft also noted that the method does not rely on ultra-low coolant temperatures, which saves the energy otherwise needed for chilling.

Microsoft has been experimenting with microfluidic liquid cooling for years and demonstrated its first prototype in 2022, so the company has accumulated plenty of lab experience with the technology. However, Microsoft is not the only company developing methods to embed coolant channels inside a chip or chip package: IBM also holds relevant patents.
