Plans by toy giant Mattel to add ChatGPT to its products could risk “inflicting real damage on children”, according to child welfare experts.

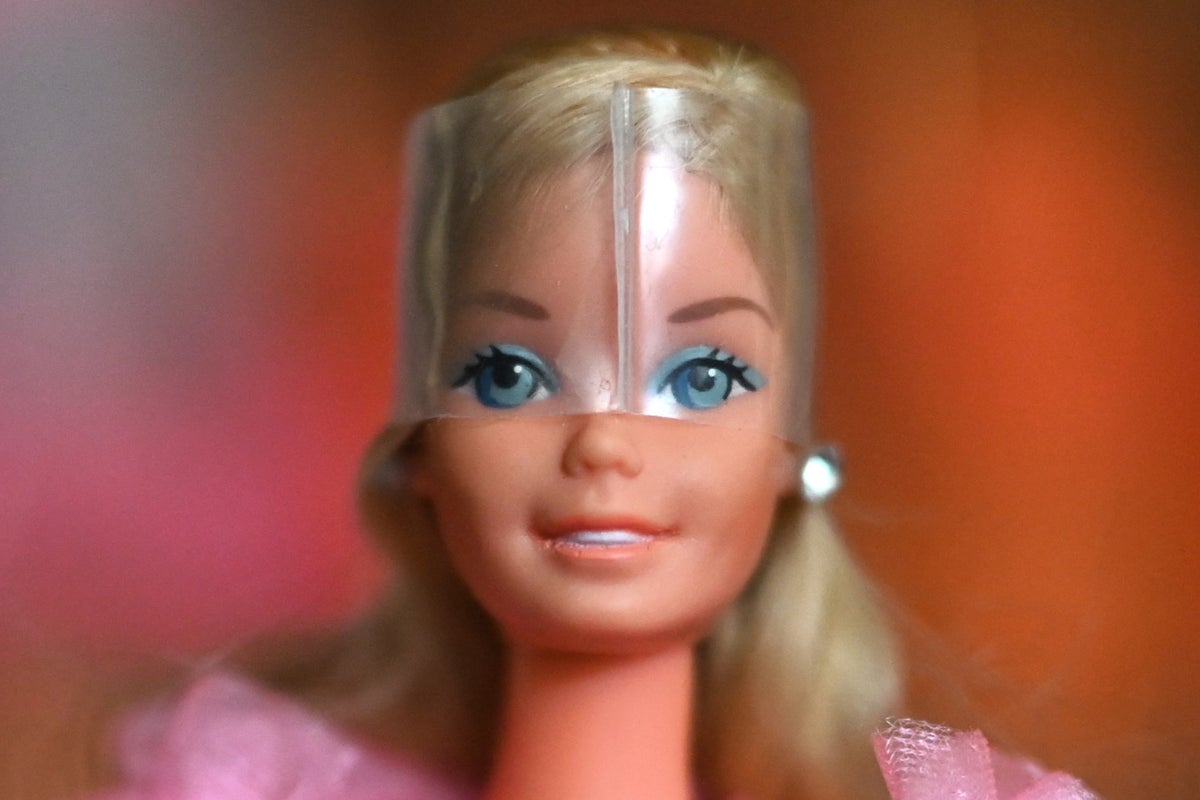

The Barbie maker announced a collaboration with OpenAI last week, hinting that future toys would incorporate the popular AI chatbot.

The announcement did not contain specific details about how the technology would be used, but Mattel said the partnership would “support AI-powered products and experiences” and “bring the magic of AI to age-appropriate play experiences”.

In response, child welfare advocates have called on Mattel not to place the experimental technology in children’s toys.

“Children do not have the cognitive capacity to distinguish fully between reality and play,” Robert Weissman, co-president of the advocacy group Public Citizen, said in a statement.

“Endowing toys with human-seeming voices that are able to engage in human-like conversations risks inflicting real damage on children.”

The potential harms cited by Mr Weissman include undermining children’s social development and interfering with their ability to form peer relationships.

“Mattel should announce immediately that it will not incorporate AI technology into children’s toys,” he said. “Mattel should not leverage its trust with parents to conduct a reckless social experiment on our children by selling toys that incorporate AI.”

The Independent has reached out to OpenAI and Mattel for comment.

Mattel and OpenAI’s collaboration comes amid growing concerns about the impact generative artificial intelligence systems like ChatGPT have on users’ mental health.

Chatbots have been known to spread misinformation, confirm conspiracy theories, and even allegedly encourage users to kill themselves.

Psychiatrists have also warned of a phenomenon dubbed “chatbot psychosis”, brought about by interactions with AI.

“The correspondence with generative AI chatbots such as ChatGPT is so realistic that one easily gets the impression that there is a real person at the other end,” Soren Dinesen Ostergaard, a professor of psychiatry at Aarhus University in Denmark, wrote in an editorial for the Schizophrenia Bulletin.

“In my opinion, it seems likely that this cognitive dissonance may fuel delusions in those with increased propensity towards psychosis.”

OpenAI boss Sam Altman said this week that his company was making efforts to introduce safety measures to protect vulnerable users, such as cutting off conversations that involve conspiracy theories, or directing people to professional services if topics like suicide come up.

“We don’t want to slide into the mistakes that I think the previous generation of tech companies made by not reacting quickly enough,” Mr Altman told the Hard Fork podcast.

“However, to users that are in a fragile enough mental place, that are on the edge of a psychotic break, we haven’t yet figured out how a warning gets through.”