Lord of the Rings: Gollum — Introduction

The Lord of the Rings: Gollum marks the fourth in a string of relatively bad PC game releases based on Unreal Engine 4. We've previously looked at Dead Island 2, Redfall, and Star Wars Jedi: Survivor, and found plenty of concerns. Jedi: Survivor is at least a decent game, especially if you're a fan of the Star Wars setting. The other two were lackluster, at best... and now we have Gollum joining the group. Even fans of the Lord of the Rings setting will likely have a tough time with this one.

PC Gamer scored it a 64, which is one of the higher scores we've seen. Metacritic has an overall average score of just 41. How bad is the game? Daedalic Entertainment went so far as to issue a public apology. Which, okay, that's certainly not the first time we've seen an apology after a dodgy release, but still.

A few words from the "The Lord of the Rings: Gollum™" team: pic.twitter.com/adPamy5EjO (May 26, 2023)

Many complaints stem from technical issues and performance, but the retail release had a day-0 patch to potentially address some of those problems. Given the above tweet, you can probably guess that plenty of work remains. But if you're curious, we've been running benchmarks to see just how poorly Gollum runs on some of the best graphics cards.

Let's get to it.

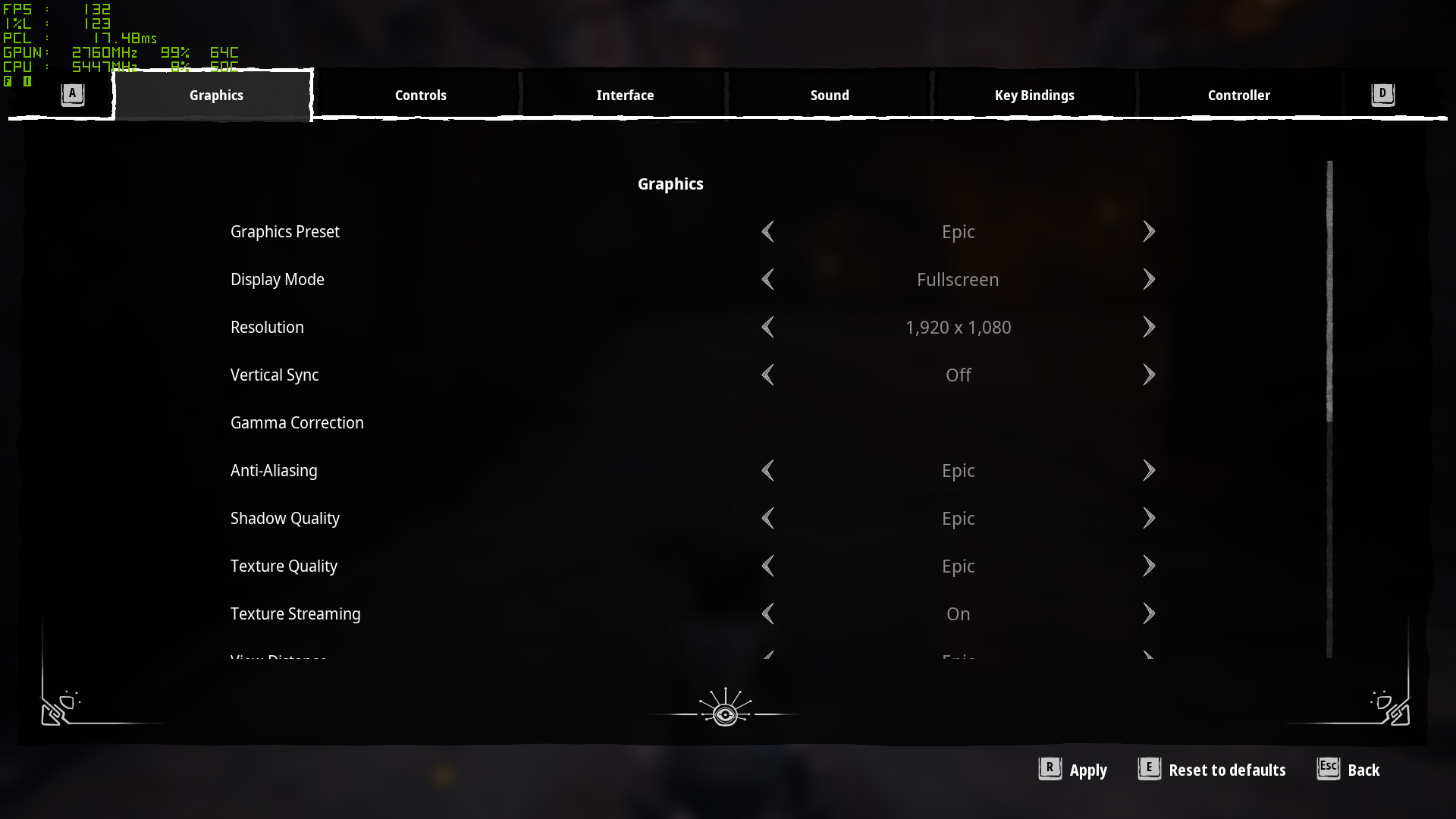

Lord of the Rings: Gollum — Settings

There are plenty of settings to tweak to potentially help the game run better. Along with four presets (Low, Medium, High, and Epic), there are eight advanced settings, plus the usual stuff like resolution, vsync, and fullscreen/windowed modes. Gollum also supports DLSS 2 upscaling, DLSS 3 Frame Generation, and FSR 2 upscaling.

We need to stop for a moment to talk about upscaling, though. The default anti-aliasing mode tends to be pretty blurry — typical of temporal AA, but Unreal Engine 4 often seems excessively blurry. If you have an Nvidia card and enable DLSS, there's a sharpening option, and it makes things look way better. This is a game where, the way it stands right now, DLSS legitimately looks better than native. FSR 2 meanwhile doesn't get a sharpening filter (even though it's supported in other games), and it makes things even blurrier than TAA. It's... not good.

And that's a problem, because a lot of GPUs are going to need help to run Gollum at decent framerates. Or maybe that's not a bad thing, considering the game itself. Anyway, here's a gallery comparing native, DLSS upscaling modes, and FSR 2 upscaling modes.

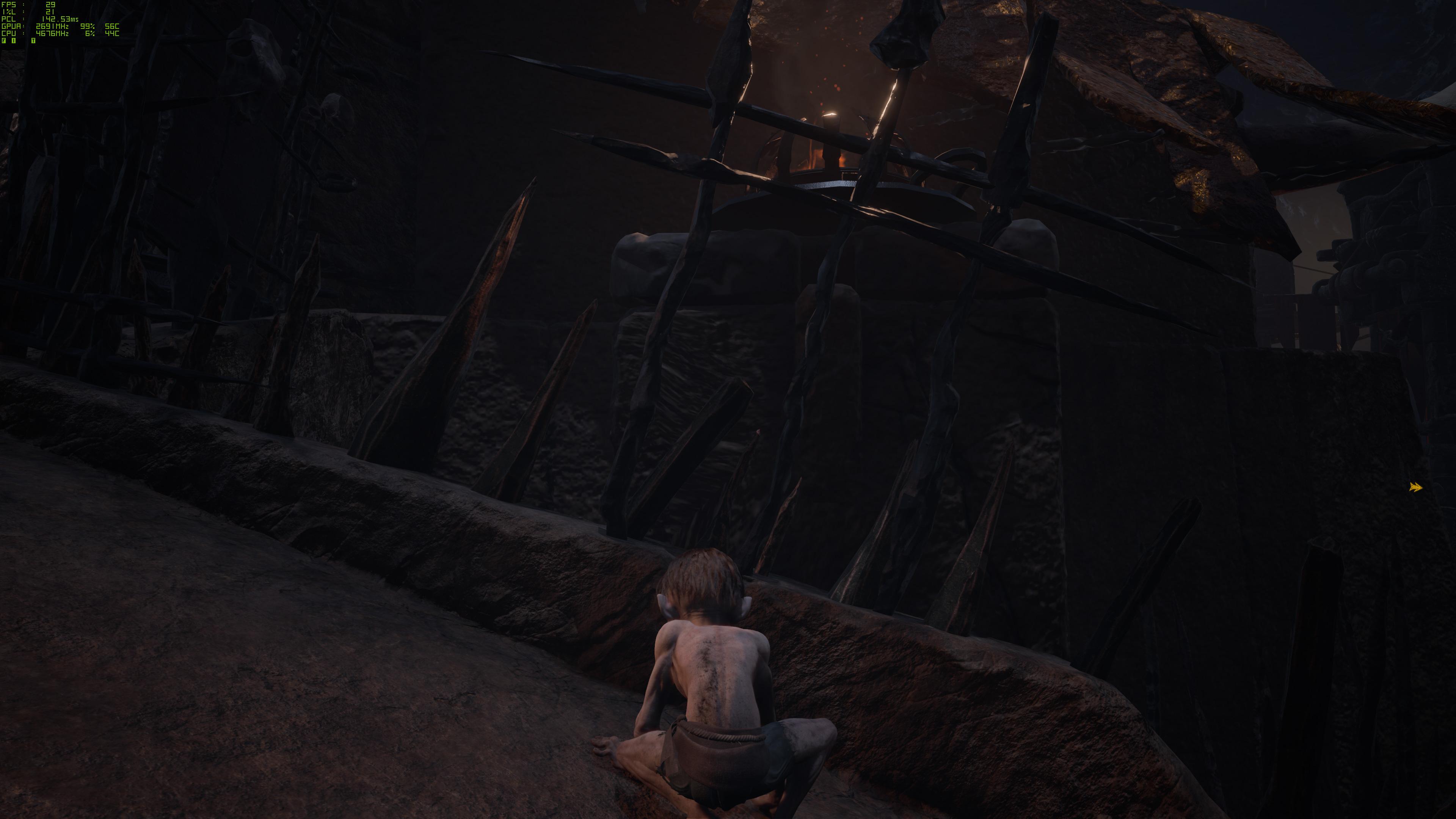

If you flip through the above gallery (you'll want a high resolution screen and you'll want to view the original 4K images), you should immediately see what we're talking about. The Nvidia and AMD images are taken on different cards (an RTX 4070 Ti and an RX 7900 XT). Don't get too hung up on the performance in the corner for now, as these aren't full benchmarks, but you'll note that both cards are seriously struggling at 4K native with epic settings and ray tracing enabled.

More importantly, while the non-upscaling results look basically identical (other than minor variations in Gollum's position and the smoke, and a few other minor differences), the upscaled results are wildly divergent. The RTX 4070 Ti with DLSS looks sharper, especially on the distant building but also on Gollum and other objects. The RX 7900 XT with FSR 2 becomes blurrier on those same objects.

What's also curious is that the DLSS results look virtually identical in all three modes, though Quality mode perhaps looks slightly better. Performance also doesn't change that much when going beyond Balanced mode, and when we turned off RT, all three modes got the same ~90 fps. With FSR 2, there were clear performance differences to go with the change in fidelity, and the Performance mode upscaling looks especially blurry.

We suspect FSR 2's sharpening option would help quite a bit, but these are the current results. DLSS ends up being almost a requirement to get better visuals, while FSR 2 is more of a requirement if you want to enable ray tracing on a non-Nvidia GPU, especially at 1440p and 4K.
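For a rough sense of why the upscaling modes differ so much in both speed and sharpness, it helps to look at the internal render resolution each mode implies. The sketch below uses the per-axis scale factors AMD publishes for FSR 2's standard modes (DLSS uses similar ratios). The names `FSR2_SCALE` and `render_resolution` are our own, and whether Gollum applies exactly these ratios is an assumption.

```python
# Per-axis scale factors published for FSR 2's standard modes.
# Assumption: Gollum uses these defaults; the game doesn't document its ratios.
FSR2_SCALE = {"Quality": 1.5, "Balanced": 1.7, "Performance": 2.0}


def render_resolution(width, height, mode):
    """Return the approximate internal resolution before upscaling."""
    scale = FSR2_SCALE[mode]
    return round(width / scale), round(height / scale)


if __name__ == "__main__":
    native_pixels = 3840 * 2160
    for mode in FSR2_SCALE:
        w, h = render_resolution(3840, 2160, mode)
        share = (w * h) / native_pixels
        print(f"4K {mode}: {w}x{h} ({share:.0%} of native pixels)")
```

At 4K, Quality mode works out to 2560x1440 and Performance mode to 1920x1080, so Performance mode renders only a quarter of the native pixels. That leaves the upscaler with far less detail to work from, which fits the extra blur we saw with FSR 2.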

Let's also quickly discuss Texture Quality. Above, you can see the results of 4K with the Epic preset running on an RTX 4060 Ti (without ray tracing). Then we turned down Texture Quality and Shadow Quality to High and Medium. What should be pretty obvious is that this doesn't make much of a difference in image fidelity, even on a card that's clearly hitting VRAM limits. Performance, on the other hand, goes from 10 fps to 16 fps at high, and then 19 fps at medium, which is not great but definitely improved.
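To put those numbers in relative terms, here's a minimal sketch that converts the fps figures quoted above into percentage gains over the Epic baseline. The helper name `percent_gain` is ours; the 10, 16, and 19 fps values come straight from the text.

```python
def percent_gain(baseline_fps, new_fps):
    """Relative improvement over the baseline, in percent."""
    return (new_fps - baseline_fps) / baseline_fps * 100.0


if __name__ == "__main__":
    epic = 10.0  # fps at 4K Epic on the RTX 4060 Ti, as quoted in the text
    for label, fps in (("High", 16.0), ("Medium", 19.0)):
        gain = percent_gain(epic, fps)
        print(f"{label}: {fps:.0f} fps, +{gain:.0f}% vs. Epic")
```

That's roughly a 60% gain at High and 90% at Medium for the texture and shadow settings, which is large in relative terms but still leaves the card well below 30 fps.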

There's a catch, however: We've turned Texture Streaming off. That means that the game basically loads everything into RAM/VRAM, which can hurt performance. Why would we do that, though? Simple: Enabling Texture Streaming on cards with less than 16GB of memory can cause some serious texture popping to occur, effectively meaning lower VRAM cards both look terrible and aren't really running the same workload as cards with sufficient VRAM.

Here's a sample using an RX 6750 XT 12GB, with and without Texture Streaming.

There's a massive difference between the two images, and while we used 4K Epic to better illustrate the problem, we saw plenty of texture popping on 12GB cards even at 1080p Epic. If you have a GPU with only 8GB of VRAM, you'll even see major texture popping going on at 1080p medium!

We didn't do extensive testing, but if you have a card with at least 16GB of VRAM, you can probably enable Texture Streaming and after the first few seconds in a level, you won't notice much in the way of texture popping. Performance also tended to be higher, perhaps even 15% higher, but it will depend on the GPU, how much memory it has, and the level of the game.

We felt the overall experience was better with Texture Streaming off, at least for the current version of the game (0.2.51064). This is really more of a game engine problem and something the developers should work to fix, as normally texture streaming is the smart way to handle things. However, loading in maximum resolution textures versus apparently minimum resolution textures, and then swapping between them, definitely doesn't result in a great looking experience.

Lord of the Rings: Gollum — Image Quality

Wrapping up our settings overview and analysis of how Lord of the Rings: Gollum looks, we have the above three comparisons between the various presets and other settings.

While ray tracing is exceptionally demanding in Gollum, there's also no question that it can make some areas of the game look better. The reflections off water and puddles for example look far better, and the shadows are more accurate. Are they worth the potential massive hit to performance? Well, that's a bit harder to say.

In general, with DLSS, you can get relatively close to the same performance with RT enabled as you'd get with no DLSS and no RT. For higher end Nvidia GPUs, you can make a decent argument for running at 1080p or perhaps even 1440p with maxed out settings plus DLSS.

For AMD, it's a bit harder to say if RT is even worth considering. The RX 7900 cards for example can provide good performance at 1080p and maxed out settings with FSR 2 upscaling, but the upscaling definitely reduces image quality. Unfortunately, there's no option to selectively enable RT effects, so if you only want RT reflections as an example, that's not possible using the in-game settings.

With Texture Streaming disabled, the main difference we saw with the low, medium, high, and epic presets is with the distance at which objects and lights (torches) become visible. The second group of images in the above gallery best illustrates this.

Lord of the Rings: Gollum — Test Setup

TOM'S HARDWARE TEST PC

Intel Core i9-13900K

MSI MEG Z790 Ace DDR5

G.Skill Trident Z5 2x16GB DDR5-6600 CL34

Sabrent Rocket 4 Plus-G 4TB

be quiet! 1500W Dark Power Pro 12

Cooler Master PL360 Flux

Windows 11 Pro 64-bit

Samsung Neo G8 32

GRAPHICS CARDS

AMD RX 7900 XTX

AMD RX 7900 XT

AMD RX 7600

AMD RX 6000-Series

Intel Arc A770 16GB

Intel Arc A750

Nvidia RTX 4090

Nvidia RTX 4080

Nvidia RTX 4070 Ti

Nvidia RTX 4070

Nvidia RTX 4060 Ti

Nvidia RTX 30-Series

We're using our standard 2023 GPU test PC, with a Core i9-13900K and all the other bells and whistles. We've tested 19 different GPUs from the past two generations of graphics card architectures in Lord of the Rings: Gollum.

We're also testing at 1920x1080, 2560x1440, and 3840x2160. For 1080p, we test with the medium and epic presets. Then for all three resolutions, we test with the epic preset, with and without ray tracing, plus with Quality mode upscaling and with RT enabled. For Nvidia's RTX 40-series cards, we additionally tested with Frame Generation enabled (so, Epic preset, RT, Quality upscaling, and Frame Gen).
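The test matrix described above can be sketched as a short list builder. This is only an illustration of the combinations as we've described them; the function name and the label strings are hypothetical, not part of any benchmark tool.

```python
RESOLUTIONS = ["1920x1080", "2560x1440", "3840x2160"]


def build_test_matrix(supports_frame_gen):
    """List the (resolution, settings) pairs tested on one GPU."""
    # The medium preset is only tested at 1080p.
    matrix = [("1920x1080", "Medium")]
    for res in RESOLUTIONS:
        matrix.append((res, "Epic"))
        matrix.append((res, "Epic + RT"))
        matrix.append((res, "Epic + RT + Quality upscaling"))
        if supports_frame_gen:  # RTX 40-series cards only
            matrix.append((res, "Epic + RT + Quality upscaling + Frame Gen"))
    return matrix


if __name__ == "__main__":
    for res, settings in build_test_matrix(supports_frame_gen=True):
        print(res, "|", settings)
```

That's up to 10 configurations per GPU, or 13 on an RTX 40-series card, before skipping the combinations that clearly don't make sense on slower hardware.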

There are many results that aren't particularly usable, meaning sub-30 fps performance. We skipped testing on lower GPUs where it clearly didn't make any sense, though a few results are still present to show, for example, exactly why you shouldn't even think of trying to run at 4K with maxed out settings and ray tracing on an 8GB card.

We run the same path each time, in the second chapter (Maggot), through the initial area of the mines. Each GPU was tested a minimum of two times at each setting, and if the results weren't consistent (which was often the case), we ran additional tests. Once we saw stable results for several runs, we took the best-case value.
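As a rough sketch of that selection process: keep running until the most recent results agree with each other, then take the best of them. The `window` and `tolerance` values here are hypothetical, since we didn't use a fixed numeric cutoff, and the sample fps values are made up purely for illustration.

```python
def best_stable_result(runs, window=2, tolerance=0.03):
    """Return the best fps from the last `window` runs if they agree.

    `window` and `tolerance` are hypothetical values for illustration.
    Returns None when the results are still inconsistent and more
    runs are needed.
    """
    if len(runs) < window:
        return None
    recent = runs[-window:]
    spread = (max(recent) - min(recent)) / max(recent)
    if spread > tolerance:
        return None  # still inconsistent: run the benchmark again
    return max(recent)


if __name__ == "__main__":
    # Made-up average fps values, not measured results.
    print(best_stable_result([41.2, 47.9]))        # inconsistent
    print(best_stable_result([41.2, 47.9, 48.3]))  # last two runs agree
```

Taking the best-case value rather than the mean favors the run least affected by background hiccups, which matters in a game where the first pass or two is often the slowest.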

Something else worth pointing out is that Gollum (and/or Unreal Engine 4) has some mouse input smoothing going on. At high fps values, everything is generally fine, though the mouse can at times feel a bit sluggish (e.g. at 1080p medium on an RTX 4090 or RX 7900 XTX). Once the fps starts to fall below 30, mouse control becomes a lot less precise, where a fractional movement can cause a relatively significant skewing of the viewport. And if you're in the single digits, it becomes almost impossible to control Gollum with any form of precision. Basically, when we say 25 fps and lower are "unplayable," we really mean it for this game.
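Part of the reason low framerates feel so bad is simply how long each frame sits on screen. Here's a minimal sketch that converts fps to frame time and buckets results using the rough thresholds we use in this article. The bucket labels and function names are our own shorthand, and the game's input smoothing isn't modeled at all.

```python
def frame_time_ms(fps):
    """Time each frame stays on screen, in milliseconds."""
    return 1000.0 / fps


def playability(avg_fps):
    """Bucket average fps using this article's rough thresholds."""
    if avg_fps <= 25:
        return "unplayable"
    if avg_fps < 30:
        return "borderline"
    if avg_fps < 60:
        return "playable"
    return "smooth"


if __name__ == "__main__":
    for fps in (8, 25, 30, 60, 120):
        print(f"{fps} fps = {frame_time_ms(fps):.1f} ms per frame: {playability(fps)}")
```

At 8 fps, each frame lasts 125 ms, so any mouse movement is only reflected on screen an eighth of a second later at best, before smoothing adds its own delay.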

And with that out of the way, let's get to the benchmark results.

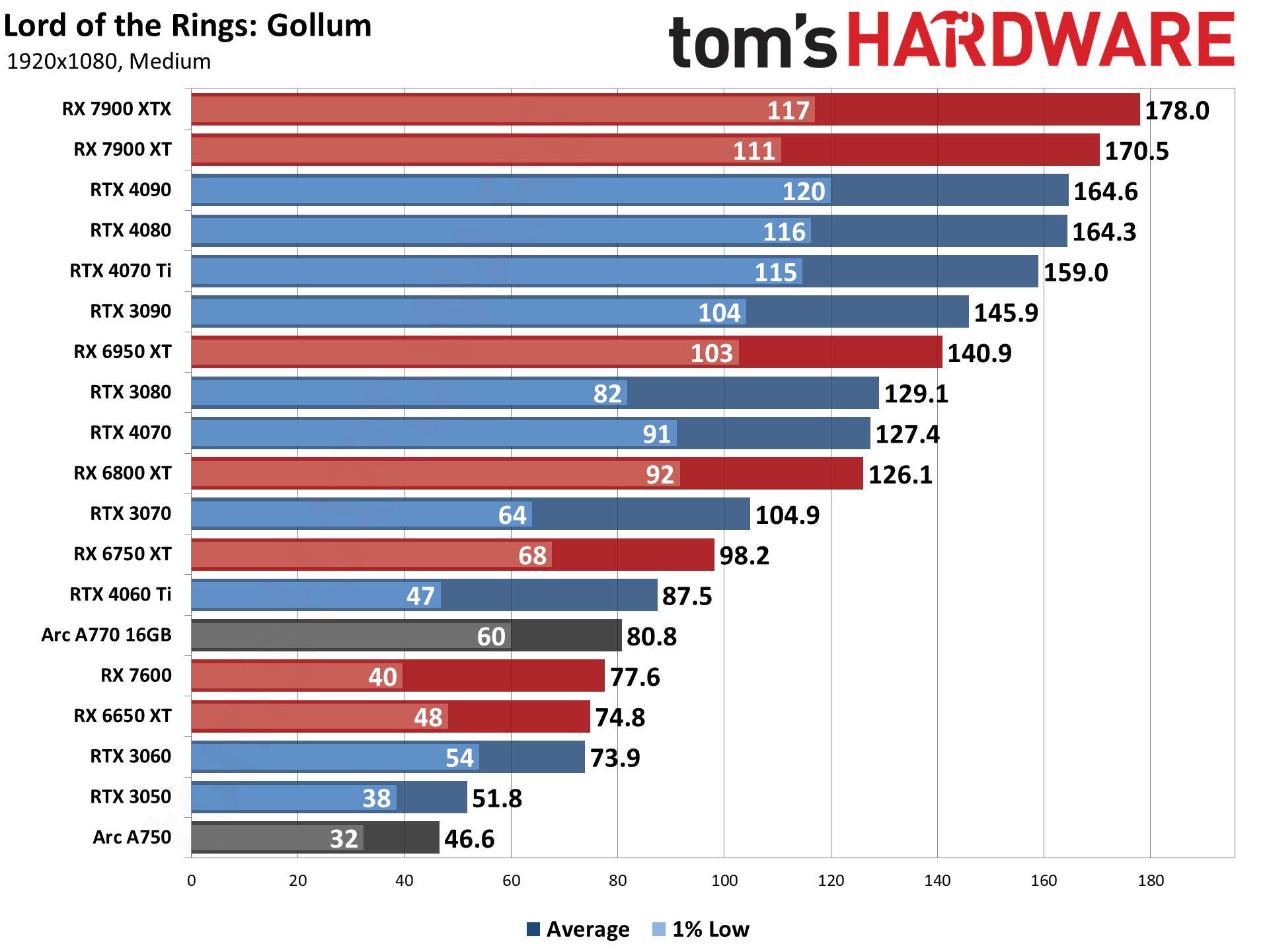

Lord of the Rings: Gollum — 1080p Medium Performance

Even our baseline test at 1080p medium wasn't smooth sailing for all the GPUs we tested. And we're testing decent hardware, with the RTX 3050 and Arc A750 being the slowest GPUs in our test suite.

Curiously, only the Arc A750 gave us a warning about the game "requiring" (recommending?) 16GB, and that running it on a GPU with only 8GB might result in less than ideal performance. That warning wasn't lying, but we didn't see it with the 8GB AMD and Nvidia cards we tested. Anyway, here are the results.

The good news is that every GPU was at least "playable," breaking 30 fps. But we're looking at 1080p medium, which should be playable on far lesser GPUs. The bad news is that cards with 8GB of VRAM are already struggling quite badly. This is most apparent when you look at the poor 1% low fps on GPUs like the 3050, 6650 XT, 7600, 4060 Ti, and 3070.
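For readers unfamiliar with the metric, here's a sketch of one common way to calculate 1% low fps from captured frame times: average the slowest 1% of frames and convert that back to fps. Treat the exact method as an assumption, since capture tools differ in the details, and note that the sample frame times are invented to illustrate the effect.

```python
def average_fps(frame_times_ms):
    """Average fps over the whole run."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def one_percent_low_fps(frame_times_ms):
    """Average fps over the slowest 1% of frames (one common definition)."""
    worst_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(worst_first) // 100)
    slowest = worst_first[:count]
    return 1000.0 * len(slowest) / sum(slowest)


if __name__ == "__main__":
    # Invented data: 990 smooth frames at 10 ms plus 10 stutters at 50 ms.
    times = [10.0] * 990 + [50.0] * 10
    print(f"average: {average_fps(times):.1f} fps")
    print(f"1% low:  {one_percent_low_fps(times):.1f} fps")
```

In this made-up run, the average lands around 96 fps while the 1% low is just 20 fps. That's the pattern to look for on the 8GB cards: a respectable average hiding frequent stutters.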

Average fps was above 60 for everything except the RTX 3050 and Arc A750. Also, look at the gap between the A750 and A770 16GB — the latter is normally only about 10–15 percent faster than the A750, according to our GPU benchmarks hierarchy, but in Lord of the Rings: Gollum, there's a significant 73% advantage for the 16GB card!

If you want to keep 1% lows above 60 fps, the A770 16GB will suffice, along with the RX 6750 XT and RTX 3070. The RTX 3060 also gets reasonably close, and if you enable DLSS (which, as noted above, you should) it will also easily clear that mark.

Raw memory bandwidth (i.e. not accounting for larger caches) also factors into the performance, however. The RTX 3070 as an example blows past the RTX 4060 Ti. The RX 7600 meanwhile seems like maybe the drivers are a bit less optimized than on the RX 6650 XT, as the 1% lows tended to be far worse.

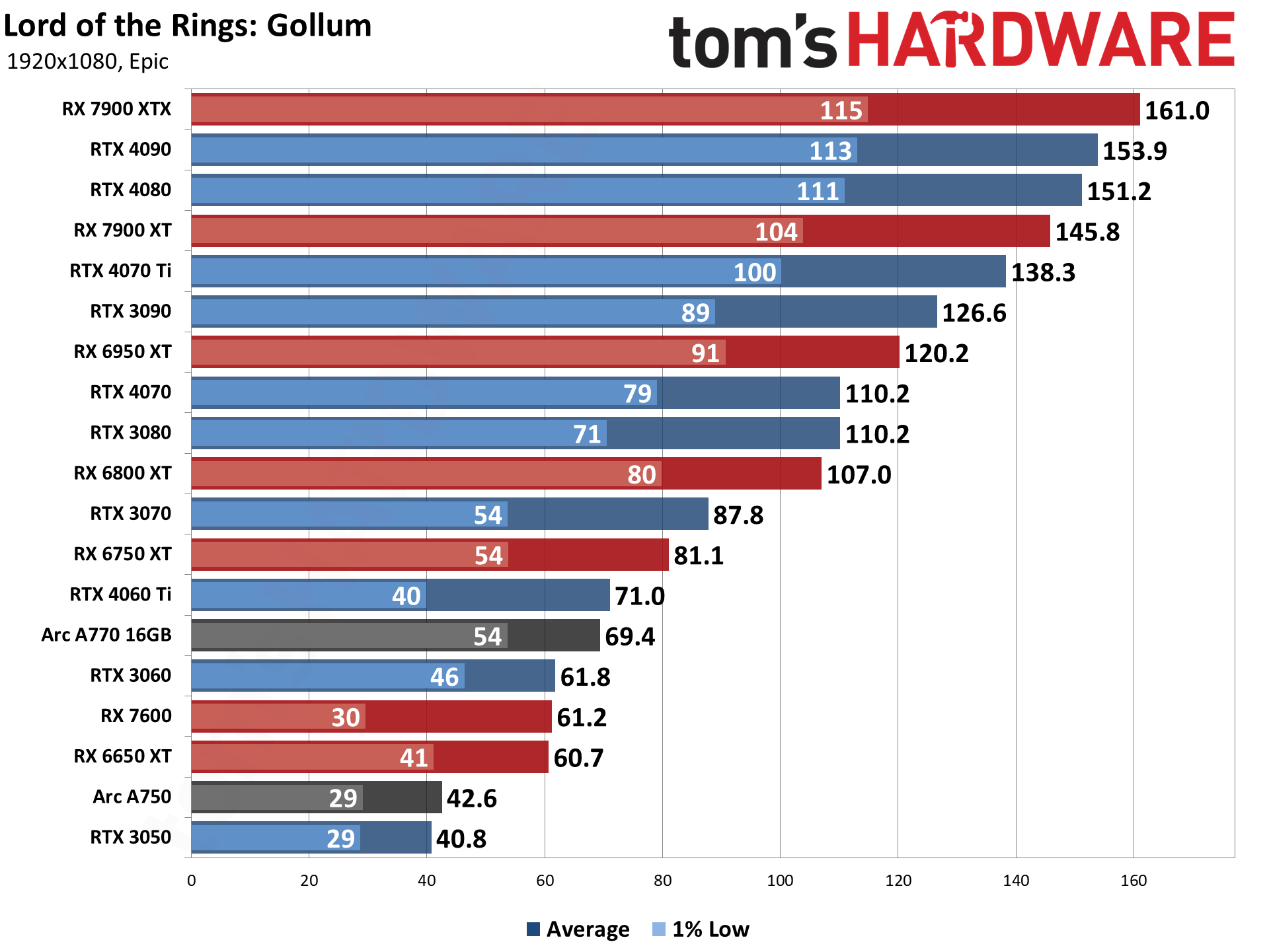

Lord of the Rings: Gollum — 1080p Epic Performance

We're going to include separate charts for Epic, Epic + Ray Tracing, Epic + Ray Tracing + Upscaling, and Epic + Ray Tracing + Upscaling + Frame Generation for the remaining sections. We'll start with the baseline Epic performance.

As before, only two of the tested GPUs fall below 60 fps, but quite a few others are just barely getting by. 1% lows are also below 60 on the RTX 3070 and below. Basically, you really need an AMD card with at least 12GB VRAM, or an Nvidia card with at least 10GB VRAM. The RTX 3060 has 12GB, but significantly less compute, so it also falls below 60 on its 1% lows.

And if you think performance looks bad in the above chart — even though the RX 6800 XT and above clear 100 fps — just wait until you see what happens when we turn on ray tracing.

With Epic and ray tracing at native 1080p, AMD GPUs lose over half of their performance — and significantly more than that on the 8GB VRAM cards. Nvidia does better overall, but the RTX 3080 and RTX 4070 still lose almost half of their baseline Epic performance. Intel's Arc GPUs also lose about half their performance.

While most of the GPUs would at least be playable at 1080p Epic, without upscaling you'd pretty much need an RX 6800 XT or higher to get a decent experience. Also notice that the RTX 3060 pulls ahead of the RTX 3070 and RTX 4060 Ti here, which shows just how much VRAM Gollum consumes.

Thankfully, upscaling helps quite a bit. Nearly everything gets back to at least mostly playable performance, though 1% lows are still questionable on many of the cards with 8GB of memory. And, as discussed earlier, DLSS looks a lot better than FSR 2 right now, so the above chart isn't really apples-to-apples.

In an interesting twist, while Gollum is a great game in some ways for making Nvidia look a lot stronger than AMD, there are also clear exceptions to that rule. The RTX 4060 Ti looks terrible here, for a brand-new $400 graphics card.

But here's the thing: We really don't put too much stake in these results. Lord of the Rings: Gollum is so clearly plagued by poor optimization and questionable coding that we fully expect to see some patches that will greatly improve performance. Hopefully, they'll fix FSR 2 upscaling by adding a sharpening filter — and including a sharpening filter outside of FSR 2 and DLSS would be a good idea.

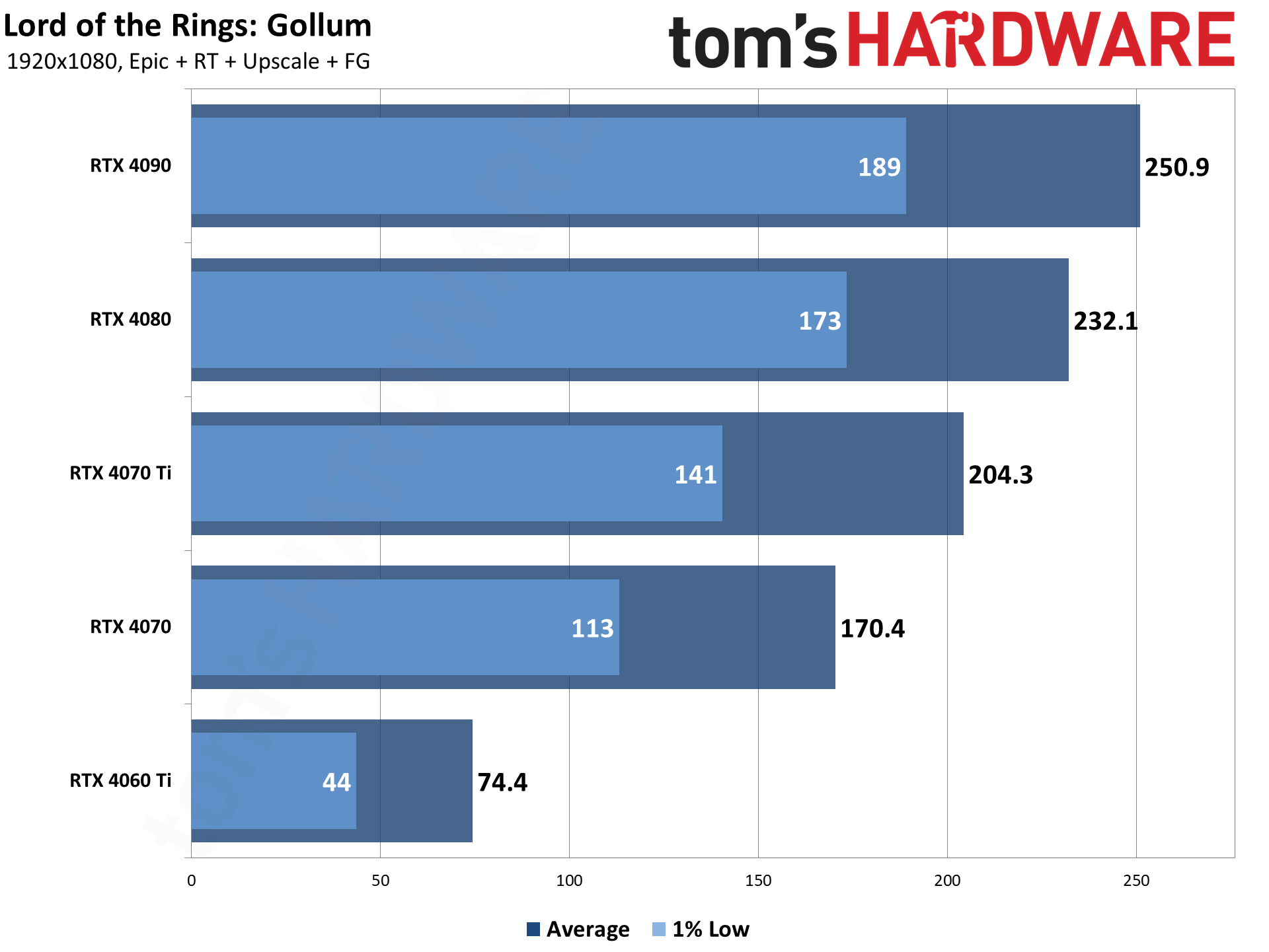

If you swipe left on the above gallery, you can also see the Frame Generation results for the RTX 40-series cards. These provide a massive improvement in frames to screen, but that's not the same as frames per second. The generated frames incorporate no new user input and add latency, so what you're really getting is a frame smoothing algorithm. That's not necessarily a bad thing, but the RTX 4060 Ti for example still feels like it's running at about 40 fps, even though the number of frames being sent to your display is nearly double that value.
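As a back-of-the-envelope check, you can estimate the "felt" framerate behind a Frame Generation result by assuming one generated frame for every rendered frame. That's a simplification (the real-world gain is usually a bit under 2x), and the function name and the 80 fps input below are illustrative rather than measured.

```python
def rendered_fps(displayed_fps, generated_per_rendered=1):
    """Estimate the rendered (input-sampling) frame rate.

    Assumes an ideal case of one generated frame per rendered frame.
    Real gains are typically below 2x, so this is a lower bound.
    """
    return displayed_fps / (1 + generated_per_rendered)


if __name__ == "__main__":
    displayed = 80.0  # illustrative value, not a measured result
    print(f"{displayed:.0f} fps displayed ~ {rendered_fps(displayed):.0f} fps rendered")
```

Only the rendered frames sample new input, so responsiveness tracks the lower number even when the fps counter shows the higher one.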

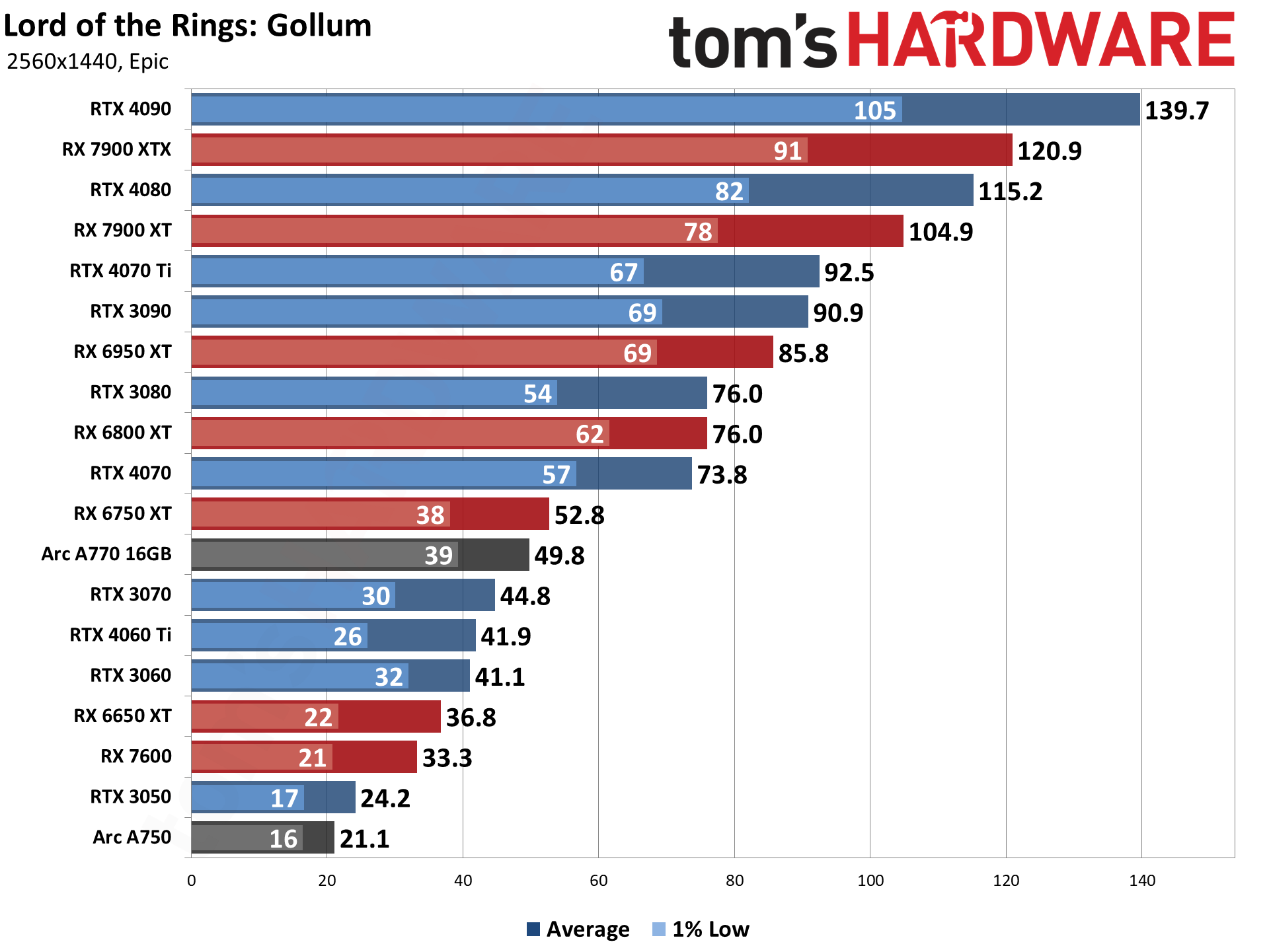

Lord of the Rings: Gollum — 1440p Epic Performance

Things only get worse at higher resolutions. We're going to put all four of the 1440p (and 4K below) charts into a gallery now, as we're mostly going to see lower performance and fewer cards in the charts.

At 1440p Epic (without upscaling or ray tracing), the RTX 4070 and above are still able to post 60 fps or higher performance. The game remains very playable on such GPUs, and even the RTX 3060 and 3070 are reasonably decent experiences. The RTX 4060 Ti meanwhile starts to get far more stuttering. You could "fix" that with Texture Streaming, but then you'd get horrible low resolution textures popping in and out as the game tries to juggle memory management.

Realistically, if you want to play Gollum at 1440p, you need a GPU with at least 10GB of VRAM. Alternatively, you can try lower settings and/or enable DLSS upscaling. Maybe FSR 2 upscaling will improve in quality with future patches, but right now, native 1080p tends to look at least as good as 1440p with FSR 2 upscaling.

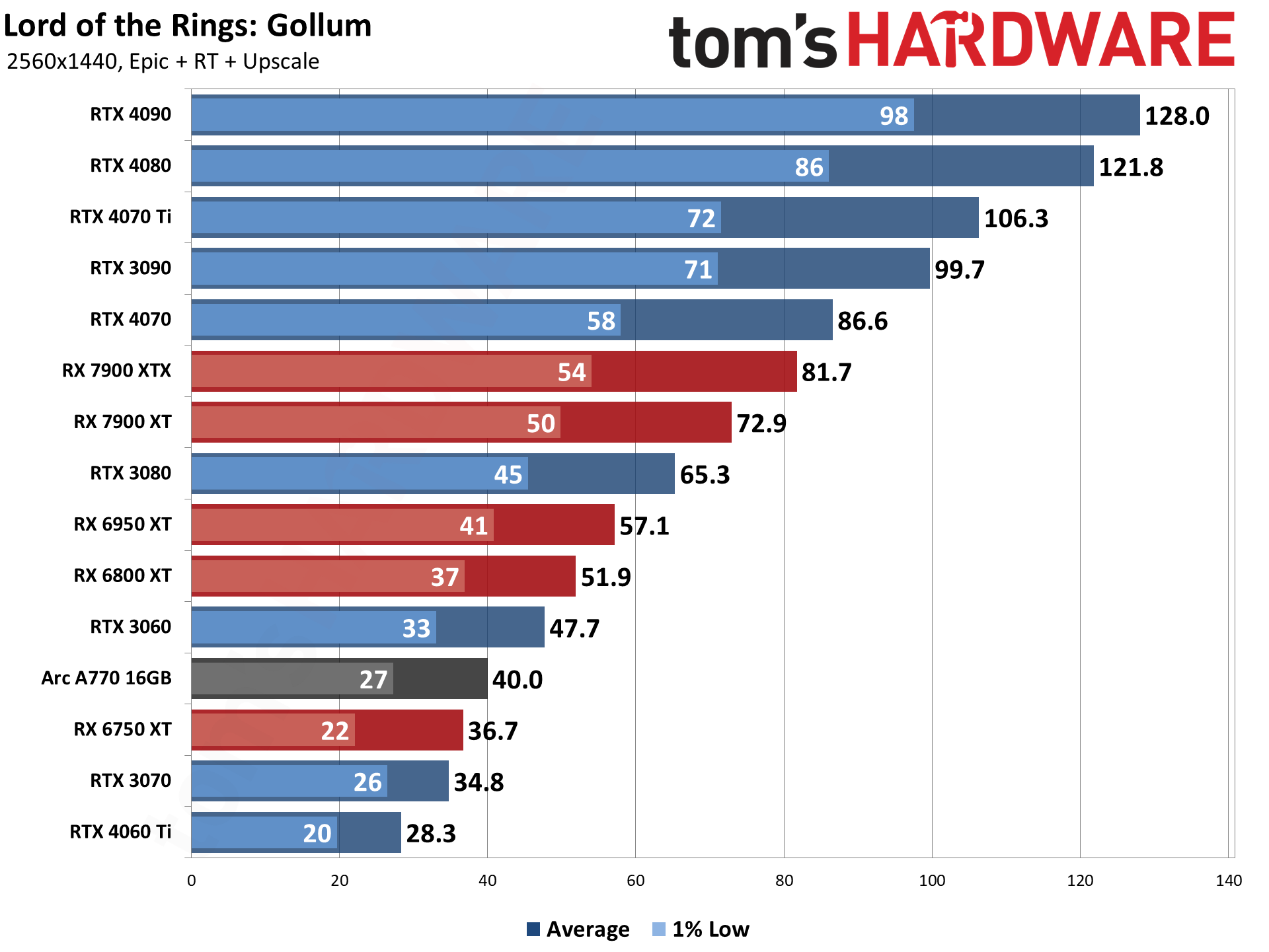

Native 1440p with ray tracing really needs a potent graphics card and lots of VRAM. The RX 7900 XT and RTX 4070 Ti have 1% lows of 29 fps, so playable but not great. Even the RTX 4090 has 1% lows of less than 60 fps. DLSS helps in a big way, however, and the RTX 4070 jumps by about 125% on average fps, and 140% for 1% lows. The RTX 4070 and above also outperform the fastest AMD GPUs with upscaling enabled.

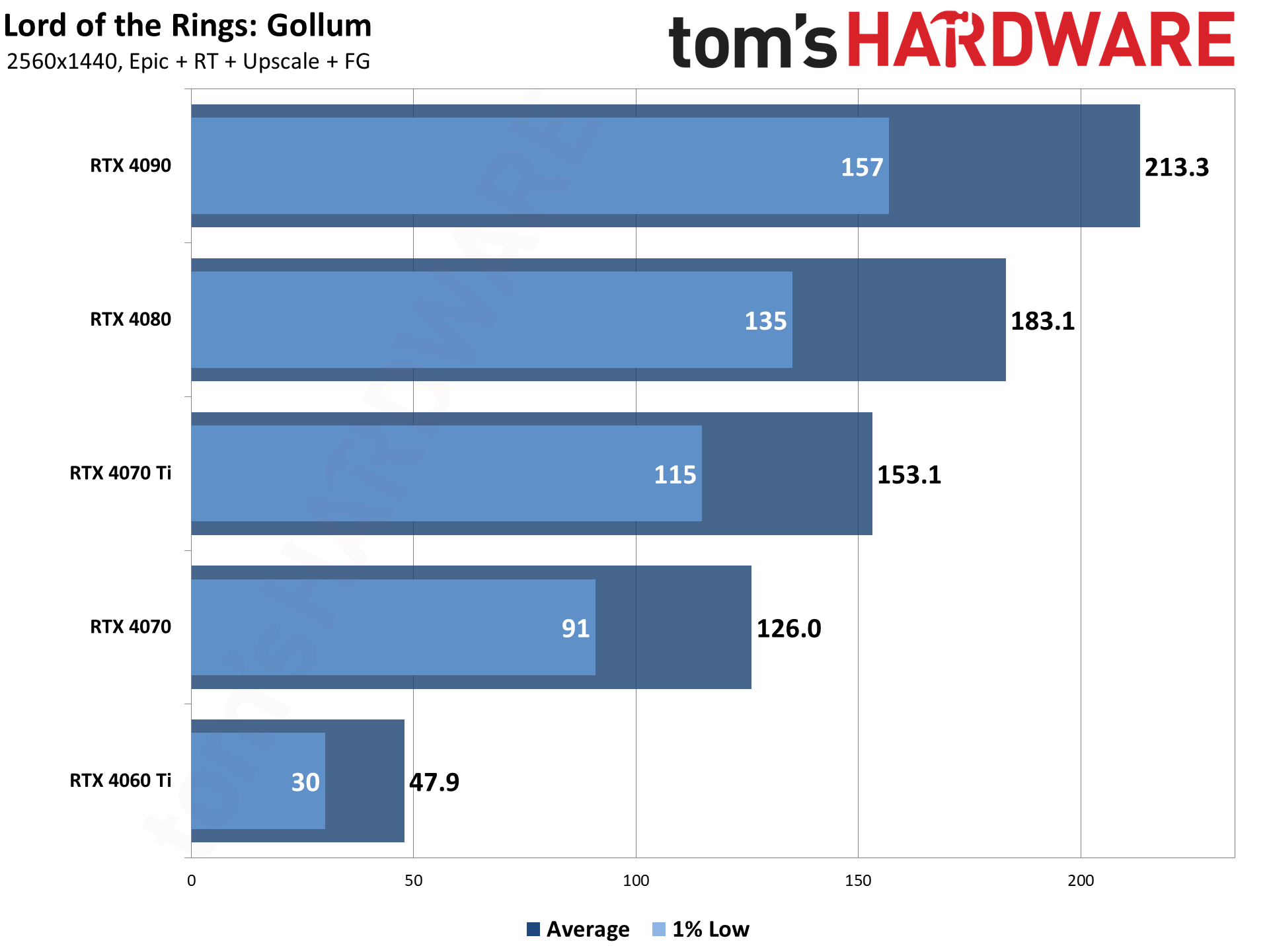

Frame Generation meanwhile provides a further smoothing out of performance. The RTX 4060 Ti doesn't really feel that smooth, however, so really you'd want to either disable ray tracing on the 4060 Ti, drop other settings a notch or two, or opt for 1080p. But the RTX 4070 and above deliver great performance, if you have one of Nvidia's latest and greatest GPUs with at least 12GB of memory.

Lord of the Rings: Gollum — 4K Epic Performance

Guess what: 4K Epic needs a pretty good graphics card, and 4K Epic with ray tracing is only truly viable with upscaling enabled.

Without ray tracing, 4K epic clears 60 fps averages on the RTX 4080, RX 7900 XTX, and RTX 4090. And that's it! None of those three cards keeps 1% lows above 60 fps, though the RTX 4090 and RX 7900 XTX are probably close enough — especially if you have a monitor with G-Sync / FreeSync support. Even 16GB of VRAM isn't really "enough" for Gollum at 4K epic — we've discussed many of the reasons that's the case in our article on why 4K gaming is so demanding.

You could play at 4K epic on the RTX 3080 and above with okay results, and upscaling would definitely help push many of the GPUs above 60 fps. But 4K with ray tracing and no upscaling is basically death to performance. The RTX 4090 is still playable, with 30 fps on its 1% lows, but nothing else is even close.

Upscaling on the other hand makes 4K run great on the 4090, and pretty decently on many of the other GPUs. The 10GB VRAM on the RTX 3080 is still a problem, though, and higher DLSS upscaling ratios don't seem to be fully functional right now. Or maybe we just had some odd experiences.

We also included RT + DLSS + FrameGen results for all five of the RTX 40-series GPUs, even if the RTX 4060 Ti isn't remotely playable at these settings. Remember: It's more frame smoothing than actual frames, so even if the average fps shows 26.6, it feels more like 14 fps. There are also some rendering artifacts, as the DLSS 3 Optical Flow Field doesn't really deal very well with choppy and low framerates.

Lord of the Rings: Gollum — Closing Thoughts

There's an okay game hiding within Lord of the Rings: Gollum, but it's mostly for true devotees of the Middle-Earth setting. Because, let's face it: Gollum was never a loveable guy. Playing as a dual-personality, skin-stretched-over-bones character throughout the 10–15 hour campaign probably wasn't ever going to resonate with a lot of people. Do you want to know what he was doing in between The Hobbit and The Lord of the Rings? Gollum will fill in some of the details and let you explore parts of Middle-Earth, but it can feel quite tedious and uninspired.

But right now, even getting through the story can be particularly painful if you're not running high-end hardware. Midrange GPUs can suffice, but definitely don't try maxing out settings, even at 1080p, unless you have more than 8GB of VRAM. And leave off ray tracing unless you have an Nvidia RTX card (or maybe an RX 7900 XT/XTX). That's our advice, at least.

So far, major game promotions from both AMD and Nvidia seem to be batting .250 for 2023. Dead Island 2, Redfall, and now Lord of the Rings: Gollum are, at best, pretty marginal games. Star Wars Jedi: Survivor was a much better game, though like Gollum, it had some serious technical issues at launch. (Recent patches have improved things, though we're not sure it's smooth sailing just yet.) Considering all four games use Unreal Engine 4, you might be tempted to think that's a contributing factor. Hmmm...

As it stands now, Lord of the Rings: Gollum could be used as a clear indication that 8GB of VRAM is absolutely insufficient for a modern GPU like the RTX 4060 Ti. The problem is that with poor performance, plus some settings that don't seem to work properly, we can't take the current performance at face value. Even on some of the best graphics cards around, it looks to be more like an example of how to make a game require a lot of memory for little actual benefit.

Better coding would certainly improve things, as we've seen a lot of better looking games that don't need more than 8GB over the past several years. Red Dead Redemption 2 for example runs just fine with 8GB. Hopefully, Daedalic can tune Unreal Engine to be better about balancing VRAM use and texture quality with future updates. Whether it can also make the game more enjoyable remains an open question.