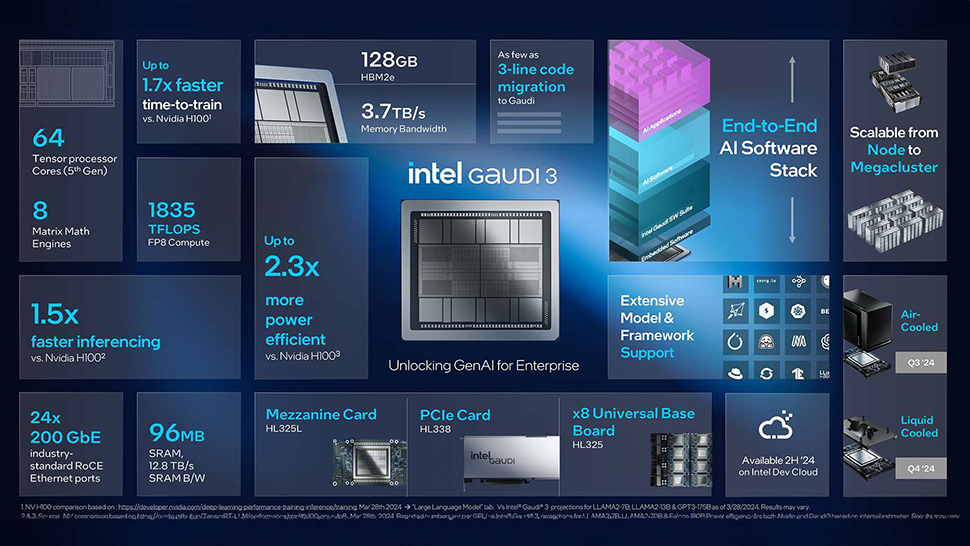

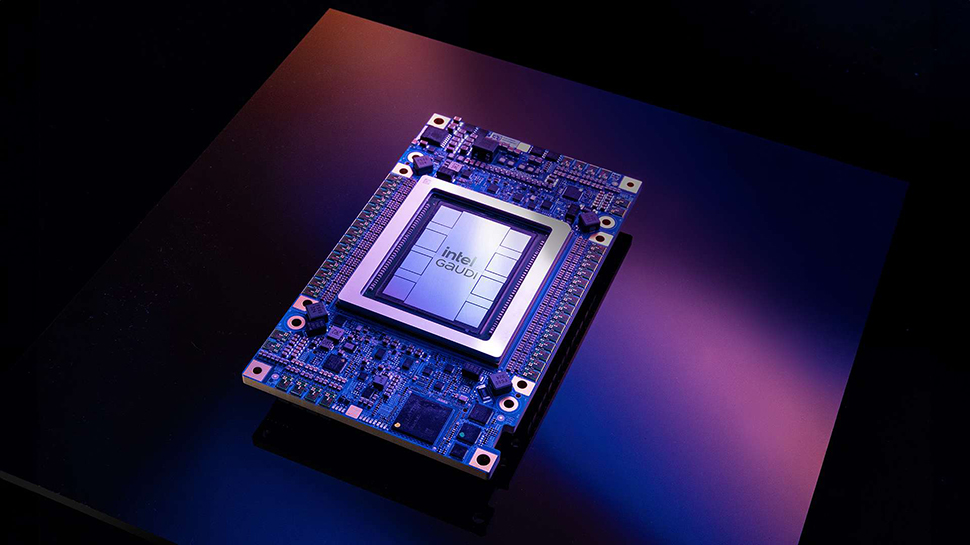

At Intel Vision 2024, Intel launched its Gaudi 3 AI accelerator, which the company is positioning as a direct competitor to Nvidia's H100, claiming that it offers faster training and inference performance on leading GenAI models.

Intel projects that Gaudi 3 will outperform the H100 by up to 50% on measures including training time, inference throughput, and power efficiency.

Building on the performance and efficiency of the Gaudi 2 AI accelerator, Gaudi 3 reportedly boasts 4x AI compute for BF16, a 1.5x increase in memory bandwidth, and 2x networking bandwidth for massive system scale out, compared with its predecessor.

Superior performance

Manufactured on a 5nm process, Gaudi 3 features 64 AI-custom and programmable TPCs and eight MMEs, each capable of 64,000 parallel operations. It offers 128GB of memory (HBM2e, not HBM3E), 3.7TB/s of memory bandwidth, and 96MB of on-board SRAM for processing large datasets efficiently. With 24 integrated 200Gb Ethernet ports, it allows for flexible system scaling and open-standard networking.
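To put the networking figure in perspective, the short Python sketch below multiplies out the port count and port speed quoted above. It assumes raw line rate in one direction and ignores protocol overhead, so treat the result as a rough upper bound rather than an Intel-published number; the function name is purely illustrative.

```python
# Figures quoted in the article.
ETHERNET_PORTS = 24
PORT_SPEED_GBPS = 200  # gigabits per second, per port


def aggregate_ethernet_bandwidth(ports: int, gbps_per_port: float) -> tuple[float, float]:
    """Return (terabits/s, gigabytes/s) of raw line rate in one direction.

    Assumption: no protocol overhead is subtracted.
    """
    total_gbps = ports * gbps_per_port
    return total_gbps / 1000, total_gbps / 8


tbps, gbytes_per_s = aggregate_ethernet_bandwidth(ETHERNET_PORTS, PORT_SPEED_GBPS)
print(f"{tbps} Tb/s, {gbytes_per_s} GB/s")
```

On those assumptions, the 24 ports add up to 4.8Tb/s, or 600GB/s, of raw Ethernet bandwidth per accelerator.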

Intel claims Gaudi 3 beats the H100 across several models: 50% faster training on Llama models with 7B and 13B parameters and on the GPT-3 175B model, plus 50% higher inference throughput and 40% greater power efficiency on Llama 7B and 70B and Falcon 180B. Intel says Gaudi 3 also outperforms the H200 in inference speed on Llama 7B and 70B and Falcon 180B by 30%. As these are Intel benchmarks, feel free to take them with a pinch of salt.

Tom's Hardware notes, "At the end of the day, the key to dominating today’s AI training and inference workloads resides in the ability to scale accelerators out into larger clusters. Intel’s Gaudi takes a different approach than Nvidia’s looming B200 NVL72 systems, using fast 200 Gbps Ethernet connections between the Gaudi 3 accelerators and pairing the servers with leaf and spine switches to create clusters."

Justin Hotard, Intel executive vice president and general manager of the Data Center and AI Group, said, “In the ever-evolving landscape of the AI market, a significant gap persists in the current offerings. Feedback from our customers and the broader market underscores a desire for increased choice. Enterprises weigh considerations such as availability, scalability, performance, cost, and energy efficiency. Intel Gaudi 3 stands out as the GenAI alternative presenting a compelling combination of price performance, system scalability, and time-to-value advantage.”

Gaudi 3 will be available to OEMs in the second quarter of 2024, with general availability expected in the third quarter.