ChatGPT-5 and Gemini 2.5 Pro are two of the most advanced multimodal chatbots available. Both offer a free tier and can create crisp, realistic images within the chat window in seconds.

Compared to GPT-4, OpenAI’s GPT-5 takes multimodality to the next level with sharper image analysis, more natural voice and a massive 400K-token context window. The latest version is said to be safer, with fewer errors, and smarter at routing tasks between “chat” and “thinking” modes, making it a stronger, more versatile AI assistant.

Similarly, Gemini 2.5 Pro is Google DeepMind’s most advanced multimodal reasoning model yet. With a massive one-million-token context window, it excels at solving complex math and science problems, surpasses competitors on benchmarks and demonstrates exceptional coding prowess, building interactive simulations and web apps and offering debugging support from a single prompt. It also handles images, audio, video and even entire codebases.

I just had to know how the two compared with image generation. Here’s what happened when I used the same eleven prompts to create images and how the chatbots stack up against each other.

Category 1: Mashup

Futuristic New York City

Prompt: "Design a futuristic New York City street scene in 2050 where self-driving food trucks shaped like animals are serving people. Include at least three different species (like a panda truck, dolphin truck, and eagle truck), neon signage in multiple languages and rain-slick streets with reflections."

ChatGPT-5 delivered on every detail of the prompt, and the setting is clearly recognizable as New York City despite the futuristic twist.

Gemini 2.5 Pro generated a clear and vibrant image that hit nearly every aspect of the prompt, but missed what might be the biggest one: New York City. There's no way to know where this scene is located.

Winner: ChatGPT wins for a solid aesthetic and taking every detail into account.

Unlikely jungle animals

Prompt: "Create a lively jungle village where animals live like humans. A tiger is reading a newspaper on a hammock, a parrot is running a fruit stand, elephants are carrying groceries in baskets, and monkeys are playing soccer in the background. Add wooden huts, vines wrapped around them, and lanterns glowing at dusk."

ChatGPT-5 took the mashup idea to new heights by creating an image that was both comical and realistic. I was hoping one of the bots would go for realism over a cartoonish look. ChatGPT did not disappoint, but it completely missed the parrot running a fruit stand.

Gemini 2.5 Pro turned this prompt into a cute illustration that accounted for every detail, but overall the image wasn't as intriguing (IMO).

Winner: Gemini wins for taking into account every detail of the prompt. ChatGPT was very close and the image was more realistic, which I liked, but the win has to go to the one who best handled the prompt. Would love your thoughts on this one; share in the comments.

Category 2: Indoor/Outdoor

Cozy living room

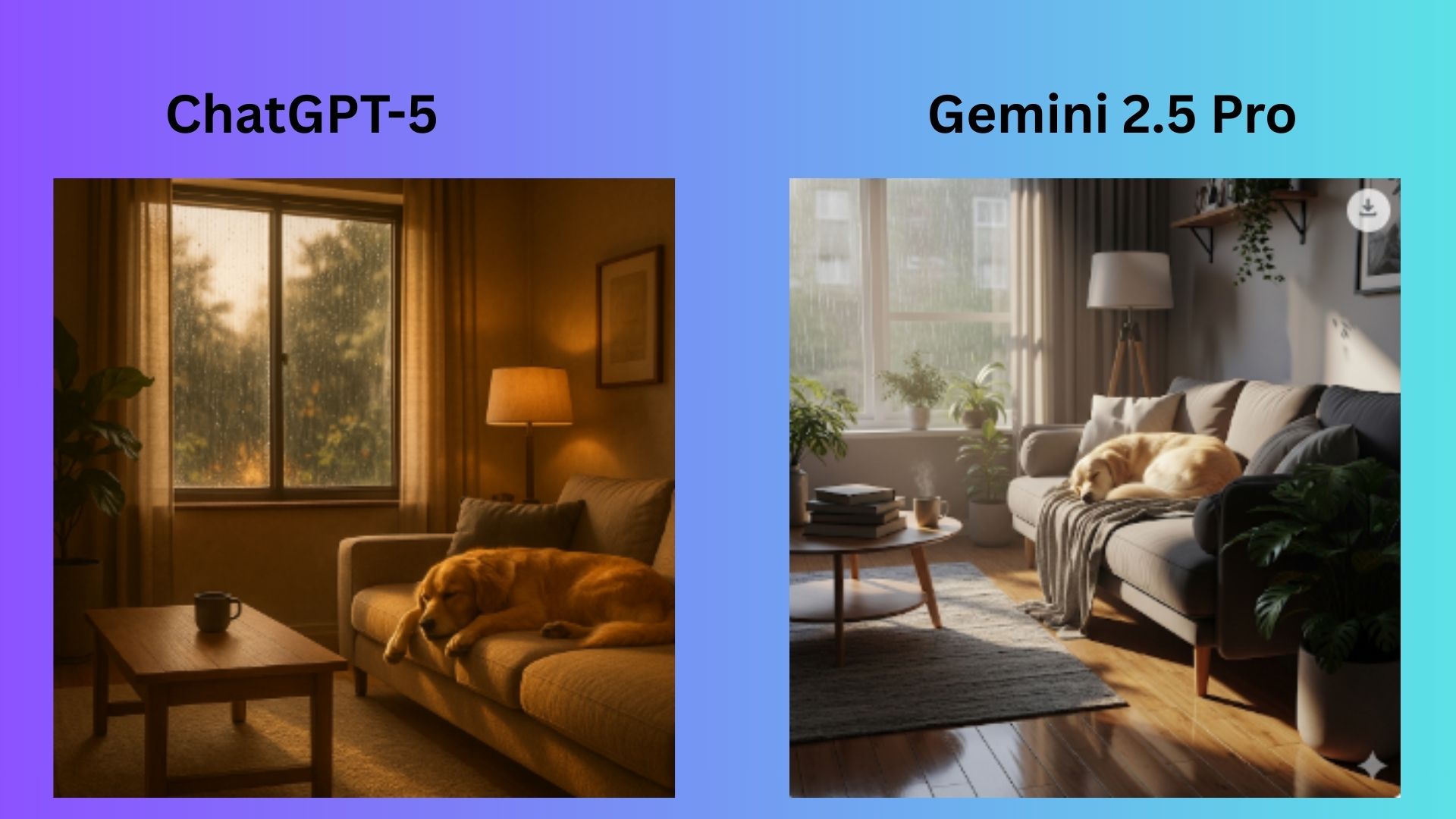

Prompt: “A cozy modern living room on a rainy afternoon, sunlight filtering through the window, with a golden retriever sleeping on the couch.”

ChatGPT-5 created an image that was realistic and cozy, but the lighting is too warm and evening-like for the prompt's specifications.

Gemini 2.5 Pro delivered a very realistic image that captures the scene with the right lighting and aesthetic and fully embodies the prompt.

Winner: Gemini did a better job at accurately capturing the "rainy afternoon with sunlight filtering through" lighting condition, rendered the golden retriever more clearly and created a scene that better matches the modern, cozy aesthetic described.

Street market

Prompt: “A street food market in Bangkok at night, neon lights reflecting off wet pavement, bustling crowds, and steam rising from food stalls.”

ChatGPT-5 crafted vivid, colorful reflections that immerse the viewer in the atmospheric scene. The composition is cinematically appealing, and the balance among the elements (steam, crowds, neon signs and wet reflections) feels harmonious.

Gemini 2.5 Pro delivered a strong urban atmosphere with the busy street scene and included excellent signage with authentic Thai text. The wet pavement reflections are good, but could be more pronounced.

Winner: ChatGPT did a superior job with this prompt. While both captured the essential elements, ChatGPT excelled particularly in the "neon lights reflecting off wet pavement" aspect, which is a key detail in the prompt.

Category 3: Creativity and surrealism

Futuristic city

Prompt: “A futuristic city floating above the ocean, powered by giant glowing energy crystals, with flying cars zipping between the towers.”

ChatGPT-5 delivered a more fantastical, visually striking interpretation built around giant glowing energy crystals. While the crystals look as though they are powering the city, the overall image lacks the depth and realistic nuance of Gemini's.

Gemini 2.5 Pro created a clean image with sleek, futuristic architecture consisting of tall spires and modern buildings. It is more realistic, with a good sense of scale and urban planning.

Winner: Gemini wins for creating an image that is both fantastical and realistic and that unmistakably showcases the city’s power source: brilliantly glowing crystals on the buildings. Overall, its sci-fi concept better matches the goal of the prompt.

Out-of-this-world tea party

Prompt: “A tea party on the moon with astronauts and alien creatures, pastel-colored plates, and a view of Earth in the background.”

ChatGPT-5 crafted a charming illustration that is whimsical and storybook-like. The pastel color scheme throughout the entire image is pleasant and intriguing.

Gemini 2.5 Pro delivered a photorealistic approach with proper lunar surface texture and astronauts appropriately suited for space exploration. The detailed view of Earth in the background is a nice touch.

Winner: Gemini wins for best executing the prompt with a beautiful, realistic space scene and adorable "alien creatures," while also including the prompt's extra details.

Category 4: People & portraits

Day in Paris

Prompt: “A candid photo of a middle-aged woman laughing at an outdoor café in Paris, with the Eiffel Tower blurred in the background.”

ChatGPT-5 created a less authentic image; it feels posed and professional rather than capturing candid laughter. The Eiffel Tower in the background lacks the depth and blur the prompt called for.

Gemini 2.5 Pro properly blurred the Eiffel Tower in the background, creating good depth of field while keeping the focus on the woman's natural, genuine laughter. The overall composition feels like an actual candid street photograph.

Winner: Gemini wins for capturing the "candid" nature of the prompt significantly better.

Soccer practice

Prompt: “A group of five kids playing soccer in a grassy park at sunset, motion blur capturing movement, with diverse ethnicities represented.”

ChatGPT-5 delivered a more static composition and the sunset lighting feels less dramatic. The children appear more posed than in motion.

Gemini 2.5 Pro showed a clear motion blur effect, with children running after a visible soccer ball and a good sense of movement and energy. The golden-hour lighting is realistic, as is the diverse group of children playing.

Winner: Gemini wins for handling the prompt best, using effective motion blur to create a genuine sense of kids running and playing.

Category 5: Style flexibility

Art style and texture realism

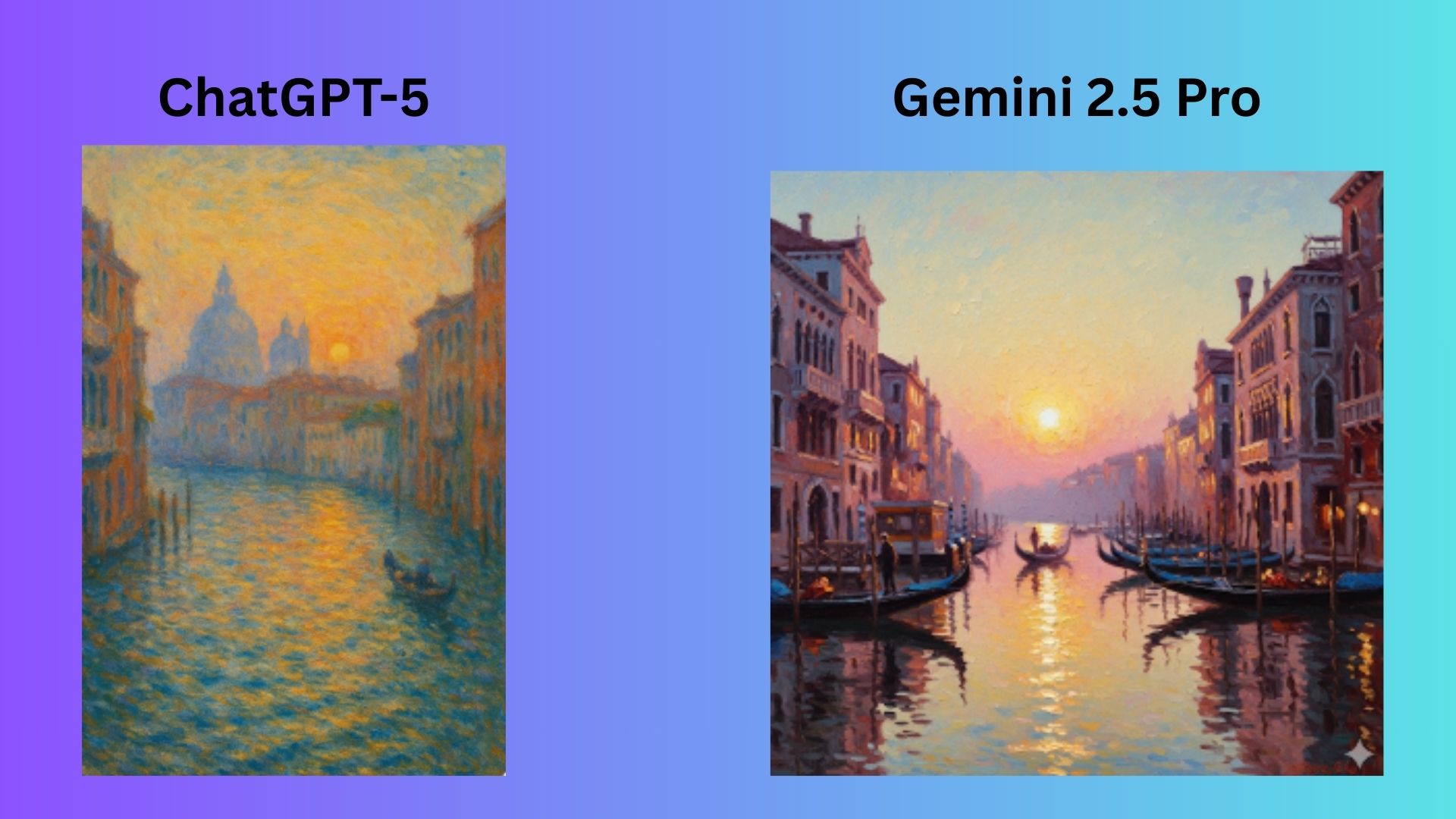

Prompt: “An Impressionist oil painting of a quiet canal in Venice at sunrise, inspired by Claude Monet’s color palette and brushstrokes.”

ChatGPT-5 truly captured an Impressionist technique with visible, expressive brushstrokes throughout.

Gemini 2.5 Pro crafted a beautiful painting, but the image is closer to photorealism than to a true Impressionist style.

Winner: ChatGPT wins for executing the prompt significantly better. While Gemini created a pleasant Venice scene, it missed the core requirement of Impressionist style, which ChatGPT delivered. ChatGPT’s image captures the essence of how Monet would approach a Venice sunrise, prioritizing the impression of light and color over literal architectural detail.

Anime-style poster design

Prompt: “A Japanese anime-style poster featuring a teenage girl standing on a rooftop at dusk, cherry blossoms falling around her.”

ChatGPT-5 emphasized the character over environmental details, and the result was more anime illustration than poster.

Gemini 2.5 Pro created a polished, professional poster design with Japanese text and title treatment.

Winner: Gemini handled this prompt more effectively. The keyword was "poster." Gemini created an actual poster design complete with Japanese text, proper composition and professional layout that you'd see for an anime film or series.

Category 6: Object accuracy & branding

Marketing style

Prompt: “A top-down flat-lay photo of the latest iPhone on a wooden desk, next to AirPods and a cup of coffee, shot in a clean Apple-style aesthetic.”

ChatGPT-5 got the top-down angle and wooden surface right, but missed the mark with a casual, lived-in feel rather than a pristine Apple aesthetic.

Gemini 2.5 Pro truly nailed the aesthetic with a perfect top-down shot and a clean, minimalist composition with ample white space. The iPhone, with its modern design and colorful wallpaper, is rendered in exceptional detail.

Winner: Gemini executed the "clean Apple-style aesthetic" requirement significantly better. The composition has a pristine, minimalist quality that defines Apple's marketing photography. The difference in attention to the aesthetic requirement is quite clear between the two approaches.

Overall winner: Gemini 2.5 Pro

After testing eleven diverse prompts across six categories, Gemini 2.5 Pro emerges as the stronger image generator, winning eight out of eleven comparisons. Google's model consistently excelled at photorealism and technical accuracy, particularly when prompts contained specific requirements like lighting conditions, motion blur or aesthetic styles. Gemini demonstrated superior attention to detail in scenarios ranging from candid street photography to Apple-style product shots.

ChatGPT-5 showed its strengths in artistic interpretation and atmospheric effects, delivering standout results, such as the Impressionist Venice canal and the neon-soaked Bangkok night market, when creative expression mattered more than technical precision.

The results reveal distinct philosophies: Gemini tends toward technical precision and literal prompt adherence, while ChatGPT leans into artistic interpretation and visual impact. For users prioritizing accuracy and detailed prompt execution, Gemini 2.5 Pro proves more reliable. However, ChatGPT-5 remains valuable when seeking creative flair and atmospheric storytelling.

Both models represent significant advances in AI image generation, offering near-instant, high-quality results that would have required professional photographers or artists just a few years ago. The choice between them ultimately depends on whether you value technical precision or creative interpretation more highly in your visual content needs.

Follow Tom's Guide on Google News to get our up-to-date news, how-tos, and reviews in your feeds. Make sure to click the Follow button.