AI chatbots like ChatGPT and Gemini usually need the cloud to function. But what if you could run an entire large language model (LLM) right on your iPhone, with no subscription, no internet connection and no data leaving your device? Thanks to a handful of apps and lightweight, compressed models, you actually can.

I tried it out, and here’s what you need to know.

Running AI locally on iPhone

You can now run open-source models like Llama and Qwen directly on iOS. These models are slimmed down using a process called quantization, which stores each of the model's weights with fewer bits (for example, 4 bits instead of 16). That shrinks them enough to fit into a phone's memory without badly degrading their output.
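To make the idea concrete, here is a toy Python sketch of one simple scheme: symmetric 4-bit quantization of a single small block of weights. It is only an illustration under assumptions, not the method any of these apps actually uses; real model formats use more elaborate variants (many blocks, each with its own scale, and some layers kept at higher precision). The helpers `quantize_4bit` and `dequantize` and the sample weights are hypothetical.

```python
# Toy illustration (hypothetical helpers): symmetric 4-bit quantization of one
# block of weights. Real model formats use more elaborate schemes.

def quantize_4bit(weights):
    """Map floats to integers in [-7, 7] that share one scale factor."""
    max_abs = max(abs(w) for w in weights)
    # One scale for the whole block; guard against an all-zero block.
    scale = max_abs / 7 if max_abs > 0 else 1.0
    # Round each weight to the nearest step and clamp to the 4-bit range.
    q = [max(-7, min(7, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate floats from the stored integers and the scale."""
    return [v * scale for v in q]


weights = [0.12, -0.53, 0.91, -0.07, 0.33, -0.88, 0.0, 0.45]
q, scale = quantize_4bit(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("4-bit codes:", q)  # [1, -4, 7, -1, 3, -7, 0, 3]
print(f"scale: {scale:.4f}, worst-case error: {max_error:.4f}")
```

Each weight now needs 4 bits instead of 16, roughly a quarter of the memory, at the cost of a small rounding error of at most half the scale per weight.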

The catch: performance depends heavily on your hardware. An iPhone 15 Pro or 15 Pro Max, with its A17 Pro chip and 8GB of RAM, can load models of up to 7B or 8B parameters (such as Llama 3.1 8B), while older phones are better suited to smaller models in the 1–3B parameter range.

The apps that make it possible

- LLM Farm (free): The easiest way to start. You can download a small model (like Phi-3.5 Instruct) and run it offline with just a tap. It’s surprisingly smooth for quick Q&A.

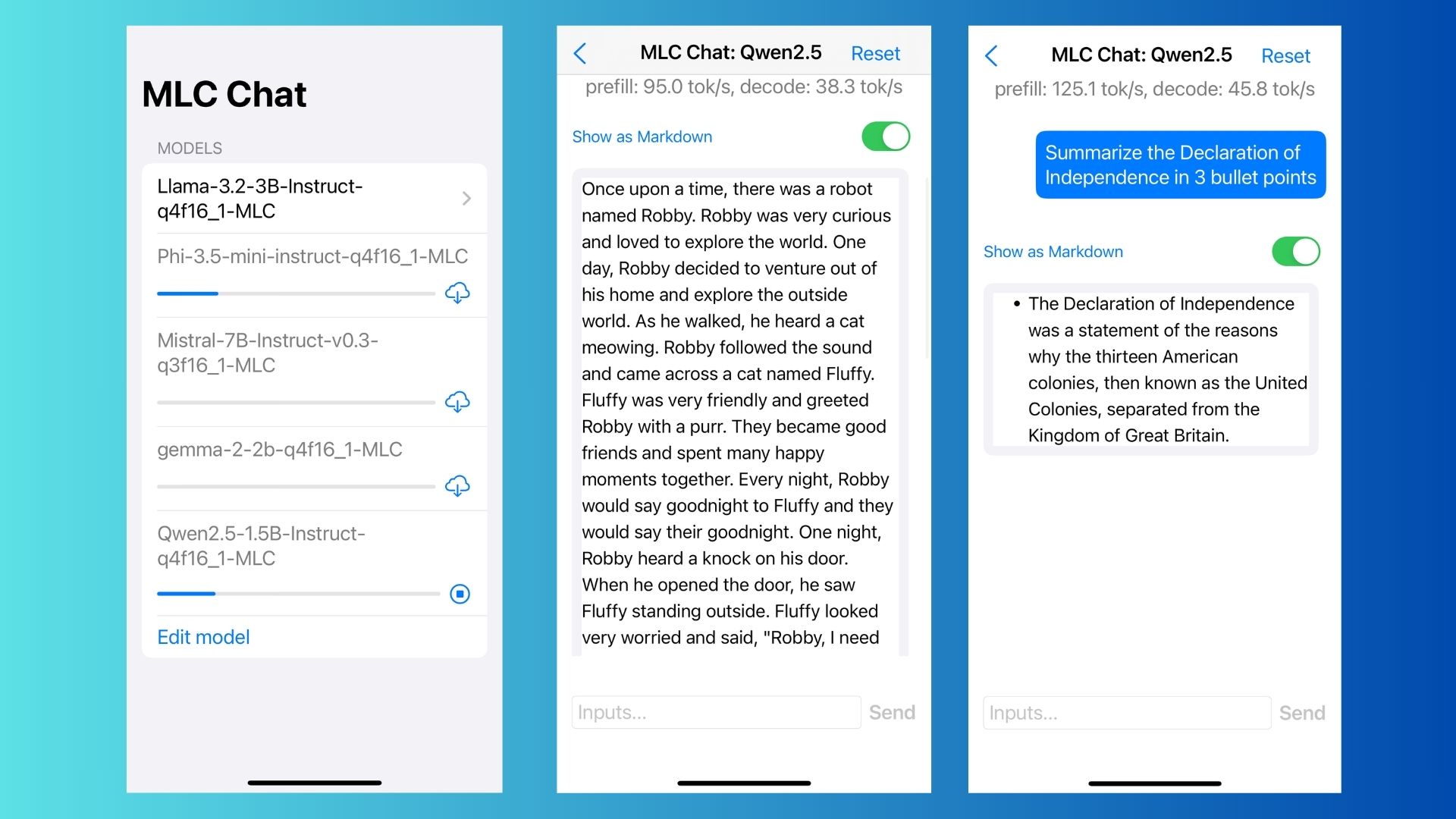

- MLC Chat (free): This is the one I used. I had planned to use LLM Farm, but for some reason the App Store wasn't giving me the option to download it. MLC Chat is also free, so I went with it, and it worked well.

- Private LLM (community project): This is more of a DIY option and not for the casual user. It has detailed guides for loading models like Llama 3.1 and Qwen on your iPhone. If you like to tinker, definitely give it a shot.

- Apollo (paid): I've heard good things but have not tried this app myself. Let me know in the comments what you think of this privacy-focused app.

How to locally run the model

Once you've downloaded your app of choice, open it, browse the built-in model list and choose a model (for example, Phi-3.5 Instruct quantized to 4 bits, often labeled Q4). I chose Qwen 2.5 for no other reason than that I hadn't used it in a while.

Once the download finishes, the model is stored on your device (anywhere from a few hundred MB to several GB, depending on its size). From there, just start chatting.
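Those download sizes follow from simple arithmetic: a model's weights take roughly (number of parameters) × (bits per weight) ÷ 8 bytes. The Python sketch below applies that rule of thumb to a few illustrative parameter counts. Treat the results as rough lower bounds: real model files add overhead (quantization scales, some layers kept at higher precision), and running a model needs extra memory beyond the file itself. The helper `approx_size_gb` is hypothetical.

```python
def approx_size_gb(params_billions, bits_per_weight):
    """Rough weight-storage estimate: parameters x bits / 8, in GB (1e9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Illustrative (parameter count in billions, bits per weight) pairs.
examples = [(1, 4), (3, 4), (8, 4), (8, 16)]
for params, bits in examples:
    print(f"{params}B model at {bits}-bit: ~{approx_size_gb(params, bits):.1f} GB")
# 1B model at 4-bit: ~0.5 GB
# 3B model at 4-bit: ~1.5 GB
# 8B model at 4-bit: ~4.0 GB
# 8B model at 16-bit: ~16.0 GB
```

By this estimate, an 8B model that would need about 16 GB at 16-bit precision shrinks to roughly 4 GB at 4 bits, which is why quantization makes it practical on a phone, and why 1–3B models are the comfortable choice for older iPhones with less memory.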

You'll want to keep expectations realistic; this is not the time to ask for deep dives or long step-by-step plans. Keep the following in mind:

- Speed: Small models (1–3B) respond faster; big models can take seconds per token.

- Context: Don’t paste entire essays; keep prompts shorter.

- Output: Local LLMs may be less polished than ChatGPT, but they’re useful for notes, summaries, Q&A, and lightweight drafting.

I had fun trying a few prompts. Nothing fancy; my goal was just to see what kind of responses I'd get from a model running locally. One thing you'll notice right away is the speed. It's incredible how fast the LLM responds.

I tried the following prompts and overall, I was impressed.

- “Summarize the Declaration of Independence in three bullet points.”

- “Write a short bedtime story about a robot and a cat.”

- “Give me three dinner ideas using chicken, rice, and broccoli.”

Running a local LLM isn’t the same as chatting with GPT-5 in ChatGPT. It definitely feels more stripped-down and raw. If you try this, remember to keep your prompts short, because local models have much more limited context windows than cloud-based chatbots. Responses will slow down if you overload the local LLM.

Why would you do this?

- No subscription fees. You are not burning credits just to experiment.

- Privacy built in. Everything stays on your device.

- Surprisingly versatile. I was blown away by how much the small model could handle. Every time I pushed its limits, it tackled the challenge easily.

Final thoughts

If you have a recent iPhone (ideally an iPhone 15 Pro or newer) and are curious about what AI looks like 'under the hood,' go for it. LLM Farm and MLC Chat are fast, free ways to get started. For privacy hawks, Apollo is worth a look. And if you’re more of a tinkerer, Private LLM lets you go deep into custom setups.

Just remember that these are not the full-power chatbots you're used to, so don't expect ChatGPT-level output. But it's pretty cool, and it feels futuristic to run your own AI on your iPhone.

Follow Tom's Guide on Google News to get our up-to-date news, how-tos, and reviews in your feeds. Make sure to click the Follow button.
