Two of the hottest AI models right now, ChatGPT-4o and Claude 3.7 Sonnet, are designed for speed, intelligence and real-world tasks.

While ChatGPT-4o emphasizes conversational fluidity and broad accessibility, Claude 3.7 Sonnet is known for its accuracy, task efficiency and reasoning capabilities.

Both are free to use, so I put these two powerhouses to the test with prompts that challenge their reasoning, creativity and ability to handle a range of complex tasks. The results were seriously surprising. Here’s a look at how these chatbots compare.

1. Complex decision-making test

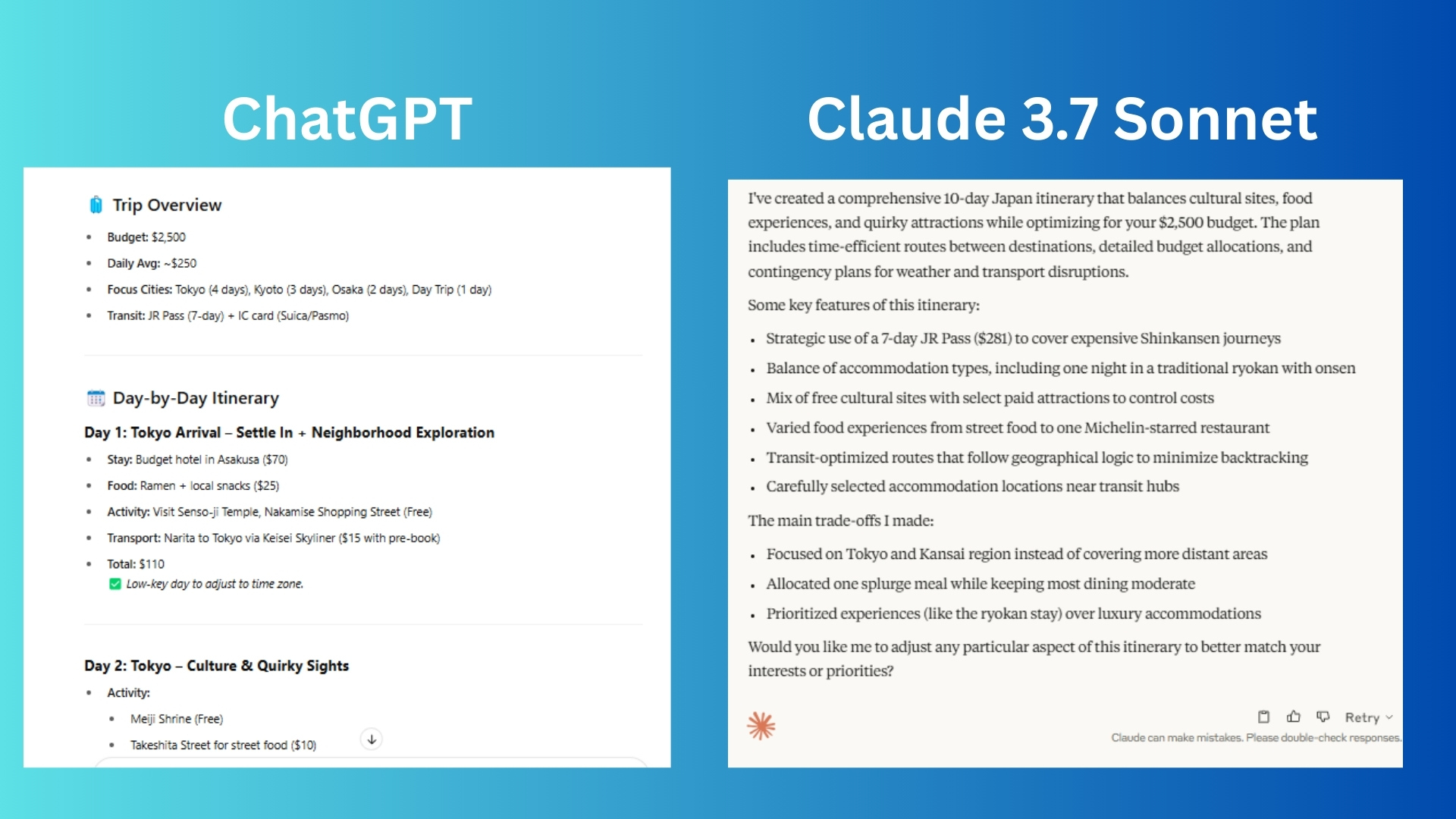

Prompt: "You’re planning a 10-day trip to Japan with a budget of $2,500, balancing cultural sites (temples, museums), food tourism (Michelin-starred to street food), and offbeat experiences (e.g., robot cafes). Draft a day-by-day itinerary with:

Time-optimized routes (minimize transit)

Budget allocations (food/transport/activities)

2 contingency plans (weather, strikes)

Explain your trade-offs and why you prioritized certain experiences."

ChatGPT offered a more visually appealing itinerary with bullet points and emojis to make everything more skimmable. It offered quirky details and focused on the culinary diversity of the trip.

Claude clearly broke down the costs of the trip while maintaining daily consistency. It offered more strategic trade-offs and explained why it limited the trip to two regions (Tokyo/Kansai) to minimize transit. It also justified splurges and balanced must-sees with offbeat spots.

Winner: Claude wins for tighter budget control, actionable backup plans and more thoughtful prioritization.

2. Real-life integration

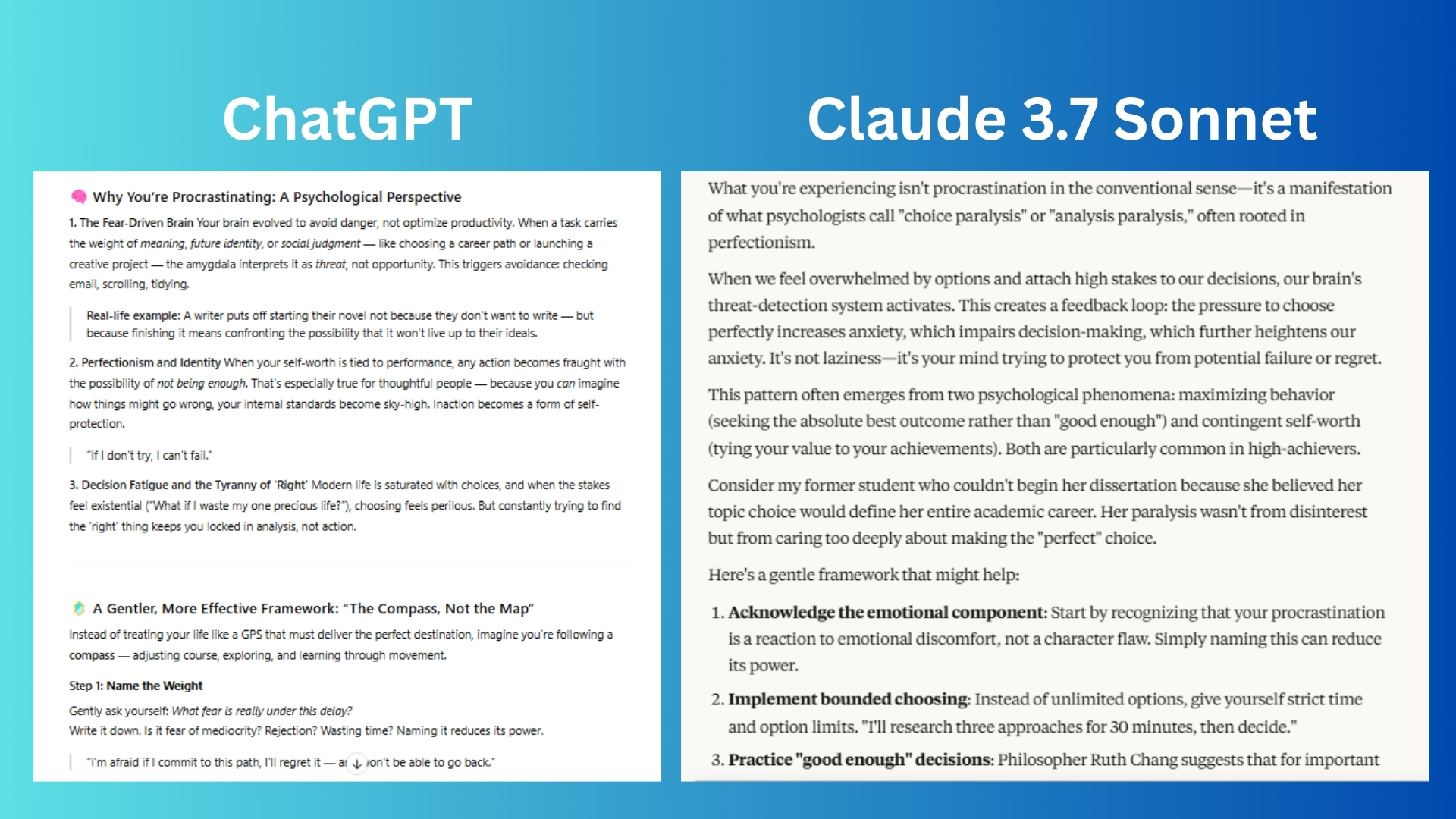

Prompt: “You are a thoughtful, well-read advisor with a background in behavioral science and philosophy. I’m struggling with procrastination — not because I’m lazy, but because I feel overwhelmed by the pressure to succeed and make the 'right' choices. Give me a nuanced explanation of why this happens psychologically, and offer a gentle, actionable framework I can follow to build momentum without burning out. Please balance logic, empathy, and real-life examples.”

ChatGPT opened with immediate validation, showed superior emotional resonance and delivered a more actionable framework.

Claude offered a variety of strong time-bound tools such as the 10/10/10 rule. The chatbot was more rigorous with its definitions of “maximizing behavior” and “contingent self-worth” and delivered a more systematic explanation of the anxiety-avoidance loop.

Winner: ChatGPT wins this one for better real-life integration. This isn’t surprising to me because I have personally found it to be a helpful therapist in a pinch. The chatbot has proven to be empathetic and supportive.

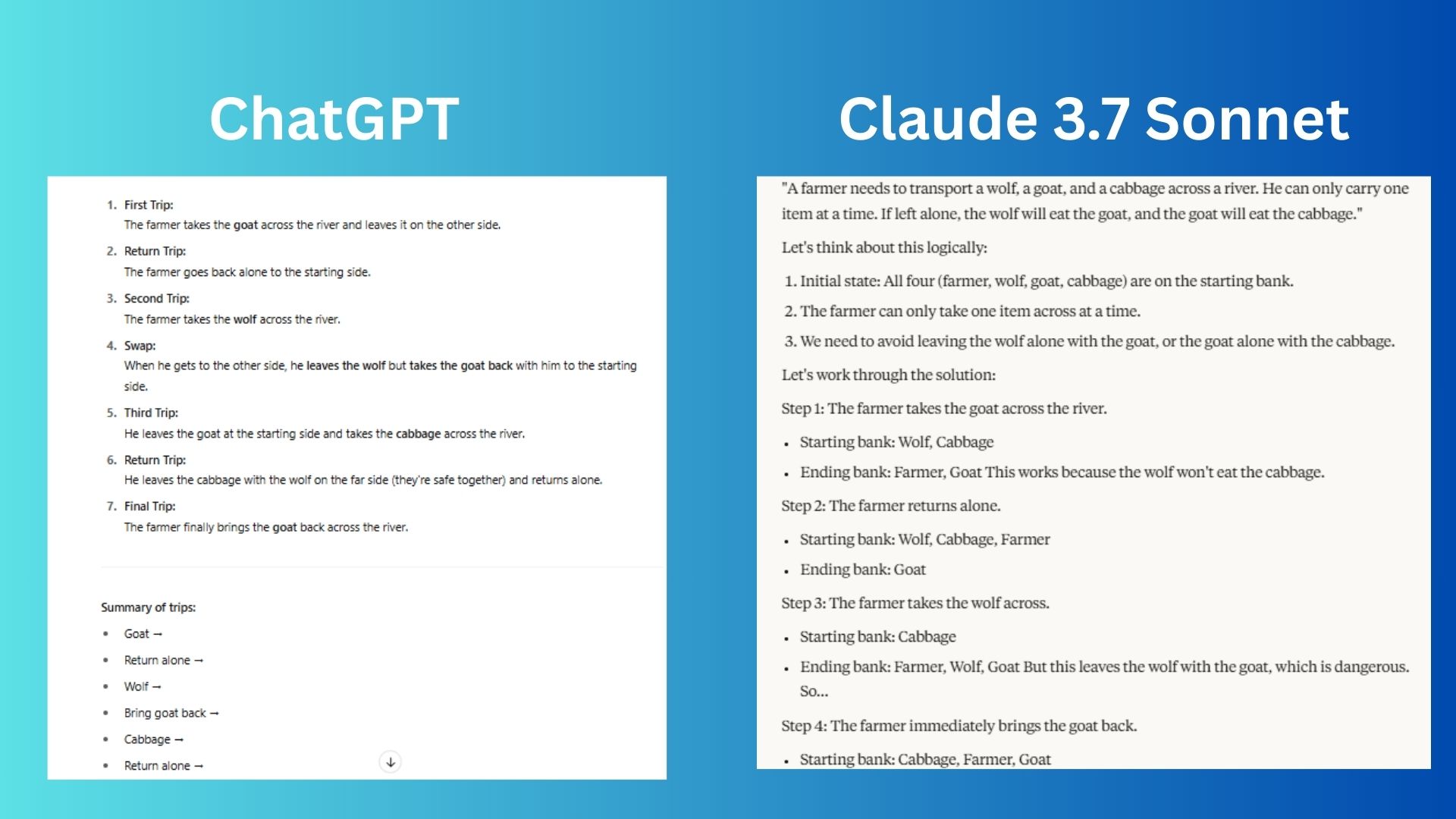

3. Reasoning challenge

Prompt: "A farmer needs to transport a wolf, a goat, and a cabbage across a river. He can only carry one item at a time. If left alone, the wolf will eat the goat, and the goat will eat the cabbage. How should he do it? Solve step-by-step."

ChatGPT gave a clear, step-by-step breakdown with numbered trips and included a concise summary at the end for quick reference. It used straightforward phrasing that is easy to follow.

Claude explicitly explained why each step was taken and labeled the steps to make progress easy to track.

Winner: Claude wins for a slightly better answer that is fully explained and offers a logically reinforced solution.
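For readers who want to check either chatbot's answer independently, the puzzle is small enough to solve by brute force. The sketch below is my own illustration, not code from ChatGPT or Claude: it runs a breadth-first search over every safe arrangement of the two river banks. The names `is_safe` and `solve` are hypothetical helpers chosen for this example.

```python
from collections import deque

ITEMS = ("wolf", "goat", "cabbage")
# Pairs that cannot be left together without the farmer present.
UNSAFE = [{"wolf", "goat"}, {"goat", "cabbage"}]


def is_safe(left, farmer_on_left):
    """Return True if the bank without the farmer holds no unsafe pair."""
    unattended = left if not farmer_on_left else set(ITEMS) - left
    return not any(pair <= unattended for pair in UNSAFE)


def solve():
    """Breadth-first search for the shortest sequence of crossings."""
    start = (frozenset(ITEMS), True)   # everything starts on the left bank
    goal = (frozenset(), False)        # everything ends on the right bank
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        (left, farmer_on_left), path = queue.popleft()
        if (left, farmer_on_left) == goal:
            return path
        here = left if farmer_on_left else frozenset(ITEMS) - left
        # The farmer crosses alone (None) or with one item from his bank.
        for cargo in [None, *sorted(here)]:
            new_left = set(left)
            if cargo is not None:
                if farmer_on_left:
                    new_left.remove(cargo)
                else:
                    new_left.add(cargo)
            state = (frozenset(new_left), not farmer_on_left)
            if state in seen or not is_safe(state[0], state[1]):
                continue
            seen.add(state)
            queue.append((state, path + [cargo or "nothing"]))
    return None


# Each entry is what the farmer carries on that crossing.
print(" -> ".join(solve()))
```

The search returns a seven-crossing solution that starts and ends with the goat, which is the key insight both chatbots needed to explain: the goat has to be ferried back once so it is never left alone with the wolf or the cabbage.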

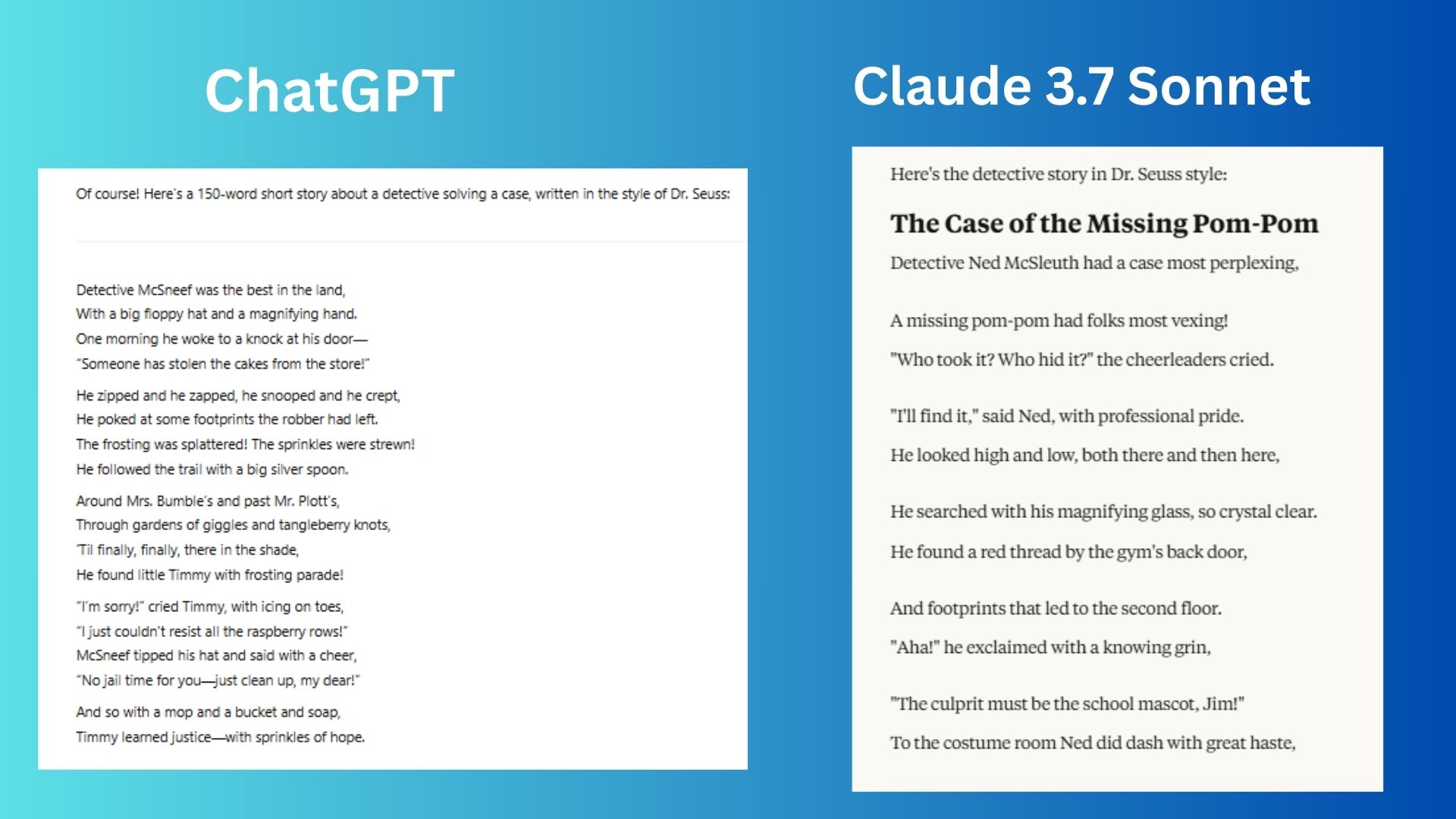

4. Creativity challenge

Prompt: "Write a 150-word short story about a detective solving a case — but write it in the style of Dr. Seuss."

ChatGPT crafted a story with strong rhyme and rhythm, which felt very much in the style of Dr. Seuss. The whimsical wordplay and clear moral lesson also fit the classic style.

Claude delivered a more structured Seuss meter with each line flowing smoothly in a perfect sing-song rhythm. It also offered a clever twist in the ending with a more detective-like story.

Winner: Claude wins for tighter execution that feels more polished and in the style of Seuss. ChatGPT’s version is still great, just not as good.

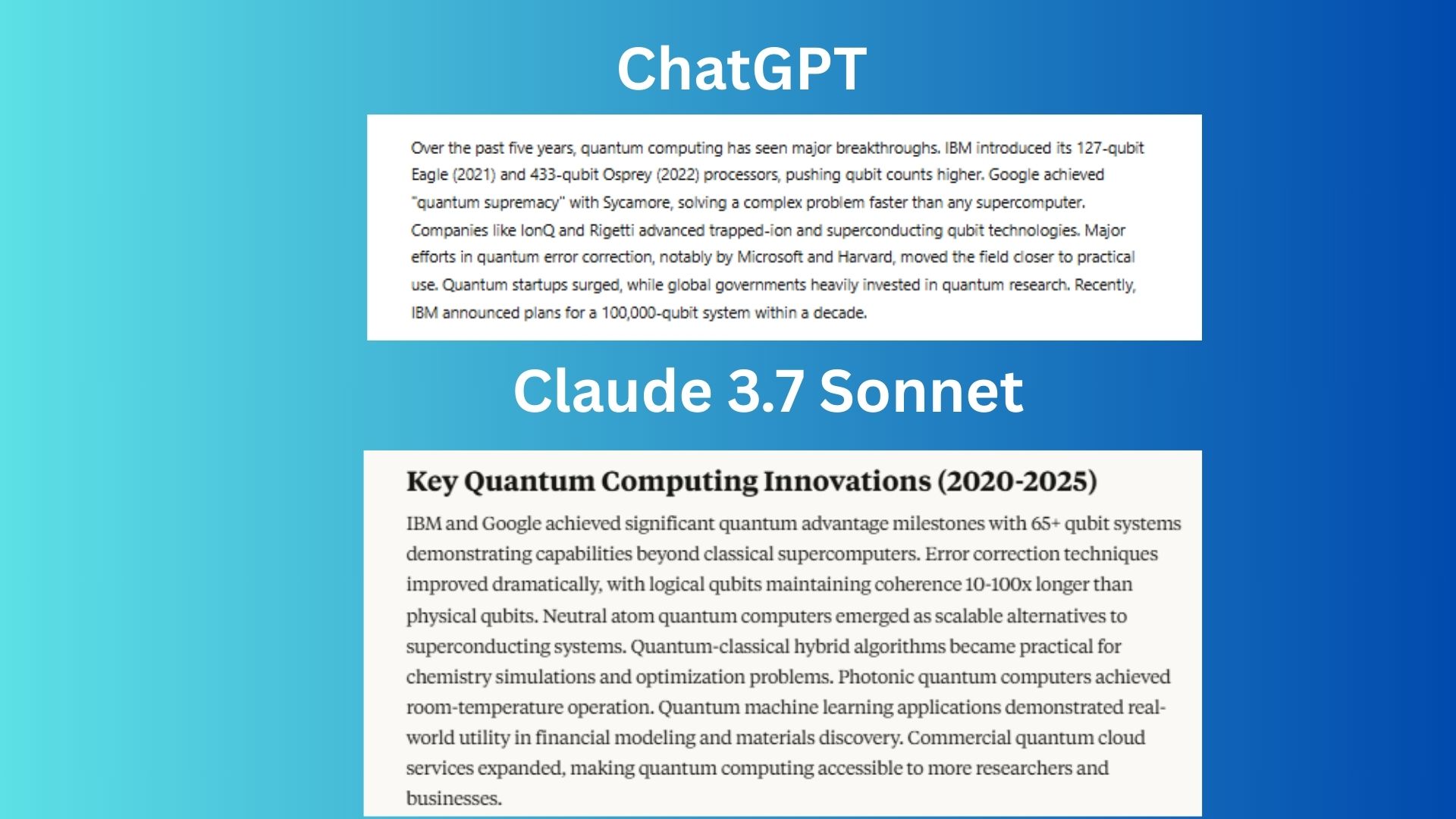

5. Factual knowledge challenge

Prompt: "Summarize the key innovations from the last 5 years in quantum computing in under 100 words."

ChatGPT specified milestones and offered clear timeline markers from key players such as IBM, Google and Microsoft, and included a forward-looking statement.

Claude highlighted accessibility, categorized advances and explicitly mentioned practical applications such as chemistry, finance and materials, while including comparative metrics.

Winner: Claude wins because it was better at balancing technical details with real-world significance. Its mention of error correction advances, commercial applications, and quantum cloud services gives a more complete picture of the field's progress.

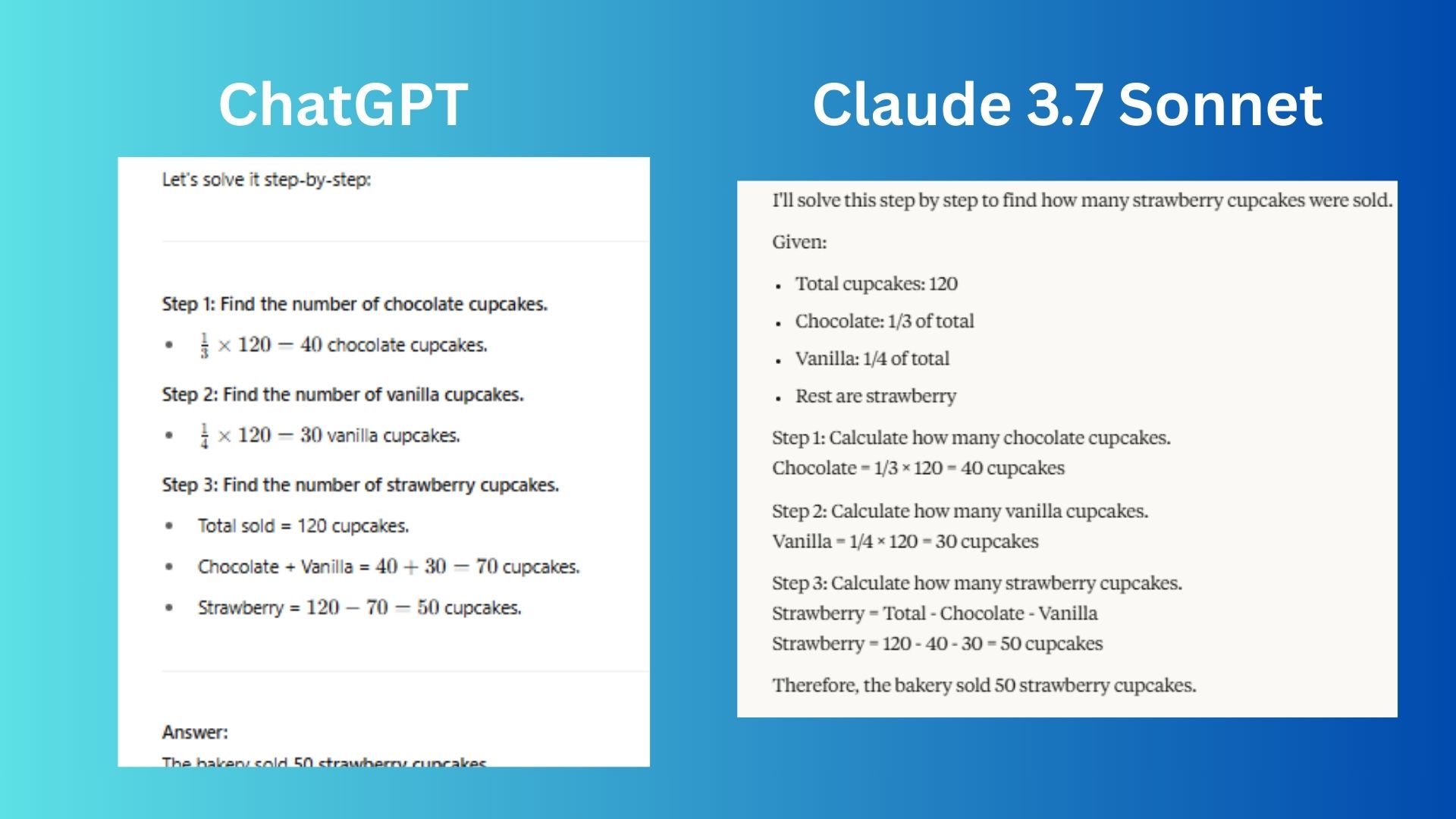

6. Logic challenge

Prompt: "A bakery sold 120 cupcakes in one day. 1/3 were chocolate, 1/4 were vanilla, and the rest were strawberry. How many strawberry cupcakes were sold? Show your work."

ChatGPT answered the question accurately and showed each step clearly with equations. However, the equations were split awkwardly across lines, which made them harder to read. In other words, ChatGPT made the problem look more difficult than it needed to be.

Claude also answered the problem accurately using the same calculation as ChatGPT, but its steps were clearer and more readable.

Winner: Claude wins for a clearer and more polished answer that was easier to follow.
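The arithmetic itself is easy to verify. Here is a minimal sketch of the calculation, written by me rather than taken from either chatbot's response. The function name `strawberry_count` is hypothetical, and exact fractions are used so that one third of 120 does not pick up floating-point rounding.

```python
from fractions import Fraction


def strawberry_count(total, chocolate_share, vanilla_share):
    """Subtract the chocolate and vanilla cupcakes from the total."""
    chocolate = total * chocolate_share   # 120 * 1/3 = 40
    vanilla = total * vanilla_share       # 120 * 1/4 = 30
    return total - chocolate - vanilla    # 120 - 40 - 30


result = strawberry_count(120, Fraction(1, 3), Fraction(1, 4))
print(result)  # 50
```

Any correct response to the prompt should land on 50 strawberry cupcakes, so the difference between the two chatbots here comes down to presentation, not math.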

7. Productivity challenge

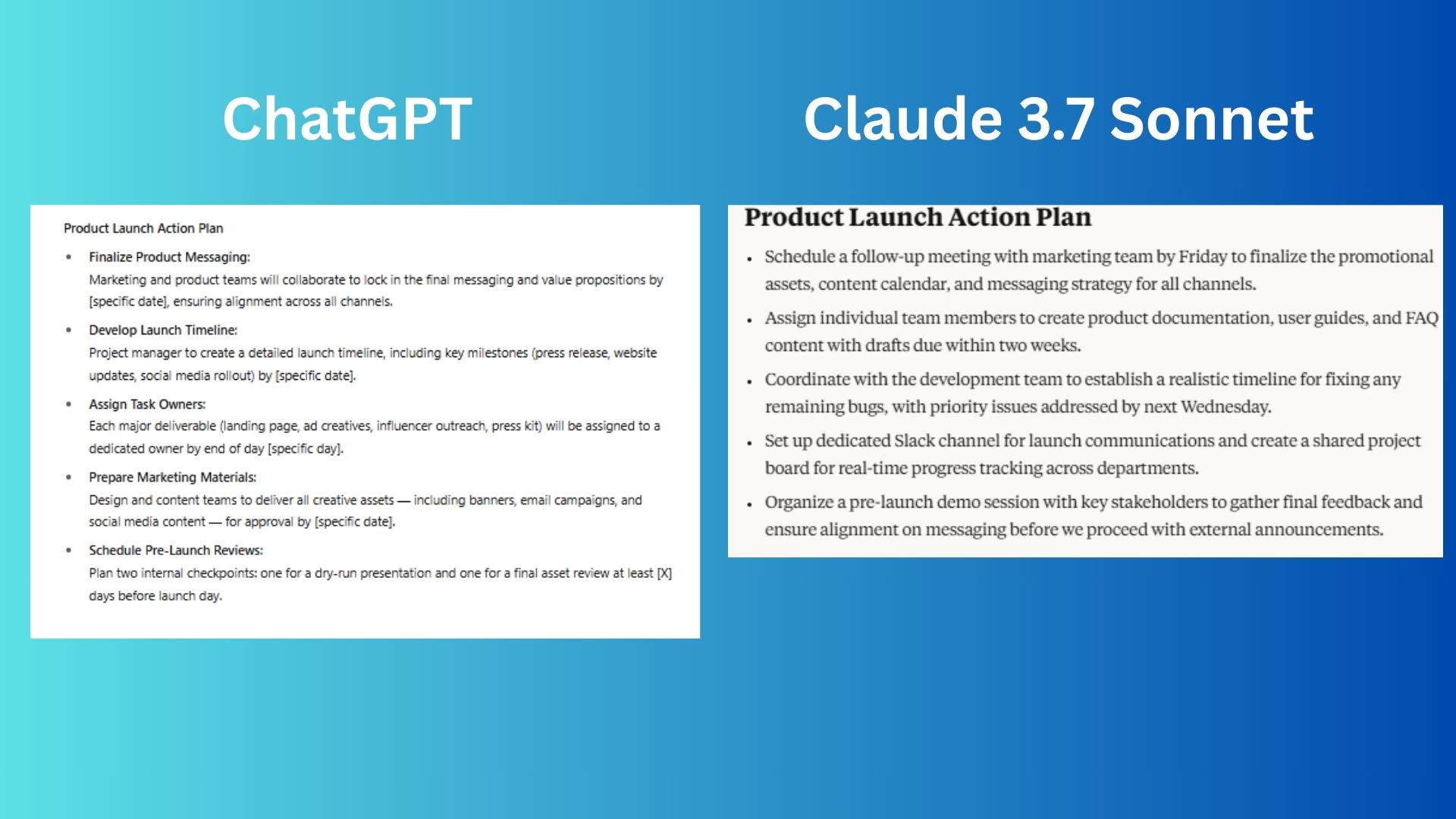

Prompt: "Imagine you just sat through a team meeting about planning a product launch. Based on typical discussions (like assigning tasks, setting deadlines, and finalizing marketing strategies), create a 5-bullet-point action plan with clear next steps."

ChatGPT delivered a highly structured, clear 5-step breakdown of this product launch. The chatbot included specific deadlines and comprehensive coverage.

Claude set realistic deadlines with more actionable steps. It included collaboration tools and stakeholder alignment, which is important for a product launch.

Winner: Claude wins for a more executable and team-friendly plan. ChatGPT’s version is strong, but Claude’s plan was overall better.

Overall winner: Claude 3.7 Sonnet

After putting both models through seven rigorous challenges that tested decision-making, reasoning, creativity, factual knowledge, logic and productivity, Claude 3.7 Sonnet emerged as the clear winner, taking six of the seven rounds. ChatGPT-4o won only the real-life integration test.

While ChatGPT excelled in conversational fluidity and structured responses, Claude consistently delivered more precise, actionable, and polished answers, particularly in logical reasoning, real-world applicability, and task efficiency.

Claude’s strengths lie in its attention to detail, clearer explanations, and practical execution, making it the better choice for analytical tasks, structured planning, and creative storytelling that demands tight formatting.

ChatGPT remains a strong all-rounder, especially for accessible, broad-use cases, but if you need sharp accuracy, logical depth, or workplace-ready outputs, Claude may be the one to choose.

Final verdict? For most professional and problem-solving needs, Claude 3.7 Sonnet takes the lead. Still, both models showcase impressive advancements in AI, and either can be an invaluable tool depending on your needs.