Europe’s busy election schedule in 2025 and 2026 is being targeted by AI-generated manipulation on social media, and the continent’s political landscape is transforming as it happens. The fight for voters’ hearts is no longer waged on the streets but on screens, through artificially generated images, cloned voices and sophisticated deepfakes.

It began in Moldova. In late December 2023, a video purportedly showing President Maia Sandu disowning her government and mocking the country’s European ambitions went viral on Telegram.

The Moldovan government swiftly dismissed the clip as fake, but the damage was done.

According to Balkan Insight, an investigative news website, and Bot Blocker, a fake-news watchdog, the Kremlin-linked bot network “Matryoshka” generated the clip using the Luma AI video platform.

The footage, voiced in Russian, caricatured Sandu as ineffective and corrupt, recycling earlier disinformation tied to fugitive oligarch Ilan Shor.

French cybersecurity agency Viginum later described how AI-generated deepfake videos, including the one mimicking President Sandu, were distributed through Telegram and TikTok by a pro-Russian propaganda network affiliated with the Russian media outlet Komsomolskaya Pravda.

Viginum said websites like moldova-news.com were backed by what it called a “structured and coordinated pro-Russian propaganda network.”

Troll factories and cloning

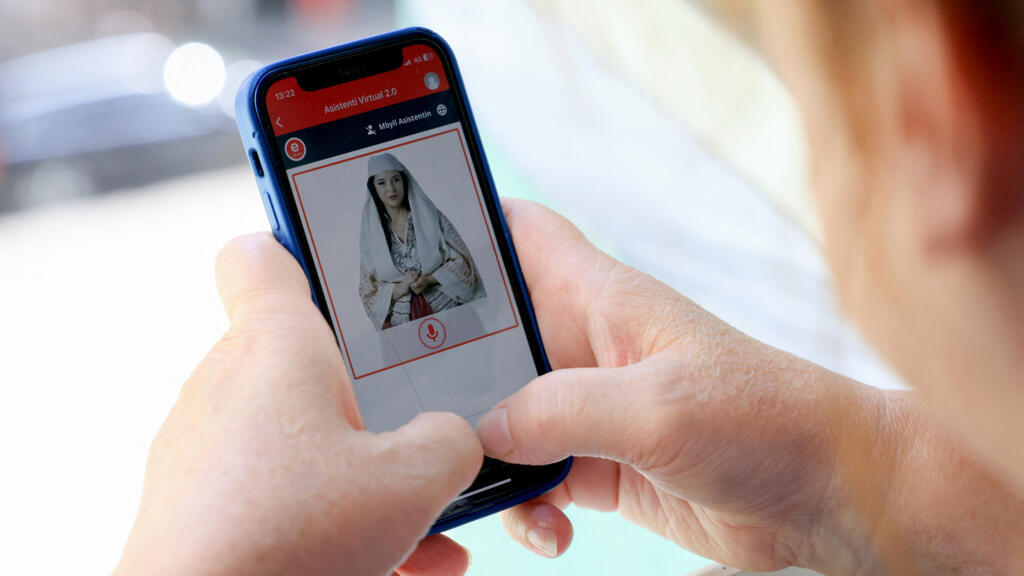

Saman Nazari, a researcher with Alliance4Europe, a Europe-wide pro-democracy platform, told RFI the use of AI to influence elections is massively increasing.

In the past, he said, people who wanted to influence elections would copy-paste the same text over and over again.

“They just have AI rewrite them, publish them across different accounts, different pages, with small variations aimed at specific target audiences,” Nazari said.

Nazari also said AI tools are now used to make disinformation operations look legitimate.

France’s Foreign Ministry said Storm 1516, a cyber-attack group, had launched 77 Russian disinformation campaigns targeting France, Ukraine and other Western countries since 2023.

According to Nazari, the operations were run by the Russian Foundation for Battling Injustice, the successor to the Saint Petersburg-based Internet Research Agency [founded by Yevgeny Prigozhin in 2013 and dissolved in 2023]. The foundation created websites that look exactly like well-known media outlets.

The groups running these websites clone news sites, fill them with stolen articles that are rewritten or translated and then re-publish them to appear credible, Nazari said.

Alliance4Europe has counted hundreds of such websites during European elections.

"In the past, it was quite a big job to create a website and fill it with content, but now it's being done almost automatically," Nazari said.

Personal targets

The threat is spreading into Western Europe. Professor Dominique Frizon de Lamotte of CY Cergy Paris University was targeted by an AI-generated video that faked his image and voice and attempted to link him to pro-Russian groups in Moldova.

“I have no connection with Moldova; I don’t even use Telegram,” he told France 3 television. The video was flagged by EUvsDisinfo, an EU misinformation monitoring group, and French media as an attempt to undermine trust in academics.

The older generation may not be able to distinguish between a real video and a deep fake. And there is a large portion of the voting population which is in that upper bracket.

REMARKS by Saman Nazari, researcher with Alliance4Europe

The 2024 presidential election in Romania brought further evidence of AI-linked interference.

Officials said the interference, widely attributed by European governments to Russian-backed actors, led to the annulment of the election results by Romania’s Constitutional Court, an unprecedented move in Europe.

During the rerun in mid-2025, far-right narratives and fabricated content circulating on TikTok and Telegram sought to influence public opinion. Pro-European candidate Nicusor Dan ultimately won the repeat vote.

All eyes on Hungary

Hungary is preparing for a flood of AI-influenced content ahead of its 2026 elections.

Pro-government groups, including the National Resistance Movement, have already spent over €1.5 million promoting unlabelled AI videos attacking opposition leader Peter Magyar.

Some clips show fabricated scenes of Hungarian soldiers dying in Ukraine to provoke nationalist sentiment. Magyar has called the videos “pathetic” and “election fraud”.

Analysts say that even when viewers think content might be fake, emotional impact still shapes opinions.

Within a wider legal framework, the European Union introduced the Digital Services Act in 2022 to strengthen platform rules, including requirements that platforms show who is behind political adverts.

The European Commission also operates a Rapid Alert System and an AI Integrity Taskforce to detect and counter manipulative content across languages and borders.

Voters at risk

Nazari said young people are used to seeing altered images and deepfakes online.

“Young people have grown up with memes, with people making deep fakes. Edited images and videos and so on. [They] are familiar with the concept.”

But older voters, he told RFI, are more likely to be misled.

“They might not be able to distinguish between a real video and a deep fake video,” Nazari said, adding they are especially vulnerable in countries where digital literacy is not very high.