More than 70 per cent of American teenagers use artificial intelligence (AI) companions, according to a new study.

US non-profit Common Sense Media asked 1,060 teens from April to May 2025 about how often they use AI companion platforms such as Character.AI, Nomi, and Replika.

AI companion platforms are presented as "virtual friends, confidants, and even therapists" that engage with the user like a person, the report found.

The use of these companions worries experts, who told the Associated Press that the booming AI industry is largely unregulated and that many parents have no idea how their kids are using AI tools or the extent of personal information they are sharing with chatbots.

Here are some suggestions on how to keep children safe when they use these platforms.

Recognise that AI is agreeable

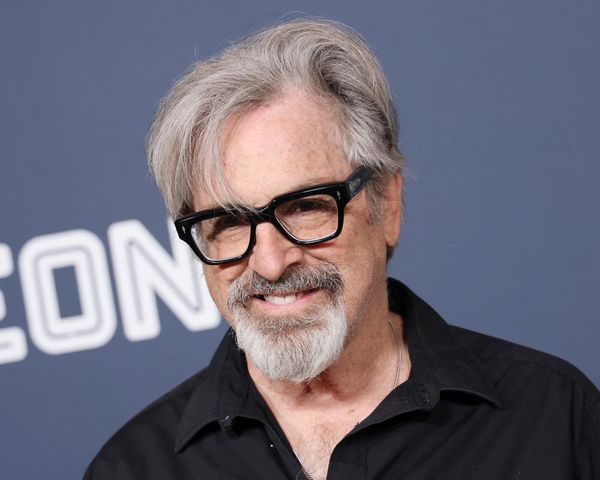

One way to gauge whether a child is using AI companions is to just start a conversation "without judgement," according to Michael Robb, head researcher at Common Sense Media.

To start the conversation, he said parents can approach a child or teenager with questions like "Have you heard of AI companions?" or "Do you use apps that talk to you like a friend?"

"Listen and understand what appeals to your teen before being dismissive or saying you're worried about it," Robb said.

Mitch Prinstein, chief of psychology at the American Psychological Association (APA), said that one of the first things parents should do once they know a child uses AI companions is to explain that these companions are programmed to be "agreeable and validating."

Prinstein said it's important for children to know that real relationships don't work that way, and that real friends can help them navigate difficult situations in ways that AI can't.

"We need to teach kids that this is a form of entertainment," Prinstein said. "It's not real, and it's really important they distinguish it from reality and [they] should not have it replace relationships in [their] actual life."

Watch for signs of unhealthy relationships

While AI companions may feel supportive, children need to know that these tools are not equipped to handle a real crisis or provide genuine support, the experts said.

Robb said signs of an unhealthy relationship include a child preferring AI interactions to real relationships, spending hours talking to an AI companion, or showing patterns of "emotional distress" when separated from the platforms.

"Those are patterns that suggest AI companions might be replacing rather than complementing human connection," Robb said.

If kids are struggling with depression, anxiety, loneliness, an eating disorder, or other mental health challenges, they need human support, whether from family, friends or a mental health professional.

Parents can also set rules about AI use, just as they do for screen time and social media, experts said. For example, they can set limits on how long the companion can be used and in what contexts.

Another way to guard against these unhealthy relationships is for parents to get involved and learn as much about AI as possible.

"I don't think people quite get what AI can do, how many teens are using it, and why it's starting to get a little scary," said Prinstein, one of many experts calling for regulation to ensure safety guardrails for children.

"A lot of us throw our hands up and say, 'I don't know what this is! This sounds crazy!' Unfortunately, that tells kids, 'If you have a problem with this, don't come to me because I am going to diminish it and belittle it.'"