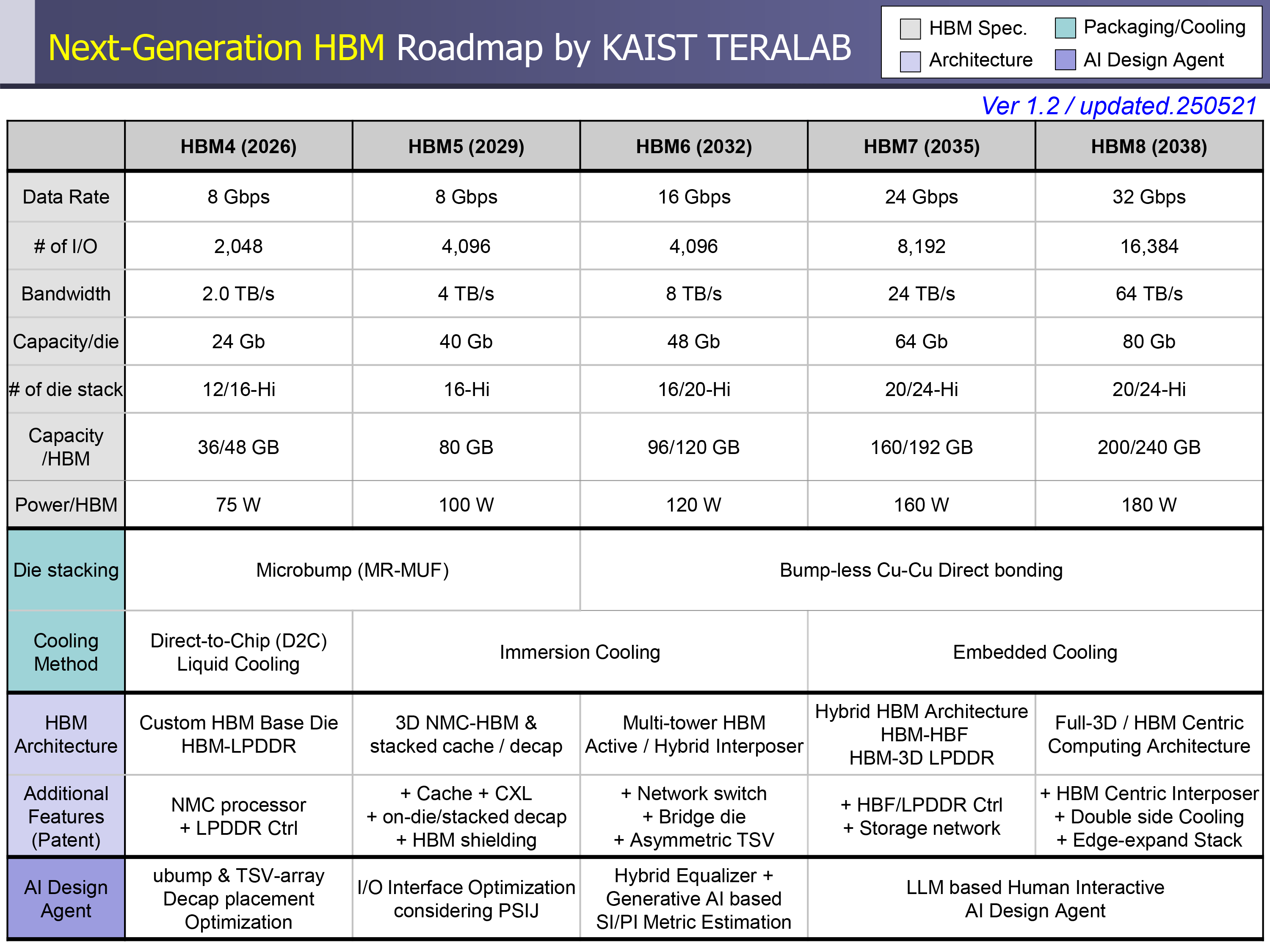

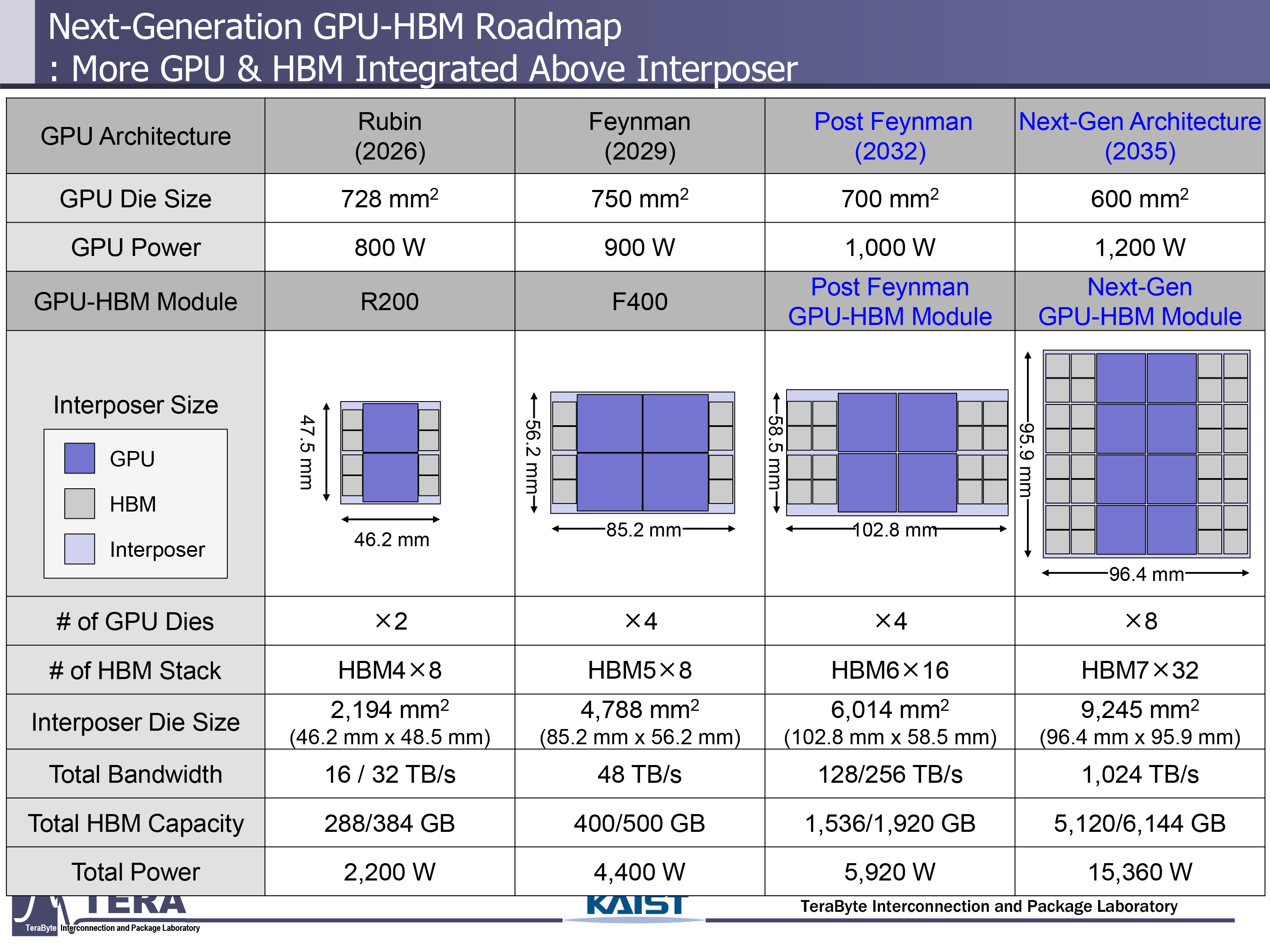

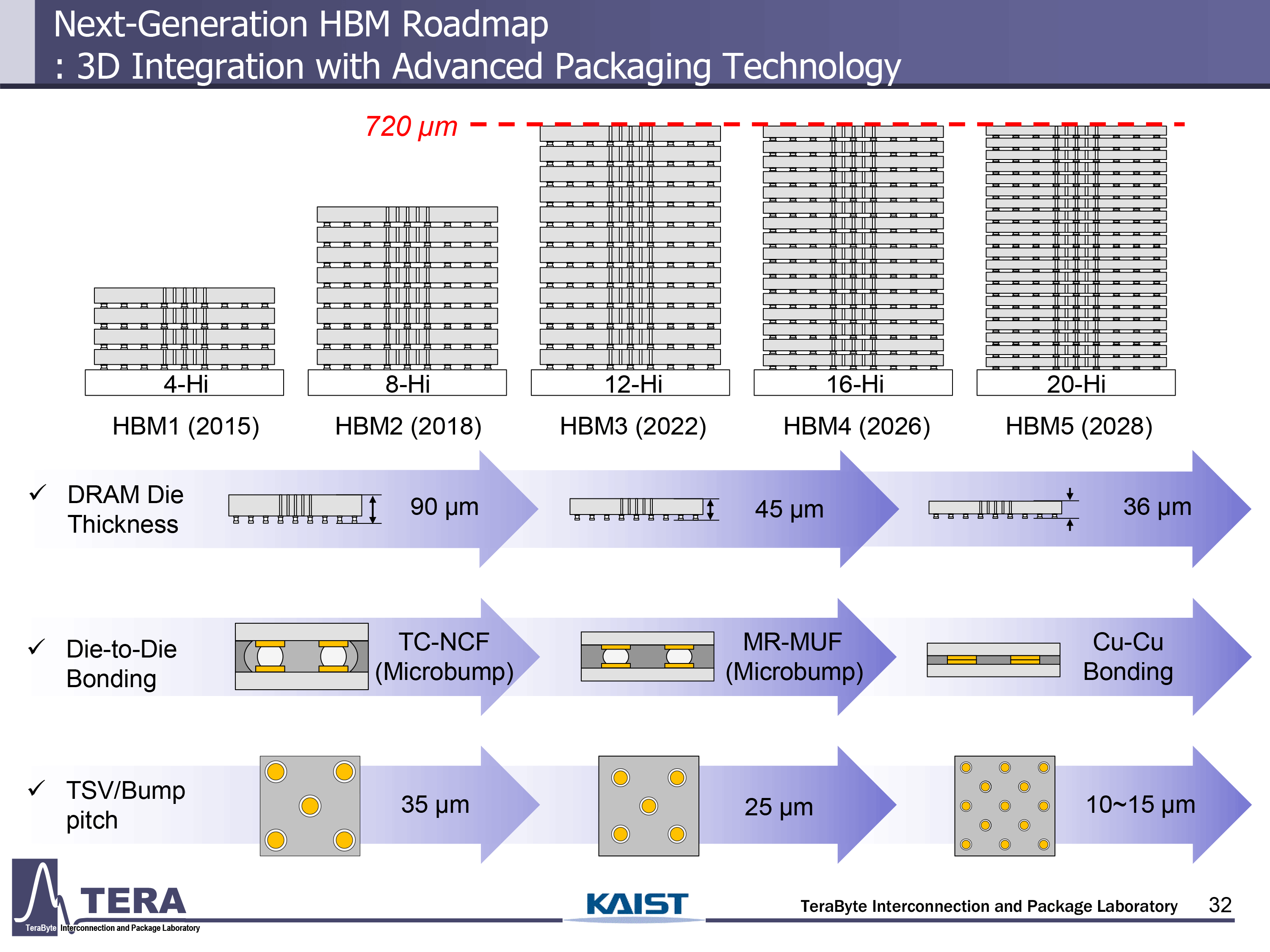

KAIST, a leading Korean national research institute, has released a 371-page paper that details the evolution of high-bandwidth memory (HBM) technologies through 2038, showing increases in bandwidth, capacity, I/O width, and thermals. The roadmap spans from HBM4 to HBM8, with developments in packaging, 3D stacking, memory-centric architectures with embedded NAND storage, and even machine learning-based methods to keep power consumption in check.

Keep in mind that the document is about the hypothetical evolution of HBM tech given the current direction of the industry and research, not an actual roadmap of a commercial company.

Total HBM capacity per accelerator is projected to grow from between 288 GB and 348 GB with HBM4 to between 5,120 GB and 6,144 GB with HBM8 (per-stack capacities are far lower, as detailed below). Power requirements will also scale with performance, rising from 75W per stack with HBM4 to 180W with HBM8.

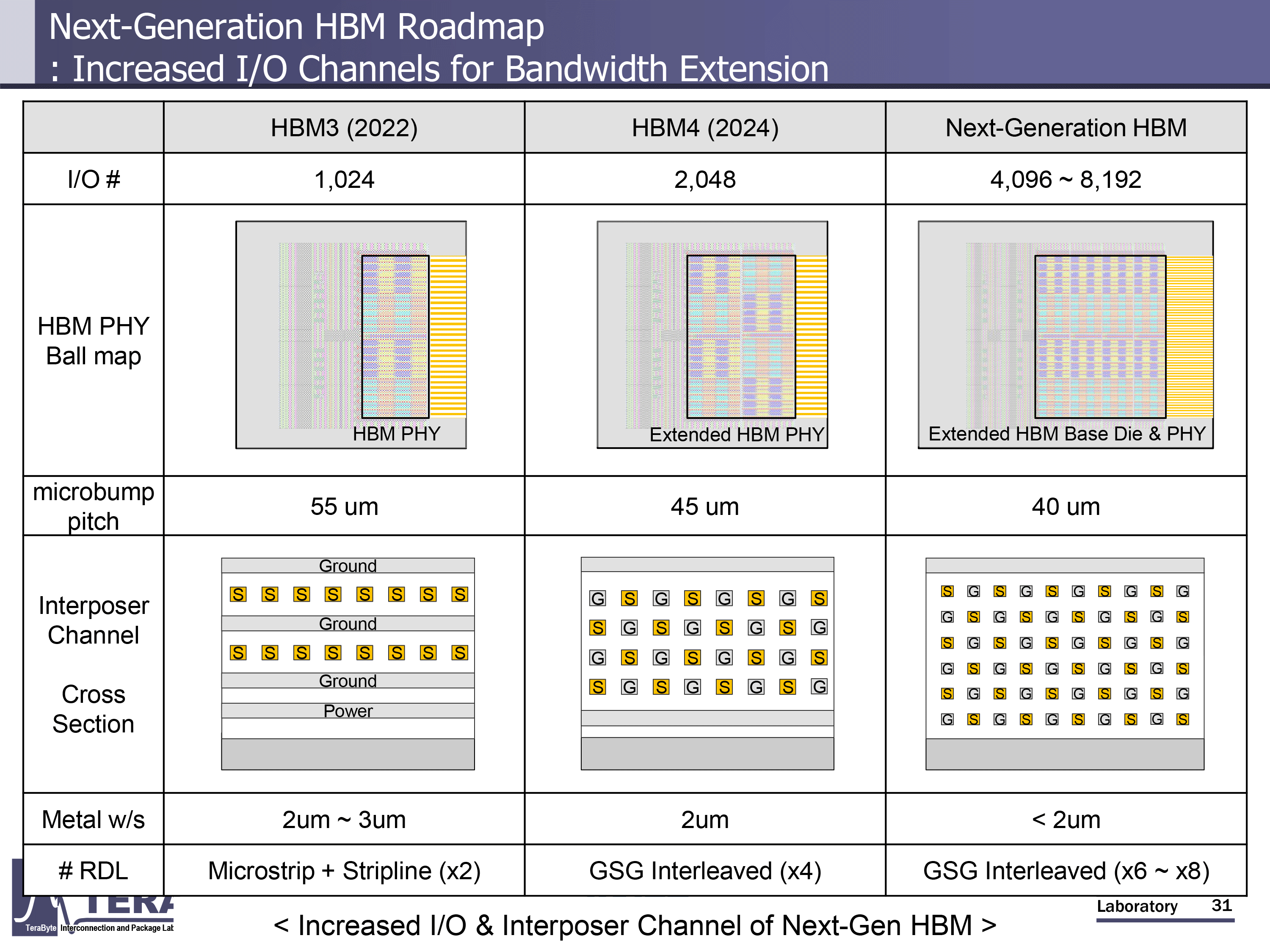

Between 2026 and 2038, memory bandwidth is projected to grow from 2 TB/s to 64 TB/s, while data transfer rates are set to rise from 8 GT/s to 32 GT/s. The I/O width per HBM package is also set to increase from the 1,024-bit interface of today's HBM3E to 2,048 bits with HBM4 and then all the way to 16,384 bits with HBM8.

We already know pretty much everything about HBM4 and we know that HBM4E will add customizability to base dies to make HBM4E more tailored for particular applications (AI, HPC, networking, etc.).

Expect such capabilities to remain in HBM5, which will also deploy stacked decoupling capacitors and 3D cache. With a new memory standard comes increased performance, so HBM5, expected to arrive in 2029, will retain HBM4's data rate but is projected to double the I/O count to 4,096, thereby raising bandwidth to 4 TB/s and per-stack capacity to 80 GB.

Per-stack power is expected to grow to 100W, which will require more advanced cooling methods. Interestingly, KAIST expects HBM5 to continue using microbump technology (MR-MUF), although the industry is reportedly already looking at direct bonding with HBM4. HBM5 will also integrate L3 cache, LPDDR, and CXL interfaces on the base die, alongside thermal monitoring. KAIST further expects AI tools to start playing a role in physical layout optimization and jitter reduction with the HBM5 generation.

HBM6 is projected to take over in 2032, increasing transfer speed to 16 GT/s and per-stack bandwidth to 8 TB/s. Capacity per stack is expected to reach 120 GB, and power climbs to 120W. Researchers at KAIST believe that HBM6 will adopt direct bonding without bumps, along with hybrid interposers combining silicon and glass. Architectural changes include multi-tower memory stacks, internal network switching, and extensive through-silicon via (TSV) distribution. AI design tools expand in scope, incorporating generative methods for signal and power modeling.

HBM7 and HBM8 will push things further, with HBM8 reaching 32 GT/s and 64 TB/s per stack. Per-stack capacity is projected to reach 240 GB. Packaging is expected to adopt full 3D stacking and double-sided interposers with embedded fluid channels.
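The per-stack bandwidth figures quoted above follow from the interface width and the data rate: peak bandwidth is roughly the number of I/O bits multiplied by the transfer rate, divided by eight to convert bits to bytes. The short Python sketch below is only a sanity check of that arithmetic, not anything taken from the KAIST paper. The function name `peak_bandwidth_tbps` is hypothetical, the 16,384-bit width is assumed to belong to HBM8, and 1 TB is assumed to equal 1,024 GB so that the results match the article's round numbers.

```python
def peak_bandwidth_tbps(io_width_bits: int, data_rate_gtps: float) -> float:
    """Return peak per-stack bandwidth in TB/s.

    Assumption: 1 TB = 1,024 GB, which reproduces the round figures
    quoted in the article.
    """
    # Each transfer moves io_width_bits bits; divide by 8 to get bytes.
    gb_per_second = io_width_bits * data_rate_gtps / 8
    return gb_per_second / 1024


# (I/O width in bits, data rate in GT/s) as quoted in the article.
# HBM6 and HBM7 are omitted because the text does not give both values.
generations = {
    "HBM4": (2048, 8),
    "HBM5": (4096, 8),
    "HBM8": (16384, 32),  # assumes the 16,384-bit width applies to HBM8
}

for name, (width, rate) in generations.items():
    print(f"{name}: {peak_bandwidth_tbps(width, rate):.0f} TB/s")
```

Under these assumptions the sketch reproduces the 2 TB/s, 4 TB/s, and 64 TB/s figures for HBM4, HBM5, and HBM8, respectively.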

Although HBM7 and HBM8 will still formally belong to the family of high-bandwidth memory solutions, their architectures are expected to differ dramatically from what we know as HBM today. Whereas HBM5 will add L3 cache and interfaces for LPDDR memory, these later generations are projected to incorporate NAND interfaces, enabling data movement from storage to HBM with minimal CPU, GPU, or ASIC involvement. That will come at the cost of power consumption, which is expected to reach 180W per stack. AI agents will manage real-time co-optimization of thermal, power, and signal paths, according to KAIST.

Keep in mind that KAIST is a research institution, not a company with an actual roadmap, so it merely models what could come based on the innovations known today. There are other respected research institutes in the semiconductor industry, including Imec in Belgium, CEA-Leti in France, Fraunhofer in Germany, and MIT in the U.S., just to name a few. These institutes issue similar predictions regarding semiconductor process nodes, chip materials, and other related topics. Some predictions may seem unrealistic today, but the industry tends to find unexpected ways to build products, so many of these predictions come true and are sometimes even exceeded by actual manufacturers, such as Intel or TSMC.
