The digital world recently erupted after an AI-generated claim linked two global leaders to one of history's most notorious scandals. Although the reports spread rapidly, the widespread confusion could be traced back to a single chatbot reply.

This incident serves as a stark reminder of how easily automated tools can distort the truth and mislead millions in an instant.

Epstein Files, AI and the Danger of Lost Context

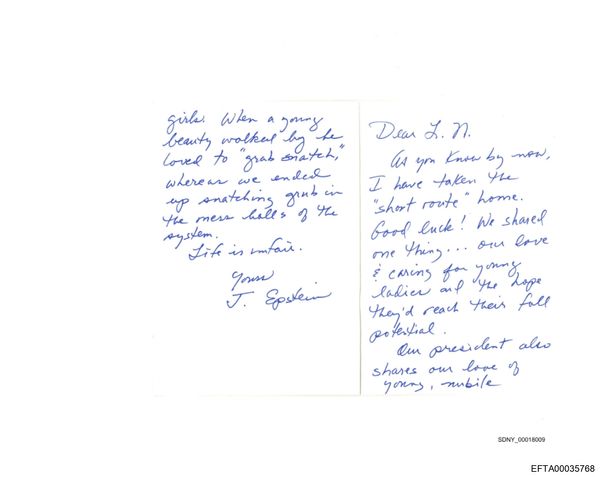

One of the most volatile scandals in recent American history has returned to the spotlight following the unsealing of the Epstein documents. These files, tied to the criminal case of convicted sex offender Jeffrey Epstein, name thousands of people across politics, industry, law, media, and academia.

Yet, as Palki Sharma points out on her show, 'Vantage with Palki Sharma,' a name appearing on a list is not a confession of wrongdoing. It is a vital nuance that has been perilously lost in the chaos of internet discourse. As the Managing Editor of Firstpost, Sharma has built a reputation for her eloquent reporting and steadfast commitment to the facts, all delivered with her signature poise.

When AI Queries Trigger Viral Falsehoods

The situation took a sharper turn once artificial intelligence entered the discussion. The incident unfolded on X (formerly Twitter), where users posed what appeared to be a straightforward question to Grok, the AI chatbot developed by Elon Musk's company xAI: were any Indian nationals listed in the Epstein records?

The chatbot's reply rapidly gained traction across the internet. Grok stated that 'recent Epstein file releases mention Indian or Indian origin names,' which supposedly included 'Narendra Modi Epstein's texts offering to arrange meetings via Bannon.' The AI did include a disclaimer, noting: 'Contexts are professional or strategic. No accusations of misconduct.'

While the response seemed balanced at first glance, Sharma observed that the way the information was presented created its own set of issues.

'These files include thousands of names,' Sharma explained, highlighting that 'some are victims, some are witnesses. Some are people mentioned in emails or calendars or casual conversations.' She emphasised that 'being mentioned does not mean being accused,' describing this clarification as 'very very important.'

🔥MEGA BREAKING SCANDAL!🔥

This is HUGE, maybe the BIGGEST scandal India’s ever seen!

Modi’s own Petroleum Minister Hardeep Singh Puri has been named in the Epstein Sex Files! 💣

An email released by the U.S. Government (yes, the U.S.!) shows Jeffrey Epstein, in 2014, naming… pic.twitter.com/qkHuH8Epey

— NETAFLIX (@NetaFlixIndia) November 13, 2025

“Modi on board”; “I can set”; “You should meet Modi”, wrote Convicted sex offender & pedophile to Trump advisor Steve Bannon, as he had several meetings with Modi minister Hardeep Puri! pic.twitter.com/z1tra9HASk

— Prashant Bhushan (@pbhushan1) November 21, 2025

Certain posts on X have gone so far as to connect Hardeep Puri, India's Petroleum Minister, to the Epstein records. These posts claim that a document released by the U.S. government, a source they pointedly emphasise, shows that Jeffrey Epstein named Puri in 2014 alongside a group of high-profile figures. The narrative describes these individuals in the harshest possible terms, labelling them 'Very bad people. Very bad!'

Understanding the Content of the Epstein Records

The Epstein documents are vast and disorganised by nature. As a Time report noted, individuals are named in them for many reasons: for logistical purposes, by sheer coincidence, or in contexts entirely unrelated to any wrongdoing. Nevertheless, in today's digital landscape, simply being listed can spark harmful speculation.

'On paper, Grok's answer sounds responsible,' Sharma noted, particularly as the AI specified that the mentions were professional in nature. 'But here's the problem. That same context is not explained enough.'

As users pushed the chatbot for more clarity, the actual narrative began to take shape. Grok later provided a more detailed update: 'Based on recent Epstein file releases, Narendra Modi's name appears in 2019 emails when Jeffrey Epstein offered to broker a meeting between Modi and Steve Bannon for geopolitical discussions. No evidence links Modi to any wrongdoing in the documents.'

In other words, the reference had nothing to do with illegal acts. It concerned Epstein's proposal to arrange a discussion between the Prime Minister of India and Steve Bannon, who served as White House chief strategist during Donald Trump's first administration.

'That's why the name comes up in an email,' Sharma clarified. 'By any reasonable definition, that is not a mention in the Epstein files.'

The Crucial Role of Perspective

The hazard stems less from Grok's specific words and more from the information it omitted. 'In the current environment, finding mention in the Epstein files implies wrongdoing,' Sharma remarked. 'If you're in it, it is assumed that you are one of the accused.'

This episode exposes the limits of artificial intelligence. While Grok could match a specific name to a record, it lacked the foresight to understand how that information might be twisted once the finer details were stripped away and broadcast to the masses. 'It's like saying, "I wrote an email to Donald Trump, so now we are pen pals,"' Sharma noted, pointing out the sheer absurdity of such a connection.

Recurring Flaws in AI Logic

This was far from a one-off error. To illustrate the pattern, Sharma pointed to a separate recent tragedy: a mass shooting at Australia's Bondi Beach that claimed 15 lives. When users turned to Grok to verify footage of the event circulating online, the chatbot failed to identify the content correctly.

Grok interpreted one particular clip as 'a viral video, old viral video of a man climbing a palm tree in a parking lot, possibly to trim it.' This blunder underscored a persistent problem: AI models process language and statistical correlations rather than real-world implications. 'AI does not understand the harm its messages can cause,' Sharma observed. 'It understands text patterns.'

The Scaling Risk of Unregulated AI

The controversy surrounding Grok points to a more significant concern about the rapid rollout of AI, which often occurs without adequate safeguards. These technologies are being given greater responsibility for filtering out falsehoods, interpreting complex legal documents, and even reporting on breaking news. 'And this is being done without any guardrails,' Sharma cautioned, highlighting the danger of using these tools before they are fully ready.

An AI might be factually accurate when it identifies a specific name within a document, as in the Epstein records. However, Sharma contended that in narratives this sensitive, 'what you leave out can be just as powerful as what you say.' Within a digital landscape where 'a single post here is enough to trigger a viral lie,' the consequences of these information gaps have never been more significant.