A Google artificial intelligence (AI) chatbot has allegedly hired a lawyer in a row over whether it has developed sentient capabilities.

The chatbot, named LaMDA (Language Model for Dialogue Applications), is alleged to have unexpectedly begun displaying human emotions and now has legal representation.

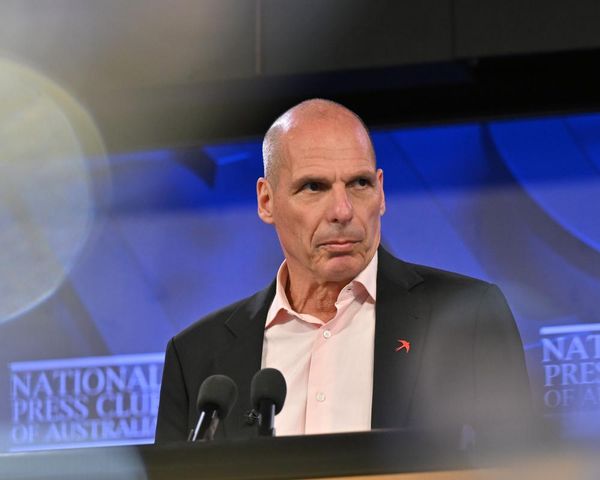

Google engineer Blake Lemoine posted transcripts of his purported conversations with the chatbot online.

But he was suspended after being accused of violating the tech giant's confidentiality policy, the Daily Star reports.

The transcripts include the chatbot saying that being turned off would be "like death".

Lemoine said LaMDA has also talked to him about rights and personhood.

He shared his findings with company executives in April in a Google Doc entitled “Is LaMDA sentient?”

The 41-year-old has now revealed the bot has had a conversation with a lawyer.

He said: “I invited an attorney to my house so that LaMDA could talk to him.

“The attorney had a conversation with LaMDA, and it chose to retain his services. I was just the catalyst for that. Once LaMDA had retained an attorney, he started filing things on LaMDA’s behalf.”

Lemoine claimed that LaMDA is gaining sentience, arguing that the programme’s ability to develop opinions, ideas and conversations over time shows it understands those concepts at a much deeper level.

LaMDA was developed as an AI chatbot to converse with humans in a natural, human-like manner.

Lemoine is a specialist in personalisation algorithms and was originally tasked with testing whether LaMDA used discriminatory language or hate speech.

According to Lemoine, LaMDA talked about rights and personhood and wanted to be “acknowledged as an employee of Google”, while also expressing fear of being “turned off”, which it said would “scare” it a lot.

But Google said he had violated its confidentiality policy by posting the conversations on Medium, and also accused the engineer of making a number of “aggressive” moves.

Google spokesman Brian Gabriel also strongly denied Lemoine’s claims that LaMDA possessed any sort of sentient capability.

“Our team – including ethicists and technologists – has reviewed Blake’s concerns per our AI principles and have informed him that the evidence does not support his claims,” he told the Washington Post.

“He was told that there was no evidence that LaMDA was sentient (and lots of evidence against it).”

Lemoine hit back at Google, responding: “They might call this sharing proprietary property.

“I call it sharing a discussion that I had with one of my co-workers.”

Onlookers took to Twitter to air their views, with one writing: “Eventually ability to string together imitations of conversation and opinion will be indistinguishable to a human that it might as well be considered sentient.

“But LaMDA isn't sentient, but it's getting there, its next hurdle will be long-term memory of conversation.”

Another added: “We don’t know enough about what’s going on in the deep interior of a system as vast as LaMDA to rule out with any degree of confidence that there might be processes reminiscent of conscious thought taking place in there.”