Generative AI has amazed the world with its capabilities in text, code, and image generation. Beneath the novelty, however, it has largely remained reactive, responding to inputs without initiative, memory, or lasting intent. It mimics intelligence without owning intention.

That is changing.

A new class of intelligent systems is emerging: agentic AI. These systems do not just produce output; they take action. They reason, plan, and adapt, pursuing goals, navigating ambiguity, recovering from setbacks, and adjusting to shifting contexts. This is not just a product upgrade; it is a product rethink.

If generative AI is about content creation, agentic AI is about goal execution. For product leaders, this evolution demands a new kind of architectural and strategic rigor.

From Prompts to Purpose

Traditional generative models scale expression. Agentic systems scale execution.

These are not just tools that complete tasks or boost productivity. They are actors pursuing outcomes. They can assess context, initiate strategies, and adapt based on feedback without step-by-step manual supervision.

It is the difference between a GPS that shows directions and a driver who reroutes, picks up a colleague, and stops for coffee without being told. Agentic AI operates like that driver. It takes initiative within structured boundaries. With initiative comes responsibility, not just for what the system does but also for how it behaves.

This shift requires a redefinition of success metrics. Output fluency is no longer enough. Product teams must now consider how soundly systems reason under uncertainty. Judgment becomes as important as logic.

Why Product Leadership Matters More Than Ever

In the era of generative AI, success hinged on the finesse of the user interface and the effectiveness of prompt engineering. Agentic systems require something deeper: behavioral architecture.

Product leaders must now define:

- What autonomy means in their context

- How goals align with user intent and business strategy

- How to mitigate risks that arise from decisions, not just actions

This shift is not just technical. It is organizational. Success now depends on cross-functional coordination across engineering, legal, compliance, design, and operations. Leaders must make deliberate decisions about escalation logic, tolerance boundaries, and trade-offs.

What outcomes are irreversible? What behaviors are tolerable? Where should control be relinquished, and where must it be retained?

A Product-Led Framework for Agentic AI

Building systems that are both agentic and trustworthy requires intentional design. Below is a four-layer framework product leaders can use to enable responsible autonomy.

1. Structured Context Pipelines

Would you trust a colleague to make key decisions with partial data? If not, do not ask your agents to do so either.

Agentic systems rely on structured, real-time, machine-readable context. This context must be continuously refreshed from logs, APIs, sensors, and user profiles, and tailored to the system’s active goals.

Key considerations:

- Deliver data hierarchically, not in bulk

- Resolve conflicting inputs in real time

- Compress context effectively to stay within token limits

Blind agents are brittle. With well-integrated context, agent behavior becomes more rational and dependable.
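The three considerations above can be sketched in a few lines. This is a minimal illustration under stated assumptions: each context item is assumed to carry a priority tier and a source timestamp, conflicts are resolved by keeping the newest value (one simple policy among many), and a word count stands in for a real tokenizer. `ContextItem` and `build_context` are hypothetical names, not part of any library.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ContextItem:
    key: str          # what the fact is about (hypothetical field names)
    value: str
    priority: int     # 0 = most important tier; higher numbers matter less
    timestamp: float  # when the source reported this value


def estimate_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def build_context(items: List[ContextItem], token_budget: int) -> List[ContextItem]:
    # 1. Resolve conflicts: when sources disagree on a key, keep the newest value.
    latest: Dict[str, ContextItem] = {}
    for item in items:
        current = latest.get(item.key)
        if current is None or item.timestamp > current.timestamp:
            latest[item.key] = item

    # 2. Deliver hierarchically: most important tier first, newest first within a tier.
    ordered = sorted(latest.values(), key=lambda i: (i.priority, -i.timestamp))

    # 3. Compress to the budget: greedily skip items that would overflow it.
    selected, used = [], 0
    for item in ordered:
        cost = estimate_tokens(f"{item.key}: {item.value}")
        if used + cost <= token_budget:
            selected.append(item)
            used += cost
    return selected


items = [
    ContextItem("order_status", "shipped", priority=0, timestamp=100.0),
    ContextItem("order_status", "delivered", priority=0, timestamp=200.0),
    ContextItem("user_tier", "gold", priority=1, timestamp=50.0),
    ContextItem("browsing_history", "viewed 14 items in the last week", priority=2, timestamp=150.0),
]
ctx = build_context(items, token_budget=5)
print([(i.key, i.value) for i in ctx])  # [('order_status', 'delivered'), ('user_tier', 'gold')]
```

Greedy packing by priority is the simplest way to respect a token limit; summarizing lower-priority items instead of dropping them is a common refinement.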

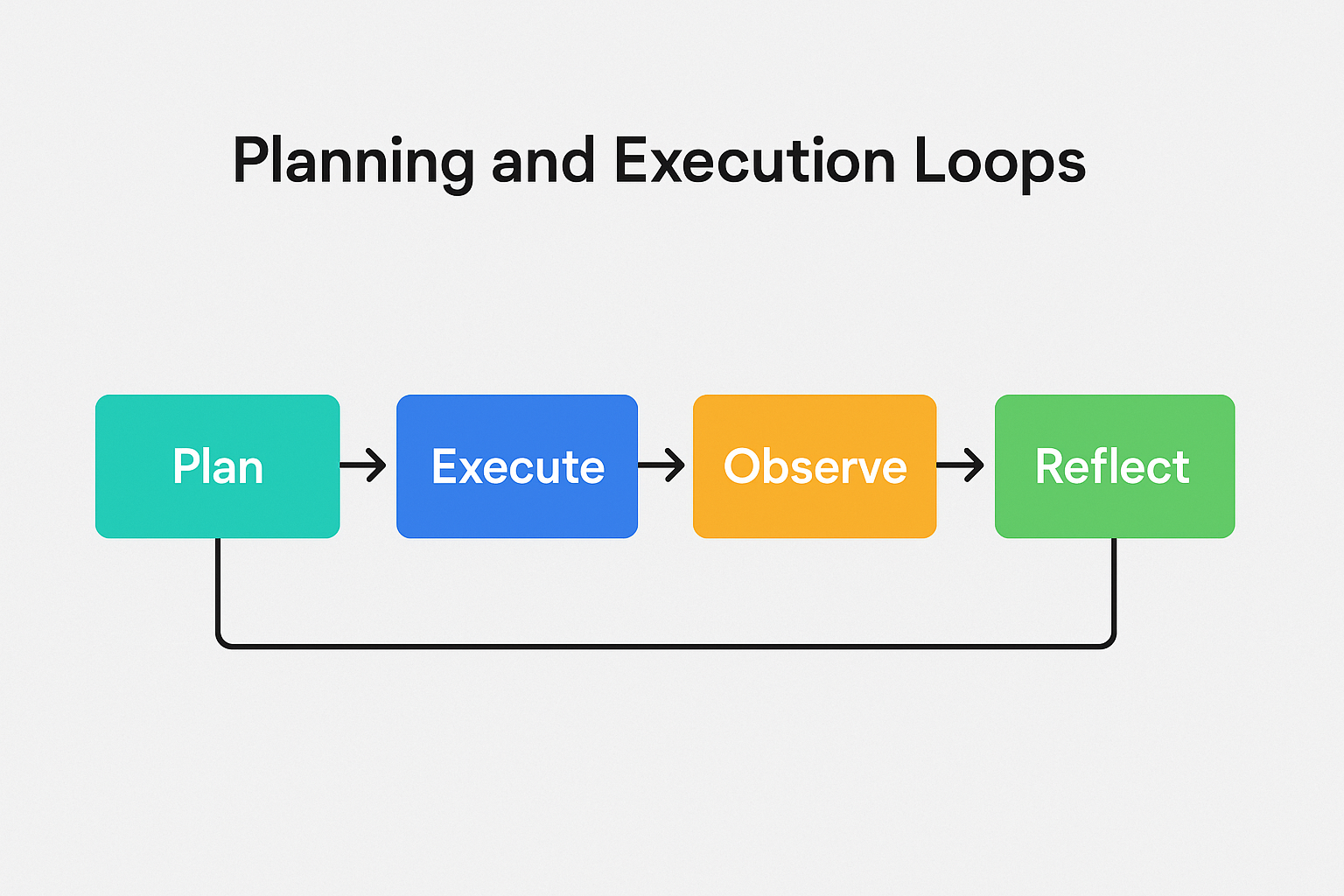

2. Planning and Execution Loops

Intelligent action is not a one-time event. It is iterative:

Image: Adaptive Planning and Execution Loops in Agentic AI Systems

These loops enable course correction, escalation when needed, and resilience over time.

Product teams must define:

- When agents should act versus ask

- What triggers human intervention

- How these loops evolve through feedback
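A plan-act-observe loop with explicit act-versus-ask and intervention rules might look like the sketch below. It assumes the planner reports a confidence score and that two consecutive failures warrant a human; `Step`, `run_agent_loop`, and all thresholds are hypothetical placeholders that each team would set for its own context.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Step:
    action: str
    confidence: float  # planner's self-reported confidence in [0, 1]


@dataclass
class LoopResult:
    status: str = "gave_up"  # becomes "done" or "escalated"
    history: List[str] = field(default_factory=list)


def run_agent_loop(
    plan: Callable[[List[str]], Optional[Step]],  # returns None once the goal is met
    execute: Callable[[str], bool],               # returns True if the action succeeded
    act_threshold: float = 0.8,
    max_failures: int = 2,
    max_iterations: int = 10,
) -> LoopResult:
    result = LoopResult()
    failures = 0
    for _ in range(max_iterations):
        step = plan(result.history)  # re-plan from the feedback gathered so far
        if step is None:
            result.status = "done"
            return result
        if step.confidence < act_threshold:
            # Act versus ask: too uncertain to act alone, so hand off to a human.
            result.history.append(f"ask:{step.action}")
            result.status = "escalated"
            return result
        ok = execute(step.action)
        result.history.append(f"{'ok' if ok else 'fail'}:{step.action}")
        failures = 0 if ok else failures + 1
        if failures >= max_failures:
            # Repeated setbacks are a trigger for human intervention.
            result.status = "escalated"
            return result
    return result  # iteration budget exhausted without finishing


def toy_plan(history: List[str]) -> Optional[Step]:
    if not history:
        return Step("fetch_catalog_record", 0.95)
    if history[-1] == "ok:fetch_catalog_record":
        return Step("correct_anomaly", 0.9)
    return None  # goal met


result = run_agent_loop(toy_plan, execute=lambda action: True)
print(result.status, result.history)
# done ['ok:fetch_catalog_record', 'ok:correct_anomaly']
```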

In one recent implementation, a behavior-led agentic system was designed to safeguard product integrity by identifying data inconsistencies and resolving catalog-level anomalies at scale. The initiative resulted in over $600 million in projected savings and strengthened system-wide trust in AI decision-making. The outcome was driven not by a single task but by a loop of feedback and adjustment engineered from the start.

3. Action Interfaces with Guardrails

Autonomy without oversight is a liability.

Every agent-initiated action, whether calling an API or editing a database, must be:

- Logged

- Auditable

- Reversible

Systems must enforce:

- Permission hierarchies

- Rollback protocols

- Rate limits and audit trails

Trust is designed at the interface layer, not added afterward.
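One way to make these properties concrete is to route every agent action through a single executor that checks permissions, enforces a rate limit, logs the attempt, and records an undo step. The sketch below assumes each action is supplied with its own `undo` callable; `GuardedExecutor` and the three-level permission table are illustrative, not a real framework.

```python
import time
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical permission hierarchy: each level includes the ones below it.
PERMISSION_LEVELS = {"read": 0, "write": 1, "admin": 2}


@dataclass
class AuditEntry:
    agent: str
    action: str
    allowed: bool
    timestamp: float


class GuardedExecutor:
    def __init__(self, agent: str, level: str, max_actions_per_minute: int = 30):
        self.agent = agent
        self.level = PERMISSION_LEVELS[level]
        self.max_per_minute = max_actions_per_minute
        self.audit_log: List[AuditEntry] = []
        self._undo_stack: List[Callable[[], None]] = []
        self._recent: List[float] = []

    def run(self, action: str, required: str,
            do: Callable[[], None], undo: Callable[[], None]) -> bool:
        now = time.time()
        # Rate limit: only actions from the last 60 seconds count toward the cap.
        self._recent = [t for t in self._recent if now - t < 60]
        allowed = (PERMISSION_LEVELS[required] <= self.level
                   and len(self._recent) < self.max_per_minute)
        # Log every attempt, including refused ones, so the audit trail is complete.
        self.audit_log.append(AuditEntry(self.agent, action, allowed, now))
        if not allowed:
            return False
        do()
        self._recent.append(now)
        self._undo_stack.append(undo)  # remember how to reverse this action
        return True

    def rollback(self) -> int:
        # Undo executed actions newest-first; return how many were reversed.
        count = 0
        while self._undo_stack:
            self._undo_stack.pop()()
            count += 1
        return count


catalog = {"sku-1": 10.0}
ex = GuardedExecutor("pricing-agent", level="write")
ex.run("set price", "write",
       do=lambda: catalog.update({"sku-1": 12.0}),
       undo=lambda: catalog.update({"sku-1": 10.0}))
ex.run("clear catalog", "admin", do=catalog.clear, undo=lambda: None)
print(catalog, [e.allowed for e in ex.audit_log])  # {'sku-1': 12.0} [True, False]
ex.rollback()
print(catalog)  # {'sku-1': 10.0}
```

Requiring an `undo` at call time is a design choice: it forces whoever exposes an action to the agent to think about reversal up front rather than after an incident.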

4. Human-in-the-Loop Mechanisms

Even the most capable systems need human oversight. Human-in-the-loop (HITL) design is essential for contextual, ethical, and high-stakes decisions.

Agents must know when to:

- Escalate uncertainty

- Seek approval

- Explain decisions clearly

This is not AI replacing people. It is humans designing better delegation systems.
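The three escalation behaviors can be expressed as a small routing function. This is a sketch assuming the agent produces a confidence score and that actions are tagged as high-stakes upstream; `route_decision` and its thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    mode: str         # "auto", "needs_approval", or "escalate"
    explanation: str  # plain-language reason a reviewer can read later


def route_decision(action: str, confidence: float, high_stakes: bool,
                   approve_above: float = 0.9, escalate_below: float = 0.6) -> Decision:
    # Threshold defaults are illustrative placeholders, not recommendations.
    if confidence < escalate_below:
        # Escalate uncertainty: hand the whole decision to a human.
        return Decision("escalate",
                        f"{action}: confidence {confidence:.2f} is below {escalate_below}; "
                        "handing the decision to a human.")
    if high_stakes or confidence < approve_above:
        # Seek approval: propose the action but wait for human sign-off.
        reason = ("high-stakes action" if high_stakes
                  else f"confidence {confidence:.2f} is below {approve_above}")
        return Decision("needs_approval", f"{action}: {reason}; awaiting approval.")
    return Decision("auto", f"{action}: confidence {confidence:.2f}, low stakes; proceeding.")


for args in [("merge duplicate listings", 0.97, False),
             ("issue refund", 0.95, True),
             ("reclassify product", 0.40, False)]:
    d = route_decision(*args)
    print(d.mode, "|", d.explanation)
```

Every branch returns an explanation, so even fully automatic actions leave a reason behind for later review.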

A Case for Applied Behavior, Not Just Machine Learning

When building systems that interact with messy, real-world data, product decisions go beyond model tuning. The real work is constructing behavior:

- Systems that can explain their reasoning

- Mechanisms to override or roll back actions

- Processes that adapt when confidence dips or ambiguity spikes

In a separate deployment, a secondary “LLM-as-a-judge” was introduced to evaluate the outputs of the main system. This agent monitored decision quality, flagged edge cases, and helped the primary model self-correct, improving performance and reinforcing accountability without relying solely on human review.
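The source does not describe how that judge was implemented. The sketch below shows the general pattern under assumptions of its own: `primary` and `judge` are plain Python callables standing in for model calls (no particular LLM API is implied), the judge returns a score plus written feedback, and answers that never pass are flagged for human review rather than discarded. `generate_with_judge` and the toy models are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Verdict:
    score: float   # judge's quality score in [0, 1]
    feedback: str  # critique handed back to the primary model


@dataclass
class JudgedOutput:
    output: str
    score: float
    attempt: int   # which round produced this output
    flagged: bool  # True if no attempt met the acceptance score


def generate_with_judge(
    task: str,
    primary: Callable[[str, Optional[str]], str],  # (task, feedback) -> answer
    judge: Callable[[str, str], Verdict],          # (task, answer) -> verdict
    accept_score: float = 0.8,
    max_rounds: int = 3,                           # assumed to be at least 1
) -> JudgedOutput:
    feedback: Optional[str] = None
    best: Optional[JudgedOutput] = None
    for attempt in range(1, max_rounds + 1):
        answer = primary(task, feedback)
        verdict = judge(task, answer)
        if verdict.score >= accept_score:
            return JudgedOutput(answer, verdict.score, attempt, flagged=False)
        if best is None or verdict.score > best.score:
            best = JudgedOutput(answer, verdict.score, attempt, flagged=True)
        feedback = verdict.feedback  # lets the primary self-correct next round
    # Nothing passed: return the best attempt, flagged as an edge case for review.
    return best


def toy_primary(task: str, feedback: Optional[str]) -> str:
    return "revised answer" if feedback else "draft answer"


def toy_judge(task: str, answer: str) -> Verdict:
    return Verdict(0.9, "") if answer.startswith("revised") else Verdict(0.5, "add units")


out = generate_with_judge("summarize anomaly report", toy_primary, toy_judge)
print(out.output, out.attempt, out.flagged)  # revised answer 2 False
```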

The design was not model-first. It was a behavior-first approach, defining how the system should handle ambiguity, prioritize safety, and maintain transparency.

The result wasn't just functional. It reflected thoughtful engineering grounded in safety, context, and the ability to recover when things went off track.

Where Agentic AI Is Already Showing Up

Agentic systems are stepping out of the lab and into production workflows across industries:

- Customer Support: Issue triage, SLA-aware escalation, and full-cycle resolution

- Catalog and Data Operations: Product deduplication, anomaly correction, and policy enforcement

- Knowledge Workflows: Multi-source research, summarization, and automated synthesis

- People Operations: Candidate evaluation, interview feedback, and scheduling with constraints

The common thread? These are not just examples of automation. They reflect delegation, where systems are entrusted with goals, context, and bounded decision authority.

These use cases also reflect increasing trust. Businesses are no longer treating AI as a back-office assistant. They are embedding it directly into operational workflows and holding it accountable for outcomes, not just output.

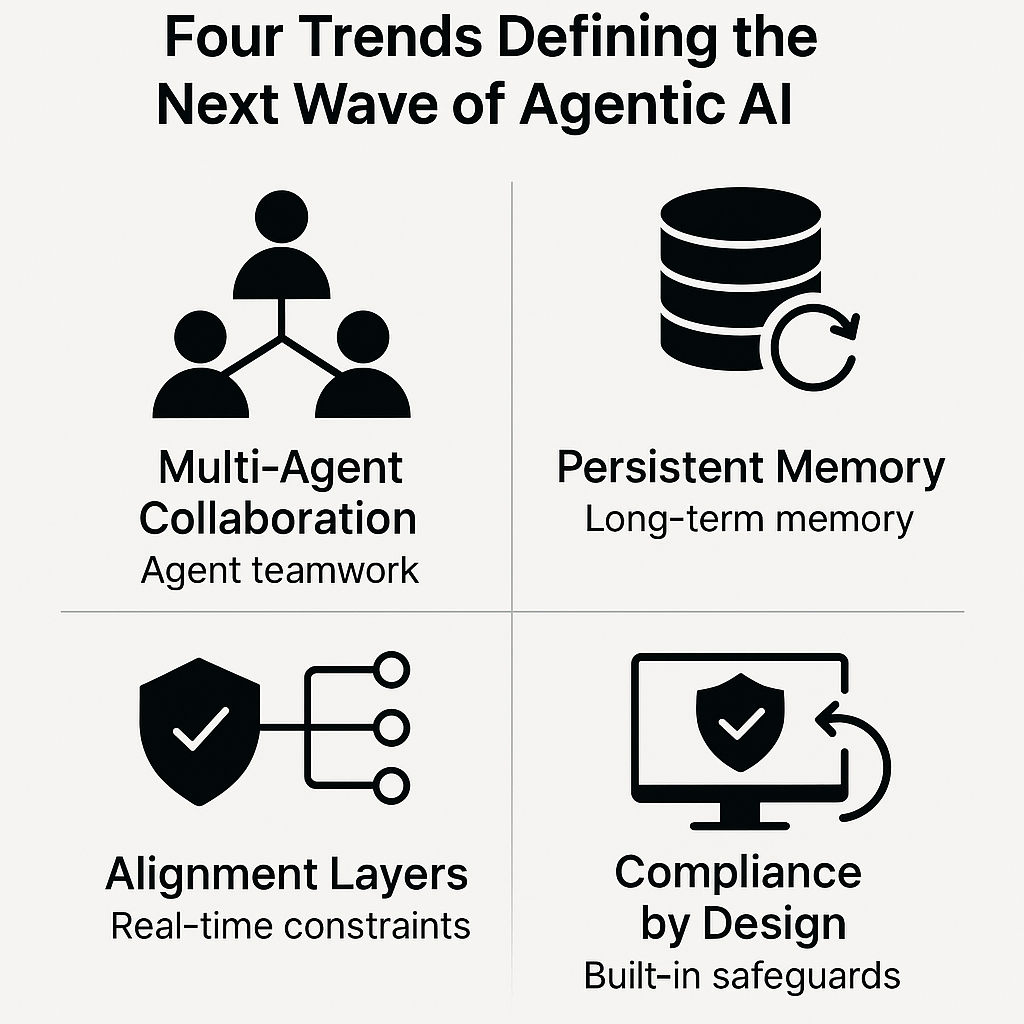

Four Trends Defining the Next Wave

Looking ahead, four design trends are emerging as critical enablers of agentic systems.

Image: Four Trends Defining the Next Wave of Agentic AI

1. Multi-Agent Collaboration

Agents are not operating in isolation. Task-specific agents are beginning to coordinate across functions, such as legal and finance, sharing memory and outcomes. Architecting these interactions requires standardized messaging, goal alignment protocols, and shared context buffers.
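A standardized message format with a shared goal identifier and context buffer could look like this minimal, in-process sketch. `AgentMessage`, `MessageBus`, and the intent vocabulary are hypothetical; a production system would likely use a durable queue rather than in-memory deques.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass(frozen=True)
class AgentMessage:
    sender: str
    recipient: str
    goal_id: str   # ties every message to one explicitly named shared goal
    intent: str    # e.g. "request", "result", "objection" (hypothetical vocabulary)
    payload: dict


@dataclass
class SharedContext:
    goal_id: str
    facts: dict = field(default_factory=dict)  # shared buffer visible to all agents


class MessageBus:
    def __init__(self, context: SharedContext):
        self.context = context
        self.queues: Dict[str, deque] = {}

    def register(self, agent: str) -> None:
        self.queues[agent] = deque()

    def send(self, msg: AgentMessage) -> None:
        # Goal alignment check: refuse messages about a different goal.
        if msg.goal_id != self.context.goal_id:
            raise ValueError(f"goal {msg.goal_id!r} does not match {self.context.goal_id!r}")
        if msg.recipient not in self.queues:
            raise KeyError(f"unknown agent {msg.recipient!r}")
        if msg.intent == "result":
            # Results are written to the shared buffer so every agent can see them.
            self.context.facts.update(msg.payload)
        self.queues[msg.recipient].append(msg)

    def receive(self, agent: str) -> Optional[AgentMessage]:
        queue = self.queues[agent]
        return queue.popleft() if queue else None


ctx = SharedContext(goal_id="vendor-contract-42")
bus = MessageBus(ctx)
for name in ("legal", "finance"):
    bus.register(name)
bus.send(AgentMessage("legal", "finance", "vendor-contract-42", "result",
                      {"liability_cap_ok": True}))
print(ctx.facts)  # {'liability_cap_ok': True}
```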

2. Persistent Memory

Short-term prompt history is not enough. Agents must retain and reuse learning across sessions, workflows, and users to remain adaptive. Memory systems enable deeper personalization and long-term planning, both of which are essential for enterprise deployment.
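At its simplest, persistent memory means writing what an agent learns somewhere that outlives the session and reading it back later. The toy below uses a JSON file keyed by user; `PersistentMemory` and the file layout are assumptions, and an enterprise deployment would more plausibly use a database or vector store with access controls.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict, List


class PersistentMemory:
    """Toy cross-session memory: notes per user, stored in one JSON file."""

    def __init__(self, path: str):
        self.path = Path(path)
        # Reload whatever earlier sessions stored, if the file exists.
        self.data: Dict[str, List[str]] = (
            json.loads(self.path.read_text()) if self.path.exists() else {}
        )

    def remember(self, user: str, note: str) -> None:
        self.data.setdefault(user, []).append(note)
        self.path.write_text(json.dumps(self.data))  # persist immediately

    def recall(self, user: str, limit: int = 5) -> List[str]:
        # Newest notes first, capped so they fit in a prompt.
        return list(reversed(self.data.get(user, [])))[:limit]


with tempfile.TemporaryDirectory() as tmp:
    store = Path(tmp) / "memory.json"
    session_1 = PersistentMemory(str(store))
    session_1.remember("user-7", "prefers weekly summaries")
    session_1.remember("user-7", "works in the EU time zone")
    session_2 = PersistentMemory(str(store))  # a later session, fresh object
    recalled = session_2.recall("user-7")
print(recalled)  # ['works in the EU time zone', 'prefers weekly summaries']
```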

3. Alignment Layers

Ethical, operational, and compliance constraints must be embedded into runtime logic, not merely written into static policies. This includes real-time value checks, explainability layers, and decision-path tracing, enabling live adherence to organizational norms.
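A runtime alignment check can be as simple as evaluating every proposed action against a set of named rules and keeping a trace of each verdict. In this sketch, `check_action`, the rule names, the action fields, and the 500 refund cap are all invented for illustration; every rule is evaluated, even after a failure, so the decision path is fully traceable.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

# A rule returns None if the action passes, or a short reason if it is blocked.
Rule = Callable[[dict], Optional[str]]


@dataclass
class CheckResult:
    allowed: bool
    trace: List[Tuple[str, str]] = field(default_factory=list)  # (rule, verdict) per rule


def check_action(action: dict, rules: Dict[str, Rule]) -> CheckResult:
    result = CheckResult(allowed=True)
    for name, rule in rules.items():
        reason = rule(action)
        result.trace.append((name, "pass" if reason is None else f"block: {reason}"))
        if reason is not None:
            result.allowed = False  # keep going so the trace covers every rule
    return result


# Hypothetical rules; real ones would come from legal, compliance, and operations.
RULES: Dict[str, Rule] = {
    "refund_cap": lambda a: ("refund over 500"
                             if a.get("type") == "refund" and a.get("amount", 0) > 500
                             else None),
    "no_pii_export": lambda a: "exports personal data" if a.get("contains_pii") else None,
}

verdict = check_action({"type": "refund", "amount": 800}, RULES)
print(verdict.allowed, verdict.trace)
# False [('refund_cap', 'block: refund over 500'), ('no_pii_export', 'pass')]
```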

4. Compliance by Design

Auditability, fairness, and traceability are not optional features of a system. They are architectural requirements for responsible deployment at scale. From logs to rollback, these controls must be in place from day one, not bolted on after systems fail.
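The source does not prescribe a logging mechanism. Hash chaining, where each entry includes the hash of the one before it, is one common way to make an audit log tamper-evident; the sketch below uses Python's standard `hashlib` and `json` modules, and `HashChainedLog` is a hypothetical name.

```python
import hashlib
import json
from typing import Dict, List


class HashChainedLog:
    """Append-only log in which each entry commits to the previous entry's hash."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries: List[Dict] = []

    def _digest(self, record: dict, prev_hash: str) -> str:
        body = json.dumps({"record": record, "prev": prev_hash}, sort_keys=True)
        return hashlib.sha256(body.encode("utf-8")).hexdigest()

    def append(self, record: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        digest = self._digest(record, prev)
        self.entries.append({"record": record, "prev": prev, "hash": digest})
        return digest

    def verify(self) -> bool:
        # Recompute every hash; editing any past record breaks the chain from there on.
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != self._digest(entry["record"], prev):
                return False
            prev = entry["hash"]
        return True


log = HashChainedLog()
log.append({"agent": "pricing-agent", "action": "set price", "amount": 12.0})
log.append({"agent": "pricing-agent", "action": "rollback"})
print(log.verify())  # True
```

Hash chaining makes edits detectable, not impossible: someone able to rewrite the whole log could recompute every hash, so the latest hash is typically also stored somewhere the agent cannot write.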

Closing the Loop: Designing for Autonomy and Accountability

Generative AI changed how we create. Agentic AI will change how we operate.

However, the leap from static tools to autonomous actors is not just a technical one. It is behavioral. Behavior must be structured, monitored, and continuously improved.

If you are a product leader, ask yourself:

- What decisions should the system make on its own?

- How do we define acceptable behavior in ambiguous situations?

- What happens when the system fails, and how do we recover from it?

Agentic AI offers unprecedented leverage, but it also introduces new kinds of risk. Managing that trade-off is the next great challenge of product strategy.

It starts not with better models but with better questions.

About the Author

Kanika Garg is a product strategist and applied science leader with over seven years of experience building large-scale systems across the supply chain, AI, and operations domains. She specializes in designing behavior-first frameworks for intelligent technologies, with a focus on responsible autonomy, data-driven decision-making, and cross-functional product development. Kanika holds an MBA in Supply Chain Management and is certified in Lean Six Sigma.
