Photo: Shutterstock

AI is radically reshaping how we verify identities.

Organizations must verify users' identities to maintain security and trust, whether those users are accessing banking services, booking flights, or logging into an e-commerce platform. But as fraud tactics evolve, verification technology must keep pace.

I’m Priya Singh. As a Lead Data Scientist working on enterprise-scale fraud analytics, I’ve built AI-driven fraud detection systems that have saved financial institutions millions in potential losses.

In this article, I’ll explore the latest AI and ML innovations shaping identity verification, discuss the challenges the field faces, and share how to leverage these technologies to build more secure and efficient verification systems.

The Importance of AI/ML in Identity Verification

The digital transformation of industries has created an increasing need for secure, scalable, and efficient identity verification solutions. Gone are the days when usernames and passwords were enough. The demand for AI-driven identity verification systems that can quickly authenticate individuals and prevent fraud is growing rapidly. Whether in banking, healthcare, or border security, AI is stepping in to provide faster, more accurate authentication.

As Marc Benioff, Chair, CEO, and Co-founder of Salesforce, aptly put it,

“Artificial intelligence and generative AI may be the most important technology of any lifetime.”

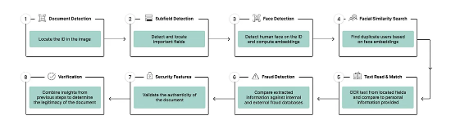

Figure 1. AI-Based Identity Verification Workflow – This illustrates how modern identity verification systems combine document analysis, biometric matching, fraud detection, and AI-driven verification steps to ensure security and accuracy.

This sentiment reflects the profound impact of AI and ML across sectors, and identity verification is leading the charge. As a Lead Data Scientist, I've seen firsthand how machine learning models, especially neural networks, have raised identity verification to a new level of precision.

In my role, I developed fraud detection models that flagged suspicious activities with higher precision, ultimately saving financial institutions millions in potential losses. AI doesn’t just help detect fraud—it can predict it before it happens.

Deep Learning-Based Facial Recognition

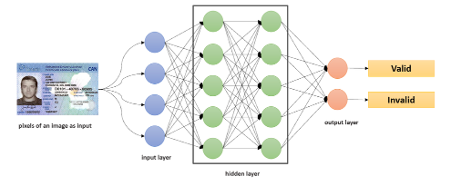

Facial recognition technology is one of the most widely used AI-driven methods for verifying identity. The introduction of models like ArcFace, a deep face recognition model trained with an additive angular margin loss, has drastically improved face verification systems. The loss adds a fixed margin to the angle between a face embedding and the weight vector of its true class before the softmax is applied. By encouraging greater angular separation between feature vectors from different classes, ArcFace generates more discriminative face embeddings.

This design innovation is directly responsible for its improved empirical performance. Compared to earlier models like SphereFace and CosFace, ArcFace substantially lowers false positive rates in benchmark evaluations and enhances verification precision and reliability.
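The additive angular margin can be illustrated with a minimal sketch in plain Python. The function names here are hypothetical, and the defaults `s=64.0` and `m=0.5` follow the values commonly quoted from the ArcFace paper. The sketch skips the special handling that production implementations apply when the angle plus the margin exceeds π, so treat it as an illustration of the idea rather than a training-ready loss.

```python
import math

def arcface_logits(embedding, class_weights, target, s=64.0, m=0.5):
    """Scaled cosine logits with an additive angular margin on the target class."""
    def unit(v):
        norm = math.sqrt(sum(x * x for x in v))
        return [x / norm for x in v]

    e = unit(embedding)
    logits = []
    for j, w in enumerate(class_weights):
        # Cosine of the angle between the embedding and the class weight vector.
        cos_theta = sum(a * b for a, b in zip(e, unit(w)))
        cos_theta = max(-1.0, min(1.0, cos_theta))  # guard acos against rounding
        if j == target:
            # Penalize the true class by widening its angle by the margin m.
            theta = math.acos(cos_theta)
            logits.append(s * math.cos(theta + m))
        else:
            logits.append(s * cos_theta)
    return logits

def softmax_cross_entropy(logits, target):
    """Numerically stable cross-entropy for a single example."""
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_sum - logits[target]
```

Because the margin lowers only the true-class logit, the loss stays high until the embedding sits well inside its own class region, which is what pushes classes apart on the hypersphere.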

Deng et al. (2019) support this, reporting that ArcFace outperforms previous models on standard face recognition benchmarks. Despite this success, liveness detection remains a challenge: the system must still confirm that it is looking at a live person rather than a photo or video of one.

From my experience working on fraud analytics projects, facial recognition has become a cornerstone of real-time transaction security. When integrated into a payment system, it ensures the person making the transaction is the same one who registered the account.
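At verification time, a check like this usually comes down to comparing the embedding stored at enrollment with an embedding computed from the live capture. The sketch below assumes both embeddings are already available as lists of floats. The function names and the `threshold=0.6` default are placeholders for illustration, not values from any particular system. In practice the threshold is tuned on validation data to hit a target false match rate.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def is_same_person(enrolled, probe, threshold=0.6):
    """Accept the probe if it is close enough to the enrolled embedding.

    The threshold is an assumed placeholder; a higher value trades more
    false rejections for fewer false matches.
    """
    return cosine_similarity(enrolled, probe) >= threshold
```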

While ArcFace and similar deep learning models have elevated facial recognition accuracy to impressive levels, relying on a single biometric input still introduces vulnerabilities. For example, sophisticated spoofing attacks can manipulate or mimic facial data.

To mitigate these risks, identity verification systems are now evolving toward multimodal authentication frameworks that combine facial data with other biometric and behavioral signals, creating more resilient and secure verification pipelines.

Multimodal Authentication: Beyond Just Facial Recognition

Many systems are now incorporating multiple forms of biometric data, often called multimodal authentication, to enhance the security and reliability of identity verification. This could include:

- Facial recognition combined with voice recognition.

- Gait analysis and behavioral biometrics such as keystroke dynamics.

An article on Neurohive (Mitrev, 2018) highlights the growing trend of combining facial and voice recognition, which can reduce fraud and improve accuracy. By pairing AI-driven selfie matching with voice recognition, these systems can more confidently verify that users are who they claim to be, raising the barrier for fraud attempts.

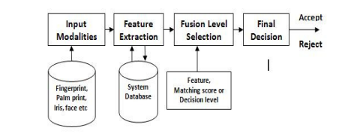

Figure 3. Multimodal Biometric Authentication System – This outlines how multiple input modalities, like face, iris, or fingerprint, are processed through feature extraction and fusion to generate a final decision.
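The fusion step can take several forms. One simple option is score-level fusion, where each modality produces its own match score and the system combines them with a weighted average. The sketch below assumes scores normalized to the range 0 to 1. The modality names, weights, and the `threshold=0.7` default are hypothetical values chosen only for illustration.

```python
def fuse_scores(scores, weights):
    """Weighted average of per-modality match scores, each assumed to be in [0, 1]."""
    missing = set(scores) - set(weights)
    if missing:
        raise ValueError(f"no weight configured for: {sorted(missing)}")
    # Normalize by the weights of the modalities actually present,
    # so a missing modality does not silently drag the score down.
    total_weight = sum(weights[m] for m in scores)
    return sum(scores[m] * weights[m] for m in scores) / total_weight

def decide(scores, weights, threshold=0.7):
    """Accept the identity claim if the fused score clears the threshold."""
    return fuse_scores(scores, weights) >= threshold

# Hypothetical example: a strong face match paired with a weaker voice match.
weights = {"face": 0.6, "voice": 0.4}
accepted = decide({"face": 0.9, "voice": 0.5}, weights)
```

A weighted average is easy to audit and tune, but it is only one design choice. Feature-level fusion, as drawn in the figure, combines the extracted features before any score is computed.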

In my experience working on fraud detection systems within the payments domain, incorporating multiple data points significantly improved the predictive accuracy of our models. This experience taught me that combining different biometric features enhances security and ensures a smoother user experience.

A user’s face and voice can be verified together in seconds, with high accuracy and little added friction. While multimodal authentication offers significantly greater security than single-mode systems, it too faces growing pressure from more advanced attack vectors.

One of the most formidable threats today is deepfake technology—sophisticated AI-generated media that can mimic faces, voices, and behavioral patterns. These hyper-realistic forgeries can now evade even multimodal checks, demanding the development of specialized deepfake detection models.

Deepfake Detection with AI Models

Deepfake technology has introduced a significant challenge for identity verification systems. As deepfakes become more sophisticated, attackers can easily impersonate individuals and bypass facial recognition systems.

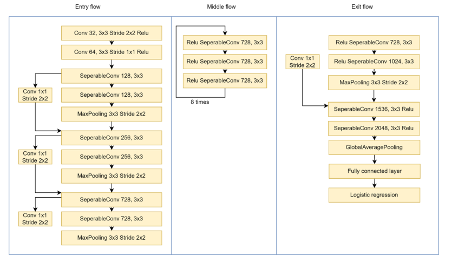

A leading approach to this challenge is XceptionNet, a deep convolutional neural network that replaces standard convolutions with depthwise separable convolutions. A depthwise separable convolution filters each channel separately and then mixes channels with a 1x1 convolution, which reduces the number of parameters and the computational cost. This structure allows the network to capture subtle spatial patterns and inconsistencies common in manipulated facial imagery.

This architecture contributes to its strong empirical results: on benchmark datasets such as FaceForensics++, XceptionNet consistently achieves high accuracy in detecting deepfakes, outperforming traditional CNNs by identifying pixel-level artifacts that forgeries often leave behind.
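The parameter savings are easy to check with a little arithmetic. The sketch below counts weights for a single layer and ignores bias terms. The layer sizes are arbitrary values picked for illustration, not taken from the Xception architecture itself.

```python
def standard_conv_params(kernel, c_in, c_out):
    """Weights in a standard convolution: one kernel x kernel filter per (input, output) channel pair."""
    return kernel * kernel * c_in * c_out

def separable_conv_params(kernel, c_in, c_out):
    """Weights in a depthwise separable convolution.

    Depthwise step: one kernel x kernel filter per input channel.
    Pointwise step: a 1x1 convolution that mixes c_in channels into c_out.
    """
    depthwise = kernel * kernel * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise

# Illustrative layer: 3x3 kernel, 128 input channels, 256 output channels.
standard = standard_conv_params(3, 128, 256)    # 294912
separable = separable_conv_params(3, 128, 256)  # 33920
reduction = standard / separable                # roughly 8.7x fewer weights
```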

Figure 4. XceptionNet Architecture – This shows the three main flows of the Xception network: entry, middle, and exit, used to capture subtle spatial patterns in deepfake detection.

However, the battle against deepfakes is ongoing. We need to keep improving deepfake detection to stay ahead of evolving fraud techniques. Based on my experience, model training remains a critical area for ongoing improvements in handling these emerging threats.

Key Takeaways:

- XceptionNet: A deep learning model that effectively detects deepfakes.

- Specialized Datasets: Detection models are trained on datasets of manipulated content, such as FaceForensics++.

- Ongoing Challenge: Continuous model refinement is needed to keep up with evolving deepfake techniques.

As deepfakes become more advanced, improving detection models is essential—but so is rethinking how we train those models. Many AI systems still rely on centralized datasets containing highly sensitive biometric information, posing significant risks in the event of data breaches or misuse.

To balance performance with privacy, researchers are turning to federated learning, a decentralized approach that enables powerful model training without exposing personal data to centralized servers.

Federated Learning for Privacy-Preserving Verification

Privacy has always been a significant concern in biometric identity verification, particularly with traditional methods that store sensitive personal data in central repositories. Federated learning addresses this by training models locally, on each device or at each institution, and sharing only model updates, so the raw sensitive data never has to be stored centrally. This approach helps reduce privacy risks while still benefiting from machine learning capabilities.
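One widely used aggregation scheme for this setup is federated averaging, where a server combines client model weights in proportion to how much data each client holds. The sketch below uses a tiny linear model in plain Python so that it stays self-contained. The function names, the learning rate, and the single local gradient step are simplifying assumptions. Real deployments typically add protections such as secure aggregation or differential privacy, because model updates alone can still leak information.

```python
def local_update(weights, features, labels, lr=0.1):
    """One gradient-descent step of least-squares regression on a client's private data."""
    n = len(features)
    grad = [0.0] * len(weights)
    for x, y in zip(features, labels):
        error = sum(w * xi for w, xi in zip(weights, x)) - y
        for i, xi in enumerate(x):
            grad[i] += 2 * error * xi / n
    return [w - lr * g for w, g in zip(weights, grad)]

def federated_average(client_weights, client_sizes):
    """Average client models, weighting each by its number of local examples."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]

def federated_round(global_weights, clients):
    """Run one round: each client trains locally, the server sees only the weights.

    `clients` is a list of (features, labels) pairs that never leave the client
    in a real system; they are passed in here only to simulate the round.
    """
    updates = [local_update(global_weights, X, y) for X, y in clients]
    sizes = [len(X) for X, _ in clients]
    return federated_average(updates, sizes)
```

Repeating `federated_round` moves the shared model toward one that fits all clients, while the server only ever handles weight vectors.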

According to a study published on ScienceDirect (Beltrán and Calvo, 2023), more organizations are using federated learning to protect privacy in biometric verification. It allows AI models to be trained on sensitive data without exposing it to breaches.

In regulated industries like financial services, I've worked on implementing federated learning approaches in fraud detection models. This approach processes data locally to maintain security while delivering valuable insights. It lets identity systems scale while keeping privacy intact.

Challenges in AI-Driven Identity Verification

While AI and ML hold immense potential for enhancing identity verification, researchers and developers must address several challenges to ensure these systems are secure, accurate, and fair. One of the most pressing concerns is the rise of AI-powered fraud, particularly through deepfakes—AI-generated content that convincingly mimics real individuals.

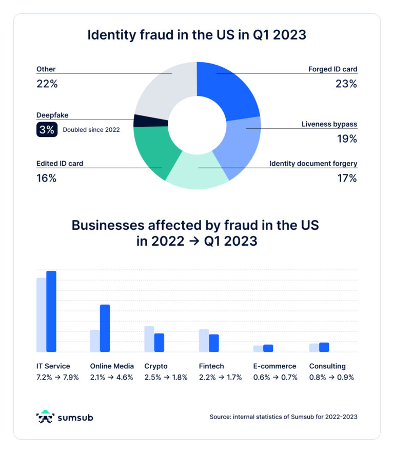

In recent years, deepfake-related fraud cases have surged at an alarming rate, threatening the integrity of biometric systems. This isn’t a distant concern—it’s a present reality. For instance, data from Q1 2023 shows that in the U.S. alone, deepfake-based identity fraud tripled, and certain business sectors saw notable increases in fraud exposure.

Deepfake and Spoofing Attacks

Deepfake technology threatens identity verification as attackers develop new ways to bypass detection. Taigman et al. (2014) reported that Facebook’s DeepFace model achieved near-human accuracy in face verification. Models of this kind have also drawn attention to vulnerabilities related to bias, privacy, and spoofing attacks.

Their high precision makes them a prime target for adversarial attacks, while algorithmic bias raises concerns about fairness and reliability. Addressing these risks requires ongoing research to enhance deepfake detection and stay ahead of evolving fraud techniques.

Bias and Fairness in AI Models

AI bias is a serious hurdle for identity verification systems. Critics have pointed out that facial recognition systems often perform unevenly across race, gender, and age groups.

Having worked with machine learning models in credit risk and fraud analytics within regulated sectors, I understand the importance of addressing these biases. The data used to train these models must represent all groups to ensure that identity verification systems are fair and accurate for everyone.
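Representative training data is one half of the picture. The other is measuring whether error rates actually differ between groups once the model is built. The sketch below computes the false match rate per group from labeled comparison results. The record layout, group labels, and threshold are hypothetical and serve only to illustrate the audit.

```python
from collections import defaultdict

def false_match_rate_by_group(records, threshold):
    """Share of impostor comparisons wrongly accepted, computed per group.

    Each record is (group, similarity_score, is_genuine), where is_genuine
    is True when both samples really belong to the same person.
    """
    impostor_total = defaultdict(int)
    false_matches = defaultdict(int)
    for group, score, is_genuine in records:
        if not is_genuine:
            impostor_total[group] += 1
            if score >= threshold:
                false_matches[group] += 1
    return {g: false_matches[g] / impostor_total[g] for g in impostor_total}

# Hypothetical audit data: genuine pairs are ignored by this metric.
records = [
    ("group_a", 0.90, False),
    ("group_a", 0.20, False),
    ("group_b", 0.10, False),
    ("group_b", 0.30, False),
    ("group_a", 0.95, True),
]
rates = false_match_rate_by_group(records, threshold=0.5)
```

A large gap between groups at the same threshold is a signal to revisit the training data or the threshold before deployment.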

Data Privacy and Security

Another primary concern with AI-driven identity verification is data privacy. Biometric data is highly sensitive, and its misuse or mishandling could have serious consequences. Developers must store and process biometric data securely to maintain user trust.

What’s Next in AI-Driven Identity Verification?

The future of identity verification lies in further improving AI and ML technologies while addressing the challenges mentioned above. We’re seeing exciting developments in decentralized identity solutions, where users have more control over their data. An overview published by Payments Canada (Paturi and Hamid, 2021) points out how AI can play a pivotal role in digital identity verification, especially in upholding privacy standards while maintaining security.

The path ahead will involve further advancements in federated learning, enhanced deepfake detection, and the creation of more ethical and fair AI models. We can create a more secure digital landscape by tackling these challenges directly.

AI and machine learning have already transformed identity verification systems, making them more secure, efficient, and scalable. However, as fraud tactics become more sophisticated, continued innovation is essential. Leveraging technologies like ArcFace, federated learning, and deepfake detection can help address the industry’s biggest challenges.

From my experience in fraud detection and credit risk modeling across the payments space, I’ve witnessed firsthand the power of AI to detect and prevent fraud. It’s not just the technology itself but how we use it responsibly to ensure user privacy and fairness that will build trust in these systems.

If you work in identity verification, explore AI and ML models to improve your security and anticipate new threats.

About the Author

Priya Singh is a Lead Data Scientist with expertise in fraud analytics, machine learning, and credit risk modeling. She develops and optimizes AI models to improve fraud detection and credit risk prediction. She has led several high-impact projects that have enhanced the security and efficiency of financial systems. Priya holds a Master’s degree in Business Analytics from the University of Connecticut and has contributed to numerous advancements in AI-driven security solutions.

References:

- Paturi, P. and Hamid, S. (2021, November 25). AI Solutions for Digital ID Verification. Payments Canada. https://www.payments.ca/ai-solutions-digital-id-verification-overview-machine-learning-ml-technologies-used-digital-id

- Mitrev, D. (2018, September 24). Identity Verification with Deep Learning: ID-Selfie Matching Method. Neurohive. https://neurohive.io/en/state-of-the-art/identity-verification-with-deep-learning-id-selfie-matching-method/

- Taigman, Y., Yang, M., Ranzato, M.A. & Wolf, L. (2014). DeepFace: Closing the Gap to Human-Level Performance in Face Verification. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR): pp. 1701-1708. https://scispace.com/pdf/deepface-closing-the-gap-to-human-level-performance-in-face-3wcmadzjn3.pdf

- Beltrán, M. and Calvo, M. (2023, September). A privacy threat model for identity verification based on facial recognition. Computers & Security, 132, 103324. https://www.sciencedirect.com/science/article/pii/S0167404823002341

- Deng, J., Guo, J., Xue, N. & Zafeiriou, S. (2019). ArcFace: Additive Angular Margin Loss for Deep Face Recognition. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR): pp. 4690-4699. https://openaccess.thecvf.com/content_CVPR_2019/papers/Deng_ArcFace_Additive_Angular_Margin_Loss_for_Deep_Face_Recognition_CVPR_2019_paper.pdf