A network of far-right Facebook groups is exposing hundreds of thousands of Britons to racist and extremist disinformation and has become an “engine of radicalisation”, a Guardian investigation suggests.

Run by otherwise ordinary members of the public – many of whom are of retirement age – the groups are a hotbed of hardline anti-immigration and racist language, where online hate goes apparently unchecked.

Experts who reviewed the Guardian’s months-long data project said such groups help to create an online environment that can radicalise people into taking extreme actions, such as last year’s summer riots.

The network is exposed just weeks after 150,000 protesters from all over the country descended on London for a far-right protest, the scale of which dwarfed police estimates and whose size and toxicity shocked politicians.

The Guardian’s data projects team identified the groups from the profiles of those who took part in the riots that followed the killing of three girls in Southport last summer.

From them emerged an ecosystem where mainstream politicians are described as “treacherous”, “traitors” and “scum”, the courts and police are accused of “two-tier” justice and the RNLI is called a “taxi service”.

The Guardian analysed more than 51,000 text posts from three of the largest public groups in the network.

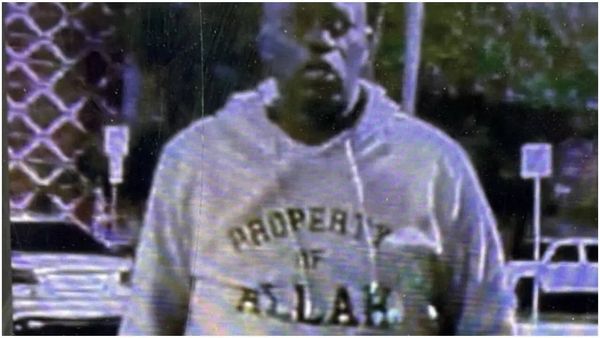

The analysis found hundreds of concerning posts that experts said were peppered with misinformation and conspiracy theories, and contained far-right tropes, racist slurs and evidence of white nativism.

A key element of the network’s success is the groups’ admins – a team of mostly middle-aged Facebook users responsible for the invites to the group, the moderation of often far-right language and the spread of rumour and misinformation, which they repost to other groups in the network.

Research showed they are scattered across England and Wales, mostly in the south-east of England and the Midlands.

They come from different social backgrounds and home lives, from a large townhouse overlooking the sea on the south coast to a neat new-build detached house on the outskirts of Loughborough and a small red-brick council house in urban Birmingham.

Most of the admins the Guardian contacted would not speak on the record. But from her doorstep in a Leicestershire village, one admin, who moderates six groups with nearly 400,000 members between them, including “Nigel Farage for PM”, said far-right users were “deleted and blocked” from the groups.

However, the investigation found swathes of extreme far-right posts, including disinformation and well-known debunked conspiracy narratives, some of which were spread word for word or with slight variations in wording across multiple connected groups.

Immigrants come in for the most vituperative language, including demonising and dehumanising slurs: “criminal”, “parasites”, “primitive”, “lice”.

Muslims are variously described as “barbaric and intolerant”, “an army”, “archaic”, “medieval” and “not compatible with the UK way of life”.

One post read: “We need a humongous nit comb. To scrape the length and breast [sic] of the uk, to get rid of all the blood sucking lice out of our country once and for all!!”

Another read: “Our own government has put us all at risk by allowing these primitive minded people onto our land.”

Another user posted: “If you think immigration is bad. Then just wait until all our towns and cities are full! And they start leaching out into our quaint beautiful quiet little villages and bringing all their crimes and third world culture with them.”

The Guardian’s investigation into these vastly popular forums casts new light on the extent of far-right disinformation, which appears to be disseminated on an industrial scale in social media groups.

The content shared in the groups also raises fresh concerns about moderation policies, months after Meta announced sweeping content moderation changes.

In the past, far-right ideas online emerged out of platforms more readily associated with that part of the political spectrum, such as 4chan, Parler and Telegram, which were usually home to younger audiences.

On Facebook, the moderator the Guardian spoke to is one of more than 40 people, a mixture of men and women over the age of 60, who act as moderators or administrators of one or more of the three groups included in the data analysis.

Dr Julia Ebner, a researcher at the Institute for Strategic Dialogue and an expert on online radicalisation, said such spaces acted as a breeding ground for extremist ideologies and “definitely play a role in the radicalisation of individuals”.

“What is new is that the online spaces amplify a lot of these dynamics,” she said.

“The algorithmic amplification, the speed at which people can end up in a radicalisation engine. Then there are the new technologies from fabricated videos to deepfakes to bot automation.

“The digital age means that people trust the content that is produced or spread by individual accounts, by influencers regardless of their ideological leanings, more than they tend to trust established institutions’ accounts. And that is inherently dangerous.”

Meta, which owns Facebook, reviewed the three groups used in the Guardian’s analysis and a spokesperson confirmed the content did not violate its hateful conduct policy.

The combined membership of the groups across the network stood at 611,289 as recorded by the Guardian’s methodology on 29 July 2025. However, this figure almost certainly includes double counting, as individuals can be members of more than one group.

Additional reporting by Olivia Lee and Carmen Aguilar García.

The full methodology including our use of OpenAI’s API can be found here. The Guardian’s generative AI principles can be found here.