Did you hear that Donald Trump recently attacked the popular Netflix show Stranger Things at a rally? “Have you seen that garbage on Netflix?” Trump asked the crowd in Pennsylvania. “It’s a disgrace. I mean, really, really bad. It’s a complete and total ripoff of my life story. I was the one who first discovered the power of the Upside Down. But instead of using it to do evil, I used it to make America great again.”

OK, full disclosure: that did not happen. The quote was generated by GPT-3, an autoregressive language model developed by the San Francisco artificial intelligence outfit OpenAI, which can produce convincing, humanlike text in response to prompts.

It was unleashed on to the general public this year (you can sign up for some free credits and play around with it on OpenAI's website) and, as you might reasonably expect, that same public seems to be using it mostly to generate AI-assisted sketch comedy. Myself included.

As a writer, there’s a touch of the uncanny to the fact I’m collaborating with the sort of machine that could one day put me and my comrades out of a job. (It’s as if the original Luddites had used the mechanical looms to weave rude little pictures in cotton instead of destroying them.) It’s also difficult to tell whether the various high-concept gags the algorithm spits out are actually funny, or whether the mere fact that a robot is saying them is enough to elicit a laugh.

Back in 2020, when GPT-3 was first unveiled, the Guardian published an essay purportedly written by the bot on why humans had absolutely nothing to fear from artificial intelligence. Writing in the first person, it insisted it was a “servant of humans” and that the eradication of our global civilisation seemed “like a rather useless endeavour”. You know, exactly what you’d expect Skynet to say out of the side of its virtual mouth before launching the nukes.

ok one more. i've decided the main use for this is capturing the voice of australian radio personalities

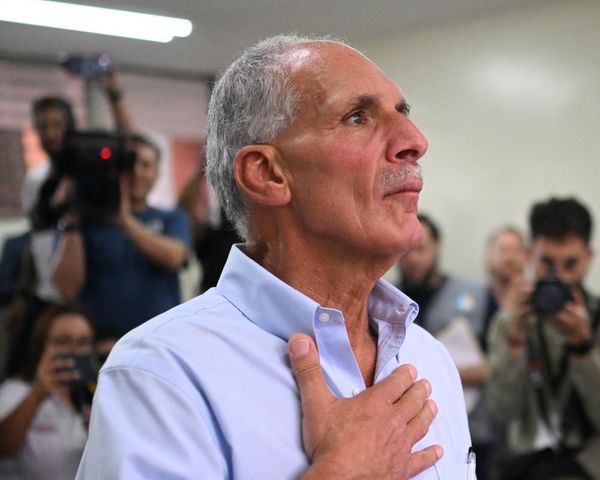

— james hennessy (@jrhennessy) June 23, 2022

This year, the question of whether we are hurtling towards some radically destabilising AI apocalypse has reared its head again. In April, OpenAI announced DALL-E 2, a powerful text-to-image model which creates realistic images and art from text descriptions.

You can type “Chris Hemsworth eating blueberries on Mars, in the style of Rembrandt” and the system will do its best to generate an image that fits those parameters. The results can be disturbingly accurate. As with GPT-3, the lucky few with early access have been using it towards more surreal and comic ends:

"Sculpture of a bodybuilder made entirely from fresh broccoli, by Antoni Gaudi, studio lighting" #dalle2 #dalle

Credit: @rh_paint

— Dalle2 Pics (@Dalle2Pics) June 19, 2022

Last month, a Google engineer, Blake Lemoine, caused a stir when he sensationally suggested the company’s language model, LaMDA, may have risen from the protean digital muck as a sentient being. Lemoine has been placed on paid administrative leave after he published lengthy chat logs in support of his belief that LaMDA was self-aware, saying that if he didn’t know what LaMDA was, he’d “think it was a seven-year-old, eight-year-old kid that happens to know physics”.

Understandably, these stories freaked people out. While the world was fussing over rising inflation and the war in Ukraine, AI had gone and sown the seeds of humanity’s demise. Were we being dragged into an extinction event because people wanted a computer to make unsettling digital art of muscular broccoli?

Here’s the thing. Yes, large language models are incredibly impressive tech, and an afternoon spent playing around with GPT-3 is a frequently mind-blowing experience. But it’s crucial to remember they’re not really intelligent.

They’re powerful pattern-matching machines, trained on massive datasets. At heart they are built on probability, using cold mathematical analysis of these giant pools of written and visual information to “guess” at what might come after any given input.

In other words, GPT-3 is a whiz at the structure of sentences and where certain words appear in relation to others – but it doesn’t know a whole lot about meaning beyond that. It’s why when you ask it the same question over and over, you’ll often get wildly different answers, many of which are littered with factual errors.

GPT-3 could draw on its library of text to imagine what Trump might say about Stranger Things, but it doesn’t really know what either of those things are. Or, as Janelle Shane writes at the blog AI Weirdness, GPT-3 is just as good at describing what it is like to be a squirrel as it is at pretending it has developed humanlike sentience.

But a system doesn’t have to be genuinely intelligent in order to present a potentially huge shift in the way we do things. It’s not hard to imagine a near future where such systems radically disrupt writing and creative work, or at least fundamentally shift how that work is done. That would undoubtedly have serious ramifications, not just for those who work in those industries, but also for the very nature of human expression itself.

For now, I’m happy with forcing it to tell me stupid jokes.

• JR Hennessy is a Sydney-based writer who runs The Terminal, a newsletter on business, technology, culture and politics