During Tesla’s Q3 2025 earnings call, the firm’s CEO, Elon Musk, proposed that the cars take part in "a giant distributed inference fleet" to tap into their incredible compute power "if they are bored." Musk went on to estimate that, at some point, the advanced car fleet could summon "100 gigawatts of inference." Predictably, Musk’s latest musings have met with a mixed response on social media. So, let’s take a closer look at exactly what Musk said.

Expanding Tesla production and incentives to buy

During the Q&A session, Emmanuel Rosner from Wolfe Research asked about Musk’s intentions to expand the production of Tesla vehicles. He also queried what kind of incentives would be required to make such a production hike a reasonable business proposition.

Musk answered that an annualized production rate of three million vehicles should be achievable within 24 months. He added that the “single biggest expansion in production will be the Cybercab, which starts production in Q2 next year.” This will be a comfort-optimized automated transport vehicle, obviously targeting the cab market.

Killer app – letting people get lost in their smartphone screens while driving

Beyond that project, the Tesla boss asserted that his team is looking closely at a killer app for new model cars with advanced processing. “If you tell someone, yes, the car is now so good, you can be on your phone and text the entire time while you're in the car. Anyone who can buy the car - will buy the car - end of story.”

Then, not for the first time, Musk heralded an “Autopilot safety game changer.” Elaborating on this, the Tesla CEO pledged, “I am 100% confident that we can solve unsupervised full self-driving at a safety level much greater than a human.”

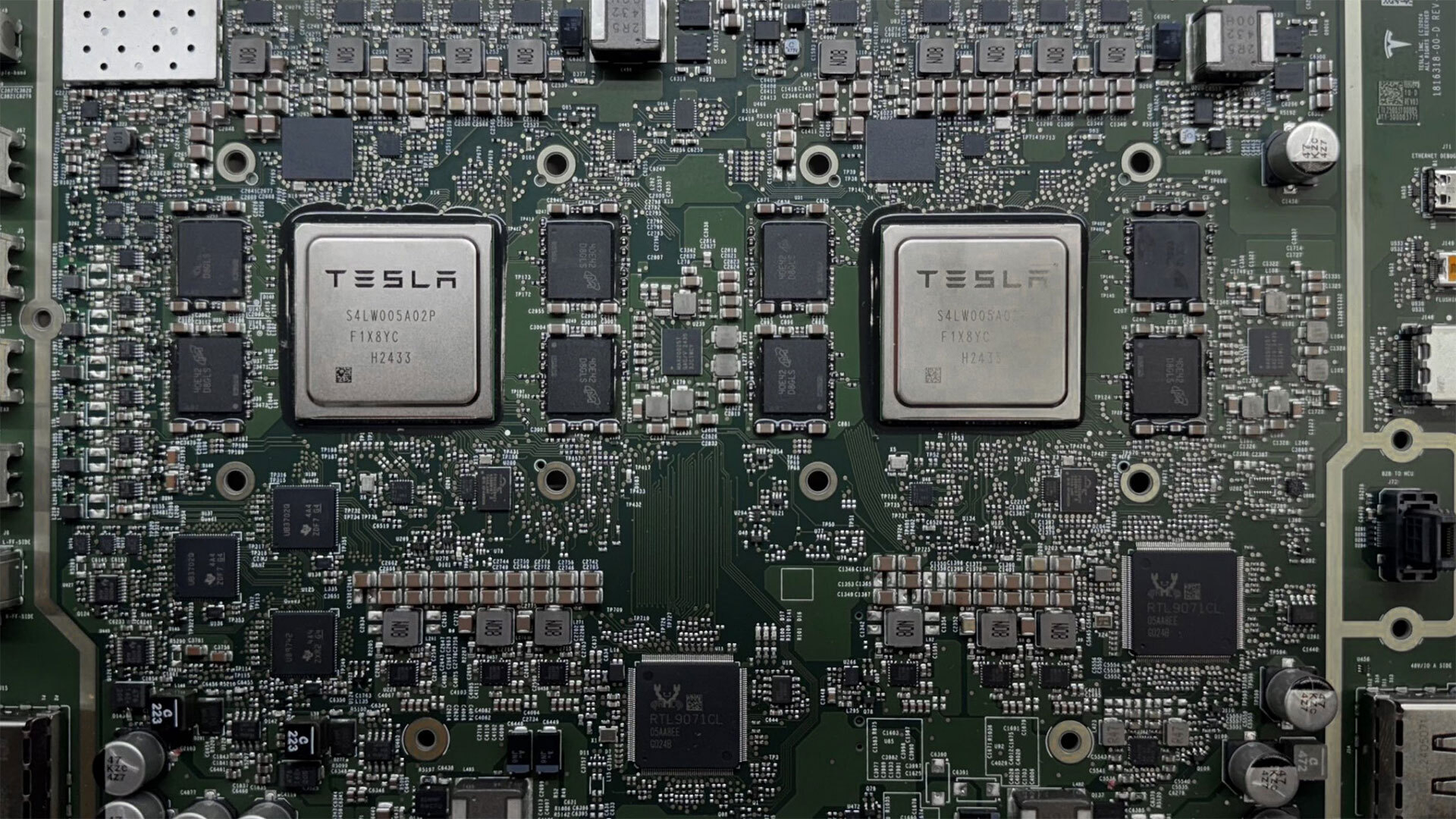

Musk backed up his confidence by talking up the capabilities of the Tesla AI4 computer, also known as Hardware 4 (HW4). He indicated that, despite its muscle, the AI4 is already set to be eclipsed by the AI5, which outperforms it by as much as 40-fold in tests. That sizable performance boost should enable autonomous driving systems that are 10x safer, it was suggested during the Q&A.

"A giant distributed inference fleet… [with] 100 gigawatts of inference"

Using talk of computing power as a springboard, Musk then openly pondered whether the upcoming systems “might almost be too much intelligence for a car.” To address the decidedly first-world problem of owning a car “that might get bored,” the Tesla CEO went off on an interesting tangent about tapping into idle car processing power, effectively turning the Tesla fleet into a giant distributed inference network.

“One of the things I thought: if we got all these cars that maybe are bored… we could actually have a giant distributed inference fleet,” Musk said.

Obviously plucking numbers from the air, the Tesla boss went on to optimistically project that this fleet could expand to, say, 100 million vehicles, with a baseline of a kilowatt of inference capability per vehicle. “That's 100 gigawatts of inference distributed with power and cooling taken with cooling and power conversion taken care of,” Musk told the financial experts on the earnings call. “So that seems like a pretty significant asset.”
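For readers who want to check the math, Musk's headline figure is simple multiplication: 100 million vehicles at one kilowatt each. The short Python sketch below reproduces it. The function name and the `idle_fraction` parameter are our own illustrative additions, not anything Tesla has described, and note that gigawatts measure electrical power rather than actual inference throughput.

```python
# Back-of-the-envelope check of the figures quoted on the earnings call.
# Both inputs are Musk's speculative projections, not measured values.
FLEET_SIZE = 100_000_000       # vehicles
POWER_PER_VEHICLE_KW = 1.0     # kilowatts of inference capability per vehicle


def fleet_power_gw(vehicles: int, kw_per_vehicle: float,
                   idle_fraction: float = 1.0) -> float:
    """Return aggregate fleet power in gigawatts (1 GW = 1,000,000 kW).

    idle_fraction is a hypothetical knob (our assumption) for the share
    of cars that are parked and available at any given moment.
    """
    return vehicles * kw_per_vehicle * idle_fraction / 1_000_000


print(f"{fleet_power_gw(FLEET_SIZE, POWER_PER_VEHICLE_KW):.0f} GW")
```

With every car contributing, the result matches the 100 gigawatts Musk quoted. Passing a lower `idle_fraction` shows how quickly the total shrinks if only some of the fleet is available at once.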

At its core, Musk’s idea invites comparisons with classic distributed computing platforms, like SETI@home and Folding@home. But this Tesla fleet proposal could make an interesting commercial moonshot idea for investors and business analysts.

Meanwhile, users will probably be more concerned about their bought-and-paid-for vehicles being used for someone else’s advantage, perhaps consuming extra electricity and subjecting their computer systems to longer periods of heat stress. There’d probably have to be a clear benefit for end-users to incentivize them to sign up for such a compute power-sharing scheme.
