Artificial intelligence has been the flavor of the month ever since ChatGPT ushered in a new big bang for Silicon Valley. The big players in this race are all gunning for ludicrously dense GPU clusters that can scale AI operations rapidly. One of those players is Elon Musk, who owns X and xAI, the latter of which seems determined to one-up Sam Altman and OpenAI, a company Musk, interestingly enough, co-founded. Last month, Musk said that xAI will bring 50 million H100-equivalent GPUs online within the next five years, and yesterday he reiterated that goal.

Older post but for those that are wondering about what @xai will be up to over the next few years, let’s just say that we’ll be busy… https://t.co/rWkhaz57u5
August 23, 2025

It's worth noting that Musk specifically says "H100 equivalent-AI compute," and he adds that xAI's version of that 50 million figure will be better (read: more power-efficient). In other words, the target is measured in compute, not in a literal count of H100 chips. Right now, Nvidia's Blackwell-based B200 GPUs are among the most efficient AI accelerators on the market, but because Musk's wording isn't tied to any particular chip, it could suggest xAI will move away from Nvidia in the future. This could mean a pivot to AMD, or xAI developing its own accelerators with a partner like Broadcom, which already designs custom ASICs for others.

Yesterday's reply simply doubles down on this goal, but more interestingly, Musk teases AI compute on an unprecedented scale by saying, "Eventually, billions." That implies xAI will one day have enough compute to match billions of H100 GPUs. Of course, this sounds somewhat disconnected from reality, considering that AI already poses environmental risks and large data centers are already straining local communities. There are also thermal and electrical requirements to consider: a single H100 SXM is rated at up to 700W, so 50 million actual H100s would draw roughly 35GW for the GPUs alone, before cooling and the rest of the system are accounted for.

Having thought about it some more, I think the 50 million H100 equivalent number in 5 years is about right. Eventually, billions. https://t.co/VlYsmDgqLh
August 23, 2025

Meanwhile, Musk's rival Sam Altman has highlighted his own goal of bringing well over a million GPUs online at OpenAI by year-end, along with a vision of eventually scaling that to 100 million GPUs. Reaching that would cost roughly as much as the UK's entire GDP. By comparison, xAI is currently operating with around 200,000 H200 GPUs, far short of the roughly 10 million per year (50 million spread over five years) that Musk's target implies.

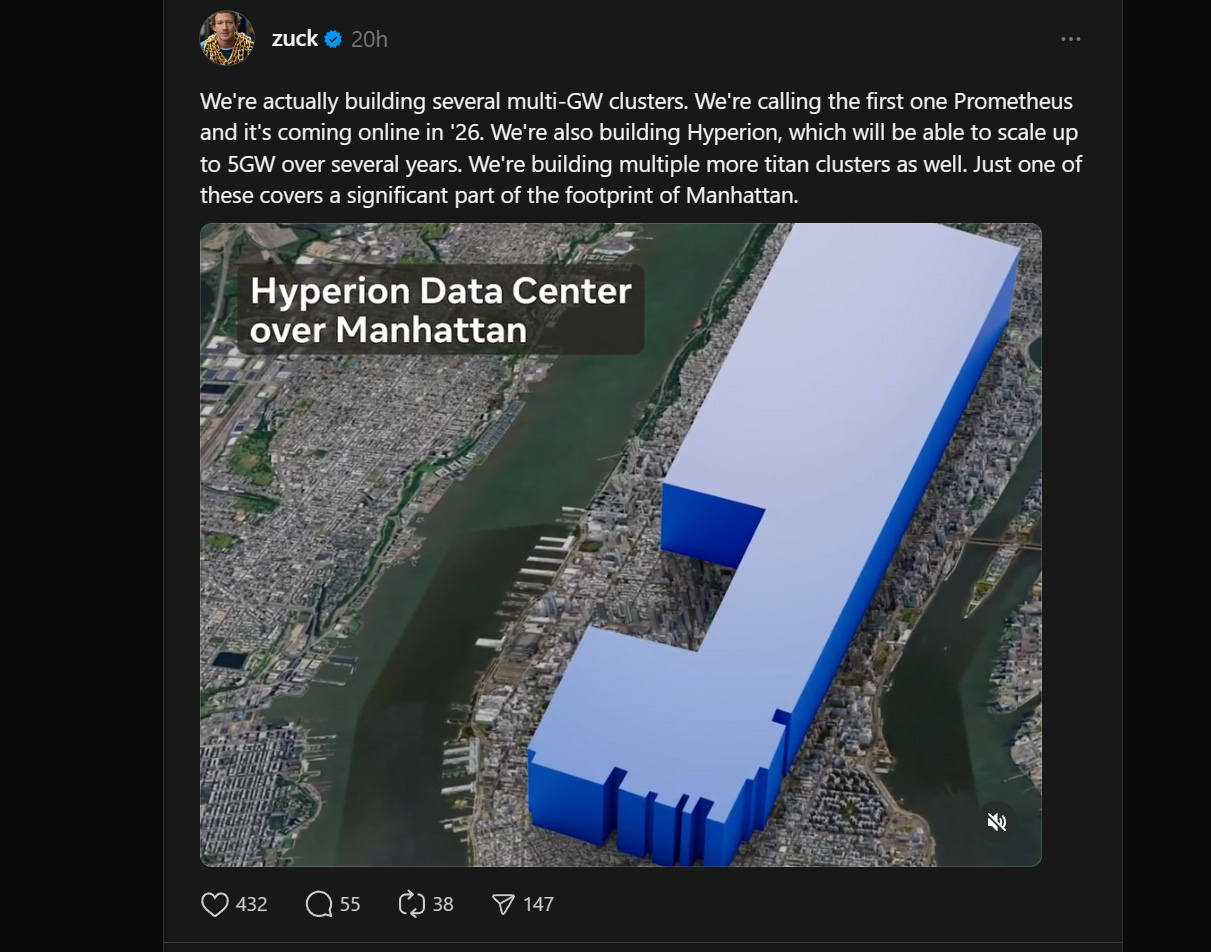

Meta is another competitor in this league, and its head honcho, Mark Zuckerberg, shares similar ambitions. He's building a "Hyperion" data center whose footprint would cover a significant part of Manhattan and which will consume up to 5GW of power, nearly equal to NYC's base electrical load. In terms of actual compute, Zuckerberg has also promised to break the million-AI-GPU barrier by the end of this year. More importantly, the company is working on homegrown chips that could reduce its reliance on third-party chip suppliers like Nvidia.

All of this comes as xAI has open-sourced its older Grok 2.5 model. X used Grok 2.5 before switching to Grok 3, which Musk says will also be open-sourced in about six months. The jury is still out on Grok 4, though, as the model was recently caught up in a wave of controversy and isn't exactly enjoying the best reputation right now. Sure, some bad actors would be eager to get their hands on this tech, but we can only hope that the hundreds of billions Mr. Musk plans to spend on expanding his AI clusters can produce a bot that doesn't call itself MechaHitler.

Follow Tom's Hardware on Google News to get our up-to-date news, analysis, and reviews in your feeds. Make sure to click the Follow button.