AI image generators like ChatGPT can pull some clever tricks and produce images ranging from dreamy landscapes to futuristic robots. But as powerful as AI image models can be, the tool is only as good as the instructions you give it. It's not an artist able to interpret things independently; it's more like a very literal genie with a limited reference pool. Thinking beforehand about the prompt you're submitting can make the result a lot better.

Sometimes, that means working out what not to do in the prompt as much as what to include. Here are three of the most common mistakes people make when trying to create ChatGPT images, and what you should do instead.

Don’t overload your prompt

When people first try image generation, there’s a tendency to pack every idea into a single prompt. Say you want to build up a fantasy scene and have a lot of concepts you want to include. You might write: “A magical forest at sunset with glowing mushrooms, a fairy sitting on a rock, an owl flying above, floating lanterns, a crystal river, ancient ruins, a portal to another realm, and a unicorn drinking tea.”

The result you can see above on the left. The overloaded prompt makes sense in writing, and the result technically covers all the bases, but even advanced image models can struggle with so much visual complexity. The more distinct elements you add, the more likely the model is to omit some, blend them weirdly, or have a lower quality image as a whole. They can't always balance many elements in a composition, especially if those elements require different lighting, scales, or locations in the scene. The way the unicorn is drinking tea and the slightly muddy colors attest to that.

You're better off choosing one or two subjects and a setting that enhances them. Try limiting yourself to three distinct visual ideas per prompt and describe the atmosphere rather than listing every object. So, you might instead write, “A fairy sitting on a glowing mushroom in a quiet forest at sunset, with soft fireflies surrounding her and a faint magical glow in the background, illustrated in a dreamy, painterly style.”

You can see the result above on the right. This version has a clear subject (the fairy), a secondary detail (glowing mushrooms and fireflies), and a strong stylistic cue. It keeps the scene visually manageable and evocative without overwhelming the model. If you want a more complex scene, you might break it into a sequence of prompts and assemble a larger image afterward. Think of each prompt as one page in a visual storybook instead of the entire novel.

Don't contradict yourself in a prompt

One of the easiest ways to confuse an image model is by accidentally including conflicting or vague information in your prompt. Unlike a human artist, the model can’t guess what you meant if you ask for something like “a bald man with long flowing hair.” It will try to do both at the same time, leading to an awkward mix, as when I asked for “A hyperrealistic cartoon-style portrait of a robot wearing medieval armor, with shiny chrome skin and natural freckles, holding a holographic scroll made of parchment.”

As you can see above, that mess of mixed signals led to more of an animated suit of armor with an odd smiley face and a scroll that's half parchment and half hologram. It's like an iPad carved from wood; just because it's technically a tablet doesn't mean it's what you meant by the term. If your prompt includes contradictions, the model will either blend them into something uncanny or ignore parts of the prompt entirely

Scan your prompt for any phrases that contradict each other or pair poorly. Choose a single consistent visual logic. You can still fuse styles, but it helps to use bridging language like "inspired by" or "reminiscent of." To get a less bizarre image, I asked for “A detailed digital illustration of a sleek robot wearing medieval-inspired armor with chrome detailing, holding a glowing holographic scroll, in a stylized sci-fi concept art style.” Now everything makes sense. It's a robot in armor inspired by medieval design, but still futuristic. The scroll is high-tech, with no parchment.

Don’t forget to use negative prompts

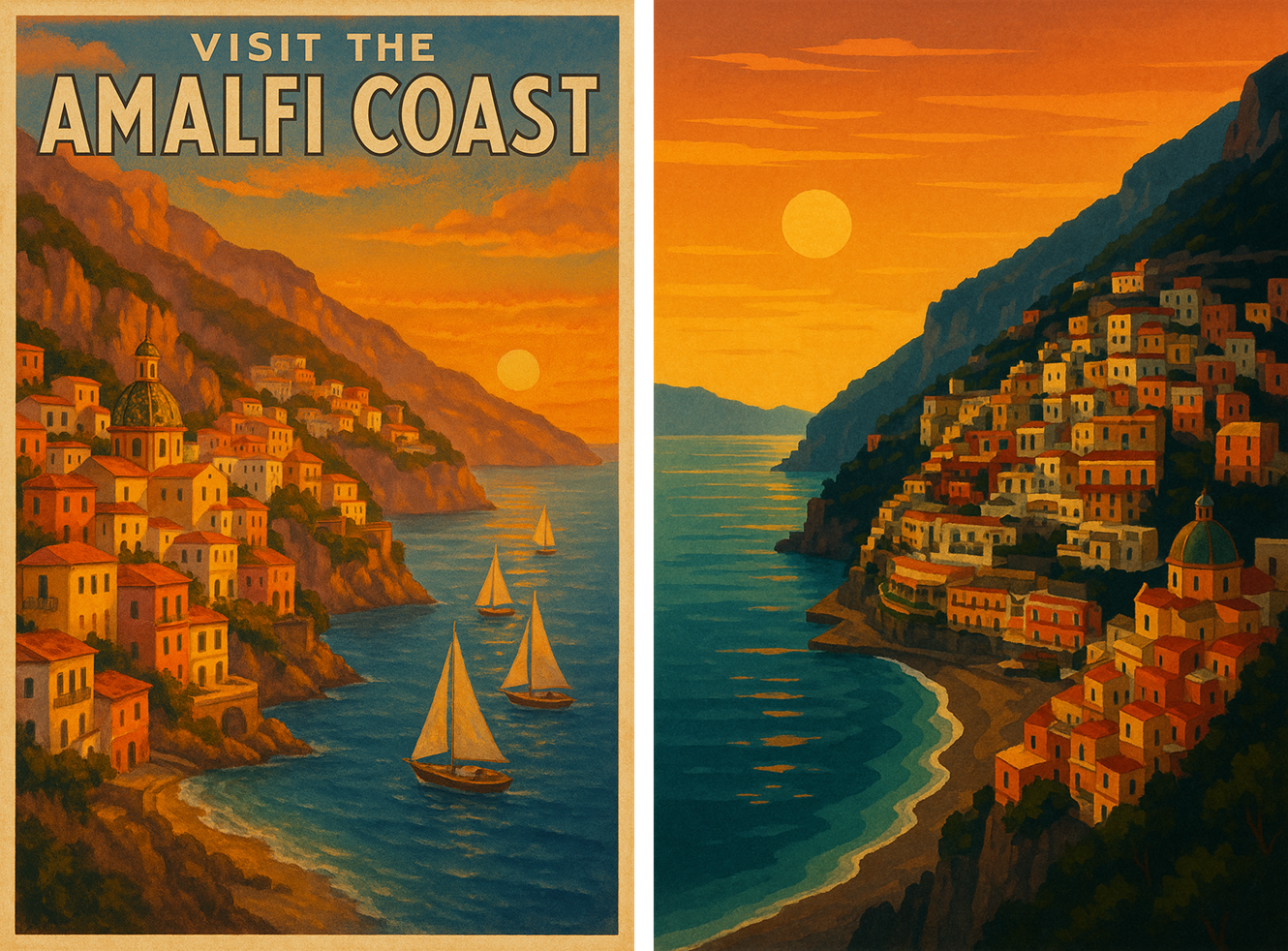

An overlooked tool in image prompting is the negative instruction. You can explicitly tell the model what to avoid, which is especially crucial when trying to adjust or remove things like logos and words. That doesn't mean the actual image is nonsensical, just not what you want. For instance, I asked for “A vintage travel poster of the Amalfi Coast at sunset, with cliffs, colorful buildings, and sailboats.”

The result is exactly that, including "Visit Amalfi" written on the poster. Without specific exclusions, the model fills in blanks using patterns from its training. It might assume you want title text, but if I want that kind of poster image without words, I need to say that. So, I followed up by asking for “A vintage-style travel poster of the Amalfi Coast at sunset, featuring cliffs and colorful buildings, with clean composition, no text, no logos, no watermarks.”

The negative phrases filter out the bits I don't want, and you can see that's what I ended up with. It's particularly useful to use negative prompts in posts, character portraits, and images with open sky or flat surfaces, where you might see text appear in certain conditions. It's also good to use negative prompts when trying for a picture of an animal or human, i.e., asking for “no extra limbs,” “no duplicate faces,” and “no distorted anatomy.”

You might also like

- I tried the trend of using ChatGPT to turn photos into Renaissance paintings: here’s how to do it

- I compared Google Gemini's new image editing feature to ChatGPT's, and it's much better at sticking to the original

- I compared Adobe’s new Firefly Image Model 4 to ChatGPT’s image generator, and it’s like they went to the same art school