Ed Newton-Rex, the head of the team responsible for developing Stability AI's AI music generator Stable Audio, has resigned from his post in protest over the company's use of copyrighted material in training its AI models.

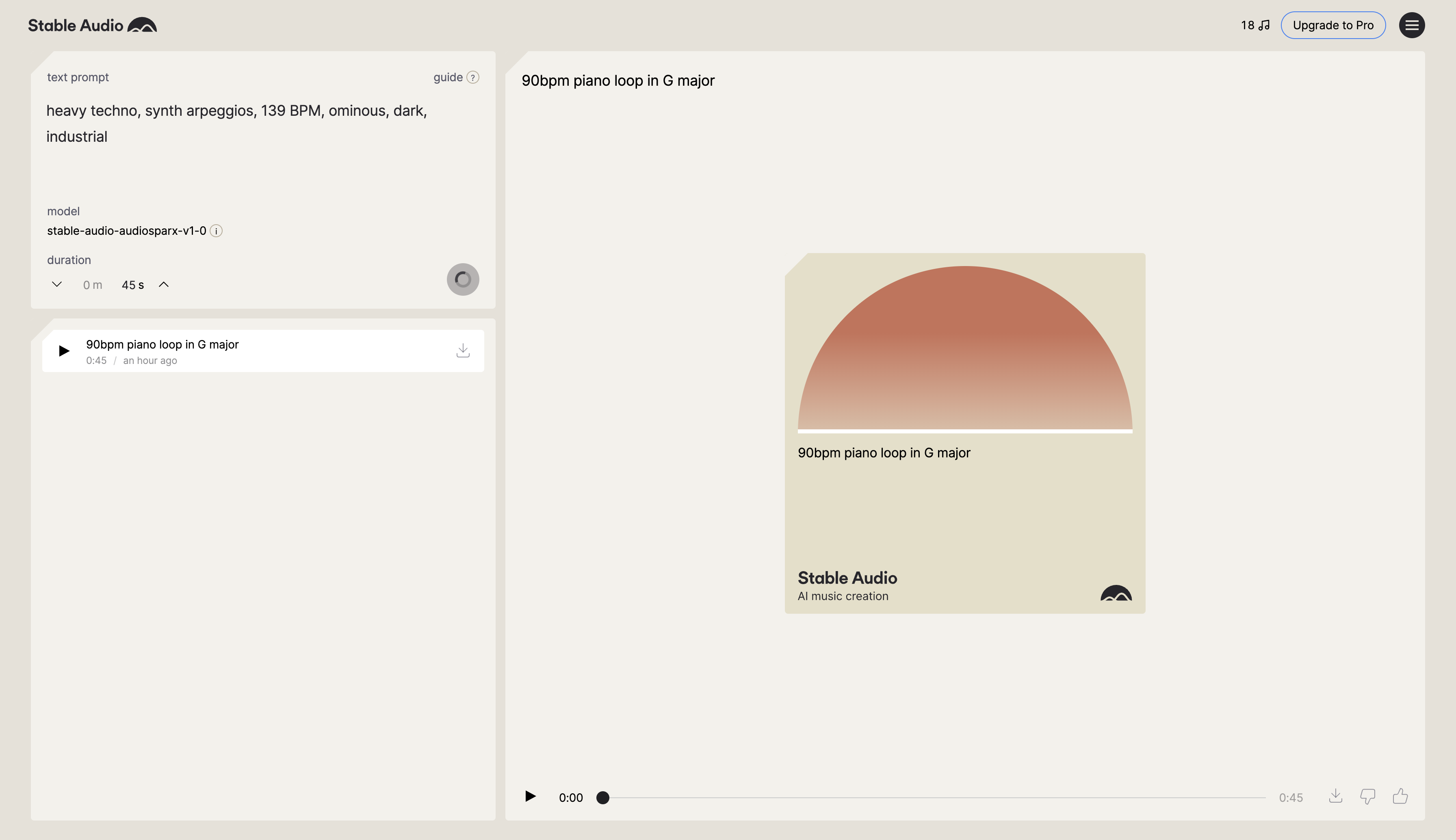

Stability AI is the company behind two of the most advanced tools in generative AI: Stable Audio, which generates music based on instructions provided by the user, and Stable Diffusion, which generates images in the same fashion. Stable Audio was trained on 19,500 hours of music from the content library AudioSparx, obtained through a data access agreement between the two parties.

In a statement posted to his Twitter/X account, Newton-Rex takes issue with Stability AI's claim that using copyrighted material to train AI models is acceptable under 'fair use', a provision in US copyright law that permits limited use of such material without the copyright holder's permission for purposes such as criticism, news reporting, teaching, and research.

"This is a position that is fairly standard across many of the large generative AI companies, and other big tech companies building these models," Newton-Rex says. "But it’s a position I disagree with." Newton-Rex points out that one of the factors US law uses to decide whether a use is fair is "the effect of the use upon the potential market for or value of the copyrighted work".

"Today’s generative AI models can clearly be used to create works that compete with the copyrighted works they are trained on," he continues. "I don’t see how using copyrighted works to train generative AI models of this nature can be considered fair use."

Not only are Stability AI's training practices not protected under fair use, Newton-Rex says, they are morally wrong. "Companies worth billions of dollars are, without permission, training generative AI models on creators’ works, which are then being used to create new content that in many cases can compete with the original works," he says. "I don’t see how this can be acceptable in a society that has set up the economics of the creative arts such that creators rely on copyright."

"I’m a supporter of generative AI," Newton-Rex continues. "It will have many benefits - that’s why I’ve worked on it for 13 years. But I can only support generative AI that doesn’t exploit creators by training models - which may replace them - on their work without permission."

Stability AI CEO Emad Mostaque responded to Newton-Rex's post by saying that "this is an important discussion". Mostaque also linked to a document outlining the company's official response to the United States Copyright Office's inquiry into AI and copyright.

Many of the replies to Newton-Rex's post have questioned his position, comparing the way AI models "learn" from copyrighted material to the way humans learn to play and write their own music by studying and taking influence from existing works. "Why is training a model on copyrighted works any different than a student reading a textbook, or a musician being 'influenced' by the Beatles?" asks one respondent.


> I’ve resigned from my role leading the Audio team at Stability AI, because I don’t agree with the company’s opinion that training generative AI models on copyrighted works is ‘fair use’. First off, I want to say that there are lots of people at Stability who are deeply…
>
> Ed Newton-Rex on Twitter/X, November 15, 2023