High-risk artificial intelligence such as neurotechnology may need to be banned "under certain circumstances", the University of Newcastle believes.

Concerns about neurotech - devices that interface with the nervous system - were highlighted in a federal government probe into "responsible AI".

Examples include brain implants and wearable devices, promoted to "unlock human potential" and meet medical needs.

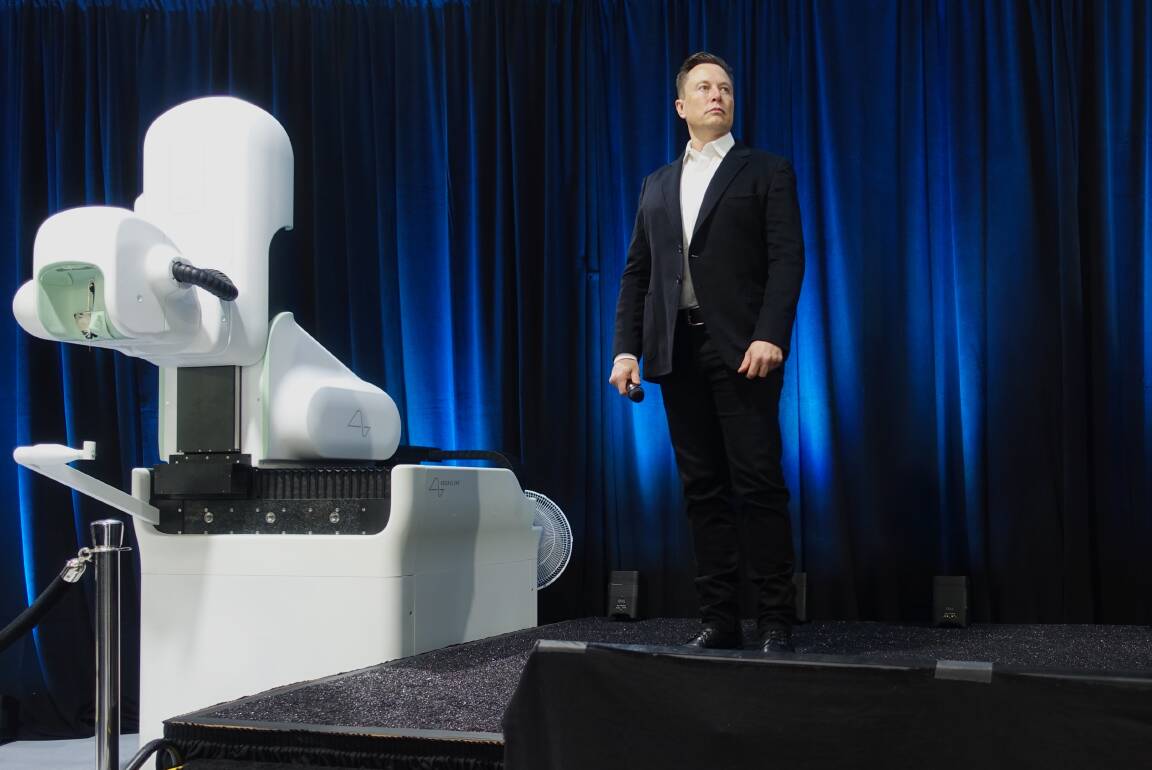

Elon Musk's Neuralink has started human trials of brain implants for people with paralysis.

University of Newcastle Professor Tania Sourdin, who researches AI, told the Newcastle Herald that "it seems likely that humans will be enhanced with neurotech" in the near future.

"I think that within the next 10 to 20 years, brain implants - and certainly external brain stimulators - will be as common as implanted heart devices," Professor Sourdin said.

"Often they will be paired with pharmaceutical inputs."

Professor Sourdin said neurotech advances "may mean that decisions and actions are prompted by forms of AI".

"AI developments are inevitably taking us to a future that we are probably not ready for," she said.

"Do we really want AI to know our most intimate thoughts? What if the AI is influencing our behaviour?

"What happens with a neurotech device if the company who makes it goes under? What about privacy and data capture?"

On Wednesday, the federal government released its interim response to its discussion paper, titled "Supporting responsible AI".

In a submission to the discussion paper, the University of Newcastle raised concerns about neurotech including "embedded materials" such as "microbots".

The Australian Human Rights Commission said "neurotechnology has created unheard of opportunities for collecting, maintaining and utilising brain data".

This could be used to "understand and manipulate the human mind".

"Such applications have immense benefits for individuals and will revolutionise the way we live," its submission said.

"However, neurotechnologies also raise profound human rights problems."

The submission said researchers had found ways to use neurotech to "implant artificial memories or images into a mouse's brain - generating hallucinations and false memories of fear".

"Human brains have been directly connected to cockroach brains. This allowed the human to control certain behaviours, such as steering their paths by thought alone.

"Could the technology be improved to manipulate human thoughts and actions?"

The University of Newcastle submission also highlighted "significant democratic risks" if AI replaces judges in the legal system.

In her research, Professor Sourdin has raised concerns about the "dehumanisation of the justice process".

The government said it was examining ways to strengthen AI safeguards for citizens.

Professor Sourdin said the government had to strike a balance.

"On the one hand, it is about encouraging AI innovation. On the other hand, there are safety and systemic concerns."

Federal Minister for Industry and Science Ed Husic said the government's response was "targeted towards the use of AI in high-risk settings, where harms could be difficult to reverse".

This included critical industries such as water, electricity, health and law enforcement.

The government sought to ensure that "the vast majority of low risk AI use continues to flourish largely unimpeded".

Mr Husic reportedly prefers amendments to existing laws, rather than a new legislative framework for high-risk AI.

Professor Sourdin said some existing laws could be adapted to deal with AI issues, but "new laws will also be required".

Consumer group Choice said in its submission that it supported the European Union's AI framework as "the most advanced risk-based model".

"High-risk technologies would be subject to restrictions or prohibitions," it said.

The EU's proposed new law would ban AI systems that "deploy subliminal techniques beyond a person's consciousness" to "distort behaviour" and cause "physical or psychological harm".

The Tech Council of Australia said "Australia has a sound, existing legal framework relevant to AI".

"To fully realise the potential of AI, Australia must look beyond regulation towards enabling uptake in the technology."

Its submission said: "AI is the 21st century equivalent of manufacturing in the 20th century".

"It will unleash a widespread wave of productivity and economic enhancements."

The Tech Council did not support following the path of the EU "in developing a standalone AI Act".

But Choice said AI systems had already harmed consumers through "algorithmic discrimination, perpetuation of bias, scams, misinformation and chatbots".

"Businesses that use AI should not be trusted to regulate themselves," it said.

"Strong laws enforced by well-resourced regulators are needed to promote the responsible use of AI and protect consumers from further harm."