Big tech corporations are racing to join the AI bandwagon, investing billions in the fast-evolving technology. However, market analysts and investors have raised concerns about the exorbitant spending with no clear path to profitability, amid claims that we're in an AI bubble on the verge of bursting.

Microsoft was recently snubbed in Time Magazine's famed "Person of the Year" cover story despite its relentless push to integrate AI and Copilot across its tech stack. But the company, alongside other AI giants like OpenAI and Google, may have bigger fish to fry.

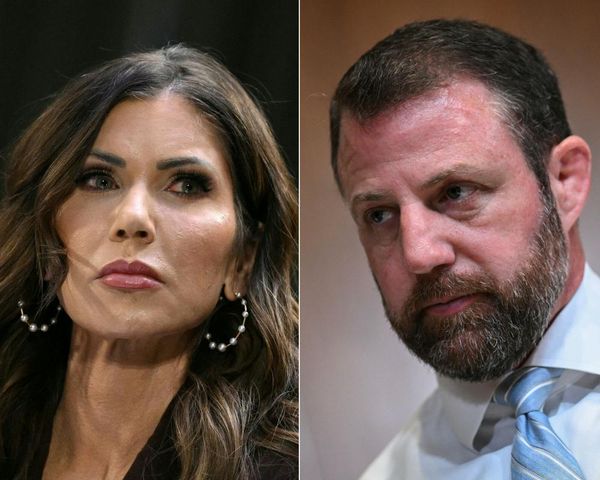

Late last week, a group of state attorneys general, joined by dozens of AGs from U.S. states and territories through the National Association of Attorneys General, sent a letter to leading AI labs warning them to address “delusional outputs.” The letter cautioned that failure to remedy this issue could constitute a violation of state law and expose the companies to legal consequences (via TechCrunch).

The letter demands that companies implement extensive safeguards designed to protect users, including transparent third-party audits of LLMs to support early identification of delusional or sycophantic outputs. Additionally, the letter demands new incident reporting procedures that will notify users when AI-powered chatbots generate harmful content.

This news comes amid a rise in the number of suicide incidents related to AI. A family sued OpenAI, claiming that ChatGPT encouraged their son to take his own life. In response, the AI firm integrated parental controls into ChatGPT's user experience to mitigate the issue.

Perhaps more importantly, the letter says the safeguards should also allow academic and civil society groups to “evaluate systems pre-release without retaliation and to publish their findings without prior approval from the company.”

According to the letter:

“GenAI has the potential to change how the world works in a positive way. But it also has caused—and has the potential to cause—serious harm, especially to vulnerable populations. In many of these incidents, the GenAI products generated sycophantic and delusional outputs that either encouraged users’ delusions or assured users that they were not delusional.”

Finally, the letter suggested that AI labs should treat mental health incidents the same way tech corporations handle cybersecurity incidents. It'll be interesting to see if AI research labs like OpenAI adopt some of these suggestions, especially after a recent damning report claimed that the company is being less than truthful about its research, only publishing findings that cast its tech in a favorable light.
