Until now, determining whether artificial intelligence-powered platforms are spreading disinformation or negatively targeting kids fell to the companies operating them or to the few research organizations capable of sifting through publicly available data.

That changed in late August, when the European Union began such monitoring as it implements its law known as the Digital Services Act, which applies to online platforms such as social media companies and search engines that have more than 45 million active European users. The law is designed to combat hate speech and the spread of disinformation as well as to prevent the targeting of minors.

Although national governments of the 27-member European Union are responsible for enforcing the law, the EU also established the European Centre for Algorithmic Transparency, or ECAT, which now audits algorithms and underlying data from the 19 major platforms that meet the law’s criteria.

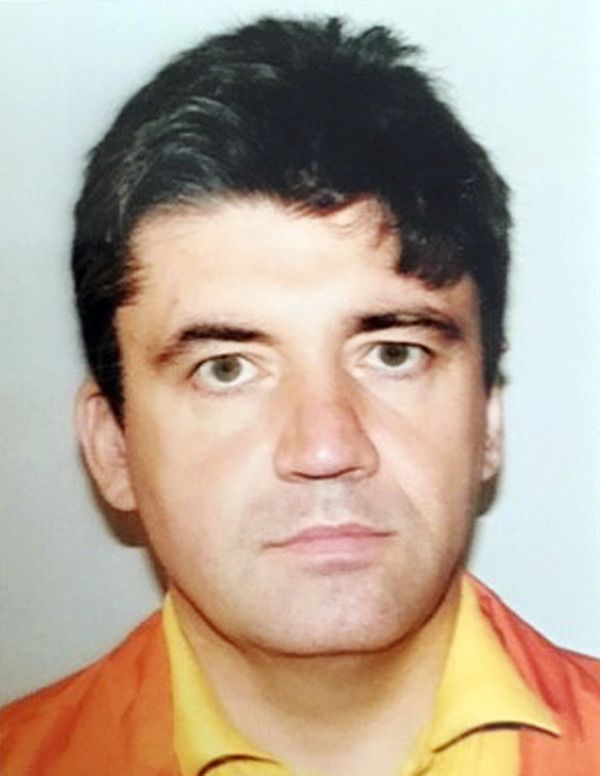

“When we say algorithmic transparency, auditing is what we are doing,” E. Alberto Pena Fernandez, head of the ECAT unit, said in an interview in his office in Brussels. “We are doing a systematic analysis of what the algorithm is doing.”

He said ECAT examines samples of a platform’s data and the software that produces recommendations or promotes posts, videos, and other material to users.

In the U.S., however, the idea of auditing is still in its infancy. Some lawmakers and advocates have been pushing for transparency, but legislation hasn’t advanced. One reason may be the critical questions that have to be confronted, as illustrated by the EU’s handling of the process.

Fernandez likened the European method to financial auditors who certify a company’s performance.

“We say, ‘this is the risk we are trying to avoid’ and we ask the company to show us the algorithm that deals with that risk,” Fernandez said. In the case of disinformation, for example, “we look at the algorithm dealing with the disinformation or the moderation of that content and ask the company to show us how it works.”

The 19 platforms that meet the EU’s definition of large platforms include those of top U.S. companies such as Amazon.com Inc.; Apple Inc.’s App Store; the Bing search engine, part of Microsoft Corp.; Facebook and Instagram, both part of Meta Platforms Inc.; Google LLC; Pinterest; and YouTube; as well as those of European and Chinese companies, including Alibaba’s AliExpress, Booking.com, and TikTok.

The EU unit is building a set of “bullet-proof methodologies and protocols” by testing software and data provided by companies under strict confidentiality agreements to see if the algorithms produce results that are prohibited, such as disinformation and targeting of minors, Fernandez said.

If testing results don’t match a platform’s claims of safety and compliance, the company can be asked to change its algorithm. If that doesn’t happen, the company would be taken to court, Fernandez said. Companies face fines of as much as 6 percent of global sales and a potential ban from the European market.

No U.S. proposal has gone as far as that level of fine, and two senators have only recently suggested a new agency should be created to counter disinformation.

Sen. Edward J. Markey, D-Mass., proposed legislation in July that would require online platforms to disclose to users what personal information they collect and how algorithms use it to promote certain content. The bill would give the Federal Trade Commission authority to set rules for such disclosure.

In the previous Congress, Sen. Chris Coons, D-Del., proposed legislation that would require large online platforms to provide certified third-party researchers access to algorithms and data to help understand how their platforms work.

Google calls for audits

The idea of auditing is becoming central to ensuring that artificial intelligence systems operate within safe boundaries and don’t create widespread harms.

A legislative framework for holding AI systems accountable, recently proposed by Sens. Richard Blumenthal, D-Conn., and Josh Hawley, R-Mo., calls for a new oversight agency that “should have the authority to conduct audits” and issue licenses to companies developing AI systems used in high-risk situations such as facial recognition.

Blumenthal, who chairs the Senate Judiciary Committee’s panel on Privacy, Technology, and Law, told reporters last week that the idea of licensing and audits for AI systems was recommended by top AI scientists who have appeared before the subcommittee.

The National Telecommunications and Information Administration, a Commerce Department agency, received about 1,400 comments from companies and advocacy groups about creating an accountability system for artificial intelligence technologies.

Charles Meisch, a spokesman for the NTIA, said the agency couldn’t comment on its recommendations for an auditing system until it issues a public report later in the year.

But in public comments submitted to the agency, Google and AI safety company Anthropic PBC each backed the idea of audits.

“Assessments, audits, and certifications should be oriented toward promoting accountability, empowering users, and building trust and confidence,” Google said, adding that a cadre of independent auditors must be trained to carry out such tasks.

Google also said that “audits could assess the governance processes of AI developers and deployers,” while calling for an international audit standard.

Anthropic called for developing effective evaluations, defining best practices, and building an auditing framework that various government agencies can implement.

Irrespective of what Congress does on auditing AI, a U.S. government entity having the power that the EU’s algorithmic center has would be highly unusual, said Rumman Chowdhury, Responsible AI fellow at the Berkman Klein Center for Internet & Society at Harvard University.

“We’ve never had a government entity say ‘we will be the determinant of whether or not your privately owned platform is impacting democratic processes,’” in the form of disinformation, Chowdhury said.

If such algorithmic auditing practices become the global norm, some autocratic governments could use the power to silence critics in the name of squelching disinformation, said Chowdhury, who is also a consultant to the European Centre for Algorithmic Transparency.

In Europe, although the algorithmic transparency unit is designed to tackle online disinformation and targeting of minors on social media platforms and search engines, Fernandez said the unit’s research on algorithms is likely to play a role in how the EU tackles AI in general.

The center’s analysis and impact assessments of algorithms will likely help the EU as the bloc moves closer to enacting another law, known as the EU Artificial Intelligence Act, by early next year, Fernandez said. The law would categorize AI systems according to risks and prohibit certain types of applications while requiring strict controls over high-risk applications.

Note: This is the third in a series of stories examining the European Union’s regulations on technology and how they contrast with approaches being pursued in the United States. Reporting for this series was made possible in part through a trans-Atlantic media fellowship from the Heinrich Boell Foundation, Washington D.C.

The post As US debates, EU begins to audit AI appeared first on Roll Call.