Apple Intelligence, Apple’s entry into the modern AI era, has been steadily evolving since its debut at WWDC 2024 — and in 2026, it’s far more central to the Apple experience than it was at launch. Designed to take on the advances made by Google, OpenAI and other AI leaders, Apple Intelligence now underpins key features across the iPhone, iPad, and Mac.

At its core, Apple Intelligence is meant to make everyday tasks smarter through on-device intelligence, deeper automation, and tighter integration across Apple’s ecosystem. Instead of treating AI as a standalone tool, Apple has woven it into writing tools, image search, Siri and system-level workflows that operate in the background.

Privacy remains Apple’s defining differentiator in the AI race. Unlike many rivals that rely heavily on cloud processing, Apple prioritizes on-device AI whenever possible. It turns to its secure Private Cloud Compute servers only when more advanced processing is required. The goal is to deliver the benefits of modern AI while minimizing the amount of personal data that ever leaves your device.

Apple Intelligence’s rollout hasn’t been perfectly smooth — early versions lagged behind competitors in some areas — but the platform has matured significantly through consistent updates. Apple continues to expand its capabilities, refine performance, and close the gap with its rivals.

In this guide, we’ll break down how Apple Intelligence works in 2026, what it can do today, which devices support it, and where it still has room to grow.

Latest updates

Live Translation

Live Translation is one of the most notable additions in Apple’s recent Apple Intelligence updates, and it is exactly what it sounds like: a system-wide, real-time language translator designed to make cross-language communication feel natural rather than clunky.

The feature is deeply embedded across Apple’s core communication tools, including Messages, FaceTime, and regular phone calls. That means you can text, video chat, or speak with someone in another language and have the conversation translated in real time, without needing a separate translation app.

Apple has also extended Live Translation beyond digital conversations. When paired with compatible AirPods (AirPods Pro 3, AirPods Pro 2, or AirPods 4 with Active Noise Cancellation), the feature can translate in-person speech, effectively turning your iPhone and AirPods into a real-time interpreter. This makes it particularly useful for travel, business meetings, or everyday interactions with people who speak a different language.

True to Apple’s privacy-first philosophy, all Live Translation processing happens on-device through Apple Intelligence. Conversations are not stored, logged, or sent to third-party servers, ensuring that sensitive or personal discussions remain private.

At launch, Live Translation supports a limited set of languages, but Apple has confirmed plans to expand this over time. Future updates are expected to add Italian, Japanese, Korean, and Chinese, making the feature far more globally useful.

Visual Intelligence updates

Visual Intelligence is one of the foundational layers of Apple Intelligence, designed to make your iPhone smarter about what you see and interact with on your screen.

In practice, it functions similarly to Google Lens. Users can analyze images, text, or objects displayed on their iPhone and search for related information directly from the screen — without needing to switch apps or manually upload images.

With recent updates, Visual Intelligence can now surface visually similar images from Google, as well as pull relevant results from apps that are integrated into Apple’s ecosystem. That means you can point your iPhone at something — or select something on your screen — and quickly get context, shopping links, or related content.

Apple has also tied Visual Intelligence into its partnership with ChatGPT. If you come across something on your screen that you don’t understand — an image, document, or piece of text — you can ask ChatGPT questions about it directly through the Visual Intelligence interface. This effectively turns your iPhone into a more interactive, AI-powered visual assistant.

Workout Buddy

Workout Buddy is one of the more distinctive, and distinctly Apple, applications of Apple Intelligence, blending AI with health and fitness.

Apple describes it as a first-of-its-kind, AI-powered coaching experience built directly into the iPhone and Apple Watch ecosystem. Rather than offering generic fitness tips, Workout Buddy personalizes its guidance based on your individual workout data and history.

During a workout, the feature continuously analyzes real-time metrics such as heart rate, pace, distance, and performance trends. Using Apple Intelligence, it then delivers spoken motivation and feedback tailored specifically to you — similar to having a personal trainer in your ear.

What sets this apart from standard fitness coaching apps is how the guidance is generated. Apple uses a new text-to-speech model to transform workout insights into a dynamic, natural-sounding voice that has been trained on real-life fitness coaches. The result is motivational feedback that feels more human than robotic.

As with other Apple Intelligence features, all of this health data is processed privately and securely on your device, ensuring that your fitness history and biometric data remain protected.

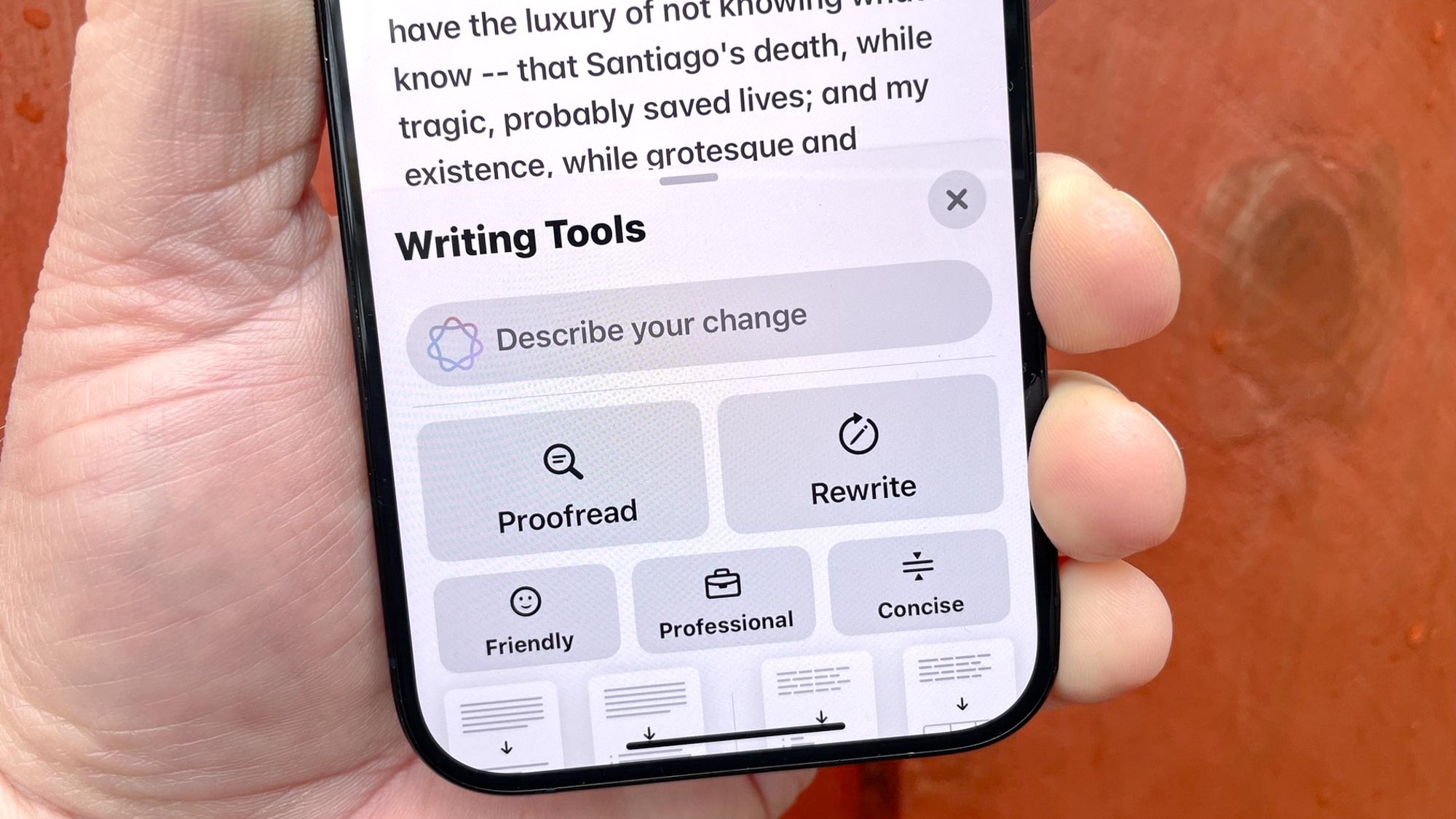

Expanded contextual Writing Tools

Apple Intelligence has gotten better at helping you write and refine text across the system:

- System-wide writing suggestions — improved drafting, rewriting, summarizing, and expanding for apps like Mail, Notes and Safari.

- Tone and intent controls — you can prompt Apple Intelligence to tailor tone (e.g., professional, casual, concise) directly within the keyboard suggestions.

These features now feel more fluid and capable than at launch and are increasingly context-aware, meaning they adapt to the app you’re using.

Deeper integration in Apple apps

Beyond Messages and FaceTime, Apple Intelligence has been woven into more of Apple’s native apps:

- Mail & Calendar: Smarter subject lines, summary suggestions and reminder insights.

- Safari: Enhanced search previews and contextual sidebars that pull relevant info from linked pages.

- Files & Notes: Contextual tagging and better content understanding (e.g., scanning a set of files and highlighting key items).

These integrations make the intelligence feel more “system-native” rather than a bolt-on feature.

Improved Siri Intelligence

Apple Intelligence has powered a quieter but notable upgrade to Siri:

- More conversational responses

- Follow-up question understanding

- Better context continuity

Multimodal interactions

Building on Visual Intelligence, Apple has expanded its multimodal capabilities: you can now combine text, images, and screen selections in a single query.

For example: “What’s this ingredient on screen, and how can I use it in a dinner recipe tonight?”

Or: “Find similar outfits to this screenshot and show me places I can buy them.”

This is broader than simple object recognition — it’s more like integrated visual reasoning.

Enhanced knowledge / local context

Apple has worked on better local context understanding — meaning Apple Intelligence can now pull together information from your device (emails, texts, documents, photos) in a more proactive and secure way.

For example:

- Summarizing all mentions of a specific event across your messages and calendar

- Suggesting reminders based on previous conversations

- Contextual alerts about travel bookings or deadlines the system detects in your files

This stays entirely on-device, preserving privacy but giving you a “memory layer” that feels more personalized.

Key points

- Siri revamp: A more intelligent, conversational Siri that remembers context and can hand off more complex requests to ChatGPT.

- Multimodal capabilities: Features like Visual Intelligence leverage the camera live view to help you learn more about the world around you and take action.

- System-wide AI enhancements: Integrated AI across Mail, Messages, Notes, Safari, and third-party apps.

- On-device & Cloud AI hybrid: Apple balances local processing for privacy with cloud AI for complex tasks, ensuring fast and efficient performance.

- AI-powered Writing Tools: Writing assistance across Mail, Messages, Notes, and more.

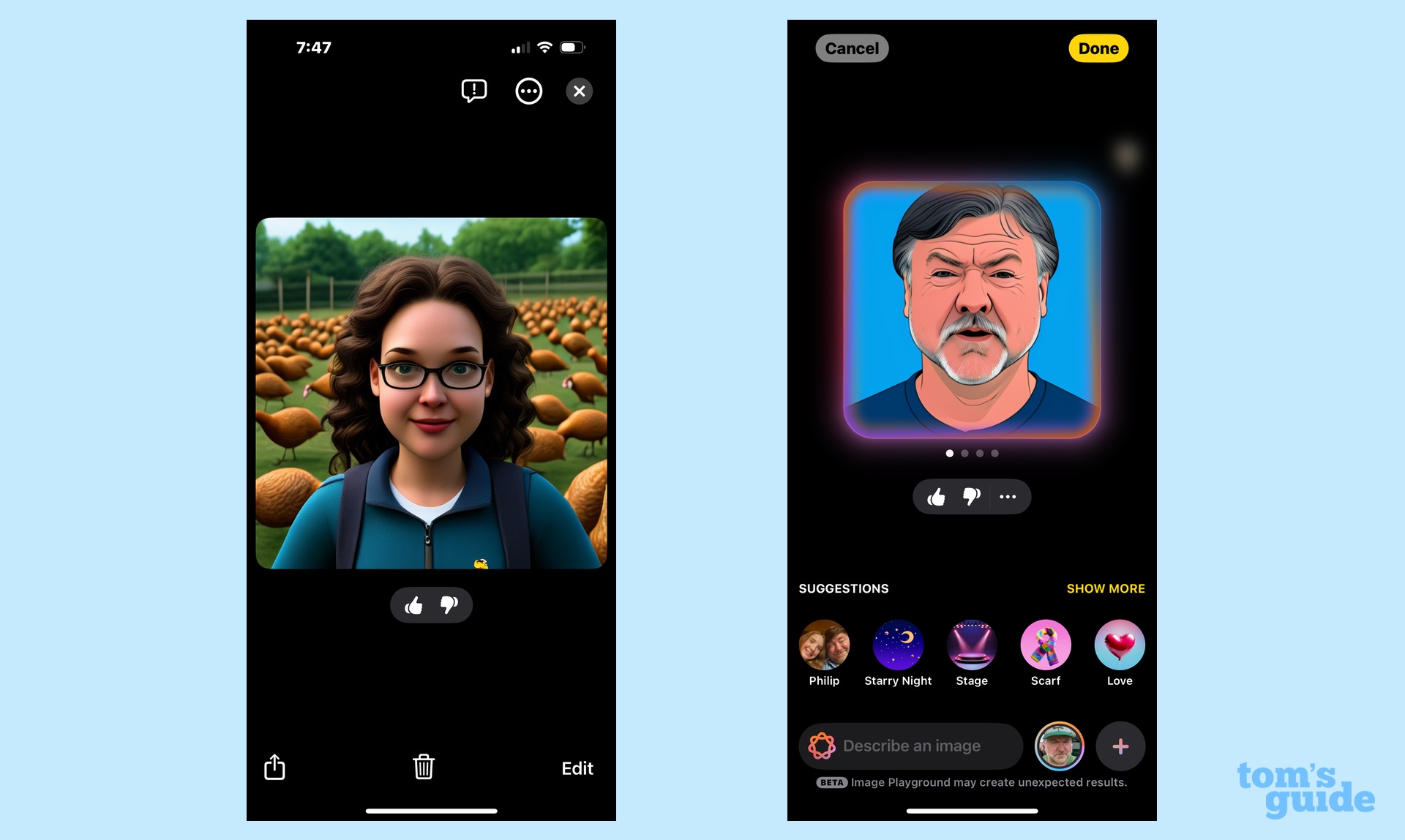

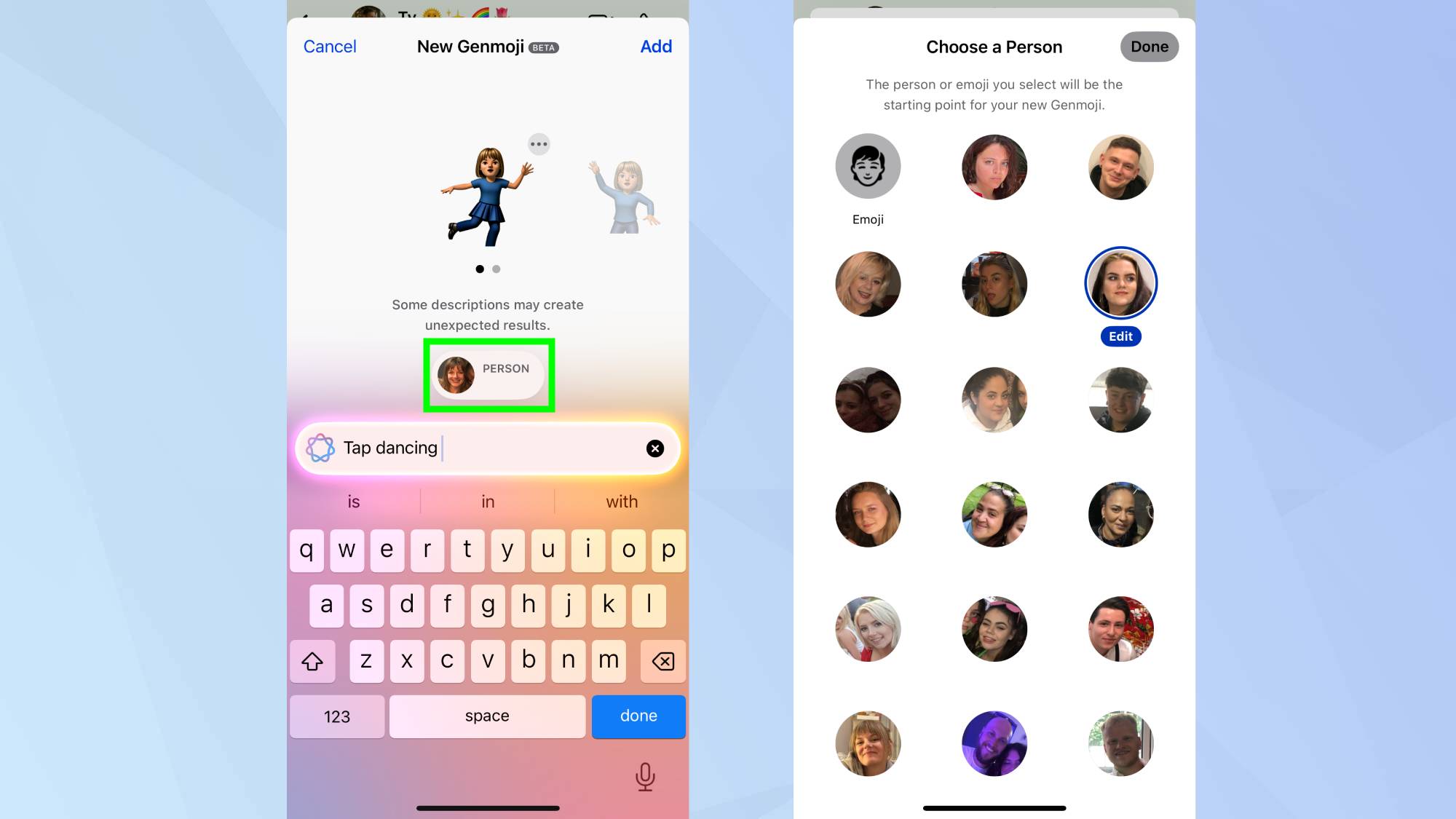

- Image generation: AI-powered tools that allow users to generate images based on descriptions in Image Playground and Genmoji.

- Smart photo editing: Tools like Photo Cleanup can help remove unwanted objects or subjects from photos.

Key features

With the release of iOS 18.4, Apple continues to enhance its suite of AI-driven features under the Apple Intelligence initiative, aiming to provide users with more intuitive and efficient experiences across their Apple devices.

Writing Tools

A standout feature since launch, Writing Tools helps users refine their text: it can check spelling and grammar, rewrite whole sections in a different tone, and summarize highlighted content.

ChatGPT is also built into Writing Tools and is available wherever you can enter text, from Mail and Messages to Notes.

Photo enhancements

Photo Cleanup: Similar to Google's Magic Eraser, Photo Cleanup identifies and removes unwanted objects or people from a photo. Edits happen directly within the Photos app, so users can tidy up images while preserving the look of their photo collections.

Natural Language Search in Photos: This feature lets users find the images they are looking for by describing them. Whether it's a color, a time of year, or a subject within the photo, users can type a quick prompt to locate specific images.

Notification and message enhancements

Notification summaries: To manage the influx of notifications, Apple Intelligence introduced Notification Summaries. The feature gives users a brief idea of what each notification is about, reducing distractions from less important alerts.

Message summaries: For lengthy emails or texts, Apple Intelligence can generate summaries, allowing users to grasp the main points without reading the entire message.

Reduce Interruptions Focus: In an effort to help users maintain concentration, this feature helps to prioritize notifications, permitting only important alerts to come through.

Summarized audio recordings: Since iOS 18.1, users can record and transcribe phone calls in Apple's Phone app, and Apple Intelligence can summarize the content of these conversations. The same applies to recordings made in the Notes app.

Smart Reply: This feature offers AI-generated responses in emails and messages, adapting suggestions based on the content of the received message.

Siri

Siri enhancements: Siri has received significant updates since Apple Intelligence launched, and iOS 18.4 brought more, including a more conversational tone and improved understanding of user queries.

Unfortunately, an even more capable Siri may not arrive with iOS 18.5 later this spring; it appears we may not see Siri 2.0 until later this year, possibly not until 2026.

"It’s going to take us longer than we thought to deliver on these features and we anticipate rolling them out in the coming year," Apple spokesperson Jacqueline Roy reportedly told Daring Fireball.

The delayed Siri upgrades include personal context, on-screen awareness, and in-app actions across many apps, mostly Apple's own.

ChatGPT integration: Through a partnership with OpenAI, Apple integrated ChatGPT into Siri with iOS 18.2, allowing users to engage with the chatbot using voice commands. This integration expands Siri's capabilities, providing access to a broader range of information and functionalities.

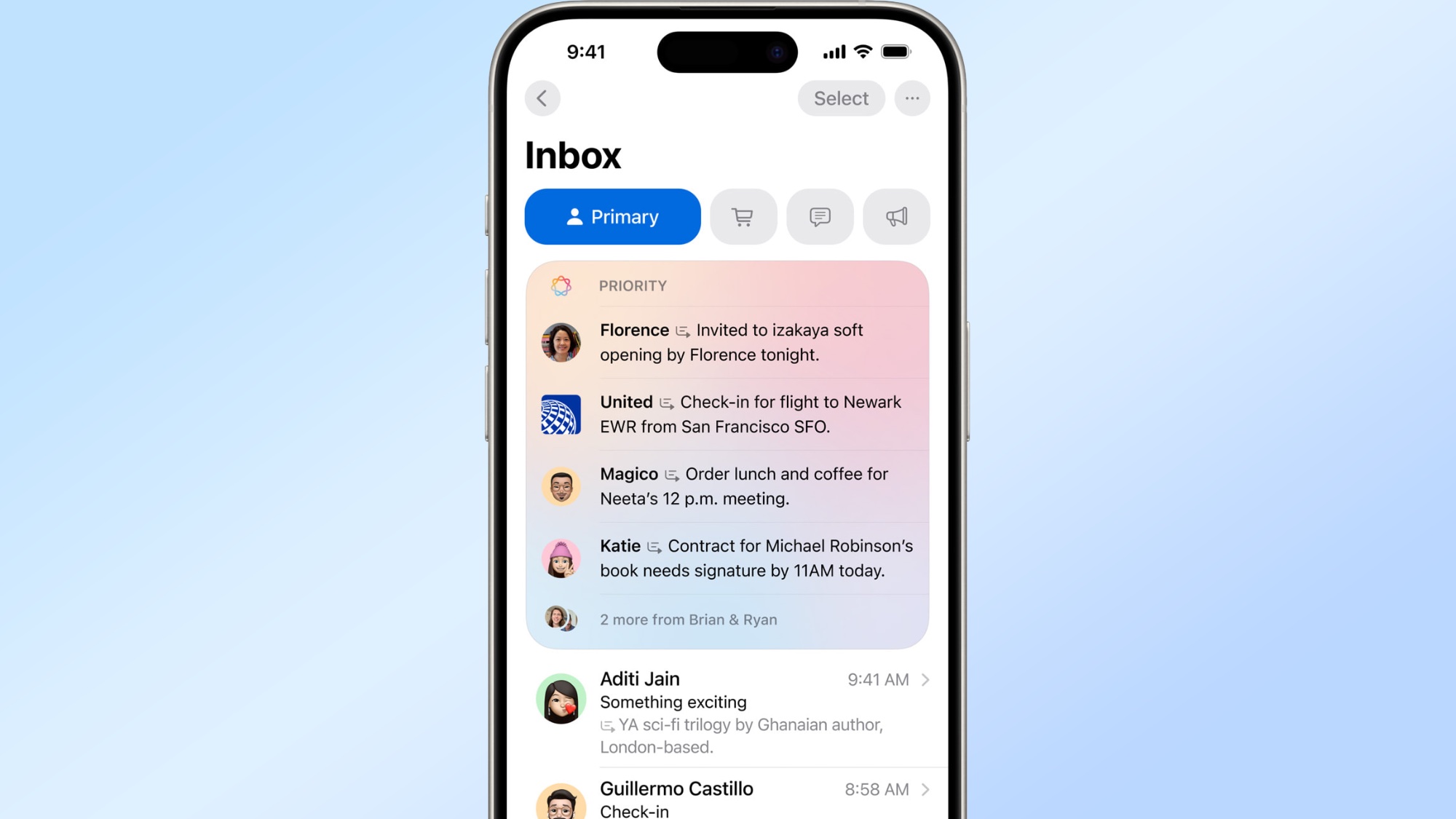

Priority Messages in Mail

Apple Intelligence now helps identify time-sensitive emails and messages, pushing them to the top of the inbox with Priority Messages. Users can keep the traditional mail experience of "List View" or choose to sort their emails into "Categories" such as Primary, Transactions, Promotions, and Updates.

This prioritization ensures that users can address urgent communications promptly without sifting through less critical content.

Visual Intelligence

Visual Intelligence functions similarly to Google Lens, enabling users to analyze the world around them through the camera.

Accessible via the Camera Control button on the iPhone 16 series, this feature allows for real-time translation, web searches, and more, enhancing the device's interactive capabilities.

Visual Intelligence is also available via the Action Button on the iPhone 15 Pro and iPhone 16e.

Image Playground

Apple Intelligence's Image Playground makes it easy for users to generate images in customized styles such as sketch or cartoon. With a simple text prompt, Image Playground generates images based on the user's request.

The AI takes image generation even further by letting users transform hand-drawn sketches into detailed images within the Notes app using the Image Wand.

Genmoji

Genmoji, introduced in Messages, gives users the creative freedom to create emoji that don't yet exist. With a simple text prompt, custom emoji are generated and can then be used in texts and iMessages to add a more personal touch.

Apple Intelligence supported devices

Apple Intelligence requires high-performance Apple Silicon chips, meaning it won’t be available on older devices. The following devices are suitable for Apple Intelligence:

Mac:

MacBook Air (M1, 2020) or newer

MacBook Pro (M1, 2020) or newer

Mac mini (M1, 2020) or newer

Mac Studio (M1 Max, 2022) or newer

iMac (M1, 2021) or newer

iPad:

iPad Pro (5th Gen, 2021) or newer

iPad Air (5th Gen, 2022) or newer

iPad mini (A17 Pro, 2024) or newer

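iPhone:

iPhone 15 Pro and iPhone 15 Pro Max

iPhone 16 lineup (including iPhone 16e) or newer

Because Apple Intelligence needs a recent, high-performance Apple silicon chip (A17 Pro or later on iPhone, M1 or later on Mac and most iPads), older iPhones and Intel-based Macs will not support these features.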

What’s next for Apple Intelligence?

Apple’s approach to AI sets it apart from competitors like Google and Microsoft by emphasizing privacy (Apple says user information is not stored), on-device intelligence, and seamless ecosystem integration. As Apple continues to refine its AI models, several developments beyond the anticipated Siri updates seem likely.

Expanded language support: Apple Intelligence initially launched in English only, and language support is sure to keep expanding globally.

More third-party app integrations: Developers may gain tools to integrate Apple Intelligence into their apps.

Advancements in multimodal AI: Future updates could bring video generation, deeper AR/VR integration, and real-time collaboration tools.

AI-Powered productivity features: Apple Intelligence is expected to enhance productivity apps like Notes, Calendar, and Reminders with more automation. We may even see something like what Gmail recently launched with Google Calendar.

Although Apple may be moving more slowly on AI than other tech giants, it continues to put privacy first, which should help users trust and feel comfortable with AI.

Final thoughts

Apple Intelligence is one of the most significant AI updates in Apple’s history, combining cutting-edge AI with Apple’s signature privacy and user-first approach.

With a focus on on-device intelligence, a smarter Siri, powerful writing tools, and creative AI features, Apple is setting the stage for the future of AI-powered experiences across iPhones, iPads, and Macs.

As AI becomes an essential part of everyday tech, Apple Intelligence is poised to redefine how users interact with their devices—securely, seamlessly, and intelligently.