AWS has reaffirmed its intention to be one of the world's major hardware providers with the launch today of its most powerful and energy-efficient chips yet.

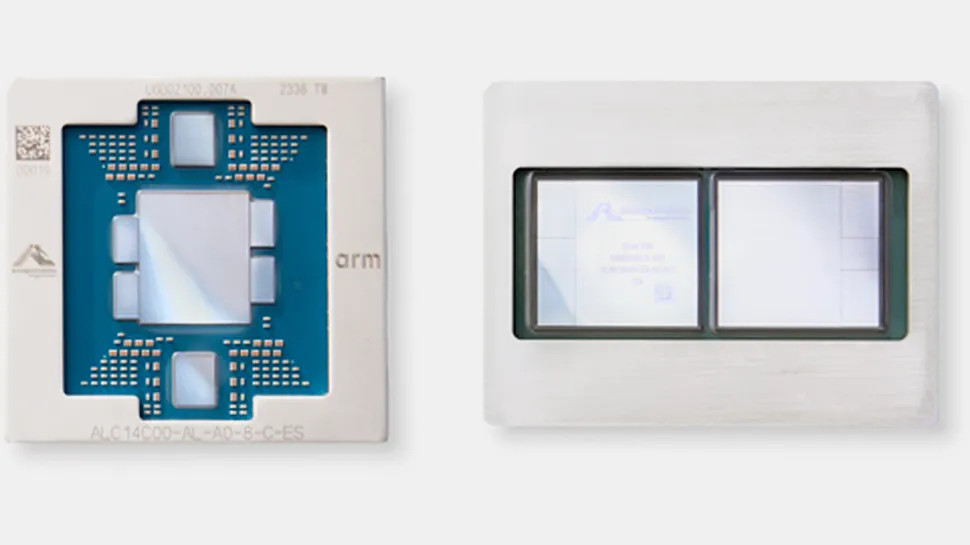

The new Graviton4 and Trainium2 chips, unveiled by CEO Adam Selipsky at the company's AWS re:Invent 2023 event, are designed to power the next generation of AI and machine learning models, offering more performance and efficiency than ever before.

“Silicon underpins every customer workload, making it a critical area of innovation for AWS,” said David Brown, vice president of Compute and Networking at AWS. “By focusing our chip designs on real workloads that matter to customers, we’re able to deliver the most advanced cloud infrastructure to them.”

AWS Graviton4 and Trainium2

AWS is promising a major step up with Graviton4, claiming it provides up to 30% better compute performance, 50% more cores, and 75% more memory bandwidth than current-generation Graviton3 processors.

The company says that as customers experiment with and deploy more and more AI-powered workloads, their compute, memory, storage, and networking requirements are growing. They therefore need higher performance and larger instance sizes, delivered at an affordable cost and with enough energy efficiency to limit the impact on the environment.

AWS is making Graviton4 available in Amazon EC2 R8g instances, which it says offer larger instance sizes with up to three times more vCPUs and three times more memory than the current generation. This, the company says, allows customers to process larger amounts of data, scale their workloads, improve time-to-results, and lower their total cost of ownership.

Following the original Trainium's launch in 2020, the second-generation Trainium2 aims to offer faster and more efficient training for current and future AI models, which are using bigger datasets than ever: today's most advanced foundation models (FMs) and large language models (LLMs) have hundreds of billions to trillions of parameters.

AWS says Trainium2 will deliver up to four times faster training than the first-generation hardware, along with three times more memory capacity and up to two times better energy efficiency.

Trainium2 can be deployed in EC2 UltraClusters of up to 100,000 chips, making it possible to train FMs and LLMs in a fraction of the time previously needed. The company gives the example of training a 300-billion-parameter LLM in weeks rather than months.