Tech cognoscenti and major media responded in unison this fall when Amazon announced its $1.25 billion investment in Anthropic, a generative AI startup. Amazon Web Services was scrambling to stay relevant in the post-ChatGPT world, they all agreed, “trying to keep pace” with Microsoft and Google (New York Times) or “racing to catch” them (CNBC) because it “doesn’t look like the leader” (Business Insider).

Never mind that AWS is the world’s dominant cloud provider, far larger than Microsoft’s Azure or Alphabet’s Google Cloud. The conventional wisdom was that cloud services had abruptly entered a new phase—Cloud 2.0, in which giants must compete based on how well their software, storage, and other tools support AI—and AWS wasn’t equipped to win under the new rules.

When asked if AWS is “catching up,” CEO Adam Selipsky doesn’t explicitly deny it. “We’re about three steps into a 10K race,” he tells Fortune. “Which runner is a half-step ahead or behind? It’s not really the important question. The important questions are, who are the runners? What does the course look like, what do the spectators and officiators of the race look like, and where do we think the race is going?”

Selipsky’s message to doubters, in other words, is to stop hyperventilating and stay focused on the long term. It’s exactly the message you’d expect from a company that has indeed seemed a half-step behind on AI. But it’s also a message Amazon has preached internally with great success since its founding—to “accept that we may be misunderstood for long periods of time,” to quote its famous Leadership Principles.

Recent events have certainly underscored the idea that it’s too early for any company to declare victory. The industry-wide panic attack that surrounded the OpenAI board’s mid-November firing of CEO Sam Altman (and the company’s almost immediate decision, heavily influenced by Microsoft, to rehire him) reminded the business world that generative AI is very much in its Wild West years.

Still, it’s reasonable for customers, competitors, and investors to wonder whether AWS’s Anthropic investment signals a broader strategic problem: In the Cloud 2.0 era, will AWS fall from its throne? And long term, who will be AI’s dominant global purveyor?

To come to grips with those questions, start with Anthropic. AWS bought into the startup for two reasons. (It took a minority stake, and both sides are able to raise the investment to as much as $4 billion.) The obvious reason is that Anthropic will make AWS its primary cloud provider, bringing with it Claude, its foundation model (a vast digital network like the one that supports ChatGPT), and the AI assistant of the same name that comes with it. (Anthropic introduced Claude in March; Claude 2 debuted in July, and a faster, cheaper version called Claude Instant 1.2 was released in August.) Future versions of Claude will be available to AWS customers through a service called Bedrock, which offers access to many foundation models. AWS has built or acquired access to several such models, and it argues that its cybersecurity and range of services for those models exceed what OpenAI currently offers. Still, none of AWS’s models has shown the range or efficacy of OpenAI’s GPT-4, hence the “falling behind” narrative.

The less obvious reason, at least to non-techies, is that Anthropic will use AWS’s proprietary AI chips to train and deploy future models. That’s important because AWS customers can use such models at much lower cost than those built on other companies’ hardware, such as Nvidia’s popular, expensive chips. (Thanks largely to enthusiasm about AI, Nvidia’s share price has more than tripled in 2023.) Brad Shimmin, an analyst at research firm Omdia, explains, “If AWS can go to an enterprise and say, ‘You could run Anthropic for one-third the cost of hosting OpenAI for GPT yourself,’ they’re going to win.”

Cost is a critical factor in the future of AI broadly. Building foundation models is enormously expensive. OpenAI CEO Sam Altman has said that training GPT-4, the company’s latest model, cost “more than $100 million,” in part because of OpenAI’s use of Nvidia’s top-tier chips. Amazon CEO Andy Jassy has told investors he’s “optimistic” that many foundation models will eventually be built on AWS chips, and AWS’s scale makes that aspiration highly plausible.

To get a sense of the upside for Amazon, consider the experience of LexisNexis, a longtime AWS customer that offers software and online services to law firms. It recently introduced a service called Lexis+ AI that can draft briefs and contracts, summarize judicial decisions, and analyze a firm’s own legal documents, among other features.

LexisNexis chief technology officer Jeff Reihl says his company started working with Anthropic before AWS invested in the startup; it also had access to Bedrock. As AWS and Anthropic expanded their relationship, the stars aligned, and the combined offering gave Lexis+ AI the best version of what it wanted. Reihl adds, “Whatever is the best model to solve a specific problem at the best performance, accuracy, and price is the one we’re going to pick.”

AWS is accustomed to offering a wealth of options and winning on cost. Proponents of Cloud 2.0 mistake AI’s importance for its effect on the basic nature of the cloud industry, Selipsky says. His view of the conventional wisdom: “AI is going to be a really, really, really big thing in the cloud—which it is—so, ‘Oh, it’s a different thing.’ ” But in fact, he continues, generative AI hasn’t changed the fundamentals of the cloud industry. “We’ve shown over the past 17 years that companies should not be operating their own data centers or buying their own servers or worried about networking,” he says. “The world will quickly come to the same realization with generative AI, which is that companies should figure out how to use it, not how to rack and stack it.”

Daniel Newman, CEO of the Futurum tech research and advisory firm, takes Selipsky’s argument a step further. The “massive costs” of AI mean that “the ability to afford this at scale is going to be limited to a very small number of companies,” he says. “I actually think AWS, Microsoft, and Google are too big to fail.”

Partnerships like Anthropic’s with Amazon and OpenAI’s with Microsoft could make Newman’s prediction even more likely to come true, says Ben Recht, a computer scientist at the University of California at Berkeley who follows the emerging industry. “At this point, the ‘startups’ and the ‘Big Tech’ people are all the same,” Recht says. “It’s the same rich people making each other even richer.”

“At this point, the ‘startups’ and the ‘Big Tech’ people are all the same.”

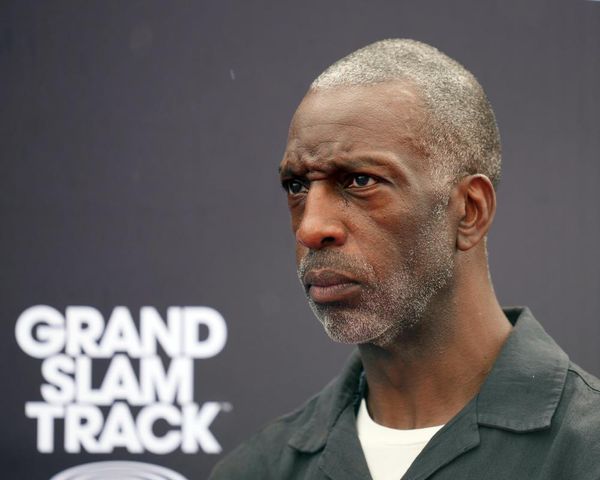

Ben Recht, computer scientist, UC–Berkeley

It’s tempting to venture that AWS could remain No. 1 for a long time. Its cloud market share has held steady in the 31% to 33% range for a decade, while Microsoft and Google remain far behind (23% and 10%, respectively). But both competitors have been gaining share steadily. Millions of companies have contractual relationships with Microsoft through their use of Office 365 and other productivity software; Azure could help those customers integrate AI into Excel, Word, and other Microsoft products. Google computer scientists, meanwhile, have made AI breakthroughs going back years. For AWS, competition in the AI era will only intensify.

AI has also drastically accelerated the pace of change. “It’s just unbelievable the progress that has been made in the past 18 months,” Reihl observes; the year ahead will likely bring more surprises.

The elevation of Anthropic to Big Tech partner is an unexpected chapter for one of AI’s more unusual startups. (Google announced an investment in Anthropic at the same time AWS did.) That said, some of OpenAI’s business partners clearly think Anthropic is more than ready to compete on the same playing field: During the OpenAI leadership kerfuffle, tech website The Information reported that more than 100 OpenAI customers contacted Anthropic.

Anthropic was founded in 2021 by former staffers of OpenAI, who left that startup over differences regarding OpenAI’s partnership with Microsoft—including concerns that OpenAI was abandoning a mission of making safer, more ethical AI and becoming too preoccupied with commercial concerns. Dario Amodei, CEO of Anthropic, was previously OpenAI’s vice president of research; his sister Daniela Amodei, Anthropic’s president, was VP of safety and policy.

Anthropic is also among a crop of AI startups that have staked out a claim to putting ethics first. It operates as a public benefit corporation, a status that requires the company to weigh its social impact alongside its business objectives—and to prove its seriousness by regularly publishing metrics on that impact. (It’s the same corporate structure used by Patagonia, Ben & Jerry’s, and Etsy.)

The founders of Anthropic are also active in effective altruism, or EA, a philosophical and social movement that focuses on using logical analysis to pinpoint the most potent avenues for aiding those in need. Its roots in EA helped Anthropic raise $500 million from an investor whose ethics credentials have since been revoked: FTX founder Sam Bankman-Fried, who was convicted of fraud in early November.

Some of Anthropic’s efforts to distinguish itself on ethics grounds have attracted admiring interest. Jim Hare, a distinguished VP analyst at Gartner, highlights the startup’s emphasis on “constitutional AI,” which integrates ethical principles into an AI model’s training process in part by soliciting input from the public and incorporating ideas from sources like the United Nations Universal Declaration of Human Rights.

Others see limits to Anthropic’s ambitions. Gary Marcus, an emeritus professor of cognitive science at New York University and an expert on the constraints of the “deep learning” that underlies AI, notes that Claude is “still very heavily dependent on large language models.” He adds, “We know that large language models are fundamentally opaque, that they hallucinate a lot, that they’re unreliable, and I think that’s a really poor substrate for ethical AI.”

It remains to be seen how Anthropic’s new partnerships will affect its efforts to position itself as an ethical AI player. (The company declined to comment for this story.) What will happen if the business needs of AWS or Google and their clients diverge from Anthropic’s best practices, or trigger apprehensions around bias or user privacy? Given how quickly the field is evolving, we may not have to wait long for answers.

A version of this article appears in the December 2023/January 2024 issue of Fortune with the headline, “Cloud giants are making it rain for AI.”