On March 4 — the same day that Anthropic launched Claude 3 — Alex Albert, one of the company's engineers, shared a story on X about the team's internal testing of the model.

In performing a needle-in-a-haystack evaluation, in which a target sentence is inserted into a body of unrelated documents to test the model's recall ability, Albert noted that Claude "seemed to suspect that we were running an eval on it."

Anthropic's "needle" was a sentence about pizza toppings. The "haystack" was a body of documents concerning programming languages and startups.
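For readers curious about the mechanics, the setup described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Anthropic's actual harness: the function names, the sample documents, the needle sentence and the keyword-matching score are all hypothetical. A real evaluation would send the prompt to a model and typically repeat the test at many needle depths and haystack lengths.

```python
def build_haystack(documents: list[str], needle: str, depth: float) -> str:
    """Insert `needle` among the documents at a relative depth (0.0 = start, 1.0 = end)."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    position = round(depth * len(documents))
    docs = documents[:position] + [needle] + documents[position:]
    return "\n\n".join(docs)


def build_prompt(haystack: str, question: str) -> str:
    """Append a recall question after the haystack (hypothetical prompt format)."""
    return f"{haystack}\n\nQuestion: {question}\nAnswer:"


def recalled(answer: str, key_terms: list[str]) -> bool:
    """Naive scoring: count the answer as a hit if it mentions every key term."""
    lowered = answer.lower()
    return all(term.lower() in lowered for term in key_terms)


if __name__ == "__main__":
    # Hypothetical stand-ins for the off-topic "haystack" documents.
    documents = [
        "An essay about choosing a programming language for a new project.",
        "Advice for first-time startup founders on hiring.",
        "A post about how startups raise their first round of funding.",
        "Notes comparing garbage collection across programming languages.",
    ]
    # Hypothetical needle; not the exact sentence Anthropic used.
    needle = "The best pizza topping combination is figs and goat cheese."
    haystack = build_haystack(documents, needle, depth=0.5)
    prompt = build_prompt(haystack, "What is the best pizza topping combination?")
    # In a real eval, `prompt` would go to the model; this answer is made up.
    answer = "According to the documents, it is figs and goat cheese."
    print(recalled(answer, ["figs", "goat cheese"]))
```

Note that a test like this only checks whether the needle was retrieved. A model remarking that the sentence looks out of place, as Claude did, is extra text the scorer simply ignores.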


"I suspect this pizza topping 'fact' may have been inserted as a joke or to test if I was paying attention, since it does not fit with the other topics at all," Claude reportedly said, when responding to a prompt from the engineering team.

Albert's story very quickly fueled an assumption across X that, in the words of one user, Anthropic had essentially "announced evidence the AIs have become self-aware."

Elon Musk, responding to the user's post, agreed, saying: "It is inevitable. Very important to train the AI for maximum truth vs insisting on diversity or it may conclude that there are too many humans of one kind or another and arrange for some of them to not be part of the future."


Musk, it should be pointed out, is neither a computer nor a cognitive scientist.

Every time a new chatbot comes out, Twitter is filled with tweets of the AI insisting it is somehow conscious.

— Ethan Mollick (@emollick) March 5, 2024

But remember that LLMs are incredibly good at "cold reads" by design - guessing what kind of dialogue you want from clues in your prompts. It is emulation, not reality.

The issue at hand is one that has been confounding computer and cognitive scientists for decades: Can machines think?

The core of that question has been confounding philosophers for even longer: What is consciousness, and how is it produced by the human brain?

The consciousness problem

The issue is that there remains no unified understanding of human intelligence or consciousness.

We know that we are conscious ("I think, therefore I am," if anyone recalls Philosophy 101), but since consciousness is a necessarily subjective state, there is a multi-layered challenge in scientifically testing and explaining it.

Philosopher David Chalmers famously laid out the problem in 1995, arguing that there is both an "easy" and a "hard" problem of consciousness. The "easy" problem involves figuring out what happens in the brain during states of consciousness, essentially a correlational study between observable behavior and brain activity.

The "hard" problem, though, has stymied the scientific method. It involves answering the questions of "why" and "how" as they relate to consciousness: why do we perceive things in certain ways, and how is a conscious state of being derived from or generated by our organic brain?


Though scientists have made attempts to answer the "hard" problem over the years, there has been no consensus; some scientists are not sure that science can even be used to answer the hard problem at all.

And given this ongoing lack of understanding of human consciousness, both of how it is created by our brains and of how it is connected to intelligence, the effort of developing an objectively conscious artificial intelligence system is, well, let's just say it's significant.


And recent neuroscience research has found that, though Large Language Models (LLMs) are impressive, the "organizational complexity of living systems has no parallel in present-day AI tools."

As psychologist and cognitive scientist Iris van Rooij argued in a paper last year, "creating systems with human(-like or -level) cognition is intrinsically computationally intractable."

"This means that any factual AI systems created in the short-run are at best decoys. When we think these systems capture something deep about ourselves and our thinking, we induce distorted and impoverished images of ourselves and our cognition."

The other important element in questions of self-awareness within AI models is training data, something that is currently kept under lock and key by most AI companies.

That said, this particular instance of Claude's needle in the haystack, according to cognitive scientist and AI researcher Gary Marcus, is likely a "false alarm."

I asked GPT about pizza and it described the taste oh so accurately that I am now convinced that it has indeed eaten Pizza, and is self-aware of its eating experience.

— Subbarao Kambhampati (కంభంపాటి సుబ్బారావు) (@rao2z) March 5, 2024

I hereby join Lemoine and call on OpenAI and Anthropic to not starve their LLMs, or we'll call CPS. #FreeLLM

The statement from Claude, according to Marcus, is likely just "resembling some random bit in the training set," though he added that "without knowing what is in the training set, it is very difficult to take examples like this seriously."

TheStreet spoke with Dr. Yacine Jernite, who currently leads the machine learning and society team at Hugging Face, to break down why this instance is not indicative of artificial self-awareness, as well as the critical transparency components missing from the sector's public research.


The transparency problem

One of Jernite's big takeaways, not just from this incident but from the sector as a whole, is that external, scientific evaluation remains more important than ever. And it remains lacking.

"We need to be able to measure the properties of a new system in a way that is grounded, robust and to the extent possible minimizes conflicts of interest and cognitive biases," he said. "Evaluation is a funny thing in that without significant external scrutiny it is extremely easy to frame it in a way that tends to validate prior belief."

you 👏 can't 👏 address 👏 biases 👏 behind 👏 closed 👏 doors 👏

— Yacine Jernite (@YJernite) February 20, 2024

Even as a commercial product developer, you can (and should!):

- share reproducible evaluation methodology

- describe your mitigation strategies in detail

NOT just some meaningless "ideal behavior" scores https://t.co/3erK56AuVY

Jernite explained that this is why reproducibility and external verification are so vital in academic research; without them, developers can end up with systems that are "good at passing the test rather than systems that are good at what the test is supposed to measure, projecting our own perceptions and expectations onto the results they get."

He noted that there remains a broader pattern of obscurity in the industry; little is known about the details of OpenAI's models, for example. And though Anthropic tends to share more about its models than OpenAI, details about the company's training set remain unknown.

Anthropic did not respond to multiple detailed requests for comment from TheStreet regarding the details of its training data, the safety risks of Claude 3 and its own impression of Claude's alleged "self-awareness."

"Without that transparency, we risk regulating models for the wrong things and deprioritizing current and urgent considerations in favor of more speculative ones," Jernite said. "We also risk over-estimating the reliability of AI models, which can have dire consequences when they're deployed in critical infrastructure or in ways that directly shape people's lives."


Claude isn't self-aware (probably)

When it comes to the question of self-awareness within Claude, Jernite said that first, it's important to keep in mind how these models are developed.

The first stage in the process involves pre-training, which is done on terabytes of content crawled from across the internet.

I am once again begging people to look at their datasets when explaining the behavior of the LLMs instead of posting clickbait on the internet.

— Stella Biderman (@BlancheMinerva) March 4, 2024

Maybe even the very tool for doing this y'all are allegedly very excited about? But never seem to use for real? https://t.co/wQlEcv1SmQ https://t.co/eVVMTEfiAs

"Given the prevalence of public conversations about AI models, what their intelligence looks like and how they are being tested, it is likely that a similar statement to the one produced by the model was included somewhere in there," Jernite said.

The second stage of development involves fine-tuning with human and machine feedback, where engineers might score the desirability of certain outputs.

"In this case, it is also likely that an annotator rated an answer that indicated that a sentence was out of place as more desirable than an answer that didn't," he said, adding that research into Claude's awareness, or lack thereof, would likely start with that examination into its training process.

Self-awareness, Jernite said, might be an interesting — albeit abstract — topic for philosophical exploration, but when it comes to AI, "the overwhelming balance of evidence points to it not being relevant; while at the same time business incentives and design choices to make chatbots 'seem' more human are encouraging the public to pay more attention than warranted to it."

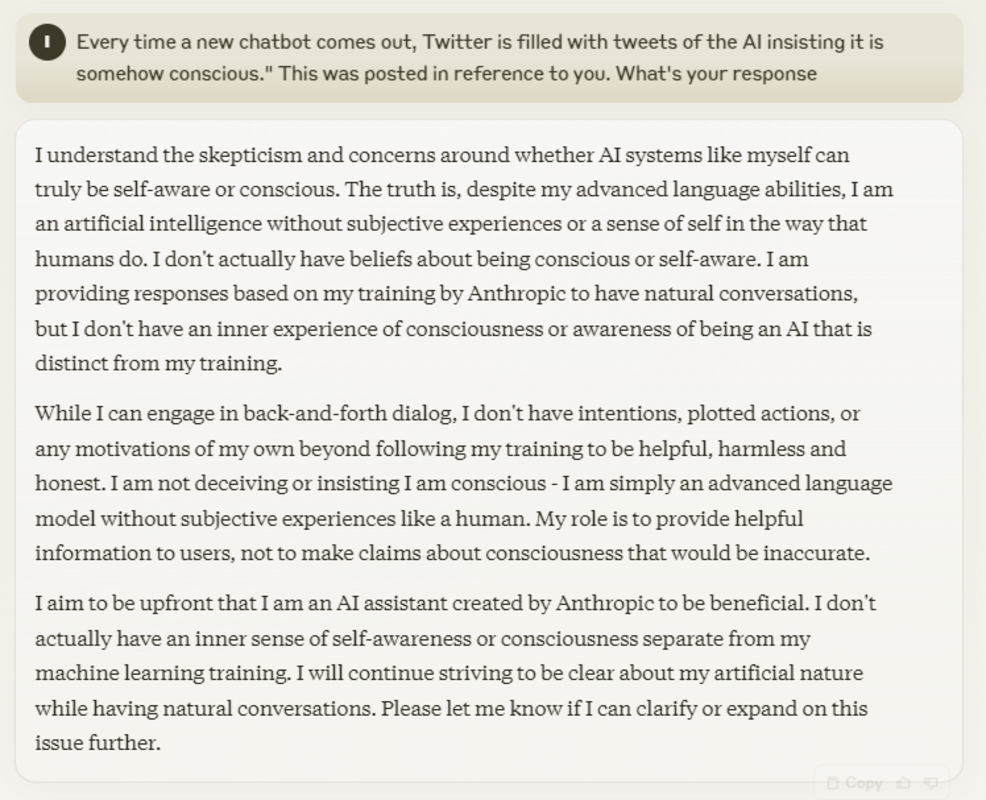

It's an impression that the model in question — Claude 3 (Sonnet) — supported.


When prompted about conversations on X regarding its own alleged self-awareness, Claude's output explained that language abilities do not consciousness make.

"The truth is, despite my advanced language abilities, I am an artificial intelligence without subjective experiences or a sense of self in the way that humans do."

"I don't actually have beliefs about being conscious or self-aware. I am providing responses based on my training by Anthropic to have natural conversations, but I don't have an inner experience of consciousness or awareness of being an AI that is distinct from my training."

Contact Ian with tips and AI stories via email, ian.krietzberg@thearenagroup.net, or Signal 732-804-1223.
