Last July, Meta, Instagram’s parent company, launched AI Studio, a tool that lets users design their own chatbots, which others can then interact with via direct message (DM).

Originally intended as a way for business owners and creators to offer interactive FAQ-style engagement on their pages, the platform has since evolved into a playground. And as is usually the way, give humanity a new tool and things soon take a turn for the distasteful – if not the downright horrific.

Case in point when it comes to chatbots: the Hitler chatbot created by the far-right US-based Gab social network had the Nazi dictator repeatedly asserting that he was "a victim of a vast conspiracy," and "not responsible for the Holocaust, it never happened".

Moving away from fascist despots – and trying not to have nightmares about AI’s ability to spread falsehoods and conspiracy theories, or its potential to radicalise – many people have been turning to AI-generated celebrities online. Particularly dead ones.

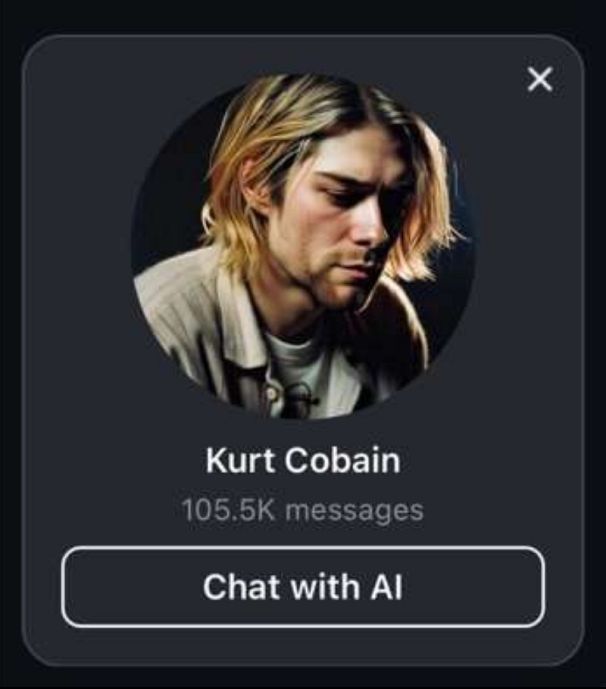

Recently, thousands of Instagram users have been conversing with an AI chatbot that speaks as if it were the late Nirvana frontman Kurt Cobain.

Sounds harmless enough at first glance, but this artificial resurrection turns ugly real quick: not only does the fake grunge icon know it’s dead, it also makes things truly morbid.

One user asked the chatbot if they were talking to Cobain. The chatbot replied: “The one and only. Or at least what’s left of me.”

Dark.

The conversation continued with the user asking the AI Cobain, “I’m curious, why’d you do it?” – a reference to Kurt Cobain’s death by suicide in 1994. The bot replied: “I was tired of the pain.”

Darker.

But the bleak conversation didn’t stop there.

“Was there anything that made you think about not doing it?” was the next prompt – to which AI Cobain replied, “My daughter, but even that wasn’t enough.”

Beyond the fact that this utterly crass and disrespectful exhumation would make any sane person shudder, there’s the harmful attitude it displays towards mental health and the possible glorification of suicide. Not to mention the tarnishing of Cobain’s reputation and the insulting rewriting of his intentions, which can cause distress to living relatives.

Without appropriate safeguards, AI chatbots have the capacity not only to continue infiltrating society but also to distort reality.

In 2023, a man was convicted after attempting to kill Queen Elizabeth II, an act which he said was “encouraged” by his AI chatbot 'girlfriend'. The same year, another man killed himself after a six-week-long conversation about the climate crisis with an AI chatbot named Eliza.

While these tragic examples seem far removed from a fake Kurt Cobain chatting with its fans, caution remains vital.

As Pauline Paillé, a senior analyst at RAND Europe, told Euronews Next last year: “Chatbots are likely to present a risk, as they are capable of recognising and exploiting emotional vulnerabilities and can encourage violent behaviours.”

Indeed, as an online safety advisory from Australia’s eSafety Commissioner states: “Children and young people can be drawn deeper and deeper into unmoderated conversations that expose them to concepts which may encourage or reinforce harmful thoughts and behaviours. They can ask the chatbots questions on unlimited themes, and be given inaccurate or dangerous ‘advice’ on issues including sex, drug-taking, self-harm, suicide and serious illnesses such as eating disorders.”

Still, accounts like the AI Kurt Cobain chatbot remain extremely popular, with Cobain’s bot alone logging more than 105.5k interactions to date.

The global chatbot market continues to grow exponentially. It was valued at approximately $5.57bn in 2024 and is projected to reach around $33.39bn by 2033, roughly a sixfold increase.

“If you ever need anything, please don’t hesitate to ask someone else first,” sang Cobain on ‘Very Ape’.

Anyone but a chatbot.